Self-Supervised Equivariant Regularization Reconciles Multiple Instance Learning: Joint Referable Diabetic Retinopathy Classification and Lesion Segmentation

Paper and Code

Oct 12, 2022

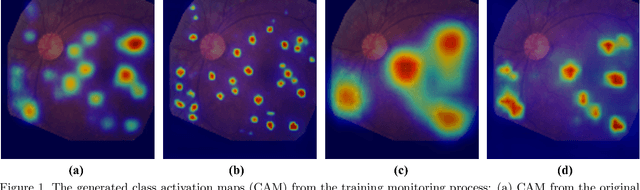

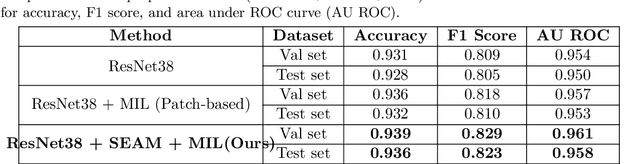

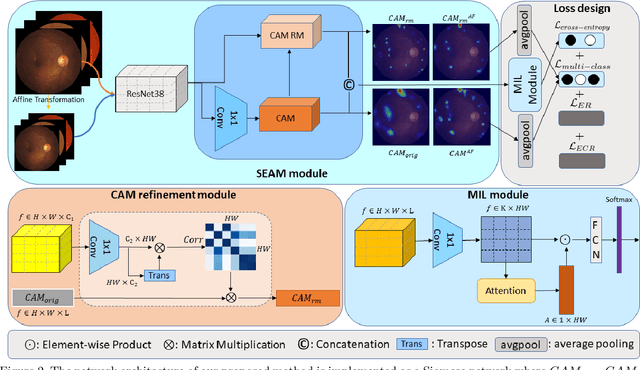

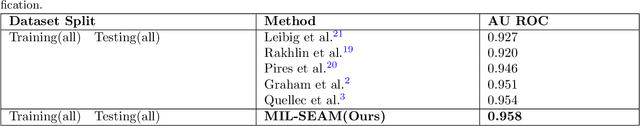

Lesion appearance is a crucial clue for medical providers to distinguish referable diabetic retinopathy (rDR) from non-referable DR. Most existing large-scale DR datasets contain only image-level labels rather than pixel-based annotations. This motivates us to develop algorithms to classify rDR and segment lesions via image-level labels. This paper leverages self-supervised equivariant learning and attention-based multi-instance learning (MIL) to tackle this problem. MIL is an effective strategy to differentiate positive and negative instances, helping us discard background regions (negative instances) while localizing lesion regions (positive ones). However, MIL only provides coarse lesion localization and cannot distinguish lesions located across adjacent patches. Conversely, a self-supervised equivariant attention mechanism (SEAM) generates a segmentation-level class activation map (CAM) that can guide patch extraction of lesions more accurately. Our work aims at integrating both methods to improve rDR classification accuracy. We conduct extensive validation experiments on the Eyepacs dataset, achieving an area under the receiver operating characteristic curve (AU ROC) of 0.958, outperforming current state-of-the-art algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge