Nadia Bianchi-Berthouze

Pain level and pain-related behaviour classification using GRU-based sparsely-connected RNNs

Dec 20, 2022

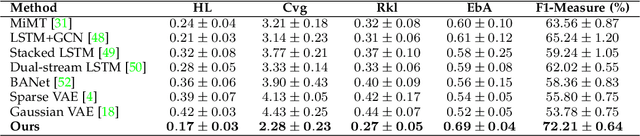

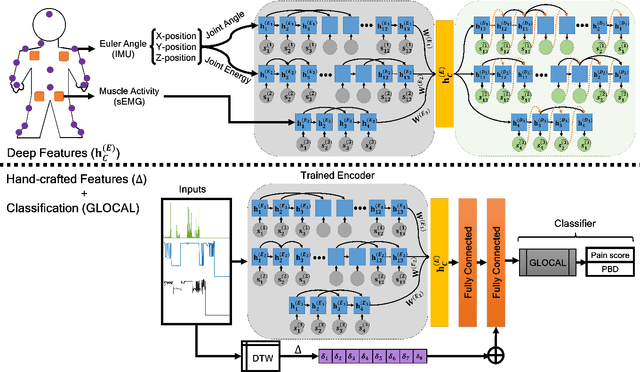

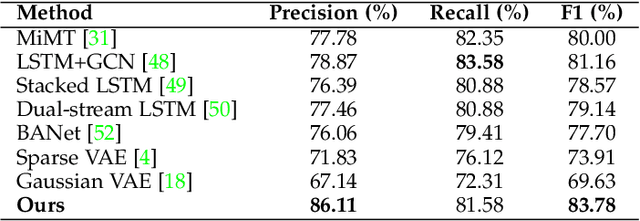

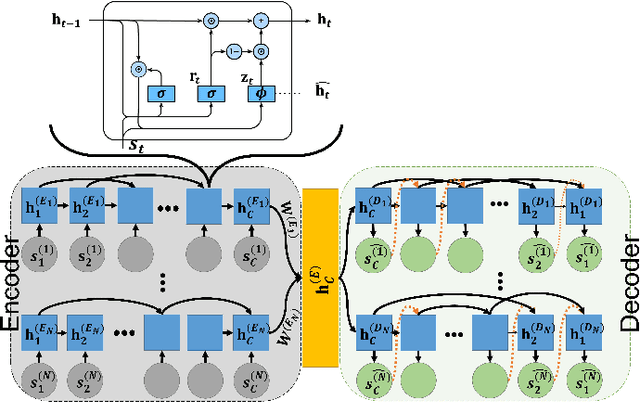

Abstract: There is a growing body of studies on applying deep learning to biometrics analysis. Certain circumstances, however, could impair the objective measures and accuracy of the proposed biometric data analysis methods. For instance, people with chronic pain (CP) unconsciously adapt specific body movements to protect themselves from injury or additional pain. Because there is no dedicated benchmark database to analyse this correlation, we considered one of the specific circumstances that potentially influence a person's biometrics during daily activities in this study and classified pain level and pain-related behaviour in the EmoPain database. To achieve this, we proposed a sparsely-connected recurrent neural networks (s-RNNs) ensemble with the gated recurrent unit (GRU) that incorporates multiple autoencoders using a shared training framework. This architecture is fed by multidimensional data collected from inertial measurement unit (IMU) and surface electromyography (sEMG) sensors. Furthermore, to compensate for variations in the temporal dimension that may not be perfectly represented in the latent space of s-RNNs, we fused hand-crafted features derived from information-theoretic approaches with represented features in the shared hidden state. We conducted several experiments which indicate that the proposed method outperforms the state-of-the-art approaches in classifying both pain level and pain-related behaviour.

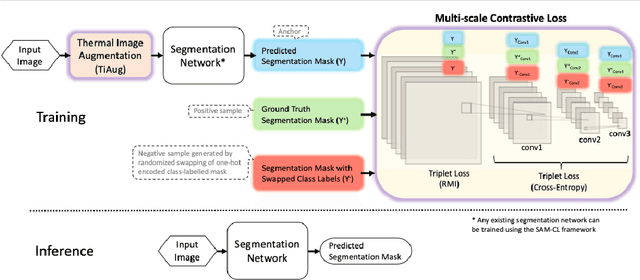

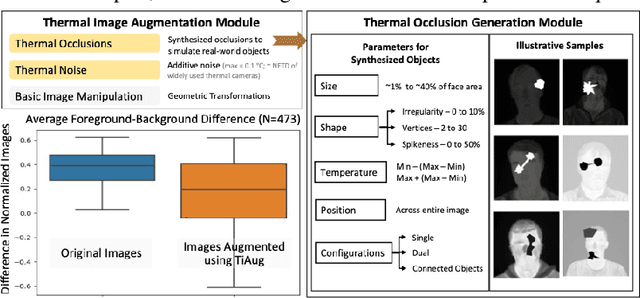

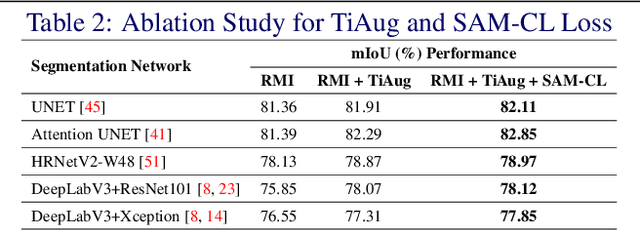

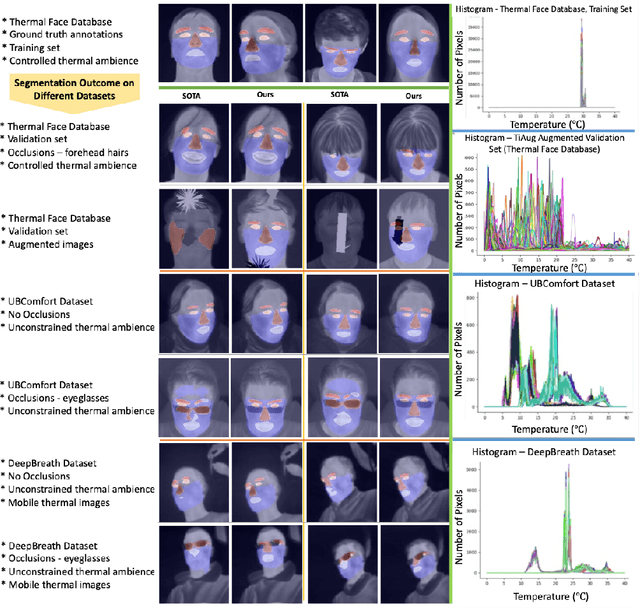

Self-adversarial Multi-scale Contrastive Learning for Semantic Segmentation of Thermal Facial Images

Oct 07, 2022

Abstract: Segmentation of thermal facial images is a challenging task. This is because facial features often lack salience due to high-dynamic thermal range scenes and occlusion issues. Limited availability of datasets from unconstrained settings further limits the use of the state-of-the-art segmentation networks, loss functions and learning strategies which have been built and validated for RGB images. To address the challenge, we propose the Self-Adversarial Multi-scale Contrastive Learning (SAM-CL) framework as a new training strategy for thermal image segmentation. The SAM-CL framework consists of a SAM-CL loss function and a thermal image augmentation (TiAug) module as a domain-specific augmentation technique. We use the Thermal-Face-Database to demonstrate the effectiveness of our approach. Experiments conducted on existing segmentation networks (UNET, Attention-UNET, DeepLabV3 and HRNetv2) show consistent performance gains from the SAM-CL framework. Furthermore, we present a qualitative analysis with the UBComfort and DeepBreath datasets to discuss how our proposed methods perform in handling unconstrained situations.

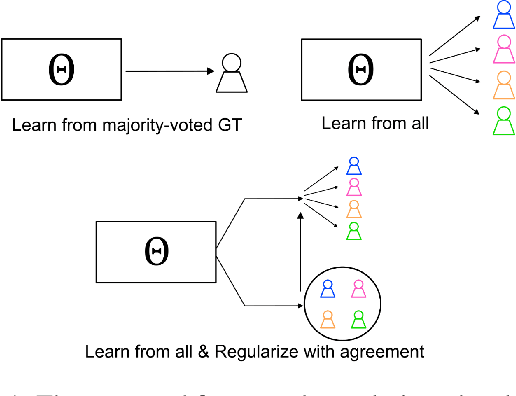

AgreementLearning: An End-to-End Framework for Learning with Multiple Annotators without Groundtruth

Sep 08, 2021

Abstract: The annotation of domain experts is important for some medical applications where the objective groundtruth is ambiguous to define, e.g., the rehabilitation for some chronic diseases, and the prescreening of some musculoskeletal abnormalities without further medical examinations. However, improper use of the annotations may hinder the development of reliable models. On the one hand, forcing the use of a single groundtruth generated from multiple annotations is less informative for the modeling. On the other hand, feeding the model with all the annotations without proper regularization is noisy given existing disagreements. To address these issues, we propose a novel agreement learning framework to tackle the challenge of learning from multiple annotators without objective groundtruth. The framework has two streams, with one stream fitting the multiple annotators and the other stream learning agreement information between the annotators. In particular, the agreement learning stream provides regularization information to the classifier stream, tuning its decisions to be better in line with the agreement between the annotators. The proposed method can be easily plugged into existing backbones developed with majority-voted groundtruth or multiple annotations. Experiments on two medical datasets demonstrate improved agreement levels with annotators.

Leveraging Activity Recognition to Enable Protective Behavior Detection in Continuous Data

Nov 16, 2020

Abstract: Protective behavior exhibited by people with chronic pain (CP) during physical activities is the key to understanding their physical and emotional states. Existing automatic protective behavior detection (PBD) methods depend on pre-segmentation of activity instances as they expect situations where activity types are predefined. However, during everyday management, people pass from one activity to another, and support should be delivered continuously and personalized to the activity type and presence of protective behavior. Hence, to facilitate ubiquitous CP management, it becomes critical to enable accurate PBD over continuous data. In this paper, we propose to integrate automatic human activity recognition (HAR) with PBD via a novel hierarchical HAR-PBD architecture comprising GC-LSTM networks, and alleviate the class imbalances therein using a CFCC loss function. Through in-depth evaluation of the approach using a CP patients' dataset, we show that leveraging HAR, GC-LSTM networks and the CFCC loss function leads to a clear increase in PBD performance against the state-of-the-art (macro F1 score of 0.81 vs. 0.66 and PR-AUC of 0.60 vs. 0.44). We conclude by discussing possible use cases of the HAR-PBD architecture in the context of CP management and other situations. We also discuss the current limitations and ways forward.
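The macro F1 score quoted in this abstract averages the per-class F1 values with equal weight, so a rare class (such as protective behavior in imbalanced data) counts as much as a frequent one. The following is a minimal sketch of that metric only; the function name `macro_f1` and the toy labels are illustrative assumptions and are not taken from the authors' evaluation code.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (illustrative helper)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical labels: 0 = no protective behavior, 1 = protective behavior
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]
print(macro_f1(y_true, y_pred))  # per-class F1 is 0.75 and 0.5, so the mean is 0.625
```

On this toy example the accuracy is 4/6, while the macro F1 is lower because the minority class is predicted poorly.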

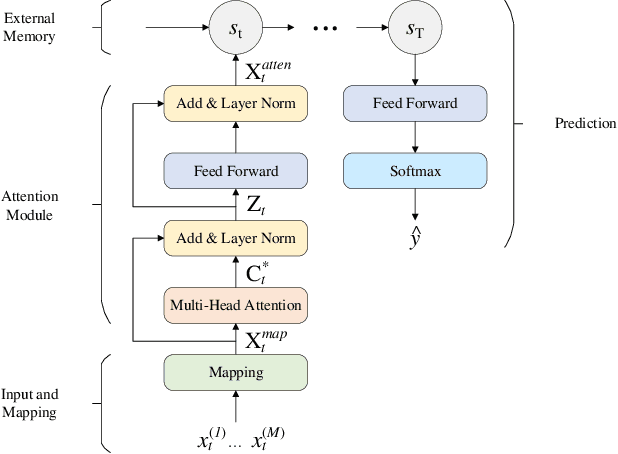

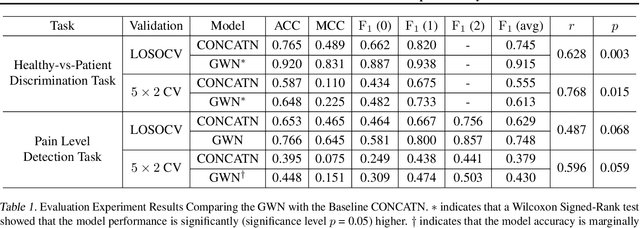

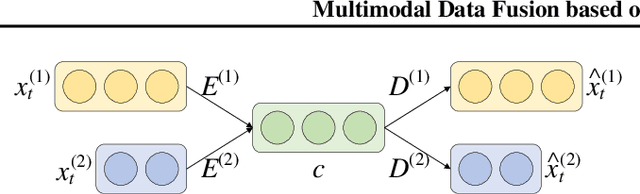

Multimodal Data Fusion based on the Global Workspace Theory

Jan 26, 2020

Abstract: We propose a novel neural network architecture, named the Global Workspace Network (GWN), that addresses the challenge of dynamic uncertainties in multimodal data fusion. The GWN is inspired by the well-established Global Workspace Theory from cognitive science. We implement it as a model of attention, between multiple modalities, that evolves through time. The GWN achieved an F1 score of 0.92, averaged over two classes, for the discrimination between patient and healthy participants, based on the multimodal EmoPain dataset captured from people with chronic pain and healthy people performing different types of exercise movements in unconstrained settings. In this task, the GWN significantly outperformed a vanilla architecture. It additionally outperformed the vanilla model in further classification of three pain levels for a patient (average F1 score = 0.75) based on the EmoPain dataset. We further provide extensive analysis of the behaviour of the GWN and its ability to deal with uncertainty in multimodal data.

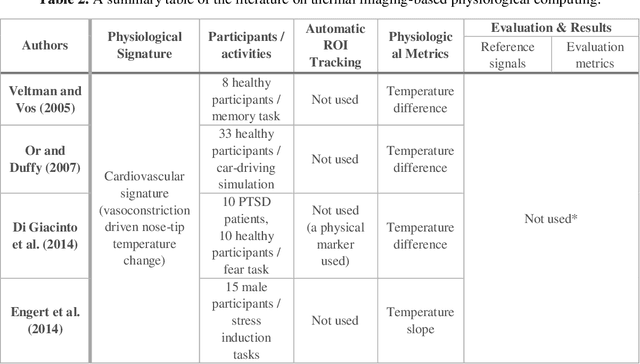

Physiological and Affective Computing through Thermal Imaging: A Survey

Aug 27, 2019

Abstract: Thermal imaging-based physiological and affective computing is an emerging research area enabling technologies to monitor our bodily functions and understand psychological and affective needs in a contactless manner. However, until recently, research has mainly been carried out in very controlled lab settings. As small and even low-cost versions of thermal video cameras have started to appear on the market, mobile thermal imaging is opening its door to ubiquitous and real-world applications. Here we review the literature on the use of thermal imaging to track changes in physiological cues relevant to affective computing and the technological requirements set so far. In doing so, we aim to establish computational and methodological pipelines from thermal images of the human skin to affective states and outline the research opportunities and challenges to be tackled to make ubiquitous real-life thermal imaging-based affect monitoring a possibility.

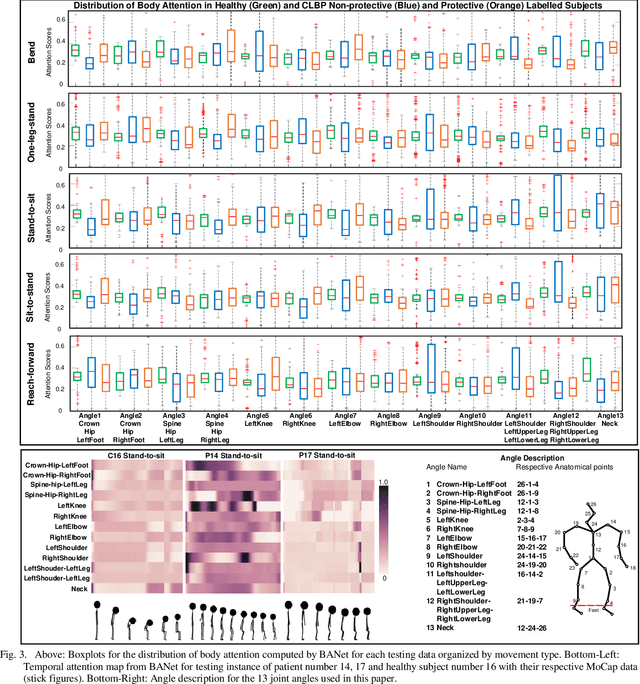

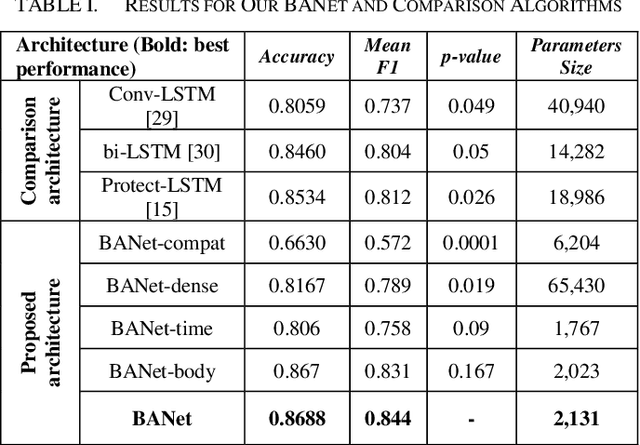

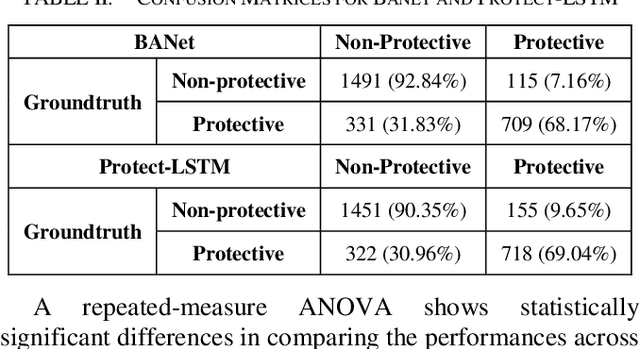

Learning Bodily and Temporal Attention in Protective Movement Behavior Detection

Apr 24, 2019

Abstract: For people with chronic pain (CP), the assessment of protective behavior during physical functioning is essential to understand their subjective pain-related experiences (e.g., fear and anxiety toward pain and injury) and how they deal with such experiences (avoidance or reliance on specific body joints), with the ultimate goal of guiding intervention. Advances in deep learning (DL) can enable the development of such intervention. Using the EmoPain MoCap dataset, we investigate how attention-based DL architectures can be used to improve the detection of protective behavior by capturing the most informative biomechanical cues characterizing specific movements and the strategies used to execute them to cope with pain-related experience. We propose an end-to-end neural network architecture based on an attention mechanism, named BodyAttentionNet (BANet). BANet is designed to learn temporal and body-joint regions that are informative to the detection of protective behavior. The approach can consider the variety of ways people execute one movement (including healthy people) and it is independent of the type of movement analyzed. We also explore variants of this architecture to understand the contribution of both temporal and bodily attention mechanisms. Through extensive experiments with other state-of-the-art machine learning techniques used with motion capture data, we show a statistically significant improvement achieved by combining the two attention mechanisms. In addition, the BANet architecture requires a much lower number of parameters than the state-of-the-art ones for comparable if not higher performance.

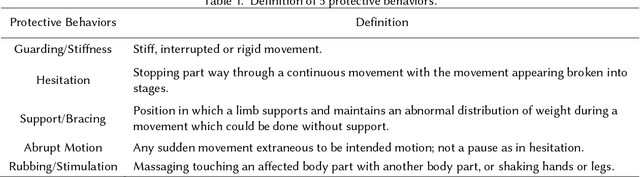

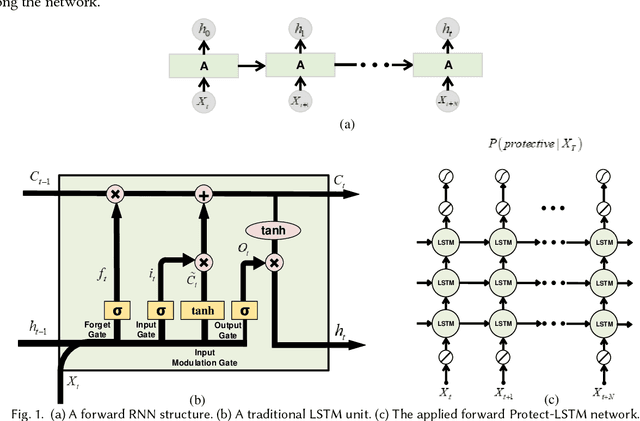

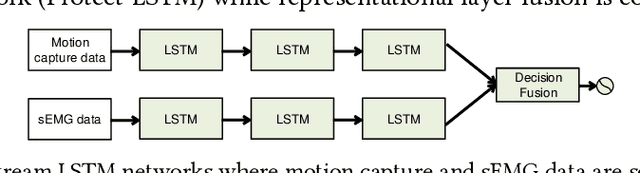

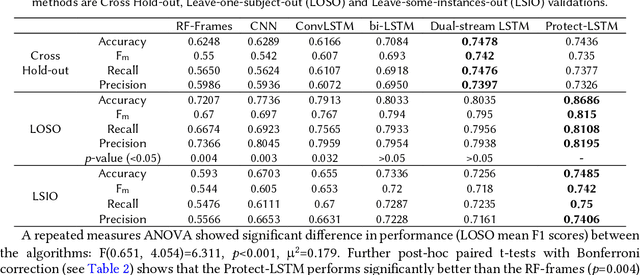

Automatic Detection of Protective Behavior in Chronic Pain Physical Rehabilitation: A Recurrent Neural Network Approach

Feb 24, 2019

Abstract: In chronic pain physical rehabilitation, physiotherapists adapt movement to the current performance of patients, especially based on the expression of protective behavior, gradually exposing them to feared but harmless and essential everyday movements. As physical rehabilitation moves outside the clinic, physical rehabilitation technology needs to automatically detect such behaviors so as to provide similar personalized support. In this paper, we investigate the use of a Long Short-Term Memory (LSTM) network, which we call Protect-LSTM, to detect events of protective behavior, based on motion capture and electromyography data of healthy people and people with chronic low back pain engaged in five everyday movements. Unlike previous work on the same dataset, we aim to continuously detect protective behavior within a movement rather than estimate the overall presence of such behavior. The Protect-LSTM reaches a best average F1 score of 0.815 with leave-one-subject-out (LOSO) validation, using low-level features, better than other algorithms. Performance increases for some movements when modelled separately (mean F1 scores: bending=0.77, standing on one leg=0.81, sit-to-stand=0.72, stand-to-sit=0.83, reaching forward=0.67). These results reach an excellent level of agreement with the average ratings of physiotherapists. As such, the results show clear potential for in-home technology-supported affect-based personalized physical rehabilitation.
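Leave-one-subject-out (LOSO) validation, as named in this abstract, holds out all samples from one participant per fold so that the model is always tested on a person it has not seen during training. The following is a minimal sketch of the splitting step only, under the assumption that each sample carries a subject identifier; the function name `leave_one_subject_out` and the subject IDs are hypothetical and not taken from the paper.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        # Every sample from the held-out subject goes to the test fold
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        # All remaining samples form the training fold
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        yield held_out, train, test


# Hypothetical subject labels, one per recorded sample
subject_ids = ["p1", "p1", "p2", "p3", "p3"]
for held_out, train, test in leave_one_subject_out(subject_ids):
    print(held_out, train, test)
```

A per-fold score (for example F1) would then be computed on each test fold and averaged across subjects.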

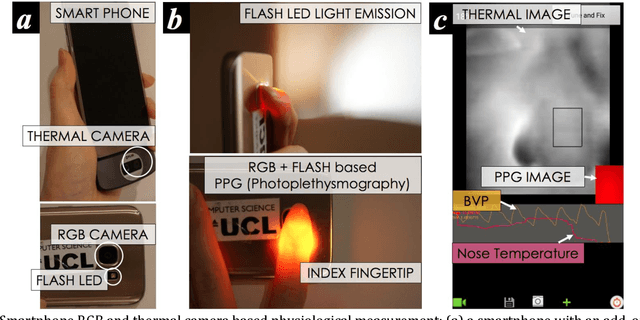

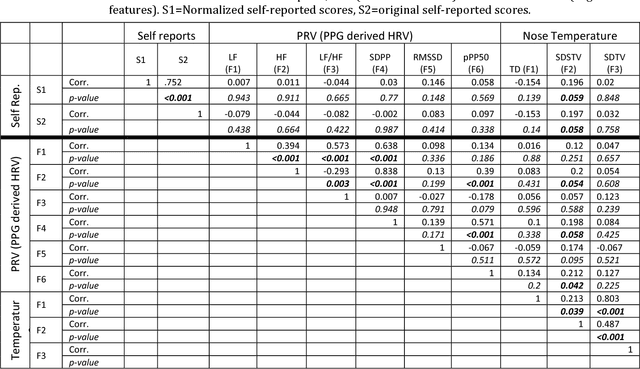

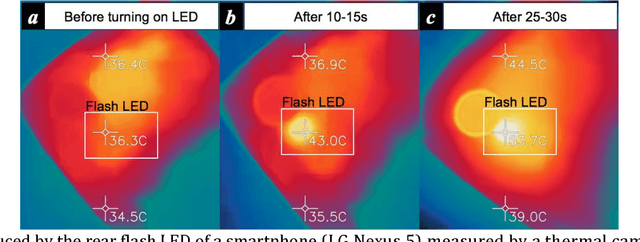

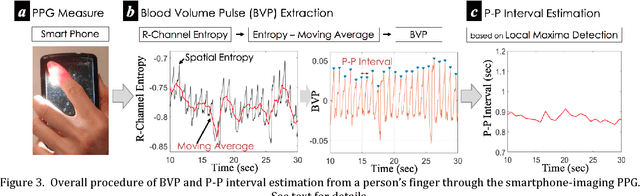

Instant Automated Inference of Perceived Mental Stress through Smartphone PPG and Thermal Imaging

Dec 21, 2018

Abstract: Background: A smartphone is a promising tool for daily cardiovascular measurement and mental stress monitoring. Smartphone camera-based PhotoPlethysmoGraphy (PPG) and a low-cost thermal camera can be used to create cheap, convenient and mobile monitoring systems. However, to ensure reliable monitoring results, a person has to remain still for several minutes while a measurement is being taken. This is very cumbersome and makes its use in real-life mobile situations quite impractical. Objective: We propose a system which combines PPG and thermography with the aim of improving cardiovascular signal quality and capturing stress responses quickly. Methods: Using a smartphone camera with a low-cost thermal camera added on, we built a novel system which continuously and reliably measures two different types of cardiovascular events: i) blood volume pulse and ii) vasoconstriction/dilation-induced temperature changes of the nose tip. 17 healthy participants, involved in a series of stress-inducing mental workload tasks, measured their physiological responses to stressors over a short window of time (20 seconds) immediately after each task. Participants reported their level of perceived mental stress using a 10-cm Visual Analogue Scale (VAS). We used normalized K-means clustering to reduce interpersonal differences in the self-reported ratings. For the instant stress inference task, we built novel low-level feature sets representing variability of cardiovascular patterns. We then used the automatic feature learning capability of artificial Neural Networks (NN) to improve the mapping between the extracted set of features and the self-reported ratings. We compared our proposed method with existing hand-engineered features-based machine learning methods. Results, Conclusions: ... due to limited space here, we refer to our manuscript.

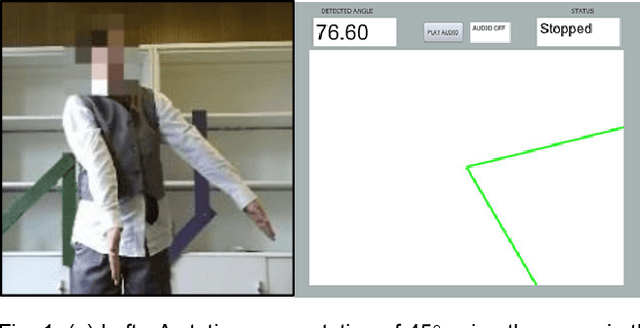

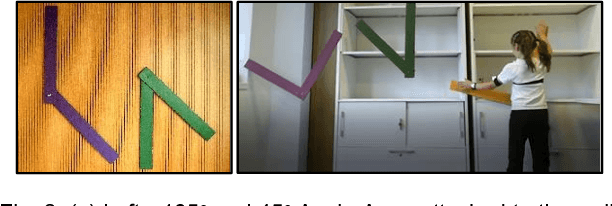

Automatic Detection of Reflective Thinking in Mathematical Problem Solving based on Unconstrained Bodily Exploration

Dec 18, 2018

Abstract: For technology (like serious games) that aims to deliver interactive learning, it is important to address relevant mental experiences such as reflective thinking during problem solving. To facilitate research in this direction, we present the weDraw-1 Movement Dataset of body movement sensor data and reflective thinking labels for 26 children solving mathematical problems in unconstrained settings where the body (full or parts) was required to explore these problems. Further, we provide qualitative analysis of behaviours that observers used in identifying reflective thinking moments in these sessions. The body movement cues from our compilation informed features that led to an average F1 score of 0.73 for automatic detection of reflective thinking based on Long Short-Term Memory neural networks. We further obtained an average F1 score of 0.79 for end-to-end detection of reflective thinking periods, i.e. based on raw sensor data. Finally, the algorithms resulted in an average F1 score of 0.64 for period subsegments as short as 4 seconds. Overall, our results show the possibility of detecting reflective thinking moments from body movement behaviours of a child exploring mathematical concepts bodily, such as within serious game play.
