Temitayo Olugbade

Pain level and pain-related behaviour classification using GRU-based sparsely-connected RNNs

Dec 20, 2022

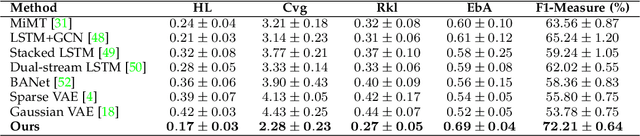

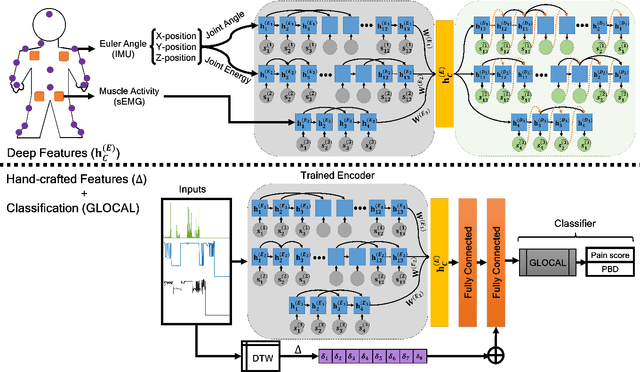

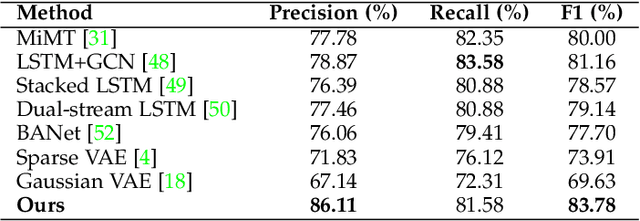

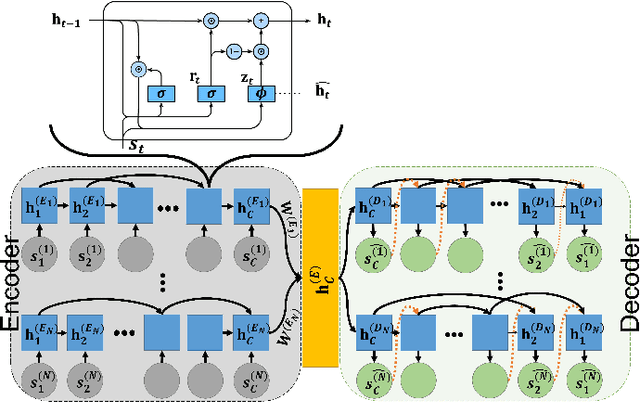

Abstract: There is a growing body of studies on applying deep learning to biometrics analysis. Certain circumstances, however, could impair the objective measures and accuracy of the proposed biometric data analysis methods. For instance, people with chronic pain (CP) unconsciously adapt specific body movements to protect themselves from injury or additional pain. Because there is no dedicated benchmark database for analysing this correlation, in this study we considered one specific circumstance that can influence a person's biometrics during daily activities, and classified pain level and pain-related behaviour in the EmoPain database. To achieve this, we proposed an ensemble of sparsely-connected recurrent neural networks (s-RNNs) with gated recurrent units (GRUs) that incorporates multiple autoencoders using a shared training framework. This architecture is fed multidimensional data collected from inertial measurement unit (IMU) and surface electromyography (sEMG) sensors. Furthermore, to compensate for variations in the temporal dimension that may not be perfectly represented in the latent space of the s-RNNs, we fused hand-crafted features derived from information-theoretic approaches with the features represented in the shared hidden state. We conducted several experiments, which indicate that the proposed method outperforms state-of-the-art approaches in classifying both pain level and pain-related behaviour.
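The fusion step described in the abstract can be illustrated with a minimal sketch. The abstract does not say which information-theoretic features were used, so the example assumes a simple one: the Shannon entropy of a binned signal window, computed per sensor channel and concatenated with a learned latent vector. The names `shannon_entropy`, `fuse`, and `n_bins` are hypothetical and are not taken from the paper.

```python
import math
from collections import Counter


def shannon_entropy(window, n_bins=8):
    """Shannon entropy (in bits) of a 1-D signal window after equal-width binning.

    Assumption: this stands in for the paper's unspecified
    information-theoretic features.
    """
    lo, hi = min(window), max(window)
    if hi == lo:
        return 0.0  # a constant window has zero entropy
    width = (hi - lo) / n_bins
    # Map each sample to a bin index; clamp the maximum value into the last bin.
    bins = [min(int((x - lo) / width), n_bins - 1) for x in window]
    counts = Counter(bins)
    n = len(window)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def fuse(latent, windows_by_channel):
    """Concatenate a learned latent vector with one entropy value per channel."""
    return list(latent) + [shannon_entropy(w) for w in windows_by_channel]
```

Here `latent` would be the hidden state produced by the recurrent encoder, and `windows_by_channel` would hold one window of raw IMU or sEMG samples per channel. The fused vector is what a downstream classifier would consume.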

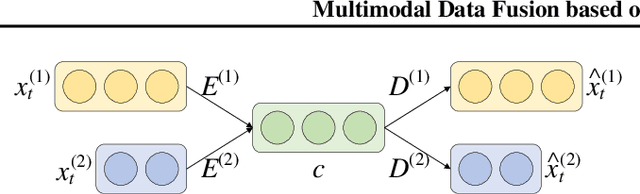

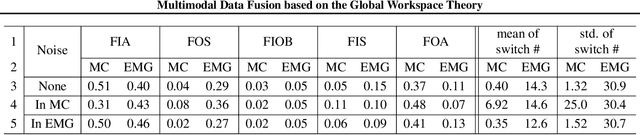

Multimodal Data Fusion based on the Global Workspace Theory

Jan 26, 2020

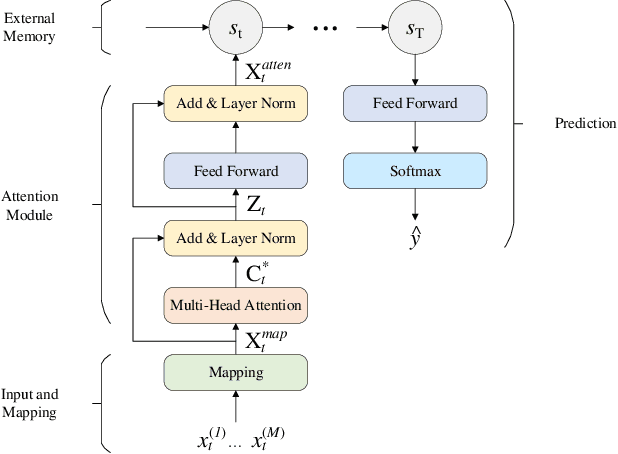

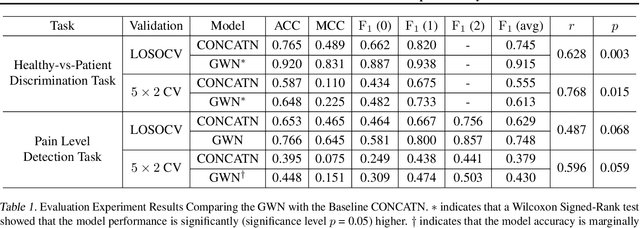

Abstract: We propose a novel neural network architecture, named the Global Workspace Network (GWN), that addresses the challenge of dynamic uncertainties in multimodal data fusion. The GWN is inspired by the well-established Global Workspace Theory from cognitive science. We implement it as a model of attention between multiple modalities that evolves through time. The GWN achieved an F1 score of 0.92, averaged over two classes, for discriminating between patients and healthy participants, based on the multimodal EmoPain dataset captured from people with chronic pain and healthy people performing different types of exercise movements in unconstrained settings. In this task, the GWN significantly outperformed a vanilla architecture. It also outperformed the vanilla model in the further classification of three pain levels for a patient (average F1 score = 0.75) on the same dataset. We further provide extensive analysis of the behaviour of the GWN and its ability to deal with uncertainty in multimodal data.
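For readers unfamiliar with the metric, the sketch below shows how an F1 score averaged over classes can be computed. It assumes the unweighted (macro) average of per-class F1 scores, which the abstract does not state explicitly. The function names are illustrative, and the labels in the usage note are made-up toy data, not results from the paper.

```python
def f1_for_class(y_true, y_pred, label):
    """F1 score for one class, treating `label` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    denom = 2 * tp + fp + fn
    # By convention, return 0.0 when the class never appears in either list.
    return 2 * tp / denom if denom else 0.0


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores over all observed labels."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_class(y_true, y_pred, lab) for lab in labels) / len(labels)
```

With toy labels `y_true = [1, 1, 0, 0]` and `y_pred = [1, 0, 0, 0]`, the per-class scores are 2/3 for class 1 and 4/5 for class 0, so `macro_f1` returns their mean, 11/15.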

EMOPAIN Challenge 2020: Multimodal Pain Evaluation from Facial and Bodily Expressions

Jan 25, 2020

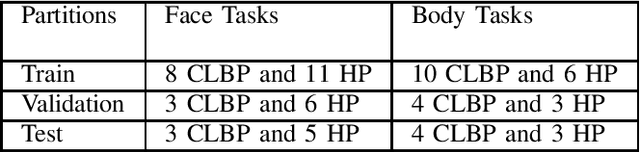

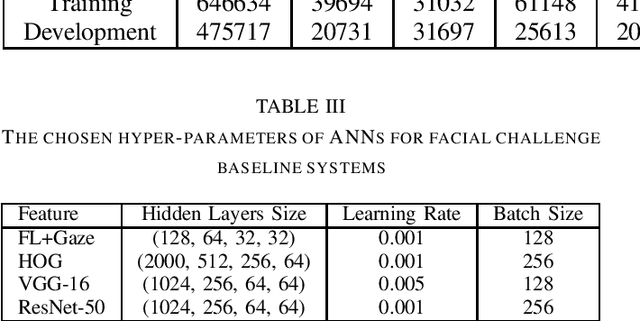

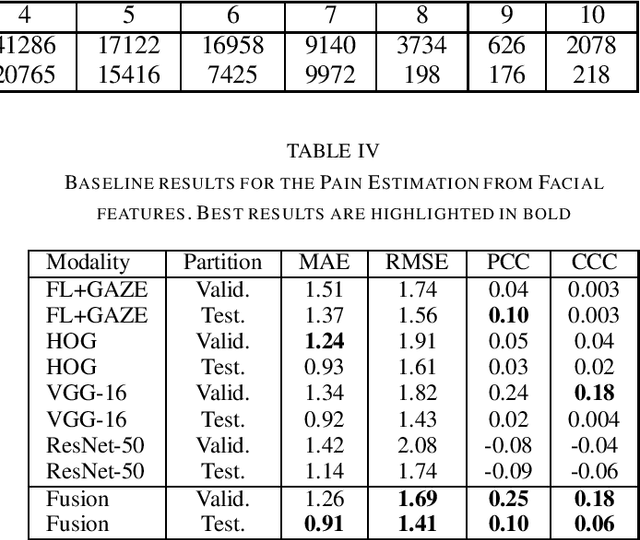

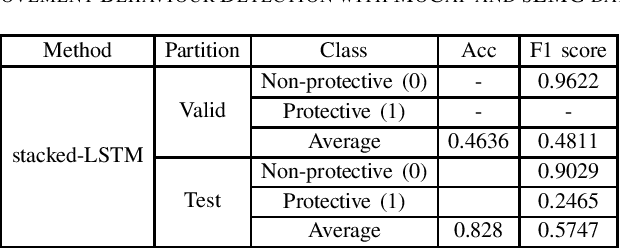

Abstract: The EmoPain 2020 Challenge is the first international competition aimed at creating a uniform platform for comparing machine learning and multimedia processing methods for automatic chronic pain assessment from human expressive behaviour, and for identifying pain-related behaviours. The objective of the challenge is to promote research into assistive technologies that improve the quality of life of people with chronic pain through real-time monitoring and feedback, helping them manage their condition and remain physically active. The challenge also aims to encourage the use of the relatively underutilised, albeit vital, bodily expression signals for automatic pain and pain-related emotion recognition. This paper presents a description of the challenge, the competition guidelines, the benchmarking dataset, and the baseline systems' architecture and performance on the three sub-tasks: pain estimation from facial expressions, pain recognition from multimodal movement, and protective movement behaviour detection.
