Multimodal Data Fusion based on the Global Workspace Theory

Paper and Code

Jan 26, 2020

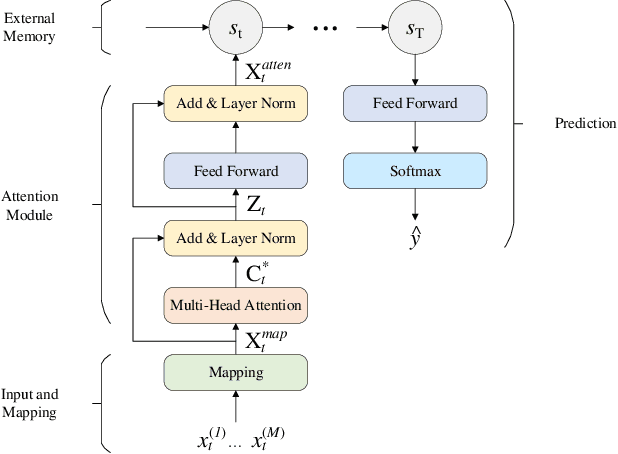

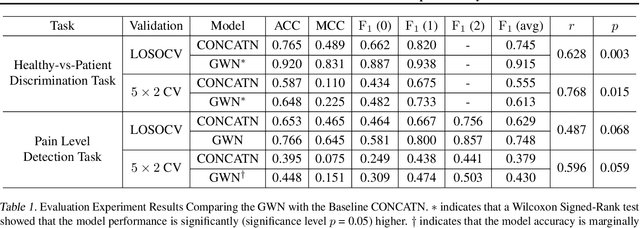

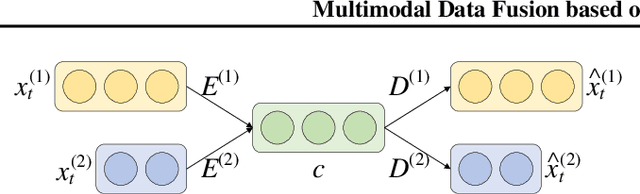

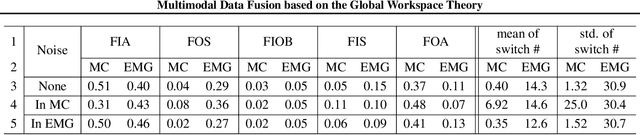

We propose a novel neural network architecture, named the Global Workspace Network (GWN), that addresses the challenge of dynamic uncertainties in multimodal data fusion. The GWN is inspired by the well-established Global Workspace Theory from cognitive science. We implement it as a model of attention between multiple modalities that evolves through time. The GWN achieved an F1 score of 0.92, averaged over two classes, in discriminating between patients and healthy participants on the multimodal EmoPain dataset, which was captured from people with chronic pain and healthy people performing different types of exercise movements in unconstrained settings. In this task, the GWN significantly outperformed a vanilla architecture. It also outperformed the vanilla model in the further classification of three pain levels for a patient (average F1 score = 0.75) on the same dataset. We further provide an extensive analysis of the behaviour of the GWN and its ability to deal with uncertainty in multimodal data.
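To make the reported metric concrete, here is a minimal sketch of an F1 score averaged over classes. It assumes the average is the unweighted (macro) mean of per-class F1 scores, which the abstract does not state explicitly. The function names and the toy labels are hypothetical and are not taken from the paper or the EmoPain dataset.

```python
def f1_per_class(y_true, y_pred, label):
    """F1 for one class, treating `label` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    denom = 2 * tp + fp + fn
    # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never occurs.
    return 2 * tp / denom if denom else 0.0


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all labels seen (assumed averaging)."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_per_class(y_true, y_pred, c) for c in labels) / len(labels)


# Toy, made-up labels purely for illustration (not EmoPain data).
y_true = ["patient", "patient", "patient", "healthy", "healthy", "healthy"]
y_pred = ["patient", "patient", "healthy", "healthy", "healthy", "healthy"]

print(f"macro F1 = {macro_f1(y_true, y_pred):.3f}")
```

With this averaging, each class contributes equally regardless of how many samples it has, which matters when the patient and healthy groups are of different sizes.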
