Youngjun Cho

Spacer: Towards Engineered Scientific Inspiration

Aug 25, 2025

Abstract:Recent advances in LLMs have made automated scientific research the next frontline in the path to artificial superintelligence. However, these systems are bound either to tasks of narrow scope or the limited creative capabilities of LLMs. We propose Spacer, a scientific discovery system that develops creative and factually grounded concepts without external intervention. Spacer attempts to achieve this via 'deliberate decontextualization,' an approach that disassembles information into atomic units - keywords - and draws creativity from unexplored connections between them. Spacer consists of (i) Nuri, an inspiration engine that builds keyword sets, and (ii) the Manifesting Pipeline that refines these sets into elaborate scientific statements. Nuri extracts novel, high-potential keyword sets from a keyword graph built with 180,000 academic publications in biological fields. The Manifesting Pipeline finds links between keywords, analyzes their logical structure, validates their plausibility, and ultimately drafts original scientific concepts. According to our experiments, the evaluation metric of Nuri accurately classifies high-impact publications with an AUROC score of 0.737. Our Manifesting Pipeline also successfully reconstructs core concepts from the latest top-journal articles solely from their keyword sets. An LLM-based scoring system estimates that this reconstruction was sound for over 85% of the cases. Finally, our embedding space analysis shows that outputs from Spacer are significantly more similar to leading publications compared with those from SOTA LLMs.
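For readers unfamiliar with the metric, the AUROC figure quoted in the abstract can be read as the probability that a randomly chosen high-impact publication receives a higher score than a randomly chosen other publication. The sketch below computes that quantity directly from pairwise comparisons. It is only an illustration: the function name `auroc` and the toy scores and labels are hypothetical and are not taken from the paper or its data.

```python
def auroc(scores, labels):
    """Probability that a random positive outscores a random negative.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical scores: label 1 marks a "high-impact" item, label 0 any other item.
toy_scores = [0.9, 0.8, 0.35, 0.6, 0.4, 0.2]
toy_labels = [1, 1, 1, 0, 0, 0]
toy_auroc = auroc(toy_scores, toy_labels)  # 7 of 9 positive/negative pairs are ordered correctly
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect separation, so 0.737 sits between the two.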

Efficient and Robust Multidimensional Attention in Remote Physiological Sensing through Target Signal Constrained Factorization

May 11, 2025

Abstract:Remote physiological sensing using camera-based technologies offers transformative potential for non-invasive vital sign monitoring across healthcare and human-computer interaction domains. Although deep learning approaches have advanced the extraction of physiological signals from video data, existing methods have not been sufficiently assessed for their robustness to domain shifts. These shifts in remote physiological sensing include variations in ambient conditions, camera specifications, head movements, facial poses, and physiological states which often impact real-world performance significantly. Cross-dataset evaluation provides an objective measure to assess generalization capabilities across these domain shifts. We introduce Target Signal Constrained Factorization module (TSFM), a novel multidimensional attention mechanism that explicitly incorporates physiological signal characteristics as factorization constraints, allowing more precise feature extraction. Building on this innovation, we present MMRPhys, an efficient dual-branch 3D-CNN architecture designed for simultaneous multitask estimation of photoplethysmography (rPPG) and respiratory (rRSP) signals from multimodal RGB and thermal video inputs. Through comprehensive cross-dataset evaluation on five benchmark datasets, we demonstrate that MMRPhys with TSFM significantly outperforms state-of-the-art methods in generalization across domain shifts for rPPG and rRSP estimation, while maintaining a minimal inference latency suitable for real-time applications. Our approach establishes new benchmarks for robust multitask and multimodal physiological sensing and offers a computationally efficient framework for practical deployment in unconstrained environments. The web browser-based application featuring on-device real-time inference of MMRPhys model is available at https://physiologicailab.github.io/mmrphys-live

FactorizePhys: Matrix Factorization for Multidimensional Attention in Remote Physiological Sensing

Nov 03, 2024

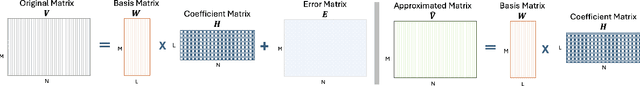

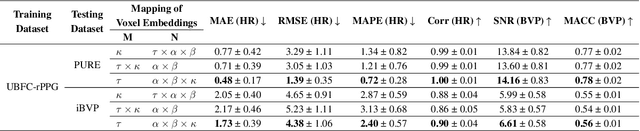

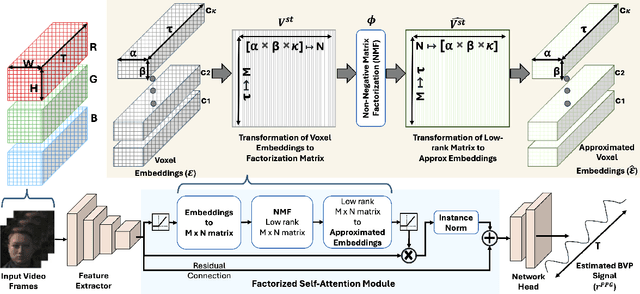

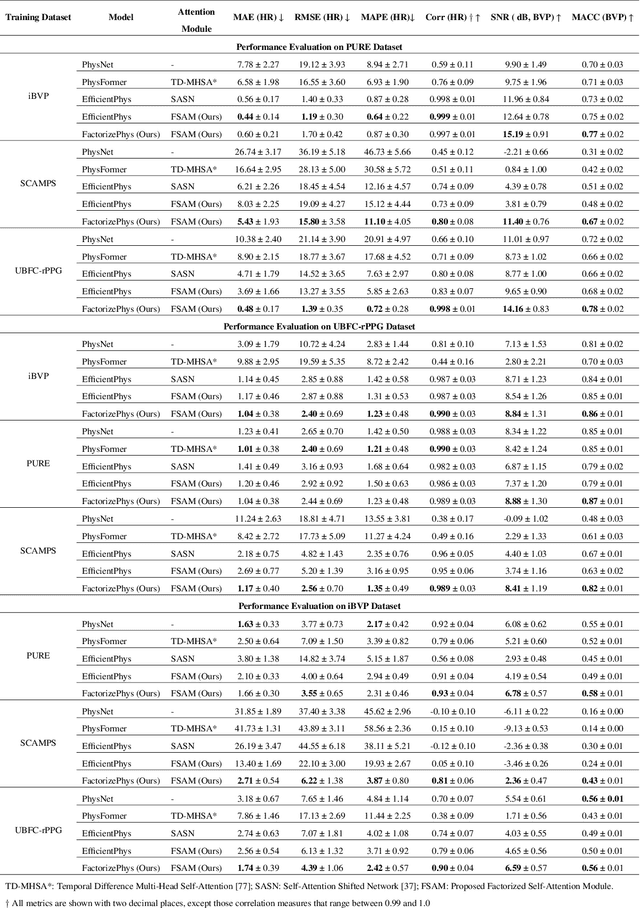

Abstract:Remote photoplethysmography (rPPG) enables non-invasive extraction of blood volume pulse signals through imaging, transforming spatial-temporal data into time series signals. Advances in end-to-end rPPG approaches have focused on this transformation where attention mechanisms are crucial for feature extraction. However, existing methods compute attention disjointly across spatial, temporal, and channel dimensions. Here, we propose the Factorized Self-Attention Module (FSAM), which jointly computes multidimensional attention from voxel embeddings using nonnegative matrix factorization. To demonstrate FSAM's effectiveness, we developed FactorizePhys, an end-to-end 3D-CNN architecture for estimating blood volume pulse signals from raw video frames. Our approach adeptly factorizes voxel embeddings to achieve comprehensive spatial, temporal, and channel attention, enhancing performance of generic signal extraction tasks. Furthermore, we deploy FSAM within an existing 2D-CNN-based rPPG architecture to illustrate its versatility. FSAM and FactorizePhys are thoroughly evaluated against state-of-the-art rPPG methods, each representing different types of architecture and attention mechanism. We perform ablation studies to investigate the architectural decisions and hyperparameters of FSAM. Experiments on four publicly available datasets and intuitive visualization of learned spatial-temporal features substantiate the effectiveness of FSAM and enhanced cross-dataset generalization in estimating rPPG signals, suggesting its broader potential as a multidimensional attention mechanism. The code is accessible at https://github.com/PhysiologicAILab/FactorizePhys.
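To make the idea of factorization-based attention more concrete, the following is a minimal sketch of a rank-1 nonnegative factorization of a small matrix. It is not the authors' FSAM implementation (see the linked repository for that). It assumes, purely for illustration, that the voxel embeddings have been arranged as a nonnegative matrix `V` whose rows are channels and whose columns are flattened spatial-temporal positions. The names `rank1_nmf` and `outer`, the rank of 1, and the alternating update scheme are all choices made here for brevity.

```python
def rank1_nmf(V, iters=50, eps=1e-12):
    """Approximate a nonnegative matrix V (list of rows) as the outer product w h^T.

    Uses alternating closed-form updates; with nonnegative V and a positive
    start, both factors stay nonnegative.
    """
    m, n = len(V), len(V[0])
    w = [1.0] * m  # one weight per row (assumed: channel)
    h = [1.0] * n  # one weight per column (assumed: spatial-temporal position)
    for _ in range(iters):
        ww = sum(x * x for x in w) + eps
        h = [sum(w[i] * V[i][j] for i in range(m)) / ww for j in range(n)]
        hh = sum(x * x for x in h) + eps
        w = [sum(V[i][j] * h[j] for j in range(n)) / hh for i in range(m)]
    return w, h


def outer(w, h):
    """Low-rank reconstruction w h^T, usable as a joint attention map over V."""
    return [[wi * hj for hj in h] for wi in w]


# Toy nonnegative "embedding" matrix (hypothetical values, exactly rank 1).
V = [[1.0, 0.5, 2.0],
     [2.0, 1.0, 4.0],
     [3.0, 1.5, 6.0]]
w, h = rank1_nmf(V)
attention = outer(w, h)
```

Because a single pair of factors spans every row and column at once, the reconstruction weights all dimensions jointly instead of computing a separate attention map per dimension, which is the contrast the abstract draws with existing methods.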

Exploring Human-AI Perception Alignment in Sensory Experiences: Do LLMs Understand Textile Hand?

Jun 05, 2024

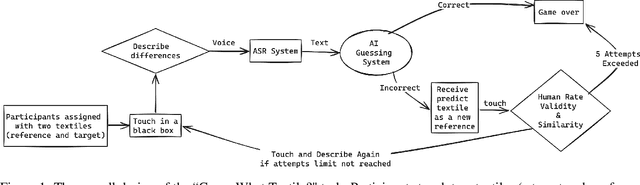

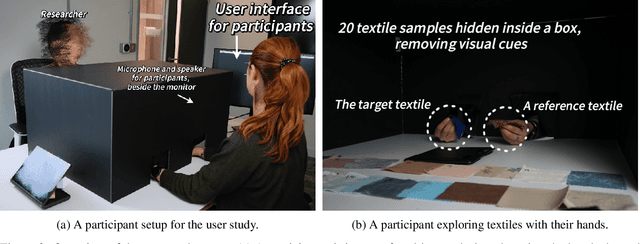

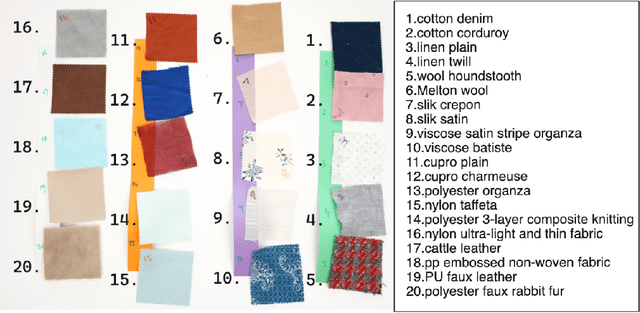

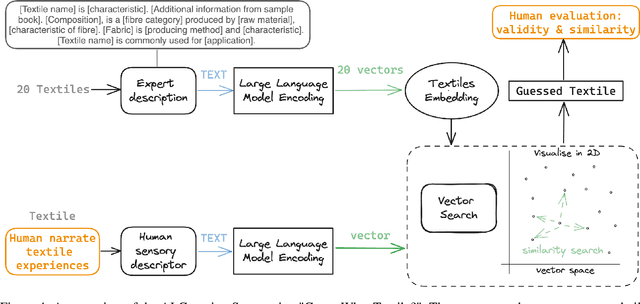

Abstract:Aligning the behaviour of large language models (LLMs) with human intent is critical for future AI. An important yet often overlooked aspect of this alignment is perceptual alignment. Perceptual modalities like touch are more multifaceted and nuanced compared to other sensory modalities such as vision. This work investigates how well LLMs align with human touch experiences using the "textile hand" task. We created a "Guess What Textile" interaction in which participants were given two textile samples -- a target and a reference -- to handle. Without seeing them, participants described the differences between them to the LLM. Using these descriptions, the LLM attempted to identify the target textile by assessing similarity within its high-dimensional embedding space. Our results suggest that a degree of perceptual alignment exists, but it varies significantly among different textile samples. For example, LLM predictions are well aligned for silk satin, but not for cotton denim. Moreover, participants did not perceive their textile experiences as closely matched by the LLM predictions. This is only the first exploration into perceptual alignment around touch, exemplified through textile hand. We discuss possible sources of this alignment variance, and how better human-AI perceptual alignment can benefit future everyday tasks.
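The identification step described in the abstract, picking the textile whose embedding is closest to the participant's description, can be sketched with cosine similarity. This is an assumption about the similarity measure; the abstract only says that similarity in the embedding space was assessed. The three-dimensional vectors, the candidate names, and the helper `most_similar` are hypothetical stand-ins for real embeddings and study materials.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def most_similar(query, candidates):
    """Return the candidate name whose vector is most similar to the query."""
    return max(candidates, key=lambda name: cosine(query, candidates[name]))


# Toy vectors standing in for embeddings of candidate textiles (hypothetical).
candidates = {
    "textile A": [0.9, 0.1, 0.2],
    "textile B": [0.1, 0.9, 0.3],
}
# Toy vector standing in for the embedding of a participant's description.
description = [0.8, 0.2, 0.1]
guess = most_similar(description, candidates)
```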

Multi-Modal Hybrid Learning and Sequential Training for RGB-T Saliency Detection

Sep 13, 2023

Abstract:RGB-T saliency detection has emerged as an important computer vision task, identifying conspicuous objects in challenging scenes such as dark environments. However, existing methods neglect the characteristics of cross-modal features and rely solely on network structures to fuse RGB and thermal features. To address this, we first propose a Multi-Modal Hybrid loss (MMHL) that comprises supervised and self-supervised loss functions. The supervised loss component of MMHL distinctly utilizes semantic features from different modalities, while the self-supervised loss component reduces the distance between RGB and thermal features. We further consider both spatial and channel information during feature fusion and propose the Hybrid Fusion Module to effectively fuse RGB and thermal features. Lastly, instead of jointly training the network with cross-modal features, we implement a sequential training strategy which performs training only on RGB images in the first stage and then learns cross-modal features in the second stage. This training strategy improves saliency detection performance without computational overhead. Results from performance evaluation and ablation studies demonstrate the superior performance achieved by the proposed method compared with the existing state-of-the-art methods.

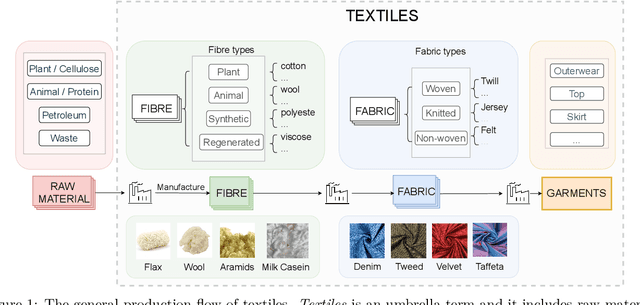

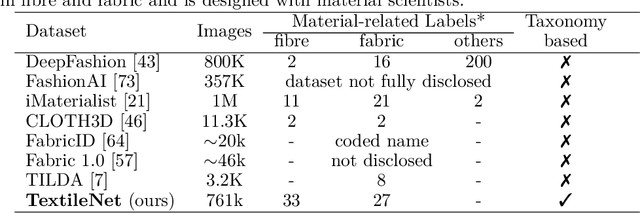

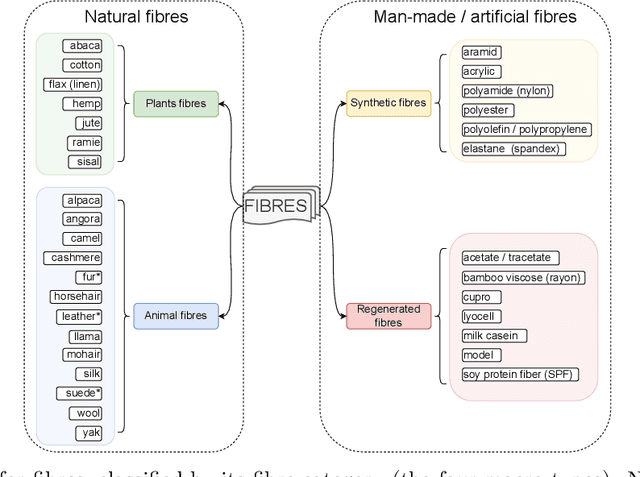

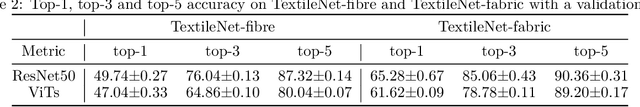

TextileNet: A Material Taxonomy-based Fashion Textile Dataset

Jan 15, 2023

Abstract:The rise of Machine Learning (ML) is gradually digitalizing and reshaping the fashion industry. Recent years have witnessed a number of fashion AI applications, for example, virtual try-ons. Textile material identification and categorization play a crucial role in the fashion textile sector, including fashion design, retail, and recycling. At the same time, Net Zero is a global goal and the fashion industry is undergoing a significant change so that textile materials can be reused, repaired and recycled in a sustainable manner. There is still a challenge in identifying textile materials automatically for garments, as we lack a low-cost and effective technique for identifying them. In light of this, we build the first fashion textile dataset, TextileNet, based on textile material taxonomies - a fibre taxonomy and a fabric taxonomy generated in collaboration with material scientists. TextileNet can be used to train and evaluate state-of-the-art Deep Learning models for textile materials. We hope to standardize textile-related datasets through the use of taxonomies. TextileNet contains 33 fibre labels and 27 fabric labels, and has 760,949 images in total. We use standard Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs) to establish baselines for this dataset. Future applications for this dataset range from textile classification to optimization of the textile supply chain and interactive design for consumers. We envision that this can contribute to the development of a new AI-based fashion platform.

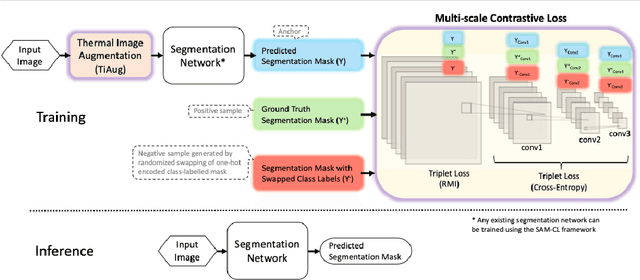

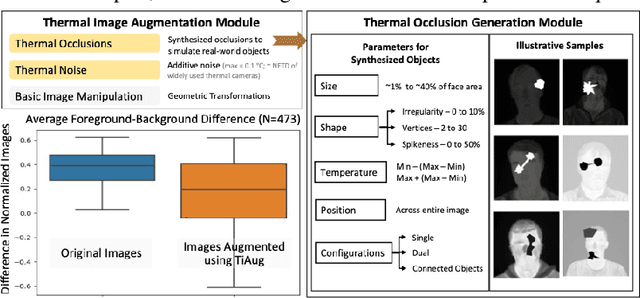

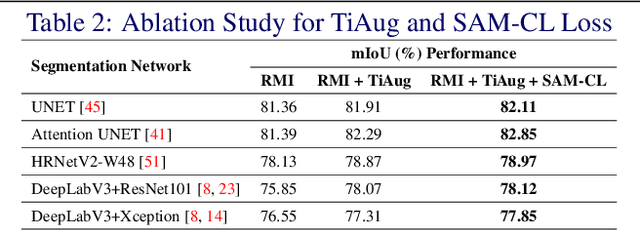

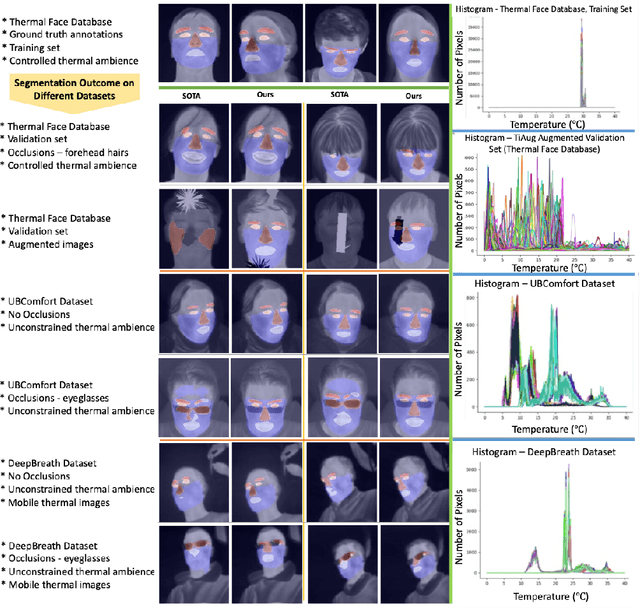

Self-adversarial Multi-scale Contrastive Learning for Semantic Segmentation of Thermal Facial Images

Oct 07, 2022

Abstract:Segmentation of thermal facial images is a challenging task. This is because facial features often lack salience due to high-dynamic thermal range scenes and occlusion issues. Limited availability of datasets from unconstrained settings further limits the use of the state-of-the-art segmentation networks, loss functions and learning strategies which have been built and validated for RGB images. To address the challenge, we propose the Self-Adversarial Multi-scale Contrastive Learning (SAM-CL) framework as a new training strategy for thermal image segmentation. The SAM-CL framework consists of a SAM-CL loss function and a thermal image augmentation (TiAug) module as a domain-specific augmentation technique. We use the Thermal-Face-Database to demonstrate the effectiveness of our approach. Experiments conducted on existing segmentation networks (UNET, Attention-UNET, DeepLabV3 and HRNetv2) show consistent performance gains from the SAM-CL framework. Furthermore, we present a qualitative analysis with the UBComfort and DeepBreath datasets to discuss how our proposed methods perform in handling unconstrained situations.

Rethinking Eye-blink: Assessing Task Difficulty through Physiological Representation of Spontaneous Blinking

Feb 12, 2021

Abstract:Continuous assessment of task difficulty and mental workload is essential in improving the usability and accessibility of interactive systems. Eye tracking data has often been investigated to achieve this ability, with reports on the limited role of standard blink metrics. Here, we propose a new approach to the analysis of eye-blink responses for automated estimation of task difficulty. The core module is a time-frequency representation of eye-blink, which aims to capture the richness of information reflected on blinking. In our first study, we show that this method significantly improves the sensitivity to task difficulty. We then demonstrate how to form a framework where the represented patterns are analyzed with multi-dimensional Long Short-Term Memory recurrent neural networks for their non-linear mapping onto difficulty-related parameters. This framework outperformed other methods that used hand-engineered features. This approach works with any built-in camera, without requiring specialized devices. We conclude by discussing how Rethinking Eye-blink can benefit real-world applications.

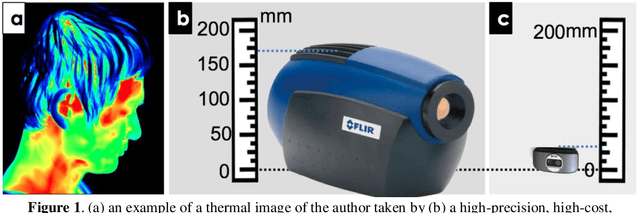

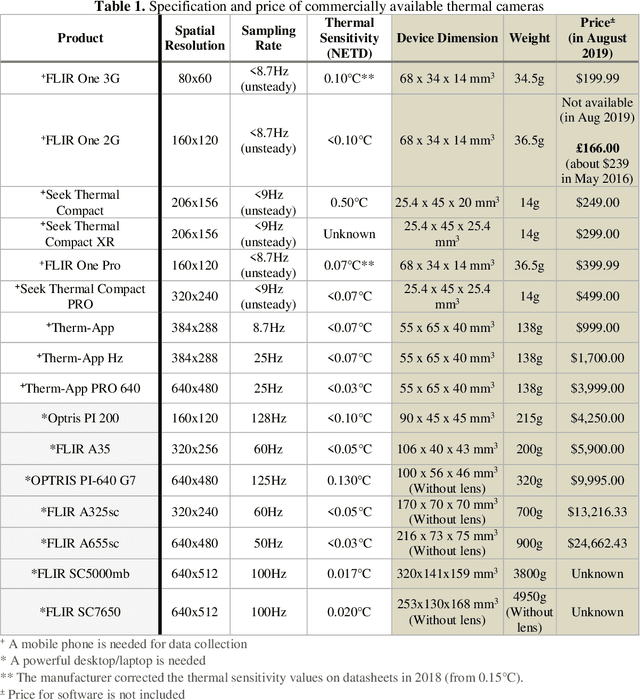

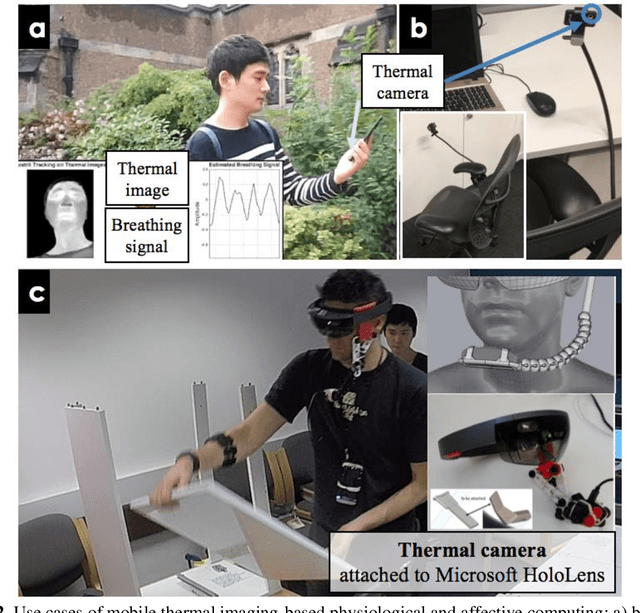

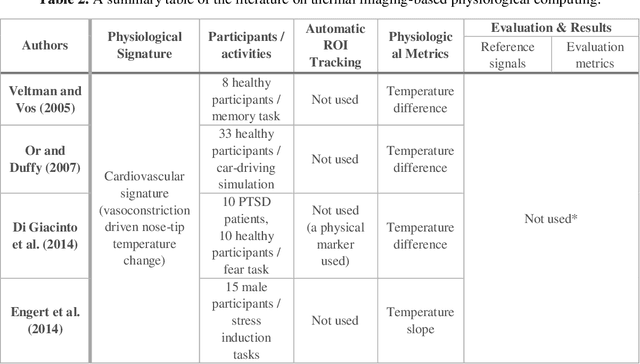

Physiological and Affective Computing through Thermal Imaging: A Survey

Aug 27, 2019

Abstract:Thermal imaging-based physiological and affective computing is an emerging research area enabling technologies to monitor our bodily functions and understand psychological and affective needs in a contactless manner. However, until recently, research has been mainly carried out in very controlled lab settings. As small-size and even low-cost versions of thermal video cameras have started to appear on the market, mobile thermal imaging is opening its door to ubiquitous and real-world applications. Here we review the literature on the use of thermal imaging to track changes in physiological cues relevant to affective computing and the technological requirements set so far. In doing so, we aim to establish computational and methodological pipelines from thermal images of the human skin to affective states and outline the research opportunities and challenges to be tackled to make ubiquitous real-life thermal imaging-based affect monitoring a possibility.

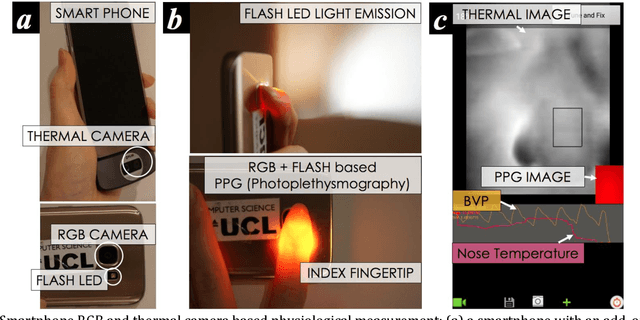

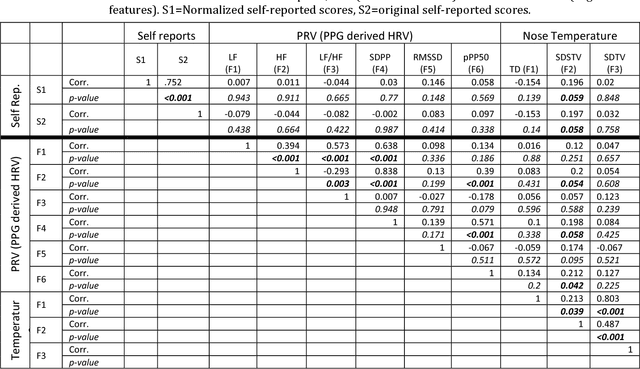

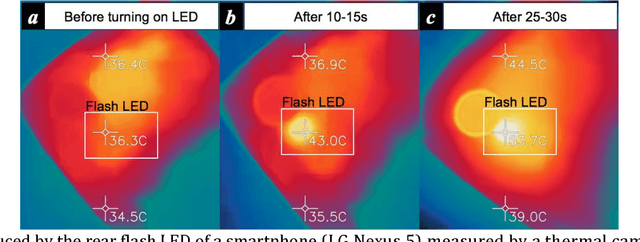

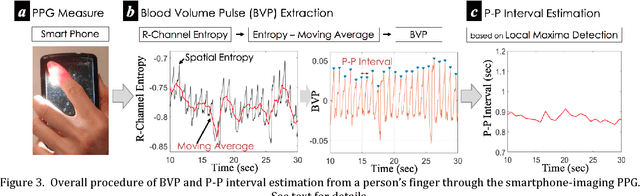

Instant Automated Inference of Perceived Mental Stress through Smartphone PPG and Thermal Imaging

Dec 21, 2018

Abstract:Background: A smartphone is a promising tool for daily cardiovascular measurement and mental stress monitoring. A smartphone camera-based PhotoPlethysmoGraphy (PPG) and a low-cost thermal camera can be used to create cheap, convenient and mobile monitoring systems. However, to ensure reliable monitoring results, a person has to remain still for several minutes while a measurement is being taken. This is very cumbersome and makes its use in real-life mobile situations quite impractical. Objective: We propose a system which combines PPG and thermography with the aim of improving cardiovascular signal quality and capturing stress responses quickly. Methods: Using a smartphone camera with a low cost thermal camera added on, we built a novel system which continuously and reliably measures two different types of cardiovascular events: i) blood volume pulse and ii) vasoconstriction/dilation-induced temperature changes of the nose tip. 17 healthy participants, involved in a series of stress-inducing mental workload tasks, measured their physiological responses to stressors over a short window of time (20 seconds) immediately after each task. Participants reported their level of perceived mental stress using a 10-cm Visual Analogue Scale (VAS). We used normalized K-means clustering to reduce interpersonal differences in the self-reported ratings. For the instant stress inference task, we built novel low-level feature sets representing variability of cardiovascular patterns. We then used the automatic feature learning capability of artificial Neural Networks (NN) to improve the mapping between the extracted set of features and the self-reported ratings. We compared our proposed method with existing hand-engineered features-based machine learning methods. Results, Conclusions: ... due to limited space here, we refer to our manuscript.
