Sos S. Agaian

FactorizePhys: Matrix Factorization for Multidimensional Attention in Remote Physiological Sensing

Nov 03, 2024

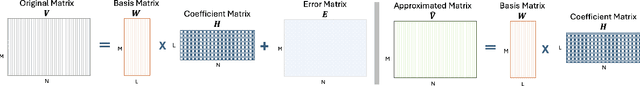

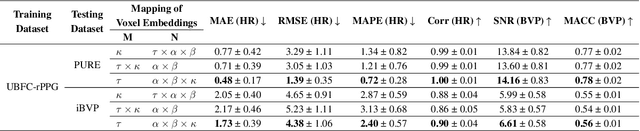

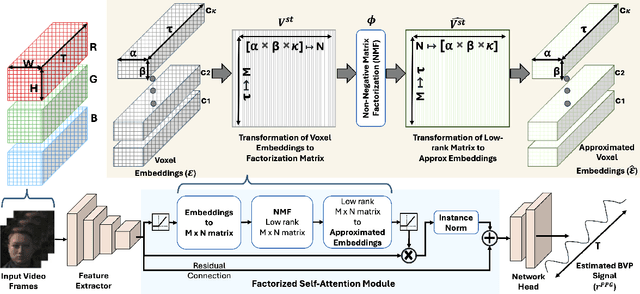

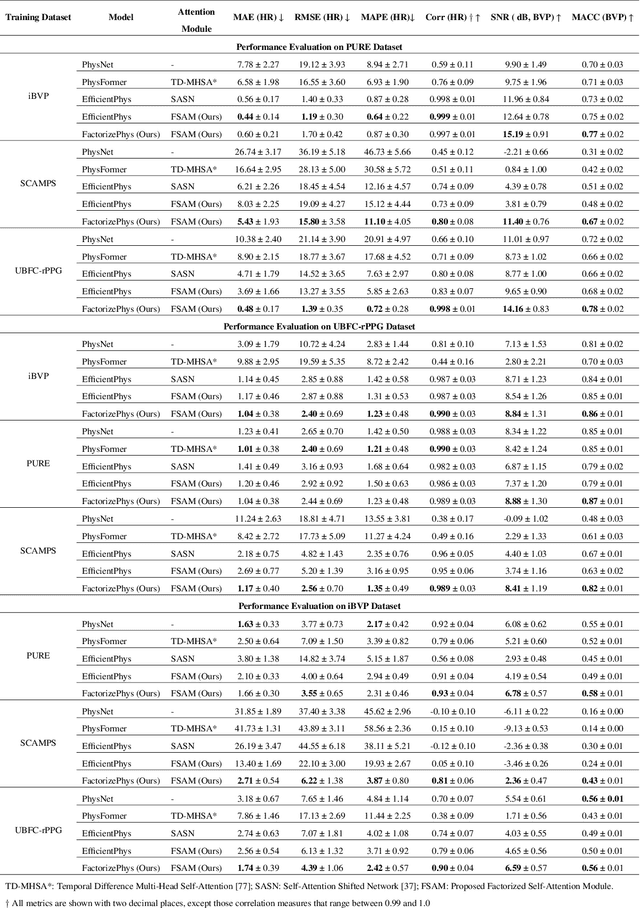

Abstract: Remote photoplethysmography (rPPG) enables non-invasive extraction of blood volume pulse signals through imaging, transforming spatial-temporal data into time series signals. Advances in end-to-end rPPG approaches have focused on this transformation where attention mechanisms are crucial for feature extraction. However, existing methods compute attention disjointly across spatial, temporal, and channel dimensions. Here, we propose the Factorized Self-Attention Module (FSAM), which jointly computes multidimensional attention from voxel embeddings using nonnegative matrix factorization. To demonstrate FSAM's effectiveness, we developed FactorizePhys, an end-to-end 3D-CNN architecture for estimating blood volume pulse signals from raw video frames. Our approach adeptly factorizes voxel embeddings to achieve comprehensive spatial, temporal, and channel attention, enhancing performance of generic signal extraction tasks. Furthermore, we deploy FSAM within an existing 2D-CNN-based rPPG architecture to illustrate its versatility. FSAM and FactorizePhys are thoroughly evaluated against state-of-the-art rPPG methods, each representing different types of architecture and attention mechanism. We perform ablation studies to investigate the architectural decisions and hyperparameters of FSAM. Experiments on four publicly available datasets and intuitive visualization of learned spatial-temporal features substantiate the effectiveness of FSAM and enhanced cross-dataset generalization in estimating rPPG signals, suggesting its broader potential as a multidimensional attention mechanism. The code is accessible at https://github.com/PhysiologicAILab/FactorizePhys.
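As an illustration of the idea in the abstract above, the following is a minimal NumPy sketch of attention derived from a low-rank nonnegative factorization of voxel embeddings. It is not the authors' FSAM implementation (the linked repository is the reference for that). The function names `nmf` and `factorized_attention`, the (frames, channels, height, width) layout, the reshape to a frames-by-features matrix, the rank, and the multiplicative-update solver are all assumptions made for this example.

```python
import numpy as np

def nmf(V, rank=1, steps=50, eps=1e-8, seed=0):
    """Approximate a nonnegative matrix V as W @ H with W, H >= 0,
    using Lee-Seung multiplicative updates (an assumed solver choice)."""
    rng = np.random.default_rng(seed)
    n, m = V.shape
    W = rng.random((n, rank)) + eps
    H = rng.random((rank, m)) + eps
    for _ in range(steps):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

def factorized_attention(x, rank=1, steps=50):
    """Reweight nonnegative embeddings by their low-rank NMF reconstruction.

    x has the assumed shape (frames, channels, height, width). Because one
    factorization spans all axes, the resulting weights depend jointly on
    time, channel, and space rather than on each axis separately.
    """
    t, c, h, w = x.shape
    V = x.reshape(t, c * h * w)        # hypothetical choice of matrix layout
    W, H = nmf(V, rank=rank, steps=steps)
    attention = (W @ H).reshape(x.shape)
    return x * attention

# Usage with random nonnegative embeddings (e.g. post-ReLU features).
x = np.random.default_rng(1).random((8, 4, 5, 5))
y = factorized_attention(x)
```

A rank of 1 keeps the sketch cheap; a real model would treat the rank and the number of update steps as hyperparameters.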

MUCM-Net: A Mamba Powered UCM-Net for Skin Lesion Segmentation

May 24, 2024

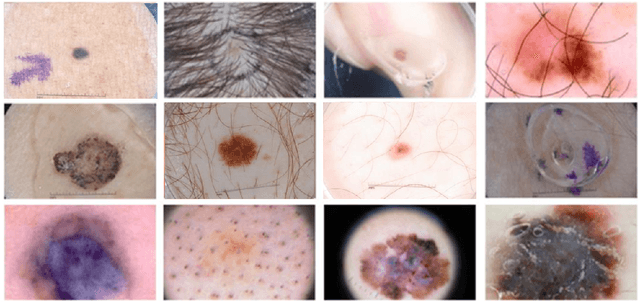

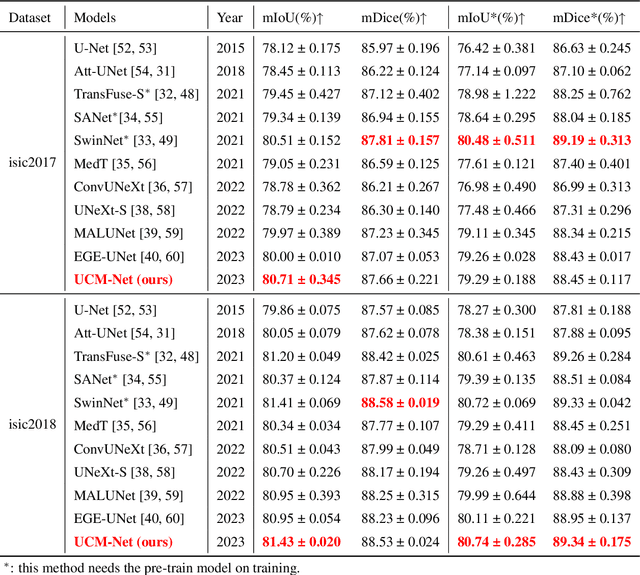

Abstract: Skin lesion segmentation is key for early skin cancer detection. Challenges in automatic segmentation from dermoscopic images include variations in color, texture, and artifacts of indistinct lesion boundaries. Deep learning methods like CNNs and U-Net have shown promise in addressing these issues. To further aid early diagnosis, especially on mobile devices with limited computing power, we present MUCM-Net. This efficient model combines Mamba State-Space Models with our UCM-Net architecture for improved feature learning and segmentation. MUCM-Net's Mamba-UCM Layer is optimized for mobile deployment, offering high accuracy with low computational needs. Tested on ISIC datasets, it outperforms other methods in accuracy and computational efficiency, making it a scalable tool for early detection in settings with limited resources. Our MUCM-Net source code is available for research and collaboration, supporting advances in mobile health diagnostics and the fight against skin cancer. To facilitate accessibility and further research in the field, the MUCM-Net source code is available at https://github.com/chunyuyuan/MUCM-Net.

NDELS: A Novel Approach for Nighttime Dehazing, Low-Light Enhancement, and Light Suppression

Dec 11, 2023

Abstract: This paper tackles the intricate challenge of improving the quality of nighttime images under hazy and low-light conditions. Overcoming issues including nonuniform illumination glows, texture blurring, glow effects, color distortion, noise disturbance, and overall low light has proven daunting. Despite the inherent difficulties, this paper introduces a pioneering solution named Nighttime Dehazing, Low-Light Enhancement, and Light Suppression (NDELS). NDELS utilizes a unique network that combines three essential processes to enhance visibility, brighten low-light regions, and effectively suppress glare from bright light sources. Whereas progress in nighttime dehazing has been limited compared with its daytime counterpart, NDELS presents a comprehensive and innovative approach. The efficacy of NDELS is rigorously validated through extensive comparisons with eight state-of-the-art algorithms across four diverse datasets. Experimental results showcase the superior performance of our method in terms of overall image quality, including color and edge enhancement. These results are measured with quantitative (PSNR, SSIM) and qualitative (CLIPIQA, MANIQA, TRES) metrics.

UCM-Net: A Lightweight and Efficient Solution for Skin Lesion Segmentation using MLP and CNN

Oct 14, 2023

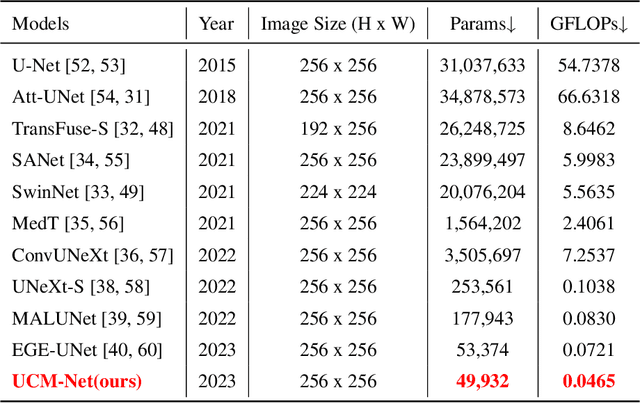

Abstract: Skin cancer is a significant public health problem, and computer-aided diagnosis can help to prevent and treat it. A crucial step for computer-aided diagnosis is accurately segmenting skin lesions in images, which allows for lesion detection, classification, and analysis. However, this task is challenging due to the diverse characteristics of lesions, such as appearance, shape, size, color, texture, and location, as well as image quality issues like noise, artifacts, and occlusions. Deep learning models have recently been applied to skin lesion segmentation, but they have high parameter counts and computational demands, making them unsuitable for mobile health applications. To address this challenge, we propose UCM-Net, a novel, efficient, and lightweight solution that integrates Multi-Layer Perceptrons (MLP) and Convolutional Neural Networks (CNN). Unlike conventional UNet architectures, our UCMNet-Block reduces parameter overhead and enhances UCM-Net's learning capabilities, leading to robust segmentation performance. We validate UCM-Net's competitiveness through extensive experiments on the ISIC 2017 and ISIC 2018 datasets. Remarkably, UCM-Net has less than 50KB of parameters and less than 0.05 Giga-Operations Per Second (GLOPs), setting a new possible standard for efficiency in skin lesion segmentation. The source code will be publicly available.

Quantum-Inspired Edge Detection Algorithms Implementation using New Dynamic Visual Data Representation and Short-Length Convolution Computation

Oct 31, 2022

Abstract: As the availability of imagery data continues to swell, so do the demands on transmission, storage, and processing power. The processing requirements for handling this plethora of data are quickly outpacing the utility of conventional processing techniques. Transitioning to quantum processing and algorithms that offer promising efficiencies over conventional methods can address some of these issues. However, to make this transformation possible, fundamental issues of implementing real-time quantum algorithms must be overcome for crucial processes needed for intelligent analysis applications. For example, consider edge detection tasks, which require time-consuming acquisition processes and are further hindered by the complexity of the devices used, thus limiting feasibility for implementation in real-time applications. Convolution is another example of an operation that is essential for signal and image processing applications, where the mathematical operations consist of an intelligent mixture of multiplication and addition that require considerable computational resources. This paper studies a new paired transform-based quantum representation and computation of convolutions and gradients of 1-D and 2-D signals. A new visual data representation is defined to simplify convolution calculations, making it feasible to parallelize convolution and gradient operations for more efficient performance. The new data representation is demonstrated on multiple illustrative examples for quantum edge detection, gradients, and convolution. Furthermore, the efficiency of the proposed approach is shown on real-world images.

An Efficient Calculation of Quaternion Correlation of Signals and Color Images

May 10, 2022

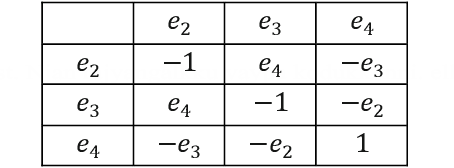

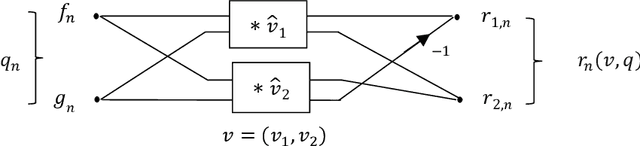

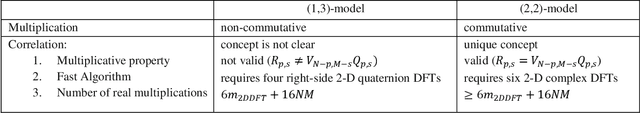

Abstract: Over the past century, correlation has been an essential mathematical technique utilized in engineering sciences, including practically every signal/image processing field. This paper describes an effective method of calculating the correlation function of signals and color images in quaternion algebra. We propose using the quaternions with a commutative multiplication operation and defining the corresponding correlation function in this arithmetic. The correlation between quaternion signals and images can be calculated by multiplying two quaternion DFTs of signals and images. The complexity of the correlation of color images is three times higher than in complex algebra.
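For orientation, the complex-algebra baseline that the abstract above compares against can be sketched as follows: circular cross-correlation obtained by multiplying two DFTs. This is the ordinary complex case only, not the commutative-quaternion method of the paper; the function name `circular_correlation` and the 1-D test signals are assumptions for illustration.

```python
import numpy as np

def circular_correlation(x, y):
    """Circular cross-correlation r[k] = sum_n conj(x[n]) * y[(n + k) mod N],
    computed as the inverse DFT of conj(DFT(x)) * DFT(y)."""
    X = np.fft.fft(x)
    Y = np.fft.fft(y)
    return np.fft.ifft(np.conj(X) * Y)

# Usage: compare against the direct O(N^2) definition.
rng = np.random.default_rng(0)
x = rng.standard_normal(16) + 1j * rng.standard_normal(16)
y = rng.standard_normal(16) + 1j * rng.standard_normal(16)

fast = circular_correlation(x, y)
direct = np.array([np.sum(np.conj(x) * np.roll(y, -k)) for k in range(len(x))])
```

The DFT route replaces the quadratic-time sum with three transforms and one element-wise product.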

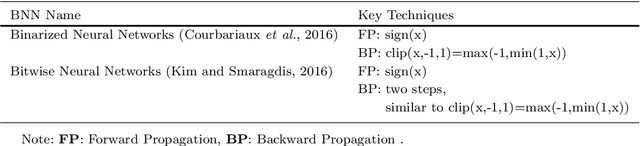

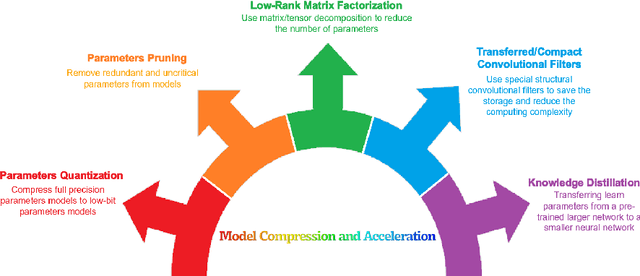

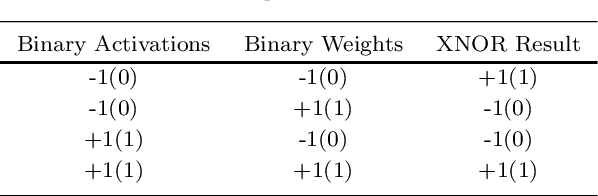

A comprehensive review of Binary Neural Network

Oct 19, 2021

Abstract: The Binary Neural Network (BNN) method is an extreme application of convolutional neural network (CNN) parameter quantization. As opposed to the original CNN methods, which employed floating-point computation with full-precision weights and activations, BNN uses 1-bit activations and weights. With BNNs, a significant amount of storage, network complexity, and energy consumption can be reduced, and neural networks can be implemented more efficiently in embedded applications. Unfortunately, binarization causes severe information loss. A gap still exists between full-precision CNN models and their binarized counterparts. The recent developments in BNN have led to a lot of algorithms and solutions that have helped address this issue. This article provides a full overview of recent developments in BNN. The present paper focuses exclusively on networks with 1-bit activations and weights, as opposed to previous surveys in which low-bit works are mixed in. In this paper, we conduct a complete investigation of BNN's development from their predecessors to the latest BNN algorithms and techniques, presenting a broad design pipeline, and discussing each module's variants. Along the way, this paper examines (a) purpose: the early successes and challenges of BNN; (b) optimization: selected representative works that contain key optimization techniques; (c) deployment: open-source frameworks for BNN modeling and development; (d) terminal: efficient computing architectures and devices for BNN; and (e) applications: diverse applications with BNN. Moreover, this paper discusses potential directions and future research opportunities for the latest BNN algorithms and techniques.
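The efficiency argument in the abstract above rests on a well-known identity: a dot product of two vectors with entries in {-1, +1} can be computed from bitwise XNOR and a popcount. The sketch below checks that identity in NumPy. The function names and the sign-based binarization rule (zero maps to +1) are assumptions for this example, not a method taken from the survey.

```python
import numpy as np

def binarize(x):
    """Map real values to {-1, +1} by sign (zero is mapped to +1)."""
    return np.where(x >= 0, 1, -1).astype(np.int8)

def xnor_popcount_dot(a_bits, b_bits):
    """Dot product of two {-1, +1} vectors stored as booleans
    (True encodes +1, False encodes -1).

    Each matching bit pair contributes +1 and each mismatch -1,
    so dot = matches - mismatches = 2 * matches - n.
    """
    n = a_bits.size
    matches = np.count_nonzero(~(a_bits ^ b_bits))  # XNOR, then popcount
    return 2 * matches - n

# Usage: the bitwise result equals the integer dot product.
rng = np.random.default_rng(0)
w = binarize(rng.standard_normal(64))
a = binarize(rng.standard_normal(64))

reference = int(w.astype(np.int32) @ a.astype(np.int32))
bitwise = xnor_popcount_dot(w > 0, a > 0)
```

On hardware, the boolean arrays would be packed into machine words so that one XNOR and one popcount instruction handle many weights at once.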

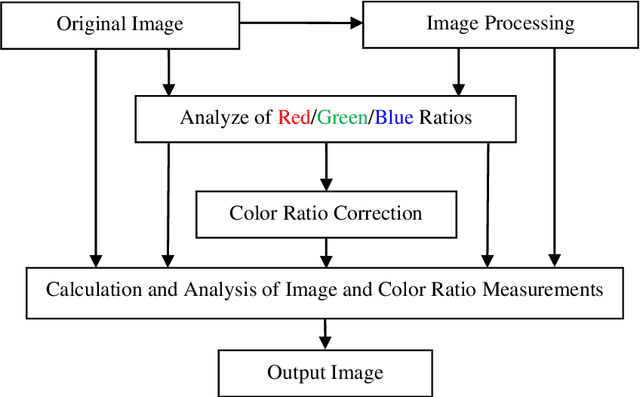

Color-Coded Symbology and New Computer Vision Tool to Predict the Historical Color Pallets of the Renaissance Oil Artworks

Feb 27, 2021

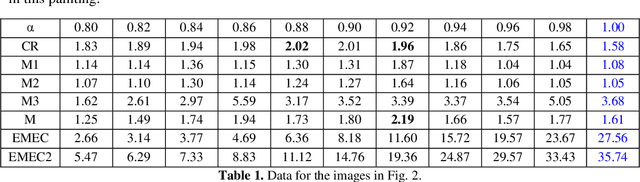

Abstract: In this paper, we discuss possible color palettes and the prediction and analysis of the originality of the colors that artists used in Renaissance oil paintings. The goal of this framework is to use color symbology and image enhancement tools to predict the historical color palettes of Renaissance oil artworks. This work is only the start of a development to explore the possibilities of predicting the color palettes of Renaissance oil artworks. We believe that the framework might be very useful in predicting the color palettes of Renaissance oil artworks and other artworks. The 105 images have been taken from the paintings of three well-known artists, Rafael, Leonardo Da Vinci, and Rembrandt, that are available in Olga's Gallery. Images are processed in the frequency domain to enhance image quality, and the ratios of primary colors are calculated and analyzed using new color-ratio measurements.

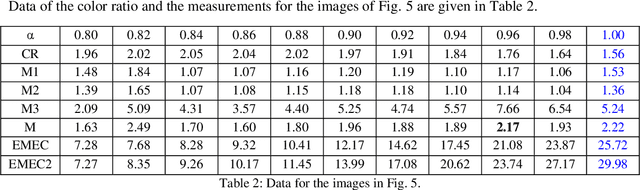

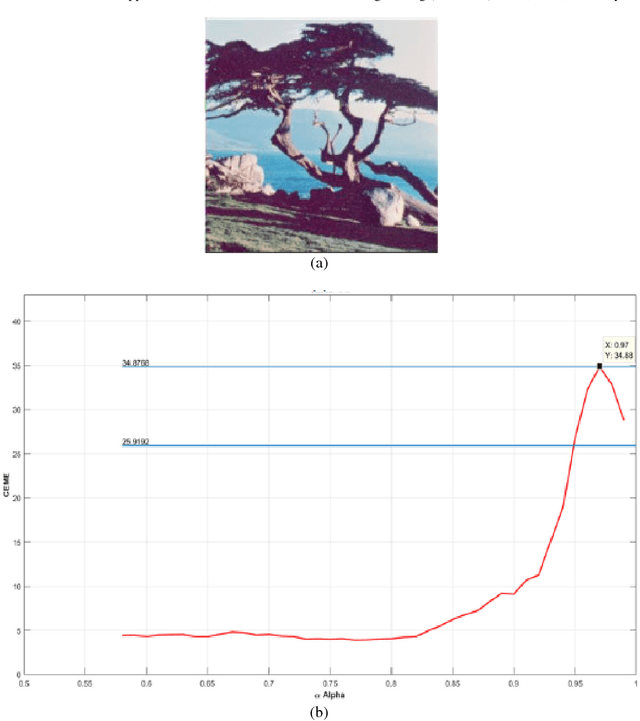

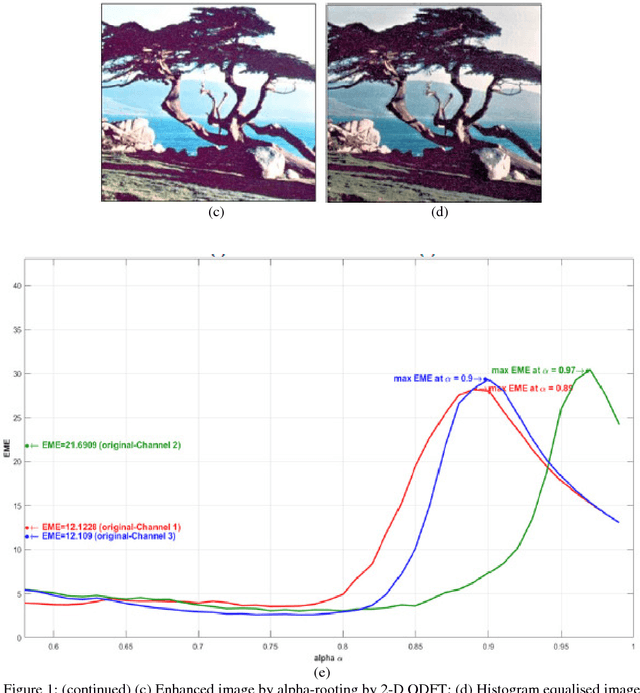

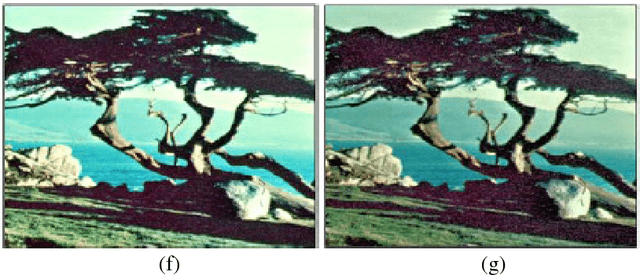

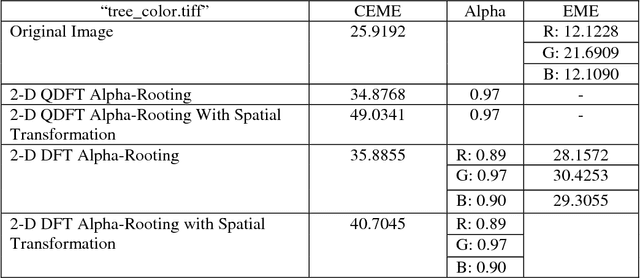

Alpha-rooting color image enhancement method by two-side 2-D quaternion discrete Fourier transform followed by spatial transformation

Jul 20, 2018

Abstract: In this paper, a quaternion approach to image enhancement is proposed in which the color in the image is treated as a single entity. This new method is referred to as the alpha-rooting method of color image enhancement by the two-dimensional quaternion discrete Fourier transform (2-D QDFT) followed by a spatial transformation. The results of the proposed color image enhancement method are compared with those of its counterpart, the channel-by-channel enhancement algorithm by the 2-D DFT. The image enhancements are quantified by an enhancement measure based on visual perception, referred to as the color enhancement measure estimation (CEME). The preliminary experimental results show that the quaternion approach to image enhancement is an effective color image enhancement technique.

* 21 pages
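The channel-by-channel baseline mentioned in the abstract above can be sketched in a few lines. Classical alpha-rooting raises the magnitude of each 2-D DFT coefficient to a power alpha (typically between 0 and 1) while keeping its phase, which boosts weaker, mostly high-frequency components relative to strong ones. The function name `alpha_rooting` and the default alpha are assumptions; the quaternion 2-D QDFT variant and the spatial transformation proposed in the paper are not shown.

```python
import numpy as np

def alpha_rooting(channel, alpha=0.9):
    """Alpha-rooting of one image channel via the 2-D DFT.

    Each coefficient F is replaced by F * |F|**(alpha - 1), so the
    magnitude becomes |F|**alpha and the phase is unchanged.
    """
    F = np.fft.fft2(channel)
    magnitude = np.abs(F)
    scale = np.ones_like(magnitude)
    nonzero = magnitude > 0                 # avoid 0 ** negative power
    scale[nonzero] = magnitude[nonzero] ** (alpha - 1.0)
    # For a real input the scaled spectrum stays conjugate-symmetric,
    # so the inverse transform is real up to rounding error.
    return np.real(np.fft.ifft2(F * scale))

# Usage: enhance each channel of an RGB image independently.
rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
enhanced = np.stack(
    [alpha_rooting(image[..., c], alpha=0.9) for c in range(3)], axis=-1
)
```

The output range generally differs from the input range, so a rescaling step is usually applied before display.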

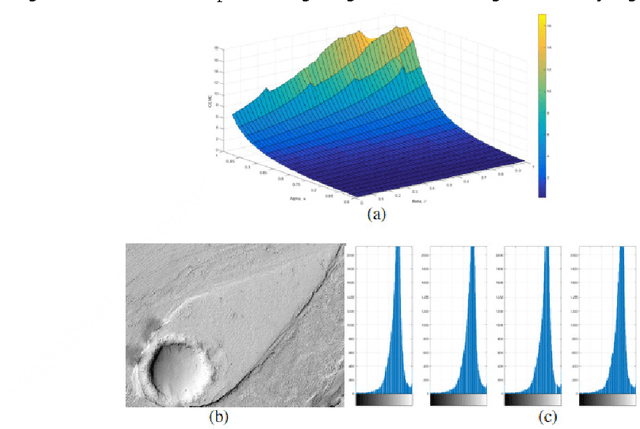

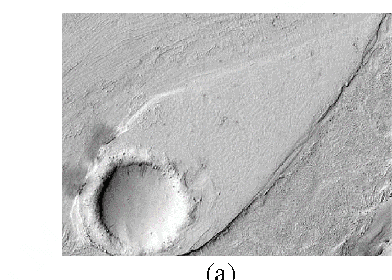

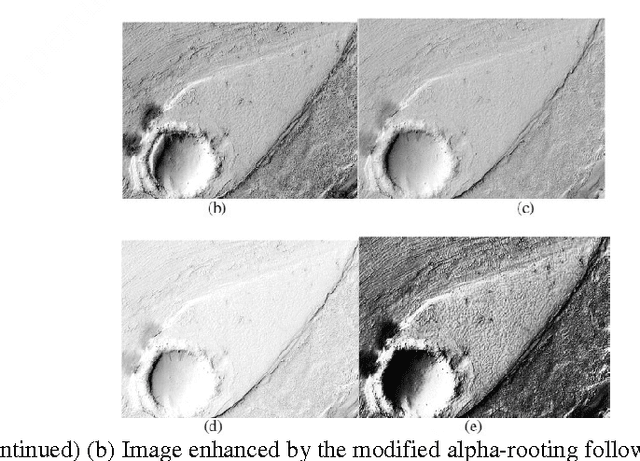

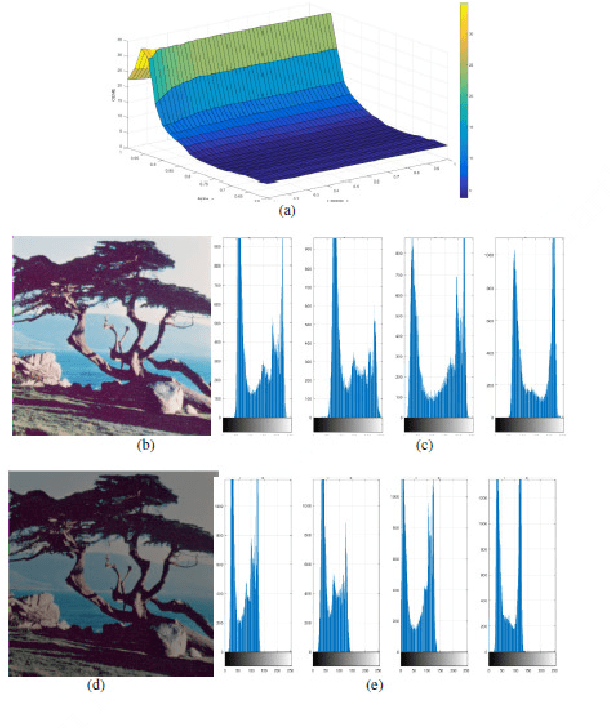

Modified Alpha-Rooting Color Image Enhancement Method On The Two-Side 2-D Quaternion Discrete Fourier Transform And The 2-D Discrete Fourier Transform

Jul 15, 2017

Abstract: Color in an image is resolved into 3 or 4 color components, and 2-D images of these components are stored in separate channels. Most color image enhancement algorithms are applied channel-by-channel on each image. But such a system of color image processing does not process the original color. When a color image is represented as a quaternion image, processing is done in the original colors. This paper proposes an implementation of the quaternion approach to enhancing color images, referred to as the modified alpha-rooting by the two-dimensional quaternion discrete Fourier transform (2-D QDFT). Enhancement results of the proposed method are compared with the channel-by-channel image enhancement by the 2-D DFT. Enhancements in color images are quantitatively measured by the color enhancement measure estimation (CEME), which allows for selecting optimum parameters for processing by the genetic algorithm. Enhancement of color images by the quaternion-based method allows for obtaining images which are closer to the genuine representation of the real original color.

* 16 pages, 53 figures (including sub-figures)
