A comprehensive review of Binary Neural Network


Oct 19, 2021

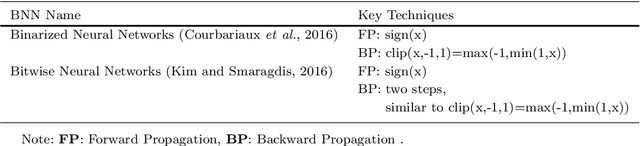

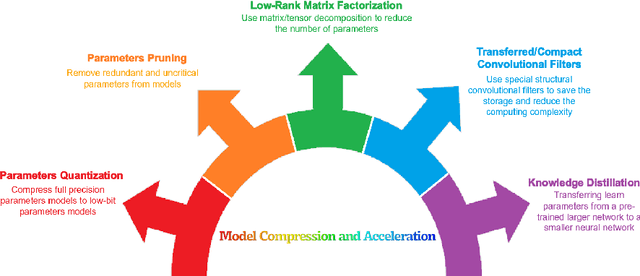

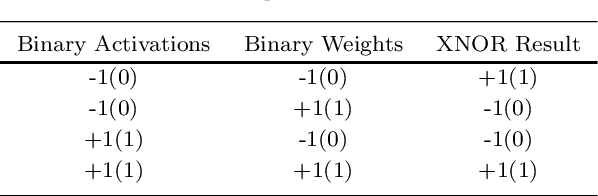

A Binary Neural Network (BNN) is an extreme case of parameter quantization for convolutional neural networks (CNNs). Whereas conventional CNNs rely on floating-point computation with full-precision weights and activations, a BNN uses 1-bit weights and activations. BNNs can substantially reduce storage, network complexity, and energy consumption, so neural networks can be deployed more efficiently in embedded applications. Unfortunately, binarization causes severe information loss, and a gap still exists between full-precision CNN models and their binarized counterparts. Recent work on BNNs has produced many algorithms and solutions that help address this issue. This article provides a full overview of these developments. Unlike previous surveys, which mix in low-bit works, this paper focuses exclusively on networks with 1-bit activations and weights. We conduct a complete investigation of BNN development, from predecessors to the latest BNN algorithms and techniques, presenting a broad design pipeline and discussing each module's variants. Along the way, this paper examines BNNs in terms of (a) purpose: their early successes and challenges; (b) optimization: selected representative works that contain key optimization techniques; (c) deployment: open-source frameworks for BNN modeling and development; (d) terminal: efficient computing architectures and devices for BNNs; and (e) applications: diverse applications of BNNs. Moreover, this paper discusses potential directions and future research opportunities.
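To make the idea of 1-bit weights and activations concrete, the following is a minimal sketch in plain Python, not code from the paper. It assumes the common sign-based binarization (values map to +1 or -1, with 0 mapped to +1) and the widely used XNOR-plus-popcount formulation of the binary dot product. The function names `binarize`, `pack_bits`, and `xnor_popcount_dot` are hypothetical and chosen only for illustration.

```python
def binarize(values):
    """Map each real value to +1 or -1 with the sign function.

    Assumption: 0 is mapped to +1, a common but not universal convention.
    """
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs):
    """Pack a +1/-1 vector into one integer: bit i is 1 when signs[i] == +1."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def xnor_popcount_dot(a_word, b_word, n):
    """Dot product of two packed +1/-1 vectors of length n.

    XNOR marks the positions where both vectors agree. Each agreement
    contributes +1 and each disagreement -1, so the result is
    matches - (n - matches) = 2 * matches - n.
    """
    mask = (1 << n) - 1                # keep only the n valid bit positions
    agree = ~(a_word ^ b_word) & mask  # XNOR restricted to n bits
    matches = bin(agree).count("1")    # popcount
    return 2 * matches - n


# Illustrative values only; they are not taken from the paper.
weights = [0.7, -1.2, 0.05, -0.3]
activations = [-0.4, -0.9, 1.5, 0.2]

w_bin = binarize(weights)
a_bin = binarize(activations)

# Reference result using ordinary multiply-accumulate on the +1/-1 values.
reference = sum(w * a for w, a in zip(w_bin, a_bin))

# Same result using only bitwise operations on the packed words.
fast = xnor_popcount_dot(pack_bits(w_bin), pack_bits(a_bin), len(w_bin))
```

In this sketch each weight occupies one bit instead of a 32-bit float, which illustrates where the storage savings come from, and the multiply-accumulate is replaced by bitwise operations. The information discarded by `binarize` (all magnitudes) is the loss that the optimization techniques surveyed in the paper try to compensate for.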
