Marianna Obrist

AcoustoBots: A swarm of robots for acoustophoretic multimodal interactions

May 12, 2025

Abstract:Acoustophoresis has enabled novel interaction capabilities, such as levitation, volumetric displays, mid-air haptic feedback, and directional sound generation, to open new forms of multimodal interactions. However, its traditional implementation as a singular static unit limits its dynamic range and application versatility. This paper introduces AcoustoBots - a novel convergence of acoustophoresis with a movable and reconfigurable phased array of transducers for enhanced application versatility. We mount a phased array of transducers on a swarm of robots to harness the benefits of multiple mobile acoustophoretic units. This offers a more flexible and interactive platform that enables a swarm of acoustophoretic multimodal interactions. Our novel AcoustoBots design includes a hinge actuation system that controls the orientation of the mounted phased array of transducers to achieve high flexibility in a swarm of acoustophoretic multimodal interactions. In addition, we designed a BeadDispenserBot that can deliver particles to trapping locations, which automates the acoustic levitation interaction. These attributes allow AcoustoBots to independently work for a common cause and interchange between modalities, allowing for novel augmentations (e.g., a swarm of haptics, audio, and levitation) and bilateral interactions with users in an expanded interaction area. We detail our design considerations, challenges, and methodological approach to extend acoustophoretic central control in distributed settings. This work demonstrates a scalable acoustic control framework with two mobile robots, laying the groundwork for future deployment in larger robotic swarms. Finally, we characterize the performance of our AcoustoBots and explore the potential interactive scenarios they can enable.

Sensory-driven microinterventions for improved health and wellbeing

Mar 18, 2025

Abstract:The five senses are gateways to our wellbeing, and their decline is considered a significant public health challenge that is linked to multiple conditions contributing significantly to morbidity and mortality. Modern technology, with its ubiquitous nature and fast data processing, has the ability to leverage the power of the senses to transform our approach to day-to-day healthcare, with positive effects on our quality of life. Here, we introduce the idea of sensory-driven microinterventions for preventative, personalised healthcare. Microinterventions are targeted, timely, minimally invasive strategies that seamlessly integrate into our daily life. This idea harnesses human sensory capabilities and leverages technological advances in sensory stimulation and real-time processing for sensing the senses. The collection of sensory data from our continuous interaction with technology - for example the tone of voice, gait movement, smart home behaviour - opens up a shift towards personalised, technology-enabled, sensory-focused healthcare interventions, coupled with the potential for early detection and timely treatment of sensory deficits that can signal critical health insights, especially for neurodegenerative diseases such as Parkinson's disease.

Sniff AI: Is My 'Spicy' Your 'Spicy'? Exploring LLM's Perceptual Alignment with Human Smell Experiences

Nov 11, 2024

Abstract:Aligning AI with human intent is important, yet perceptual alignment - how AI interprets what we see, hear, or smell - remains underexplored. This work focuses on olfaction, human smell experiences. We conducted a user study with 40 participants to investigate how well AI can interpret human descriptions of scents. Participants performed "sniff and describe" interactive tasks, with our designed AI system attempting to guess what scent the participants were experiencing based on their descriptions. These tasks evaluated the Large Language Model's (LLM's) contextual understanding and representation of scent relationships within its internal states - high-dimensional embedding space. Both quantitative and qualitative methods were used to evaluate the AI system's performance. Results indicated limited perceptual alignment, with biases towards certain scents, like lemon and peppermint, and repeated failures to identify others, like rosemary. We discuss these findings in light of human-AI alignment advancements, highlighting the limitations and opportunities for enhancing HCI systems with multisensory experience integration.

Exploring Human-AI Perception Alignment in Sensory Experiences: Do LLMs Understand Textile Hand?

Jun 05, 2024

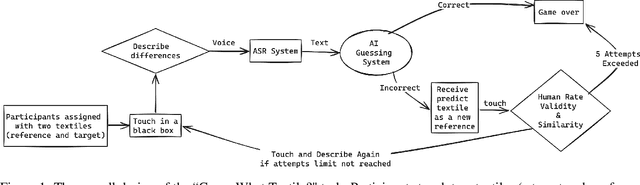

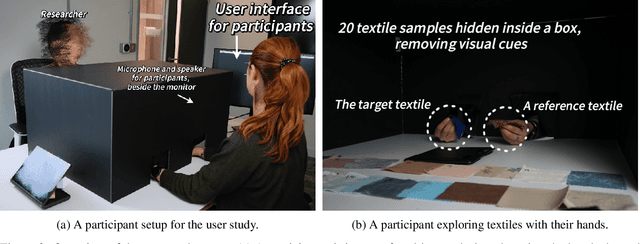

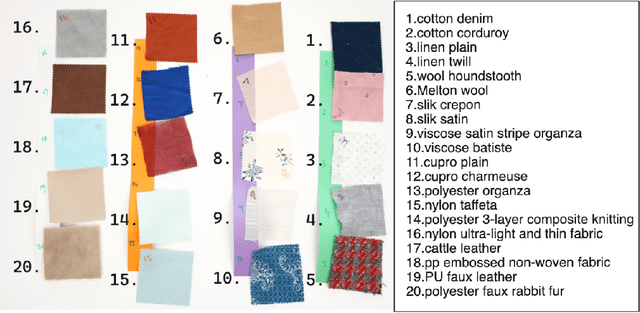

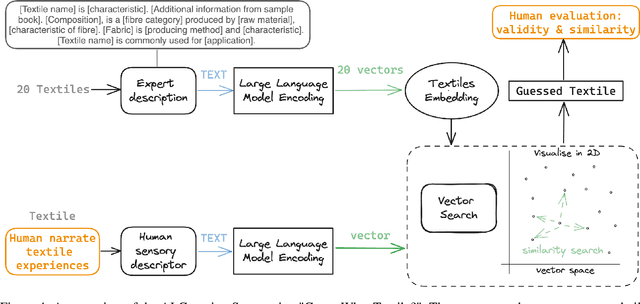

Abstract:Aligning the behaviour of large language models (LLMs) with human intent is critical for future AI. An important yet often overlooked aspect of this alignment is perceptual alignment. Perceptual modalities like touch are more multifaceted and nuanced compared to other sensory modalities such as vision. This work investigates how well LLMs align with human touch experiences using the "textile hand" task. We created a "Guess What Textile" interaction in which participants were given two textile samples -- a target and a reference -- to handle. Without seeing them, participants described the differences between them to the LLM. Using these descriptions, the LLM attempted to identify the target textile by assessing similarity within its high-dimensional embedding space. Our results suggest that a degree of perceptual alignment exists, but it varies significantly among different textile samples. For example, LLM predictions are well aligned for silk satin, but not for cotton denim. Moreover, participants did not perceive their textile experiences as closely matched by the LLM predictions. This is only the first exploration into perceptual alignment around touch, exemplified through textile hand. We discuss possible sources of this alignment variance, and how better human-AI perceptual alignment can benefit future everyday tasks.

TextileNet: A Material Taxonomy-based Fashion Textile Dataset

Jan 15, 2023

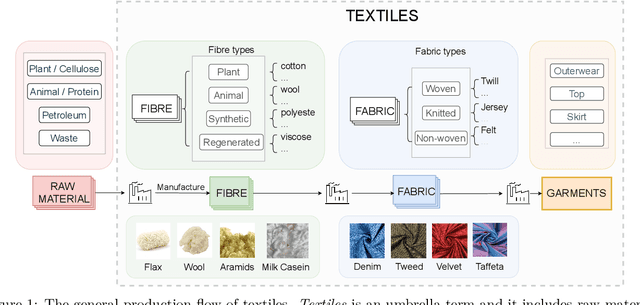

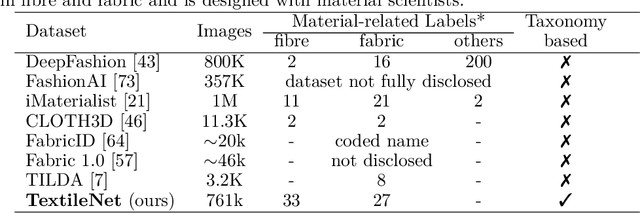

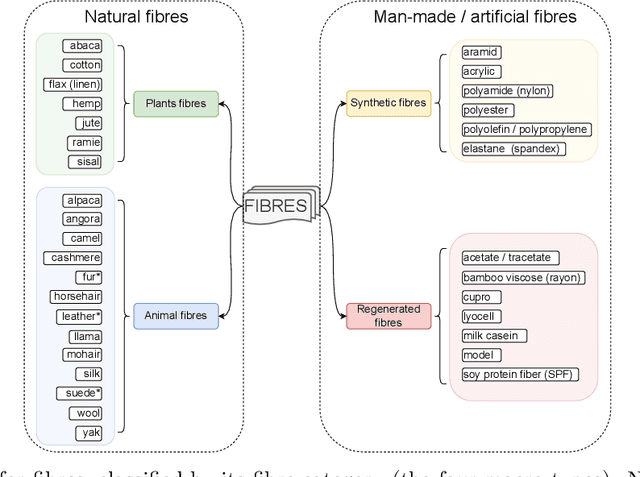

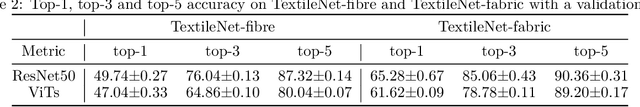

Abstract:The rise of Machine Learning (ML) is gradually digitalizing and reshaping the fashion industry. Recent years have witnessed a number of fashion AI applications, for example, virtual try-ons. Textile material identification and categorization play a crucial role in the fashion textile sector, including fashion design, retail, and recycling. At the same time, Net Zero is a global goal and the fashion industry is undergoing a significant change so that textile materials can be reused, repaired and recycled in a sustainable manner. There is still a challenge in identifying textile materials automatically for garments, as we lack a low-cost and effective technique for identifying them. In light of this, we build the first fashion textile dataset, TextileNet, based on textile material taxonomies - a fibre taxonomy and a fabric taxonomy generated in collaboration with material scientists. TextileNet can be used to train and evaluate state-of-the-art Deep Learning models for textile materials. We hope to standardize textile-related datasets through the use of taxonomies. TextileNet contains 33 fibre labels and 27 fabric labels, and has 760,949 images in total. We use standard Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs) to establish baselines for this dataset. Future applications for this dataset range from textile classification to optimization of the textile supply chain and interactive design for consumers. We envision that this can contribute to the development of a new AI-based fashion platform.
