Mukesh Mohania

SymTax: Symbiotic Relationship and Taxonomy Fusion for Effective Citation Recommendation

May 26, 2024

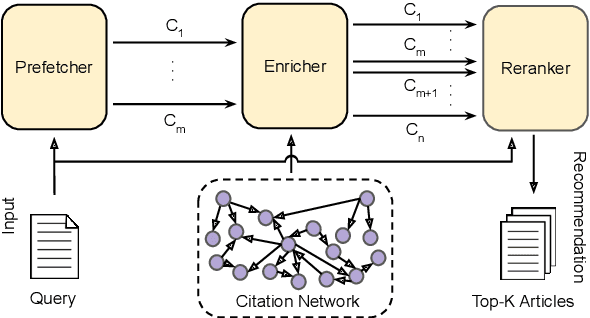

Abstract: Citing pertinent literature is pivotal to writing and reviewing a scientific document. Existing techniques mainly focus on the local context or the global context for recommending citations but fail to consider the actual human citation behaviour. We propose SymTax, a three-stage recommendation architecture that considers both the local and the global context, and additionally the taxonomical representations of query-candidate tuples and the Symbiosis prevailing amongst them. SymTax learns to embed the infused taxonomies in the hyperbolic space and uses hyperbolic separation as a latent feature to compute query-candidate similarity. We build a novel and large dataset ArSyTa containing 8.27 million citation contexts and describe the creation process in detail. We conduct extensive experiments and ablation studies to demonstrate the effectiveness and design choice of each module in our framework. Also, combinatorial analysis from our experiments sheds light on the choice of language models (LMs) and fusion embedding, and the inclusion of section heading as a signal. Our proposed module that captures the symbiotic relationship alone leads to performance gains of 26.66% and 39.25% in Recall@5 w.r.t. SOTA on ACL-200 and RefSeer datasets, respectively. The complete framework yields a gain of 22.56% in Recall@5 w.r.t. SOTA on our proposed dataset. The code and dataset are available at https://github.com/goyalkaraniit/SymTax
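To make "hyperbolic separation" concrete, here is a minimal sketch of a distance function in hyperbolic space. The abstract does not say which model of hyperbolic space SymTax uses, so the Poincaré ball model and the function name `poincare_distance` are assumptions made purely for illustration.

```python
import math

def poincare_distance(u, v):
    """Geodesic distance between two points inside the unit Poincare ball.

    Assumption: the Poincare ball model is used here only as an example;
    the abstract does not state which hyperbolic model SymTax adopts.
    Both points must have Euclidean norm strictly less than 1.
    """
    sq_norm_u = sum(a * a for a in u)
    sq_norm_v = sum(b * b for b in v)
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    # d(u, v) = arcosh(1 + 2 * |u - v|^2 / ((1 - |u|^2) * (1 - |v|^2)))
    arg = 1.0 + 2.0 * sq_diff / ((1.0 - sq_norm_u) * (1.0 - sq_norm_v))
    return math.acosh(arg)
```

A distance of this kind grows rapidly as points approach the boundary of the ball, which is why hyperbolic embeddings are often used for tree-like structures such as taxonomies.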

Coherence and Diversity through Noise: Self-Supervised Paraphrase Generation via Structure-Aware Denoising

Feb 06, 2023

Abstract: In this paper, we propose SCANING, an unsupervised framework for paraphrasing via controlled noise injection. We focus on the novel task of paraphrasing algebraic word problems, which has practical applications in online pedagogy as a means to reduce plagiarism and to ensure understanding on the part of the student instead of rote memorization. This task is more complex than paraphrasing general-domain corpora due to the difficulty of preserving critical information for solution consistency of the paraphrased word problem, managing the increased length of the text, and ensuring diversity in the generated paraphrase. Existing approaches fail to demonstrate adequate performance on at least one, if not all, of these facets, necessitating a more comprehensive solution. To this end, we model the noising search space as a composition of contextual and syntactic aspects and sample noising functions consisting of either one or both aspects. This allows for learning a denoising function that operates over both aspects and produces semantically equivalent and syntactically diverse outputs through grounded noise injection. The denoising function serves as a foundation for learning a paraphrasing function which operates solely in the input-paraphrase space without carrying any direct dependency on noise. We demonstrate that SCANING considerably improves performance in terms of both semantic preservation and producing diverse paraphrases through extensive automated and manual evaluation across 4 datasets.

TagRec++: Hierarchical Label Aware Attention Network for Question Categorization

Aug 10, 2022

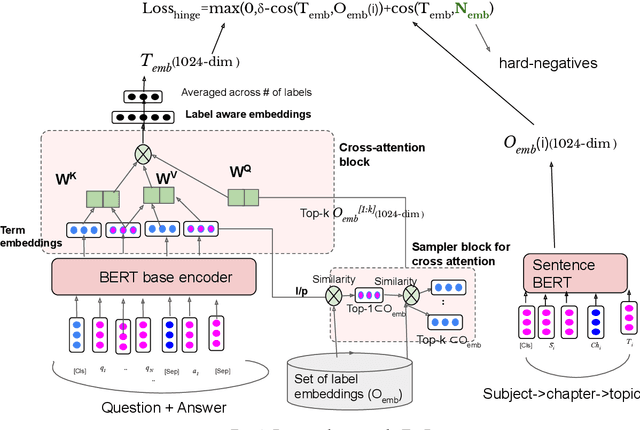

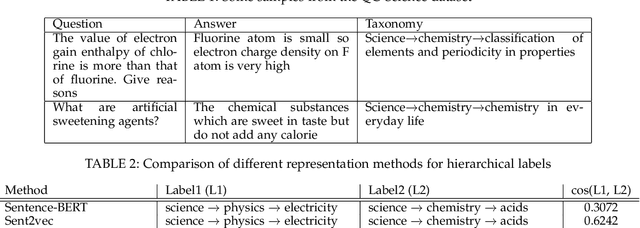

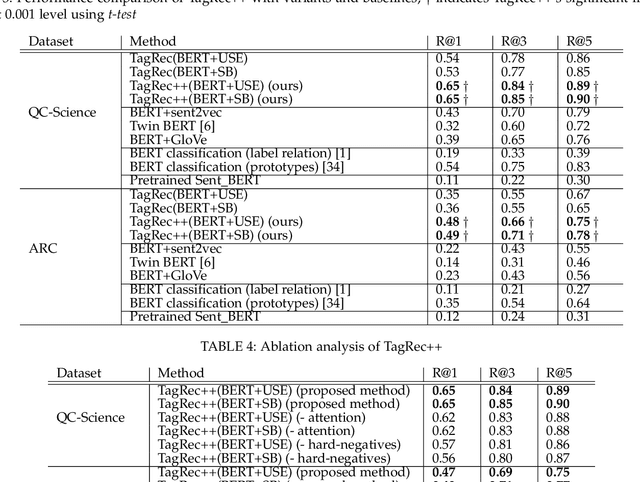

Abstract: Online learning systems have multiple data repositories in the form of transcripts, books and questions. To enable ease of access, such systems organize the content according to a well-defined taxonomy of hierarchical nature (subject-chapter-topic). The task of categorizing inputs to the hierarchical labels is usually cast as a flat multi-class classification problem. Such approaches ignore the semantic relatedness between the terms in the input and the tokens in the hierarchical labels. Alternate approaches also suffer from class imbalance when they only consider leaf-level nodes as labels. To tackle these issues, we formulate the task as a dense retrieval problem to retrieve the appropriate hierarchical labels for each content. In this paper, we deal with categorizing questions. We model the hierarchical labels as a composition of their tokens and use an efficient cross-attention mechanism to fuse the information with the term representations of the content. We also propose an adaptive in-batch hard negative sampling approach which samples better negatives as the training progresses. We demonstrate that the proposed approach, TagRec++, outperforms existing state-of-the-art approaches on question datasets as measured by Recall@k. In addition, we demonstrate the zero-shot capabilities of TagRec++ and its ability to adapt to label changes.
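Several abstracts in this list report results in Recall@k. As a rough illustration of the metric, the sketch below uses one common definition: the fraction of relevant labels that appear among the top k retrieved labels. The function name `recall_at_k` and the example labels are hypothetical, and the papers may use a variant of this definition.

```python
def recall_at_k(ranked_labels, relevant_labels, k):
    """Fraction of relevant labels found in the top-k ranked labels.

    Assumption: this is one common definition of Recall@k; the exact
    variant used in the papers is not stated in the abstracts.
    """
    if not relevant_labels:
        raise ValueError("relevant_labels must not be empty")
    top_k = set(ranked_labels[:k])
    hits = sum(1 for label in relevant_labels if label in top_k)
    return hits / len(relevant_labels)


# Hypothetical subject-chapter-topic labels, ranked by a retrieval model.
ranked = ["physics-optics-lenses", "physics-optics-mirrors", "maths-algebra-roots"]
relevant = {"physics-optics-lenses", "maths-algebra-roots"}
score = recall_at_k(ranked, relevant, k=2)
```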

'John ate 5 apples' != 'John ate some apples': Self-Supervised Paraphrase Quality Detection for Algebraic Word Problems

Jun 16, 2022

Abstract: This paper introduces the novel task of scoring paraphrases for Algebraic Word Problems (AWP) and presents a self-supervised method for doing so. In the current online pedagogical setting, paraphrasing these problems is helpful for academicians to generate multiple syntactically diverse questions for assessments. It also helps induce variation to ensure that the student has understood the problem instead of just memorizing it or using unfair means to solve it. The current state-of-the-art paraphrase generation models often cannot effectively paraphrase word problems, losing a critical piece of information (such as numbers or units) which renders the question unsolvable. There is a need for paraphrase scoring methods in the context of AWP to enable the training of good paraphrasers. Thus, we propose ParaQD, a self-supervised paraphrase quality detection method using novel data augmentations that can learn latent representations to separate a high-quality paraphrase of an algebraic question from a poor one by a wide margin. Through extensive experimentation, we demonstrate that our method outperforms existing state-of-the-art self-supervised methods by up to 32% while also demonstrating impressive zero-shot performance.

K-12BERT: BERT for K-12 education

May 24, 2022

Abstract: Online education platforms are powered by various NLP pipelines, which utilize models like BERT to aid in content curation. Since the inception of pre-trained language models like BERT, there have also been many efforts toward adapting these pre-trained models to specific domains. However, to the best of our knowledge, there has not been a model specifically adapted for the education domain (particularly K-12) across subjects. In this work, we propose to train a language model on a corpus of data curated by us across multiple subjects from various sources for K-12 education. We also evaluate our model, K12-BERT, on downstream tasks like hierarchical taxonomy tagging.

Topic Aware Contextualized Embeddings for High Quality Phrase Extraction

Jan 17, 2022

Abstract: Keyphrase extraction from a given document is the task of automatically extracting salient phrases that best describe the document. This paper proposes a novel unsupervised graph-based ranking method to extract high-quality phrases from a given document. We obtain the contextualized embeddings from pre-trained language models enriched with topic vectors from Latent Dirichlet Allocation (LDA) to represent the candidate phrases and the document. We introduce a scoring mechanism for the phrases using the information obtained from contextualized embeddings and the topic vectors. The salient phrases are extracted using a ranking algorithm on an undirected graph constructed for the given document. In the undirected graph, the nodes represent the phrases, and the edges between the phrases represent the semantic relatedness between them, weighted by a score obtained from the scoring mechanism. To demonstrate the efficacy of our proposed method, we perform several experiments on open-source datasets in the science domain and observe that our novel method outperforms existing unsupervised embedding-based keyphrase extraction methods. For instance, on the SemEval2017 dataset, our method advances the F1 score from 0.2195 (EmbedRank) to 0.2819 at the top 10 extracted keyphrases. Several variants of the proposed algorithm are investigated to determine their effect on the quality of keyphrases. We further demonstrate the ability of our proposed method to collect additional high-quality keyphrases that are not present in the document from external knowledge bases like Wikipedia for enriching the document with newly discovered keyphrases. We evaluate this step on a collection of annotated documents. The F1-score at the top 10 expanded keyphrases is 0.60, indicating that our algorithm can also be used for 'concept' expansion using external knowledge.
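The graph-based ranking step can be pictured with a small sketch. The abstract does not name the ranking algorithm, so a weighted PageRank-style power iteration is swapped in here as an assumption. The function name `rank_phrases`, the damping factor, and the iteration count are likewise illustrative choices, not details taken from the paper. Edge weights stand in for the paper's relatedness scores and are assumed to be positive.

```python
def rank_phrases(edge_weights, damping=0.85, iterations=50):
    """Rank phrases on a weighted undirected graph.

    edge_weights maps (phrase_a, phrase_b) pairs to a positive weight.
    Assumption: a PageRank-style iteration is used for illustration only;
    the abstract does not specify the actual ranking algorithm.
    """
    neighbours = {}
    for (a, b), w in edge_weights.items():
        # Undirected graph: record the edge in both directions.
        neighbours.setdefault(a, {})[b] = w
        neighbours.setdefault(b, {})[a] = w
    nodes = sorted(neighbours)
    strength = {n: sum(neighbours[n].values()) for n in nodes}
    score = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(iterations):
        updated = {}
        for n in nodes:
            # Each neighbour passes on score in proportion to the edge weight.
            incoming = sum(score[m] * w / strength[m]
                           for m, w in neighbours[n].items())
            updated[n] = (1.0 - damping) / len(nodes) + damping * incoming
        score = updated
    return sorted(nodes, key=lambda n: score[n], reverse=True)
```

In this sketch a phrase that is strongly related to many other phrases accumulates a higher score and is returned earlier in the list.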

BeautifAI -- A Personalised Occasion-oriented Makeup Recommendation System

Sep 13, 2021

Abstract: With the global metamorphosis of the beauty industry and the rising demand for beauty products worldwide, the need for an efficacious makeup recommendation system has never been greater. Despite the significant advancements made towards personalised makeup recommendation, the current research still falls short of incorporating the context of occasion in makeup recommendation and integrating feedback for users. In this work, we propose BeautifAI, a novel makeup recommendation system, delivering personalised occasion-oriented makeup recommendations to users while providing real-time previews and continuous feedback. The proposed work's novel contributions, including the incorporation of occasion context, region-wise makeup recommendation, real-time makeup previews and continuous makeup feedback, set our system apart from the current work in makeup recommendation. We also demonstrate our proposed system's efficacy in providing personalised makeup recommendation by conducting a user study.

TagRec: Automated Tagging of Questions with Hierarchical Learning Taxonomy

Jul 03, 2021

Abstract: Online educational platforms organize academic questions based on a hierarchical learning taxonomy (subject-chapter-topic). Automatically tagging new questions with existing taxonomy will help organize these questions into different classes of hierarchical taxonomy so that they can be searched based on facets like chapter. This task can be formulated as a flat multi-class classification problem. Usually, flat classification-based methods ignore the semantic relatedness between the terms in the hierarchical taxonomy and the questions. Some traditional methods also suffer from class imbalance issues as they consider only the leaf nodes, ignoring the hierarchy. Hence, we formulate the problem as a similarity-based retrieval task where we optimize the semantic relatedness between the taxonomy and the questions. We demonstrate that our method helps to handle unseen labels and hence can be used for taxonomy tagging in the wild. In this method, we augment the question with its corresponding answer to capture more semantic information and then align the question-answer pair's contextualized embedding with the corresponding label (taxonomy) vector representations. The representations are aligned by fine-tuning a transformer-based model with a loss function that is a combination of the cosine similarity and hinge rank loss. The loss function maximizes the similarity between the question-answer pair and the correct label representations and minimizes the similarity to unrelated labels. Finally, we perform experiments on two real-world datasets. We show that the proposed learning method outperforms representations learned using the multi-class classification method and other state-of-the-art methods by 6% as measured by Recall@k. We also demonstrate the performance of the proposed method on unseen but related learning content like the learning objectives without re-training the network.
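The combination of cosine similarity and hinge rank loss can be sketched as follows. The abstract does not give the exact formulation, so the margin value, the summation over negatives, and the names `cosine_similarity` and `hinge_rank_loss` are assumptions for illustration. Plain Python lists stand in for the embedding vectors a transformer model would produce.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def hinge_rank_loss(query, positive_label, negative_labels, margin=0.1):
    """Hinge rank loss over cosine similarities.

    Assumption: the margin of 0.1 and the sum over negatives are
    illustrative; the abstract does not specify these details.
    The loss is zero once the correct label is more similar to the
    query than every unrelated label by at least the margin.
    """
    pos_sim = cosine_similarity(query, positive_label)
    return sum(
        max(0.0, margin - pos_sim + cosine_similarity(query, neg))
        for neg in negative_labels
    )
```

Minimizing a loss of this shape pushes the question-answer embedding toward the correct label vector and away from unrelated label vectors, which matches the behaviour the abstract describes.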

Towards a Predictive Patent Analytics and Evaluation Platform

Oct 31, 2019

Abstract: The importance of patents is well recognised across many regions of the world. Many patent mining systems have been proposed, but with limited predictive capabilities. In this demo, we showcase how predictive algorithms leveraging state-of-the-art machine learning and deep learning techniques can be used to improve understanding of patents for inventors, patent evaluators, and business analysts alike. Our demo video is available at http://ibm.biz/ecml2019-demo-patent-analytics

Document Chunking and Learning Objective Generation for Instruction Design

Aug 06, 2018

Abstract: Instructional Systems Design is the practice of creating instructional experiences that make the acquisition of knowledge and skill more efficient, effective, and appealing. Specifically in designing courses, an hour of training material can require between 30 and 500 hours of effort in sourcing and organizing reference data for use in just the preparation of course material. In this paper, we present the first system of its kind that helps reduce the effort associated with sourcing reference material and course creation. We present algorithms for document chunking and automatic generation of learning objectives from content, creating descriptive content metadata to improve content discoverability. Unlike existing methods, the learning objectives generated by our system incorporate pedagogically motivated Bloom's verbs. We demonstrate the usefulness of our methods using real-world data from the banking industry and through a live deployment at a large pharmaceutical company.
