Mohan Chen

Enhanced Knowledge Injection for Radiology Report Generation

Nov 01, 2023

Abstract: Automatic generation of radiology reports holds crucial clinical value, as it can alleviate the substantial workload on radiologists and alert less experienced ones to potential anomalies. Despite the remarkable performance of various image captioning methods in the natural image field, generating accurate reports for medical images still faces two challenges: disparities between visual and textual data, and a lack of accurate domain knowledge. To address these issues, we propose an enhanced knowledge injection framework, which utilizes two branches to extract different types of knowledge. The Weighted Concept Knowledge (WCK) branch is responsible for introducing clinical medical concepts weighted by TF-IDF scores. The Multimodal Retrieval Knowledge (MRK) branch extracts triplets from similar reports, emphasizing crucial clinical information related to entity positions and existence. By integrating this finer-grained and well-structured knowledge with the current image, we are able to leverage the multi-source knowledge gain to ultimately facilitate more accurate report generation. Extensive experiments have been conducted on two public benchmarks, demonstrating that our method achieves superior performance over other state-of-the-art methods. Ablation studies further validate the effectiveness of the two extracted knowledge sources.
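
The abstract states that the WCK branch weights clinical concepts by TF-IDF scores but does not give the exact formulation. As a rough illustration only, the sketch below computes the textbook TF-IDF weight (term frequency times the log of inverse document frequency) over tokenized reports. The function name `tfidf_weights` and the toy reports are hypothetical and are not taken from the paper.

```python
import math
from collections import Counter


def tfidf_weights(reports):
    """Return one {term: TF-IDF weight} dict per tokenized report.

    Textbook TF-IDF, used here as an assumption; the paper's exact
    weighting scheme is not specified in the abstract.
    """
    n_docs = len(reports)
    # Document frequency: number of reports that contain each term.
    doc_freq = Counter(term for report in reports for term in set(report))
    weights = []
    for report in reports:
        counts = Counter(report)
        total = len(report)
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical toy corpus of tokenized report concepts.
reports = [
    ["cardiomegaly", "effusion", "normal"],
    ["effusion", "normal"],
    ["pneumothorax", "normal"],
]
weights = tfidf_weights(reports)
```

In this toy corpus, a concept that appears in every report ("normal") receives weight zero, while a concept unique to one report ("cardiomegaly") is weighted highest, which is the behavior that makes TF-IDF a plausible way to emphasize distinctive clinical terms.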

Dynamic Graph Message Passing Networks for Visual Recognition

Sep 20, 2022

Abstract: Modelling long-range dependencies is critical for scene understanding tasks in computer vision. Although convolutional neural networks (CNNs) have excelled in many vision tasks, they are still limited in capturing long-range structured relationships, as they typically consist of layers of local kernels. A fully-connected graph, such as the self-attention operation in Transformers, is beneficial for such modelling; however, its computational overhead is prohibitive. In this paper, we propose a dynamic graph message passing network that significantly reduces the computational complexity compared to related works modelling a fully-connected graph. This is achieved by adaptively sampling nodes in the graph, conditioned on the input, for message passing. Based on the sampled nodes, we dynamically predict node-dependent filter weights and the affinity matrix for propagating information between them. This formulation allows us to design a self-attention module and, more importantly, a new Transformer-based backbone network that we use both for image classification pretraining and for addressing various downstream tasks (object detection, instance and semantic segmentation). Using this model, we show significant improvements with respect to strong, state-of-the-art baselines on four different tasks. Our approach also outperforms fully-connected graphs while using substantially fewer floating-point operations and parameters. Code and models will be made publicly available at https://github.com/fudan-zvg/DGMN2
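
To make the cost argument concrete, the following is a heavily simplified sketch of message passing over a sampled subset of nodes rather than a fully-connected graph. It is not the DGMN2 implementation: sampling here is uniform random instead of learned and input-conditioned, the affinity is a plain softmax over dot products, and no node-dependent filter weights are predicted. The function name `sampled_message_passing` and its parameters are hypothetical.

```python
import math
import random


def sampled_message_passing(features, num_samples, seed=0):
    """Update each node from a few sampled nodes (illustrative only).

    Each node aggregates from `num_samples` nodes, so the cost is
    O(n * num_samples) rather than the O(n^2) of a fully-connected graph.
    """
    rng = random.Random(seed)
    n = len(features)
    updated = []
    for query in features:
        # Assumption: uniform sampling stands in for learned, adaptive sampling.
        neighbors = rng.sample(range(n), min(num_samples, n))
        # Affinity between the node and each sampled node (dot product).
        scores = [sum(q * k for q, k in zip(query, features[j])) for j in neighbors]
        # Numerically stable softmax over the sampled affinities.
        max_score = max(scores)
        exps = [math.exp(s - max_score) for s in scores]
        norm = sum(exps)
        # Affinity-weighted sum of the sampled node features.
        message = [
            sum(e / norm * features[j][d] for e, j in zip(exps, neighbors))
            for d in range(len(query))
        ]
        # Residual update: original feature plus aggregated message.
        updated.append([q + m for q, m in zip(query, message)])
    return updated


# Hypothetical toy input: five nodes with identical 2-D features.
nodes = [[1.0, 2.0] for _ in range(5)]
out = sampled_message_passing(nodes, num_samples=3)
```

With identical input features, every aggregated message equals the feature itself, so each output is approximately twice the input; this gives a quick sanity check that the softmax weights sum to one.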

DeePKS+ABACUS as a Bridge between Expensive Quantum Mechanical Models and Machine Learning Potentials

Jun 21, 2022

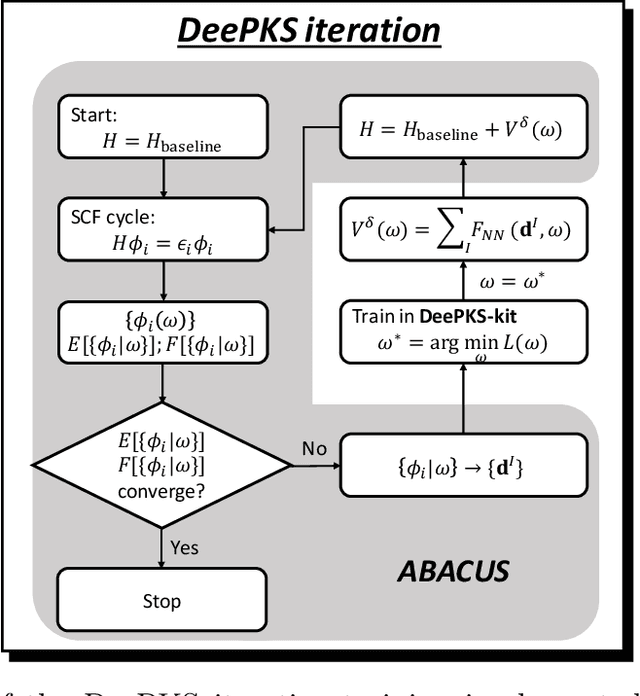

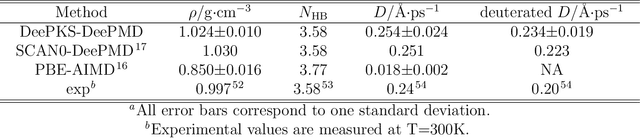

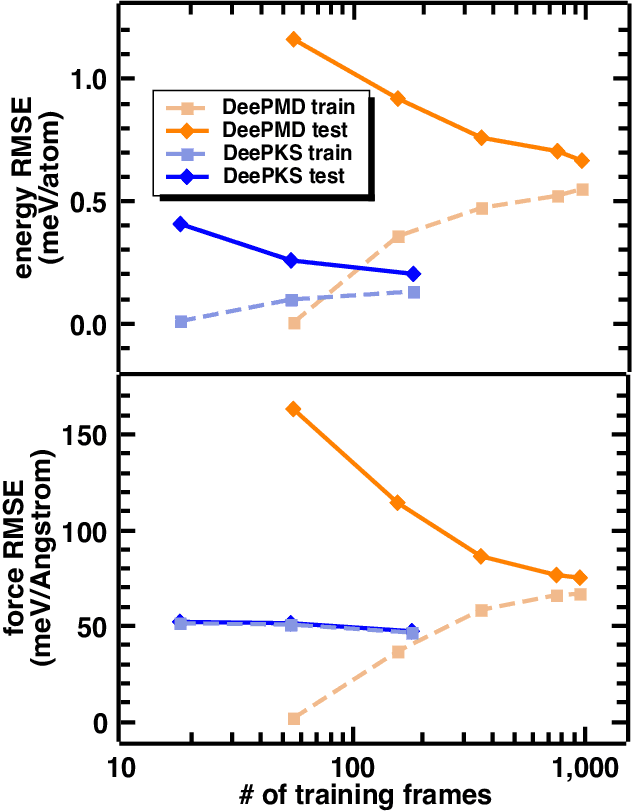

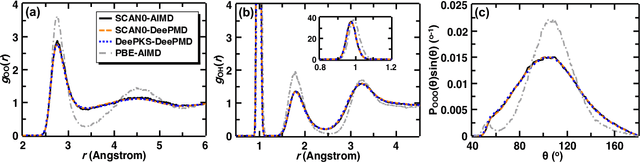

Abstract: Recently, the development of machine learning (ML) potentials has made it possible to perform large-scale and long-time molecular simulations with the accuracy of quantum mechanical (QM) models. However, for high-level QM methods, such as density functional theory (DFT) at the meta-GGA level and/or with exact exchange, quantum Monte Carlo, etc., generating a sufficient amount of data for training an ML potential has remained computationally challenging due to their high cost. In this work, we demonstrate that this issue can be largely alleviated with Deep Kohn-Sham (DeePKS), an ML-based DFT model. DeePKS employs a computationally efficient neural network-based functional model to construct a correction term added to a cheap DFT model. Once trained, DeePKS yields energies and forces that closely match those of the high-level QM method, while the amount of training data it requires is orders of magnitude smaller than that required for training a reliable ML potential. As such, DeePKS can serve as a bridge between expensive QM models and ML potentials: one can generate a decent amount of high-accuracy QM data to train a DeePKS model, and then use the DeePKS model to label a much larger number of configurations to train an ML potential. This scheme for periodic systems is implemented in the DFT package ABACUS, which is open-source and ready for use in various applications.
