Minglei Lu

VinT-6D: A Large-Scale Object-in-hand Dataset from Vision, Touch and Proprioception

Jan 06, 2025

Abstract: This paper addresses the scarcity of large-scale datasets for accurate object-in-hand pose estimation, which is crucial for robotic in-hand manipulation within the "Perception-Planning-Control" paradigm. Specifically, we introduce VinT-6D, the first extensive multi-modal dataset integrating vision, touch, and proprioception, to enhance robotic manipulation. VinT-6D comprises two splits, VinT-Sim (2 million) and VinT-Real (0.1 million), collected via simulations in MuJoCo and Blender and on a custom-designed real-world platform, respectively. This dataset is tailored for robotic hands, offering models with whole-hand tactile perception and high-quality, well-aligned data. To the best of our knowledge, VinT-Real is the largest real-world split of its kind, given the difficulty of collecting data in real-world environments, and it helps bridge the simulation-to-real gap left by previous work. Built upon VinT-6D, we present a benchmark method that shows significant performance improvements from fusing multi-modal information. The project is available at https://VinT-6D.github.io/.

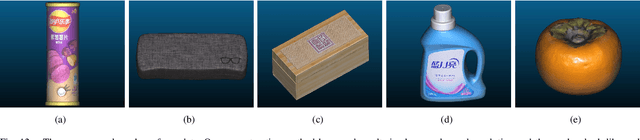

Category-level Object Detection, Pose Estimation and Reconstruction from Stereo Images

Jul 09, 2024

Abstract: We study the 3D object understanding task for manipulating everyday objects with different material properties (diffuse, specular, transparent and mixed). Existing monocular and RGB-D methods suffer from scale ambiguity due to missing or imprecise depth measurements. We present CODERS, a one-stage approach for Category-level Object Detection, pose Estimation and Reconstruction from Stereo images. The base of our pipeline is an implicit stereo matching module that combines stereo image features with 3D position information. Concatenating this module with the subsequent transform-decoder architecture enables end-to-end learning of the multiple tasks required by robot manipulation. Our approach significantly outperforms all competing methods on the public TOD dataset. Furthermore, trained on simulated data, CODERS generalizes well to unseen category-level object instances in real-world robot manipulation experiments. Our dataset, code, and demos will be available on our project page.
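As a rough illustration of why a calibrated stereo pair removes the scale ambiguity mentioned in this abstract, the sketch below applies the textbook rectified-stereo relation Z = f * B / d. It is not the CODERS implicit stereo matching module, which the abstract does not detail; the function name and the example numbers are assumptions made for illustration.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Metric depth (metres) of a point seen by a rectified stereo pair.

    focal_px     : focal length in pixels (assumed known from calibration)
    baseline_m   : distance between the two camera centres in metres
    disparity_px : horizontal pixel shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 800 px focal length, 12.5 cm baseline, 50 px disparity.
print(depth_from_disparity(800.0, 0.125, 50.0))
```

Because the baseline is a known physical length, the recovered depth is in metres, whereas a single image only constrains depth up to an unknown scale.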

Bridging scales in multiscale bubble growth dynamics with correlated fluctuations using neural operator learning

Mar 20, 2024

Abstract: The intricate process of bubble growth dynamics involves a broad spectrum of physical phenomena, from microscale mechanics of bubble formation to macroscale interplay between bubbles and surrounding thermo-hydrodynamics. Traditional bubble dynamics models, including atomistic approaches and continuum-based methods, segment the bubble dynamics into distinct scale-specific models. To bridge the gap between microscale stochastic fluid models and continuum-based fluid models for bubble dynamics, we develop a composite neural operator model that unifies the analysis of nonlinear bubble dynamics across microscale and macroscale regimes. It integrates a many-body dissipative particle dynamics (mDPD) model with a continuum-based Rayleigh-Plesset (RP) model through a novel neural network architecture, which consists of a deep operator network for learning the mean behavior of bubble growth subject to pressure variations and a long short-term memory network for learning the statistical features of correlated fluctuations in microscale bubble dynamics. Training and testing data are generated by conducting mDPD and RP simulations for nonlinear bubble dynamics with initial bubble radii ranging from 0.1 to 1.5 micrometers. Results show that the trained composite neural operator model can accurately predict bubble dynamics across scales, with 99% accuracy for the time evolution of the bubble radius under varying external pressure, while containing correct size-dependent stochastic fluctuations in microscale bubble growth dynamics. The composite neural operator is the first deep learning surrogate for multiscale bubble growth dynamics that can capture correct stochastic fluctuations in microscopic fluid phenomena, which sets a new direction for future research in multiscale fluid dynamics modeling.
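For readers unfamiliar with the continuum side of this work, the following minimal sketch integrates the classical Rayleigh-Plesset equation, R*R'' + 1.5*R'^2 = (p_bubble - p_far - 2*sigma/R - 4*mu*R'/R) / rho, with a fourth-order Runge-Kutta step. It is only an illustration under stated assumptions: the abstract does not give the exact RP form, fluid parameters, or solver used, so the constant bubble pressure, the water-like defaults, and the function names here are hypothetical.

```python
def rp_acceleration(radius, velocity, p_bubble, p_far,
                    rho=1000.0, sigma=0.072, mu=1.0e-3):
    """Radial acceleration R'' from the classical Rayleigh-Plesset equation.

    Defaults are rough SI values for water (assumed, not from the paper).
    """
    driving = p_bubble - p_far - 2.0 * sigma / radius - 4.0 * mu * velocity / radius
    return (driving / rho - 1.5 * velocity ** 2) / radius


def integrate_rp(r0, p_bubble, p_far, dt, steps, **fluid):
    """Advance (R, R') with classical RK4, starting at rest; returns the radius history."""
    def rhs(r, v):
        return v, rp_acceleration(r, v, p_bubble, p_far, **fluid)

    r, v = r0, 0.0
    history = [r]
    for _ in range(steps):
        k1r, k1v = rhs(r, v)
        k2r, k2v = rhs(r + 0.5 * dt * k1r, v + 0.5 * dt * k1v)
        k3r, k3v = rhs(r + 0.5 * dt * k2r, v + 0.5 * dt * k2v)
        k4r, k4v = rhs(r + dt * k3r, v + dt * k3v)
        r += dt * (k1r + 2.0 * k2r + 2.0 * k3r + k4r) / 6.0
        v += dt * (k1v + 2.0 * k2v + 2.0 * k3v + k4v) / 6.0
        history.append(r)
    return history


# Hypothetical case: a 1 micrometre bubble with 10 kPa of excess internal pressure.
r0, p_far = 1.0e-6, 101325.0
p_equilibrium = p_far + 2.0 * 0.072 / r0   # pressure that exactly balances surface tension
radii = integrate_rp(r0, p_equilibrium + 1.0e4, p_far, dt=1.0e-10, steps=100)
```

A deterministic model like this yields a single smooth radius curve; the size-dependent fluctuations described in the abstract come from the particle-based mDPD side and are not represented here.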

Deep neural operator for learning transient response of interpenetrating phase composites subject to dynamic loading

Mar 30, 2023

Abstract: Additive manufacturing has been recognized as an industrial technological revolution for manufacturing, which allows fabrication of materials with complex three-dimensional (3D) structures directly from computer-aided design models. The mechanical properties of interpenetrating phase composites (IPCs), especially their response to dynamic loading, depend strongly on their 3D structures. In general, for each specified structural design, it can take hours or days to perform either finite element analysis (FEA) or experiments to test the mechanical response of IPCs to a given dynamic load. To accelerate the physics-based prediction of mechanical properties of IPCs for various structural designs, we employ a deep neural operator (DNO) to learn the transient response of IPCs under dynamic loading as a surrogate of physics-based FEA models. We consider a 3D IPC beam formed by two metals with a ratio of Young's moduli of 2.7, wherein random blocks of constituent materials are used to demonstrate the generality and robustness of the DNO model. To obtain FEA results of IPC properties, 5,000 random time-dependent strain loads generated by a Gaussian process kernel are applied to the 3D IPC beam, and the reaction forces and stress fields inside the IPC beam under the various loads are collected. Subsequently, the DNO model is trained using an incremental learning method with sequence-to-sequence training implemented in JAX, leading to a 100x speedup compared to widely used vanilla deep operator network models. After offline training, the DNO model can act as a surrogate of physics-based FEA to predict the transient mechanical response of the IPCs, in terms of reaction force and stress distribution, to various strain loads in one second at an accuracy of 98%. The learned operator is also able to provide extended predictions for the IPC beam subject to longer random strain loads with reasonably good accuracy.
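The random strain loads described above can be pictured with a short sketch that draws one time series from a zero-mean Gaussian process. The abstract does not name the kernel or its hyperparameters, so the squared-exponential kernel, the length scale, the variance, and the jitter term below are all assumptions, and the helper names are hypothetical. Plain Python is used here for portability rather than the JAX code the authors mention.

```python
import math
import random


def rbf_kernel(t1, t2, length_scale=0.2, variance=1.0):
    """Squared-exponential covariance between two time points (assumed kernel)."""
    return variance * math.exp(-0.5 * ((t1 - t2) / length_scale) ** 2)


def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def sample_gp_load(times, length_scale=0.2, variance=1.0, jitter=1e-6, rng=None):
    """Draw one random load history at the given times from a zero-mean GP."""
    rng = rng or random.Random()
    n = len(times)
    # Covariance matrix with a small diagonal jitter for numerical stability.
    cov = [[rbf_kernel(times[i], times[j], length_scale, variance)
            + (jitter if i == j else 0.0) for j in range(n)] for i in range(n)]
    low = cholesky(cov)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Correlated sample = L @ z, where z is standard normal noise.
    return [sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(n)]


times = [i / 19 for i in range(20)]            # 20 time points on [0, 1]
load = sample_gp_load(times, rng=random.Random(0))
```

Repeating the draw with different seeds would give a family of smooth random load histories; a larger length scale produces slower-varying loads.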

What Stops Learning-based 3D Registration from Working in the Real World?

Nov 19, 2021

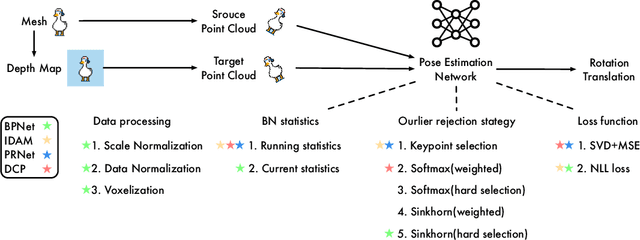

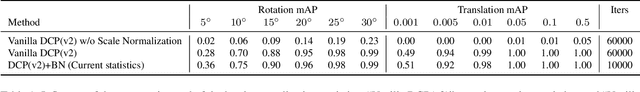

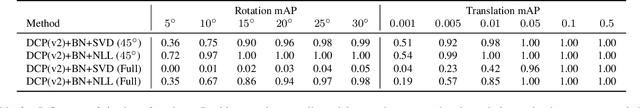

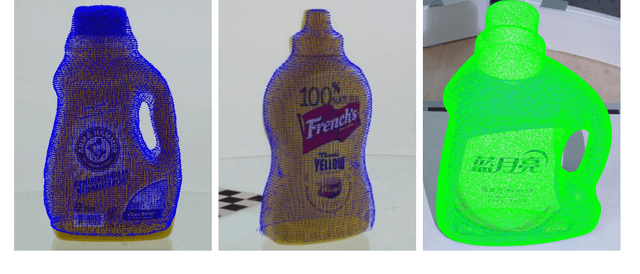

Abstract: Much progress has been made on the task of learning-based 3D point cloud registration, with existing methods yielding outstanding results on standard benchmarks, such as ModelNet40, even in the partial-to-partial matching scenario. Unfortunately, these methods still struggle in the presence of real data. In this work, we identify the sources of these failures, analyze the reasons behind them, and propose solutions to tackle them. We summarise our findings into a set of guidelines and demonstrate their effectiveness by applying them to two baseline methods, DCP and IDAM. In short, our guidelines improve both their training convergence and testing accuracy. Ultimately, this translates to a best-practice 3D registration network (BPNet), constituting the first learning-based method able to handle previously-unseen objects in real-world data. Despite being trained only on synthetic data, our model generalizes to real data without any fine-tuning, reaching an accuracy of up to 67% on point clouds of unseen objects obtained with a commercial sensor.

A Method to Generate High Precision Mesh Model and RGB-D Dataset for 6D Pose Estimation Task

Nov 17, 2020

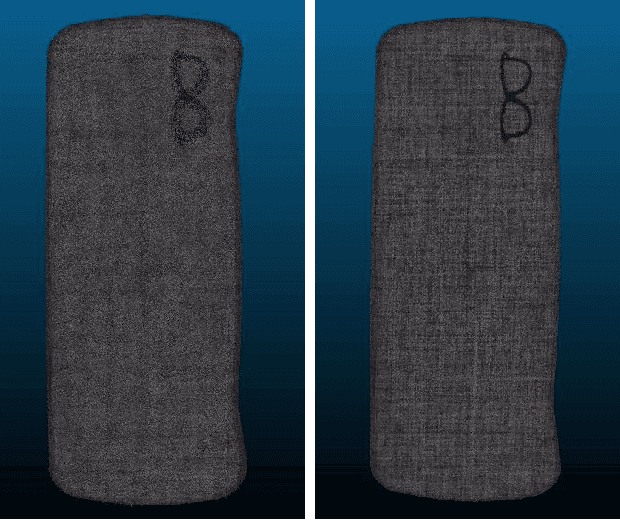

Abstract: Recently, 3D vision has improved greatly thanks to the development of deep neural networks. A high-quality dataset is important for deep learning methods. Datasets for 3D vision, such as Bigbird and YCB, already exist. However, the depth sensors used to build them are out of date, so their resolution and accuracy cannot meet today's higher standards. Although equipment and technology have improved, no one has tried to collect a new and better dataset. Here we try to fill that gap. To this end, we propose a new method for object reconstruction that takes speed, accuracy and robustness into account. Our method can be used to produce large datasets with better and more accurate annotations. More importantly, our data is closer to rendered data, which further shrinks the gap between real and synthetic data.
