Miguel Bordallo López

Thermal Imaging for Contactless Cardiorespiratory and Sudomotor Response Monitoring

Feb 12, 2026

Abstract:Thermal infrared imaging captures skin temperature changes driven by autonomic regulation and can potentially provide contactless estimation of electrodermal activity (EDA), heart rate (HR), and breathing rate (BR). While visible-light methods address HR and BR, they cannot access EDA, a standard marker of sympathetic activation. This paper characterizes the extraction of these three biosignals from facial thermal video using a signal-processing pipeline that tracks anatomical regions, applies spatial aggregation, and separates slow sudomotor trends from faster cardiorespiratory components. For HR, we apply an orthogonal matrix image transformation (OMIT) decomposition across multiple facial regions of interest (ROIs), and for BR we average nasal and cheek signals before spectral peak detection. We evaluate 288 EDA configurations and the HR/BR pipeline on 31 sessions from the public SIMULATOR STUDY 1 (SIM1) driver monitoring dataset. The best fixed EDA configuration (nose region, exponential moving average) reaches a mean absolute correlation of $0.40 \pm 0.23$ against palm EDA, with individual sessions reaching 0.89. BR estimation achieves a mean absolute error of $3.1 \pm 1.1$ bpm, while HR estimation yields $13.8 \pm 7.5$ bpm MAE, limited by the low camera frame rate (7.5 Hz). We report signal polarity alternation across sessions, short thermodynamic latency for well-tracked signals, and condition-dependent and demographic effects on extraction quality. These results provide baseline performance bounds and design guidance for thermal contactless biosignal estimation.
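The separation of a slow trend from a faster breathing component, followed by spectral peak detection, can be illustrated with a minimal sketch. Only the 7.5 Hz frame rate comes from the abstract; the synthetic trace, the smoothing factor `alpha=0.05`, the 0.1 to 0.5 Hz breathing band, and the function names are assumptions made for illustration, and a plain DFT stands in for whatever spectral estimator the paper actually uses.

```python
import math

def ema(signal, alpha):
    """Exponential moving average; follows the slow trend of a trace."""
    smoothed, state = [], signal[0]
    for x in signal:
        state = alpha * x + (1.0 - alpha) * state
        smoothed.append(state)
    return smoothed

def spectral_peak_bpm(signal, fs, lo_hz, hi_hz):
    """Frequency (cycles per minute) of the largest DFT bin inside [lo_hz, hi_hz]."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    best_freq, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if freq < lo_hz or freq > hi_hz:
            continue  # skip bins outside the assumed breathing band
        re = sum(x * math.cos(2.0 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = sum(x * math.sin(2.0 * math.pi * k * i / n) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return 60.0 * best_freq

FS = 7.5   # camera frame rate in Hz (from the abstract)
N = 450    # 60 s of samples (assumed window length)

# Synthetic temperature trace (assumption): slow drift plus 0.25 Hz breathing.
trace = [0.5 * i / N + 0.1 * math.sin(2.0 * math.pi * 0.25 * i / FS) for i in range(N)]

slow_trend = ema(trace, alpha=0.05)                    # slow component
fast_part = [x - s for x, s in zip(trace, slow_trend)] # faster residual
breathing_bpm = spectral_peak_bpm(fast_part, FS, lo_hz=0.1, hi_hz=0.5)
print(breathing_bpm)
```

With a 60 s window the frequency resolution is 1/60 Hz, that is, 1 breath per minute per DFT bin, which bounds how finely a single-peak estimate of this kind can resolve the rate.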

From Theory to Throughput: CUDA-Optimized APML for Large-Batch 3D Learning

Dec 17, 2025

Abstract:Loss functions are fundamental to learning accurate 3D point cloud models, yet common choices trade geometric fidelity for computational cost. Chamfer Distance is efficient but permits many-to-one correspondences, while Earth Mover Distance better reflects one-to-one transport at high computational cost. APML approximates transport with differentiable Sinkhorn iterations and an analytically derived temperature, but its dense formulation scales quadratically in memory. We present CUDA-APML, a sparse GPU implementation that thresholds negligible assignments and runs adaptive softmax, bidirectional symmetrization, and Sinkhorn normalization directly in COO form. This yields near-linear memory scaling and preserves gradients on the stored support, while pairwise distance evaluation remains quadratic in the current implementation. On ShapeNet and MM-Fi, CUDA-APML matches dense APML within a small tolerance while reducing peak GPU memory by 99.9%. Code available at: https://github.com/Multimodal-Sensing-Lab/apml

Quality-Aware Framework for Video-Derived Respiratory Signals

Dec 16, 2025

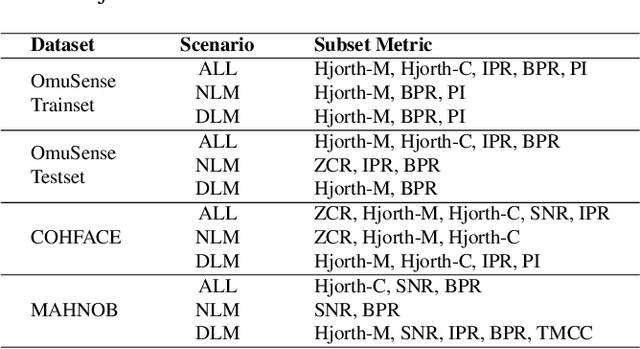

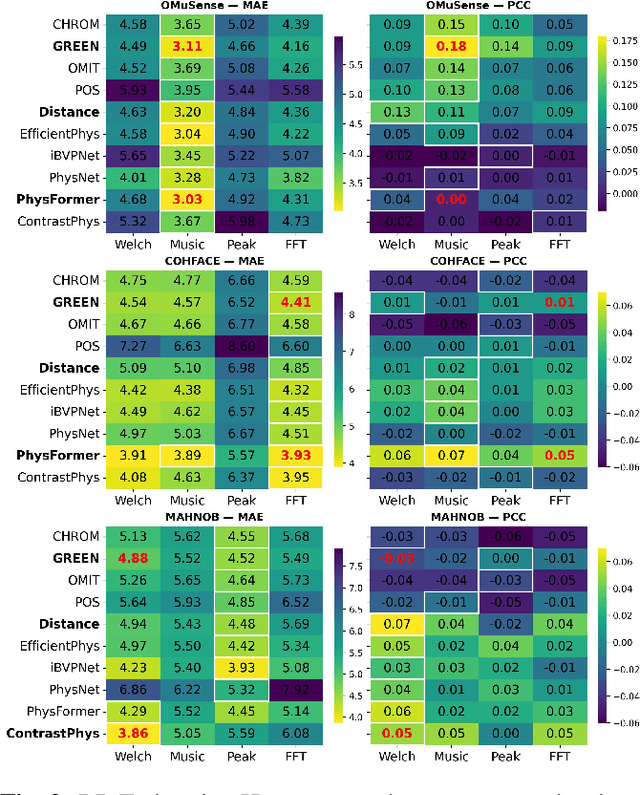

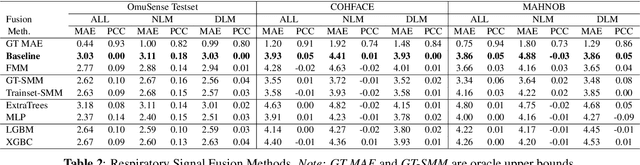

Abstract:Video-based respiratory rate (RR) estimation is often unreliable due to inconsistent signal quality across extraction methods. We present a predictive, quality-aware framework that integrates heterogeneous signal sources with dynamic assessment of reliability. Ten signals are extracted from facial remote photoplethysmography (rPPG), upper-body motion, and deep learning pipelines, and analyzed using four spectral estimators: Welch's method, Multiple Signal Classification (MUSIC), Fast Fourier Transform (FFT), and peak detection. Segment-level quality indices are then used to train machine learning models that predict accuracy or select the most reliable signal. This enables adaptive signal fusion and quality-based segment filtering. Experiments on three public datasets (OMuSense-23, COHFACE, MAHNOB-HCI) show that the proposed framework achieves lower RR estimation errors than individual methods in most cases, with performance gains depending on dataset characteristics. These findings highlight the potential of quality-driven predictive modeling to deliver scalable and generalizable video-based respiratory monitoring solutions.

APML: Adaptive Probabilistic Matching Loss for Robust 3D Point Cloud Reconstruction

Sep 09, 2025

Abstract:Training deep learning models for point cloud prediction tasks such as shape completion and generation depends critically on loss functions that measure discrepancies between predicted and ground-truth point sets. Commonly used functions such as Chamfer Distance (CD), HyperCD, and InfoCD rely on nearest-neighbor assignments, which often induce many-to-one correspondences, leading to point congestion in dense regions and poor coverage in sparse regions. These losses also involve non-differentiable operations due to index selection, which may affect gradient-based optimization. Earth Mover Distance (EMD) enforces one-to-one correspondences and captures structural similarity more effectively, but its cubic computational complexity limits its practical use. We propose the Adaptive Probabilistic Matching Loss (APML), a fully differentiable approximation of one-to-one matching that leverages Sinkhorn iterations on a temperature-scaled similarity matrix derived from pairwise distances. We analytically compute the temperature to guarantee a minimum assignment probability, eliminating manual tuning. APML achieves near-quadratic runtime, comparable to Chamfer-based losses, and avoids non-differentiable operations. When integrated into state-of-the-art architectures (PoinTr, PCN, FoldingNet) on ShapeNet benchmarks and on a spatiotemporal Transformer (CSI2PC) that generates 3D human point clouds from WiFi CSI measurements, APM loss yields faster convergence, superior spatial distribution, especially in low-density regions, and improved or on-par quantitative performance without additional hyperparameter search. The code is available at: https://github.com/apm-loss/apml.
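To make the idea of Sinkhorn iterations on a temperature-scaled similarity matrix concrete, here is a minimal pure-Python sketch. It is generic Sinkhorn normalization, not the APML formulation: the temperature is a fixed, hand-picked value (APML derives it analytically), and the function names, the squared Euclidean cost, and the toy point sets are all assumptions for illustration.

```python
import math

def pairwise_sq_dist(pred, gt):
    """Squared Euclidean distance between every predicted and ground-truth point."""
    return [[sum((a - b) ** 2 for a, b in zip(p, g)) for g in gt] for p in pred]

def sinkhorn_assignment(cost, temperature, n_iters=50):
    """Alternately normalize rows and columns of exp(-cost / temperature).

    For a square cost matrix the result approaches a doubly stochastic
    matrix, i.e. a soft one-to-one assignment.
    """
    plan = [[math.exp(-c / temperature) for c in row] for row in cost]
    n_cols = len(plan[0])
    for _ in range(n_iters):
        plan = [[v / sum(row) for v in row] for row in plan]            # rows sum to 1
        col_sums = [sum(row[j] for row in plan) for j in range(n_cols)]
        plan = [[v / col_sums[j] for j, v in enumerate(row)] for row in plan]  # columns sum to 1
    return plan

def soft_matching_loss(pred, gt, temperature=0.05, n_iters=50):
    """Assignment-weighted mean cost; temperature here is a hand-picked assumption."""
    cost = pairwise_sq_dist(pred, gt)
    plan = sinkhorn_assignment(cost, temperature, n_iters)
    total = sum(p * c for prow, crow in zip(plan, cost) for p, c in zip(prow, crow))
    return total / len(pred)

# Toy point sets (assumption): each predicted point has one clear nearest target.
pred = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
gt = [(0.1, 0.0), (0.9, 0.1), (0.0, 1.1)]

plan = sinkhorn_assignment(pairwise_sq_dist(pred, gt), temperature=0.05)
loss = soft_matching_loss(pred, gt)
print(loss)
```

Because every operation is a smooth function of the point coordinates, a loss built this way has no index-selection step; the dense plan does, however, need memory quadratic in the number of points.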

Exponentially Weighted Instance-Aware Repeat Factor Sampling for Long-Tailed Object Detection Model Training in Unmanned Aerial Vehicles Surveillance Scenarios

Mar 27, 2025

Abstract:Object detection models often struggle with class imbalance, where rare categories appear significantly less frequently than common ones. Existing sampling-based rebalancing strategies, such as Repeat Factor Sampling (RFS) and Instance-Aware Repeat Factor Sampling (IRFS), mitigate this issue by adjusting sample frequencies based on image and instance counts. However, these methods are based on linear adjustments, which limit their effectiveness in long-tailed distributions. This work introduces Exponentially Weighted Instance-Aware Repeat Factor Sampling (E-IRFS), an extension of IRFS that applies exponential scaling to better differentiate between rare and frequent classes. E-IRFS adjusts sampling probabilities using an exponential function applied to the geometric mean of image and instance frequencies, ensuring a more adaptive rebalancing strategy. We evaluate E-IRFS on a dataset derived from the Fireman-UAV-RGBT Dataset and four additional public datasets, using YOLOv11 object detection models to identify fire, smoke, people and lakes in emergency scenarios. The results show that E-IRFS improves detection performance by 22% over the baseline and outperforms RFS and IRFS, particularly for rare categories. The analysis also highlights that E-IRFS has a stronger effect on lightweight models with limited capacity, as these models rely more on data sampling strategies to address class imbalance. The findings demonstrate that E-IRFS improves rare object detection in resource-constrained environments, making it a suitable solution for real-time applications such as UAV-based emergency monitoring.
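The sampling strategies named above can be sketched as follows. The RFS and IRFS factors follow the forms usually given for those methods (a square-root ratio of a threshold to the category frequency, with IRFS using the geometric mean of image and instance frequency). The exponential variant is a hypothetical stand-in: the abstract does not state the E-IRFS formula, so `exp_factor_hypothetical`, its `alpha` parameter, the threshold value, and the toy label lists are assumptions for illustration only.

```python
import math
from collections import Counter

def category_frequencies(images):
    """images: list of per-image label lists, one label per object instance."""
    img_count, inst_count = Counter(), Counter()
    for labels in images:
        inst_count.update(labels)       # every instance counts
        img_count.update(set(labels))   # each image counts once per category
    total_inst = sum(inst_count.values())
    f_img = {c: img_count[c] / len(images) for c in img_count}
    f_inst = {c: inst_count[c] / total_inst for c in inst_count}
    return f_img, f_inst

def rfs_factor(f_img, threshold):
    """Repeat Factor Sampling: image frequency only."""
    return max(1.0, math.sqrt(threshold / f_img))

def irfs_factor(f_img, f_inst, threshold):
    """Instance-aware variant: geometric mean of image and instance frequency."""
    return max(1.0, math.sqrt(threshold / math.sqrt(f_img * f_inst)))

def exp_factor_hypothetical(f_img, f_inst, threshold, alpha=1.0):
    """HYPOTHETICAL exponential scaling, not the formula from the paper."""
    ratio = math.sqrt(threshold / math.sqrt(f_img * f_inst))
    return max(1.0, math.exp(alpha * (ratio - 1.0)))

def image_repeat_factor(labels, per_category):
    """An image is repeated according to its rarest category."""
    return max(per_category[c] for c in set(labels))

# Toy dataset (assumption): "person" is common, "fire" and "smoke" are rare.
images = [["person", "person"]] * 8 + [["person", "fire"], ["smoke"]]
f_img, f_inst = category_frequencies(images)
T = 0.5  # frequency threshold (assumed value)

rfs = {c: rfs_factor(f_img[c], T) for c in f_img}
irfs = {c: irfs_factor(f_img[c], f_inst[c], T) for c in f_img}
expo = {c: exp_factor_hypothetical(f_img[c], f_inst[c], T) for c in f_img}
print(rfs["fire"], irfs["fire"], expo["fire"])
```

In this toy case the frequent category keeps a factor of 1 under all three rules, while the rare categories are repeated progressively more often as the scaling becomes more aggressive.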

Evaluation of Video-Based rPPG in Challenging Environments: Artifact Mitigation and Network Resilience

May 02, 2024

Abstract:Video-based remote photoplethysmography (rPPG) has emerged as a promising technology for non-contact vital sign monitoring, especially under controlled conditions. However, the accurate measurement of vital signs in real-world scenarios faces several challenges, including artifacts induced by video codecs, low-light noise, degradation, low dynamic range, occlusions, and hardware and network constraints. In this article, we systematically investigate these issues, measuring their detrimental effects on the quality of rPPG measurements. Additionally, we propose practical strategies for mitigating these challenges to improve the dependability and resilience of video-based rPPG systems. We detail methods for effective biosignal recovery in the presence of network limitations and present denoising and inpainting techniques aimed at preserving video frame integrity. Through extensive evaluations and direct comparisons, we demonstrate the effectiveness of the approaches in enhancing rPPG measurements under challenging environments, contributing to the development of more reliable and effective remote vital sign monitoring technologies.

Ray Launching-Based Computation of Exact Paths with Noisy Dense Point Clouds

Mar 11, 2024

Abstract:Point clouds have recently attracted interest for ray tracing-based radio channel characterization, as sensors such as RGB-D cameras and laser scanners can be used to generate an accurate virtual copy of a physical environment. In this paper, a novel ray launching algorithm is presented, which operates directly on noisy point clouds acquired from sensor data. It produces coarse paths that are further refined to exact paths consisting of reflections and diffractions. A commercial ray tracing tool is used as the baseline for validating the simulated paths, and a significant majority of the baseline paths are found. The robustness to noise is examined by artificially applying noise along the normal vector of each point. It is observed that, with noisy point clouds, the proposed method adapts to the noise and finds paths similar to the baseline path trajectories, especially if the normal vectors of the points are estimated accurately. Lastly, a simulation is performed with a reconstructed point cloud and compared against channel measurements and the baseline paths. The resulting paths are similar to the baseline path trajectories and exhibit a pattern analogous to the aggregated impulse response extracted from the measurements. Code available at https://github.com/nvaara/NimbusRT

A Ray Launching Approach for Computing Exact Paths with Point Clouds

Feb 21, 2024

Abstract:Ray tracing is a deterministic method that produces propagation paths between a transmitter and a receiver. The simulation accuracy is significantly influenced by the level of environment detail. One way to capture the environment with great precision is to use depth sensors and cameras. Such a reconstructed environment takes the form of a point cloud. However, using such data directly in ray tracing, a key aspect of vision-aided wireless communications, often involves a significant trade-off between accuracy and execution time. In this paper, we propose a novel, fast, open-source point cloud-based ray launching algorithm that produces exact paths, which provide a good basis for accurate modeling of radio channel characteristics. In experiments, preliminary validation of the ray tracer output is obtained with the aid of a commercial ray tracer.

Multi-objective Feature Selection in Remote Health Monitoring Applications

Jan 10, 2024

Abstract:Radio frequency (RF) signals have facilitated the development of non-contact human monitoring tasks, such as vital signs measurement, activity recognition, and user identification. In some specific scenarios, an RF signal analysis framework may prioritize the performance of one task over that of others. In response to this requirement, we employ a multi-objective optimization approach inspired by biological principles to select discriminative features that enhance the accuracy of breathing pattern recognition while simultaneously impeding the identification of individual users. This approach is validated using a novel vital signs dataset consisting of 50 subjects engaged in four distinct breathing patterns. Our findings indicate a substantial divergence in accuracy between breathing recognition and user identification. As a complementary viewpoint, we also present the converse result, which maximizes user identification accuracy while minimizing the system's capacity for breathing activity recognition.
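The two competing objectives described above (high breathing-pattern accuracy, low user-identification accuracy) can be illustrated with a Pareto-dominance filter, the comparison at the core of many multi-objective optimizers. This is only a sketch of that general idea, not the paper's method: the candidate feature subsets `A` to `D` and their scores are made-up numbers for illustration, and the function names are hypothetical.

```python
def dominates(a, b):
    """True if score a is at least as good as b on both objectives and better on one.

    Scores are (breathing_accuracy, user_id_accuracy):
    the first is maximized, the second is minimized.
    """
    no_worse = a[0] >= b[0] and a[1] <= b[1]
    strictly_better = a[0] > b[0] or a[1] < b[1]
    return no_worse and strictly_better

def pareto_front(candidates):
    """Keep the candidates that no other candidate dominates."""
    return {
        name: score
        for name, score in candidates.items()
        if not any(dominates(other, score)
                   for other_name, other in candidates.items()
                   if other_name != name)
    }

# Made-up scores for four hypothetical feature subsets (illustration only).
candidates = {
    "A": (0.90, 0.60),
    "B": (0.85, 0.30),
    "C": (0.80, 0.40),  # worse than B on both objectives
    "D": (0.70, 0.20),
}

front = pareto_front(candidates)
print(sorted(front))
```

Swapping which objective is maximized and which is minimized gives the converse setting mentioned at the end of the abstract.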

Non-contact Multimodal Indoor Human Monitoring Systems: A Survey

Dec 11, 2023

Abstract:Indoor human monitoring systems leverage a wide range of sensors, including cameras, radio devices, and inertial measurement units, to collect extensive data from users and the environment. These sensors contribute diverse data modalities, such as video feeds from cameras, received signal strength indicators and channel state information from WiFi devices, and three-axis acceleration data from inertial measurement units. In this context, we present a comprehensive survey of multimodal approaches for indoor human monitoring systems, with a specific focus on their relevance in elderly care. Our survey primarily highlights non-contact technologies, particularly cameras and radio devices, as key components in the development of indoor human monitoring systems. Throughout this article, we explore well-established techniques for extracting features from multimodal data sources. Our exploration extends to methodologies for fusing these features and harnessing multiple modalities to improve the accuracy and robustness of machine learning models. Furthermore, we conduct a comparative analysis across different data modalities in diverse human monitoring tasks and undertake a comprehensive examination of existing multimodal datasets. This extensive survey not only highlights the significance of indoor human monitoring systems but also affirms their versatile applications. In particular, we emphasize their critical role in enhancing the quality of elderly care, offering valuable insights into the development of non-contact monitoring solutions applicable to the needs of aging populations.
