Manuel Lage Cañellas

Thermal Imaging for Contactless Cardiorespiratory and Sudomotor Response Monitoring

Feb 12, 2026

Abstract:Thermal infrared imaging captures skin temperature changes driven by autonomic regulation and can potentially provide contactless estimation of electrodermal activity (EDA), heart rate (HR), and breathing rate (BR). While visible-light methods address HR and BR, they cannot access EDA, a standard marker of sympathetic activation. This paper characterizes the extraction of these three biosignals from facial thermal video using a signal-processing pipeline that tracks anatomical regions, applies spatial aggregation, and separates slow sudomotor trends from faster cardiorespiratory components. For HR, we apply an orthogonal matrix image transformation (OMIT) decomposition across multiple facial regions of interest (ROIs), and for BR we average nasal and cheek signals before spectral peak detection. We evaluate 288 EDA configurations and the HR/BR pipeline on 31 sessions from the public SIMULATOR STUDY 1 (SIM1) driver monitoring dataset. The best fixed EDA configuration (nose region, exponential moving average) reaches a mean absolute correlation of $0.40 \pm 0.23$ against palm EDA, with individual sessions reaching 0.89. BR estimation achieves a mean absolute error of $3.1 \pm 1.1$ bpm, while HR estimation yields $13.8 \pm 7.5$ bpm MAE, limited by the low camera frame rate (7.5 Hz). We report signal polarity alternation across sessions, short thermodynamic latency for well-tracked signals, and condition-dependent and demographic effects on extraction quality. These results provide baseline performance bounds and design guidance for thermal contactless biosignal estimation.
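The trend separation and spectral peak detection mentioned in this abstract can be illustrated with a minimal sketch. It is not the paper's pipeline: the function names `ema` and `breathing_rate_bpm`, the smoothing factor `alpha`, the 0.1 to 0.5 Hz breathing band, and the synthetic signal are all assumptions chosen for illustration. Only the 7.5 Hz frame rate comes from the abstract.

```python
import numpy as np

def ema(x, alpha):
    """Exponential moving average; a smaller alpha gives a smoother, slower trend."""
    out = np.empty(len(x), dtype=float)
    out[0] = x[0]
    for i in range(1, len(x)):
        out[i] = alpha * x[i] + (1.0 - alpha) * out[i - 1]
    return out

def breathing_rate_bpm(signal, fs, alpha=0.02, band=(0.1, 0.5)):
    """Split a temperature trace into a slow trend and a fast residual,
    then read the breathing rate from the residual's spectral peak.
    alpha and band are illustrative values, not taken from the paper."""
    signal = np.asarray(signal, dtype=float)
    trend = ema(signal, alpha)            # slow component (sudomotor-like drift)
    fast = signal - trend                 # faster component (respiration-like)
    fast = fast - fast.mean()
    windowed = fast * np.hanning(len(fast))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(fast), d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    peak_freq = freqs[in_band][np.argmax(power[in_band])]
    return 60.0 * peak_freq, trend

# Synthetic example: slow warming drift plus a 0.25 Hz (15 breaths/min) oscillation.
fs = 7.5                                  # frame rate stated in the abstract
t = np.arange(900) / fs                   # 120 s of samples
synthetic = 34.0 + 0.005 * t + 0.1 * np.sin(2 * np.pi * 0.25 * t)
bpm, trend = breathing_rate_bpm(synthetic, fs)
print(f"estimated breathing rate: {bpm:.1f} breaths/min")
```

With a 120 s window the frequency resolution is 1/120 Hz, or 0.5 breaths per minute, so a longer window trades latency for finer rate estimates.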

From Theory to Throughput: CUDA-Optimized APML for Large-Batch 3D Learning

Dec 17, 2025

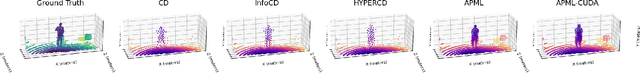

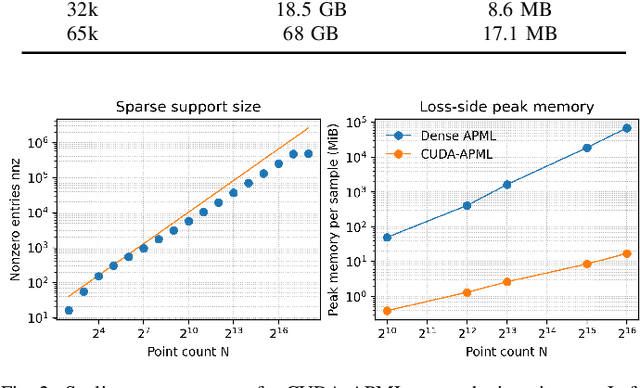

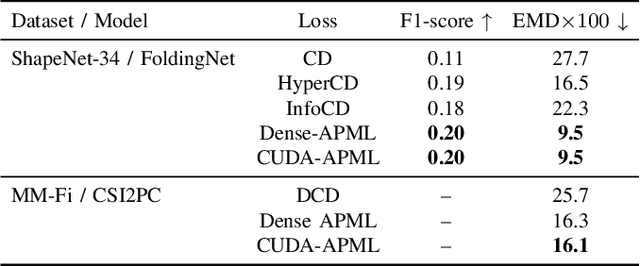

Abstract:Loss functions are fundamental to learning accurate 3D point cloud models, yet common choices trade geometric fidelity for computational cost. Chamfer Distance is efficient but permits many-to-one correspondences, while Earth Mover Distance better reflects one-to-one transport at high computational cost. APML approximates transport with differentiable Sinkhorn iterations and an analytically derived temperature, but its dense formulation scales quadratically in memory. We present CUDA-APML, a sparse GPU implementation that thresholds negligible assignments and runs adaptive softmax, bidirectional symmetrization, and Sinkhorn normalization directly in COO form. This yields near-linear memory scaling and preserves gradients on the stored support, while pairwise distance evaluation remains quadratic in the current implementation. On ShapeNet and MM-Fi, CUDA-APML matches dense APML within a small tolerance while reducing peak GPU memory by 99.9%. Code available at: https://github.com/Multimodal-Sensing-Lab/apml
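As a rough illustration of the ideas named in this abstract, the sketch below runs dense Sinkhorn row and column normalization on a small point-cloud pair and then stores the entries above a threshold in COO form (row indices, column indices, values). It is not the CUDA-APML implementation: the function name `sinkhorn_plan`, the fixed `temperature` (the paper derives its temperature analytically), the iteration count, and the threshold `eps` are assumptions, and the paper thresholds within its sparse pipeline rather than after a dense solve.

```python
import numpy as np

def sinkhorn_plan(x, y, temperature=0.5, n_iters=200):
    """Dense Sinkhorn normalization of a softmax-style kernel between two
    point sets. temperature and n_iters are illustrative choices."""
    diff = x[:, None, :] - y[None, :, :]
    cost = np.sum(diff ** 2, axis=-1)     # pairwise squared distances, O(N*M) memory
    plan = np.exp(-(cost - cost.min()) / temperature)
    for _ in range(n_iters):
        plan = plan / plan.sum(axis=1, keepdims=True)   # normalize rows
        plan = plan / plan.sum(axis=0, keepdims=True)   # normalize columns
    return plan, cost

rng = np.random.default_rng(0)
x = rng.random((8, 3))                    # predicted points (toy data)
y = rng.random((8, 3))                    # target points (toy data)
plan, cost = sinkhorn_plan(x, y)

# COO storage of the non-negligible assignments (eps is a hypothetical threshold).
eps = 1e-3
rows, cols = np.nonzero(plan > eps)
vals = plan[rows, cols]

# Transport-style loss evaluated on the stored support only.
loss = float(np.sum(vals * cost[rows, cols]))
print(f"kept {len(vals)} of {plan.size} entries, loss = {loss:.4f}")
```

The dense `plan` and `cost` arrays are what make memory grow quadratically with the number of points; keeping only `rows`, `cols`, and `vals` is the storage pattern that a sparse formulation relies on.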

Quality-Aware Framework for Video-Derived Respiratory Signals

Dec 16, 2025

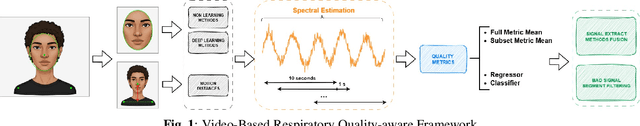

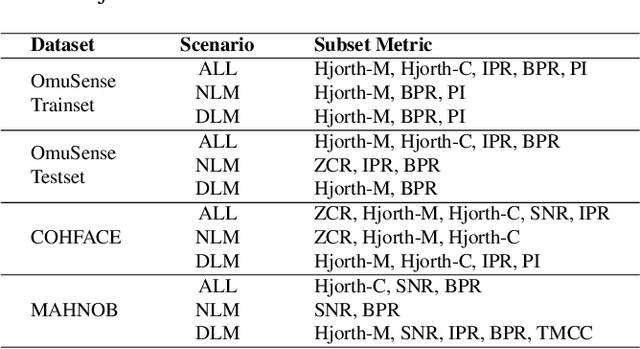

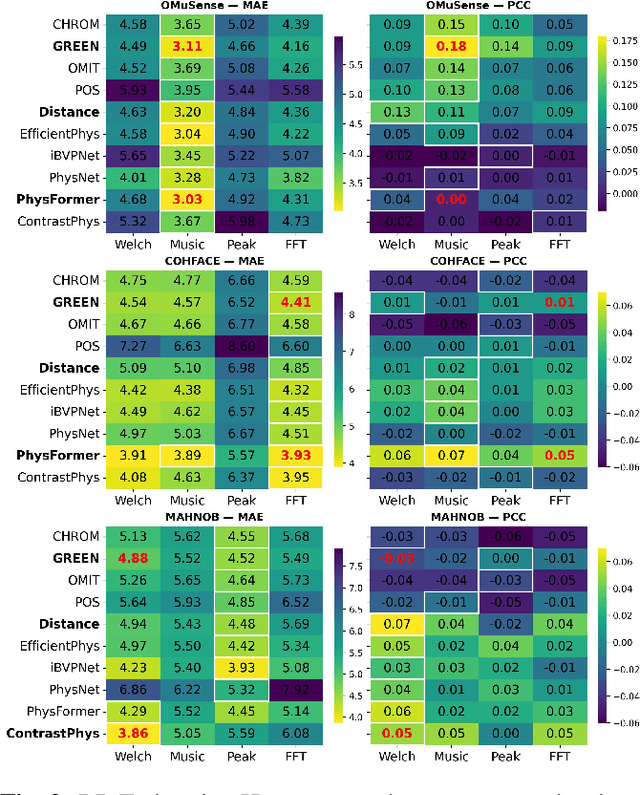

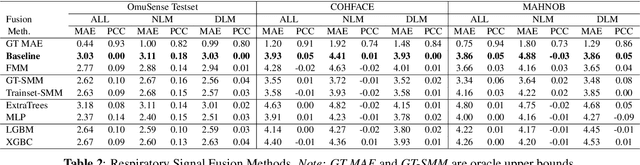

Abstract:Video-based respiratory rate (RR) estimation is often unreliable due to inconsistent signal quality across extraction methods. We present a predictive, quality-aware framework that integrates heterogeneous signal sources with dynamic assessment of reliability. Ten signals are extracted from facial remote photoplethysmography (rPPG), upper-body motion, and deep learning pipelines, and analyzed using four spectral estimators: Welch's method, Multiple Signal Classification (MUSIC), Fast Fourier Transform (FFT), and peak detection. Segment-level quality indices are then used to train machine learning models that predict accuracy or select the most reliable signal. This enables adaptive signal fusion and quality-based segment filtering. Experiments on three public datasets (OMuSense-23, COHFACE, MAHNOB-HCI) show that the proposed framework achieves lower RR estimation errors than individual methods in most cases, with performance gains depending on dataset characteristics. These findings highlight the potential of quality-driven predictive modeling to deliver scalable and generalizable video-based respiratory monitoring solutions.

Multi-objective Feature Selection in Remote Health Monitoring Applications

Jan 10, 2024

Abstract:Radio frequency (RF) signals have facilitated the development of non-contact human monitoring tasks, such as vital signs measurement, activity recognition, and user identification. In some specific scenarios, an RF signal analysis framework may prioritize the performance of one task over that of others. In response to this requirement, we employ a multi-objective optimization approach inspired by biological principles to select discriminative features that enhance the accuracy of breathing pattern recognition while simultaneously impeding the identification of individual users. This approach is validated using a novel vital signs dataset consisting of 50 subjects engaged in four distinct breathing patterns. Our findings indicate a substantial divergence in accuracy between breathing recognition and user identification. As a complementary viewpoint, we also present the converse result, which maximizes user identification accuracy and minimizes the system's capacity for breathing activity recognition.

Non-contact Multimodal Indoor Human Monitoring Systems: A Survey

Dec 11, 2023

Abstract:Indoor human monitoring systems leverage a wide range of sensors, including cameras, radio devices, and inertial measurement units, to collect extensive data from users and the environment. These sensors contribute diverse data modalities, such as video feeds from cameras, received signal strength indicators and channel state information from WiFi devices, and three-axis acceleration data from inertial measurement units. In this context, we present a comprehensive survey of multimodal approaches for indoor human monitoring systems, with a specific focus on their relevance in elderly care. Our survey primarily highlights non-contact technologies, particularly cameras and radio devices, as key components in the development of indoor human monitoring systems. Throughout this article, we explore well-established techniques for extracting features from multimodal data sources. Our exploration extends to methodologies for fusing these features and harnessing multiple modalities to improve the accuracy and robustness of machine learning models. Furthermore, we conduct comparative analysis across different data modalities in diverse human monitoring tasks and undertake a comprehensive examination of existing multimodal datasets. This extensive survey not only highlights the significance of indoor human monitoring systems but also affirms their versatile applications. In particular, we emphasize their critical role in enhancing the quality of elderly care, offering valuable insights into the development of non-contact monitoring solutions applicable to the needs of aging populations.

Audio-Based Classification of Respiratory Diseases using Advanced Signal Processing and Machine Learning for Assistive Diagnosis Support

Sep 12, 2023

Abstract:In global healthcare, respiratory diseases are a leading cause of mortality, underscoring the need for rapid and accurate diagnostics. To advance rapid screening techniques via auscultation, our research employs one of the largest publicly available medical databases of respiratory sounds to train multiple machine learning models able to classify different health conditions. Our method combines Empirical Mode Decomposition (EMD) and spectral analysis to extract physiologically relevant biosignals from acoustic data, closely tied to cardiovascular and respiratory patterns, which sets our approach apart from conventional audio feature extraction practices. We use Power Spectral Density analysis and filtering techniques to select Intrinsic Mode Functions (IMFs) strongly correlated with underlying physiological phenomena. These biosignals undergo a comprehensive feature extraction process for predictive modeling. Initially, we deploy a binary classification model that demonstrates a balanced accuracy of 87% in distinguishing between healthy and diseased individuals. Subsequently, we employ a six-class classification model that achieves a balanced accuracy of 72% in diagnosing specific respiratory conditions like pneumonia and chronic obstructive pulmonary disease (COPD). For the first time, we also introduce regression models that estimate age and body mass index (BMI) based solely on acoustic data, as well as a model for gender classification. Our findings underscore the potential of this approach to significantly enhance assistive and remote diagnostic capabilities.

Estimating exercise-induced fatigue from thermal facial images

Sep 12, 2023

Abstract:Exercise-induced fatigue can be an early indicator of overtraining, illness, or other health issues. In this article, we present an automated method for estimating exercise-induced fatigue levels using thermal imaging and facial analysis techniques based on deep learning models. Leveraging a novel dataset comprising over 400,000 thermal facial images of rested and fatigued users, our results suggest that exercise-induced fatigue levels could be predicted from a single static thermal frame with an average error smaller than 15%. The results emphasize the viability of using thermal imaging in conjunction with deep learning for reliable exercise-induced fatigue estimation.

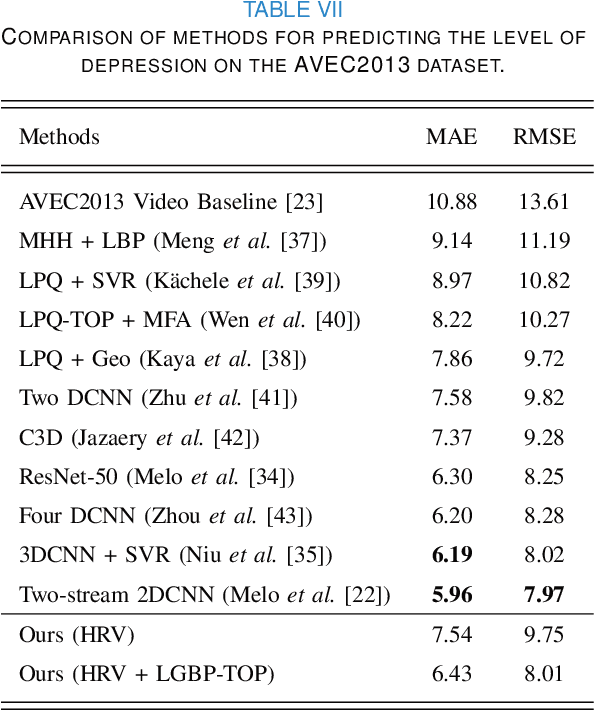

Improving Depression estimation from facial videos with face alignment, training optimization and scheduling

Dec 13, 2022

Abstract:Deep learning models have shown promising results in recognizing depressive states using video-based facial expressions. While successful models typically leverage 3D-CNNs or video distillation techniques, the varying use of pretraining, data augmentation, preprocessing, and optimization techniques across experiments makes fair architectural comparisons difficult. We propose instead to enhance two simple models based on ResNet-50 that use only static spatial information by using two specific face alignment methods and improved data augmentation, optimization, and scheduling techniques. Our extensive experiments on benchmark datasets obtain similar results to sophisticated spatio-temporal models for single streams, while the score-level fusion of two different streams outperforms state-of-the-art methods. Our findings suggest that specific modifications in the preprocessing and training process result in noticeable differences in the performance of the models and could obscure the performance differences originally attributed to the use of different neural network architectures.

Depression Recognition using Remote Photoplethysmography from Facial Videos

Jun 09, 2022

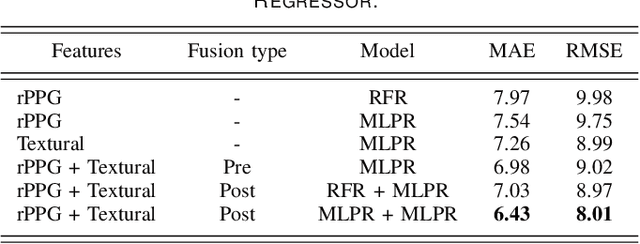

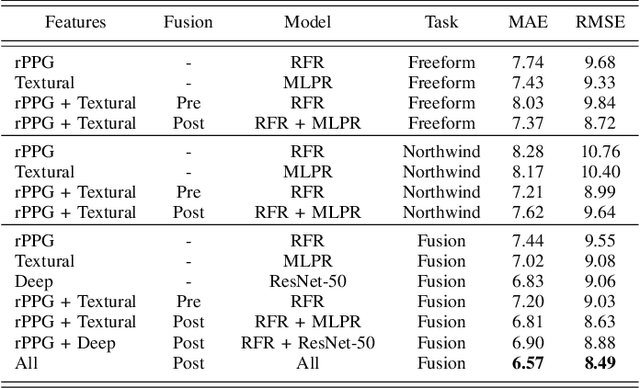

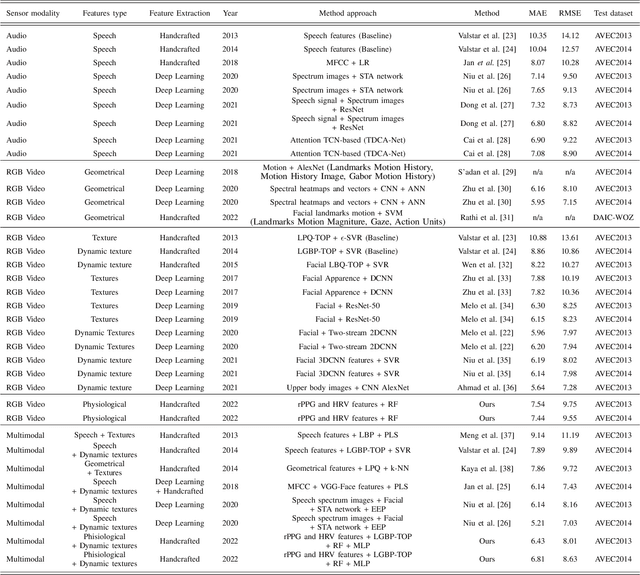

Abstract:Depression is a mental illness that may be harmful to an individual's health. The detection of mental health disorders in the early stages and a precise diagnosis are critical to avoid social, physiological, or psychological side effects. This work analyzes physiological signals to determine whether different depressive states have a noticeable impact on the blood volume pulse (BVP) and the heart rate variability (HRV) response. Although HRV features are typically calculated from biosignals obtained with contact-based sensors such as wearables, we propose instead a novel scheme that extracts them directly from facial videos, based only on visual information, removing the need for any contact-based device. Our solution is based on a pipeline that is able to extract complete remote photoplethysmography (rPPG) signals in a fully unsupervised manner. We use these rPPG signals to calculate over 60 statistical, geometrical, and physiological features that are further used to train several machine learning regressors to recognize different levels of depression. Experiments on two benchmark datasets indicate that this approach offers comparable results to other audiovisual modalities based on voice or facial expression, potentially complementing them. In addition, the results achieved for the proposed method show promising and solid performance that outperforms hand-engineered methods and is comparable to deep learning-based approaches.
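To make the notion of HRV features concrete, the sketch below computes three standard time-domain measures (mean heart rate, SDNN, and RMSSD) from pulse peak times. It is only an illustration: the function name `hrv_features` and the example peak times are assumptions, and the abstract does not state which of its 60+ features these correspond to or how peaks are detected in the rPPG signal.

```python
import numpy as np

def hrv_features(peak_times_s):
    """Time-domain HRV measures from pulse peak times given in seconds."""
    ibi_ms = np.diff(np.asarray(peak_times_s, dtype=float)) * 1000.0  # inter-beat intervals
    successive = np.diff(ibi_ms)                                      # beat-to-beat changes
    return {
        "mean_hr_bpm": 60000.0 / ibi_ms.mean(),
        "sdnn_ms": ibi_ms.std(ddof=1),                 # sample std of the intervals
        "rmssd_ms": np.sqrt(np.mean(successive ** 2)), # RMS of successive differences
    }

# Hypothetical peak times, e.g. as detected on an rPPG waveform.
peaks = [0.0, 0.8, 1.7, 2.5, 3.4, 4.2]
feats = hrv_features(peaks)
for name, value in feats.items():
    print(f"{name}: {value:.2f}")
```

In practice such measures are computed over windows of minutes rather than a handful of beats, and their reliability depends heavily on how accurately the peaks are located in the video-derived signal.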
