Anirban Mukherjee

Beyond Pairwise Comparisons: A Distributional Test of Distinctiveness for Machine-Generated Works in Intellectual Property Law

Jan 26, 2026

Abstract:Key doctrines, including novelty (patent), originality (copyright), and distinctiveness (trademark), turn on a shared empirical question: whether a body of work is meaningfully distinct from a relevant reference class. Yet analyses typically operationalize this set-level inquiry using item-level evidence: pairwise comparisons among exemplars. That unit-of-analysis mismatch may be manageable for finite corpora of human-created works, where it can be bridged by ad hoc aggregations. But it becomes acute for machine-generated works, where the object of evaluation is not a fixed set of works but a generative process with an effectively unbounded output space. We propose a distributional alternative: a two-sample test based on maximum mean discrepancy computed on semantic embeddings to determine whether two creative processes, human or machine, produce statistically distinguishable output distributions. The test requires no task-specific training, which obviates the need for discovery of proprietary training data to characterize the generative process, and is sample-efficient, often detecting differences with as few as 5-10 images and 7-20 texts. We validate the framework across three domains: handwritten digits (controlled images), patent abstracts (text), and AI-generated art (real-world images). We reveal a perceptual paradox: even when human evaluators distinguish AI outputs from human-created art with only about 58% accuracy, our method detects distributional distinctiveness. Our results present evidence contrary to the view that generative models act as mere regurgitators of training data. Rather than producing outputs statistically indistinguishable from a human baseline, as simple regurgitation would predict, they produce outputs that are semantically human-like yet stochastically distinct, suggesting their dominant function is as a semantic interpolator within a learned latent space.
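The two-sample test described in the abstract can be illustrated with a minimal sketch. The abstract does not state the kernel, the bandwidth rule, the embedding model, or how the null distribution is calibrated, so the Gaussian kernel, the median-distance bandwidth, and the permutation procedure below are assumptions chosen for illustration, and the function names are hypothetical. The inputs are assumed to be precomputed embedding matrices, one row per work.

```python
import numpy as np


def gaussian_gram(z, bandwidth):
    """Gaussian (RBF) kernel matrix over the rows of z."""
    sq_norms = np.sum(z ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * z @ z.T
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth ** 2))


def mmd2_biased(gram, n):
    """Biased estimate of squared MMD; the first n rows belong to sample X."""
    k_xx = gram[:n, :n]
    k_yy = gram[n:, n:]
    k_xy = gram[:n, n:]
    return k_xx.mean() + k_yy.mean() - 2.0 * k_xy.mean()


def mmd_permutation_test(x, y, num_permutations=500, seed=0):
    """Return (squared MMD, permutation p-value) for embeddings x and y.

    Assumptions (not taken from the abstract): Gaussian kernel, bandwidth
    set to the median pairwise distance of the pooled sample, and a
    permutation null obtained by shuffling the pooled sample labels.
    """
    rng = np.random.default_rng(seed)
    z = np.vstack([x, y])
    n, total = len(x), len(x) + len(y)

    # Median heuristic for the kernel bandwidth (assumed choice).
    diffs = z[:, None, :] - z[None, :, :]
    dists = np.sqrt(np.sum(diffs ** 2, axis=-1))
    median = np.median(dists[np.triu_indices(total, k=1)])
    bandwidth = median if median > 0 else 1.0

    gram = gaussian_gram(z, bandwidth)
    observed = mmd2_biased(gram, n)

    # Reuse the Gram matrix: permuting labels is permuting rows and columns.
    exceed = 0
    for _ in range(num_permutations):
        perm = rng.permutation(total)
        if mmd2_biased(gram[np.ix_(perm, perm)], n) >= observed:
            exceed += 1
    p_value = (exceed + 1) / (num_permutations + 1)
    return observed, p_value


if __name__ == "__main__":
    demo_rng = np.random.default_rng(1)
    human = demo_rng.normal(0.0, 1.0, size=(20, 16))    # stand-in embeddings
    machine = demo_rng.normal(0.5, 1.0, size=(20, 16))  # stand-in embeddings
    print(mmd_permutation_test(human, machine))
```

A small p-value indicates that the two sets of embeddings are unlikely to come from the same distribution, which is the set-level question the abstract poses. The synthetic Gaussian data in the demo only stands in for real embeddings.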

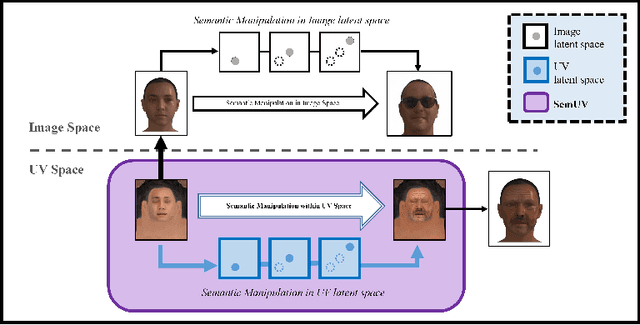

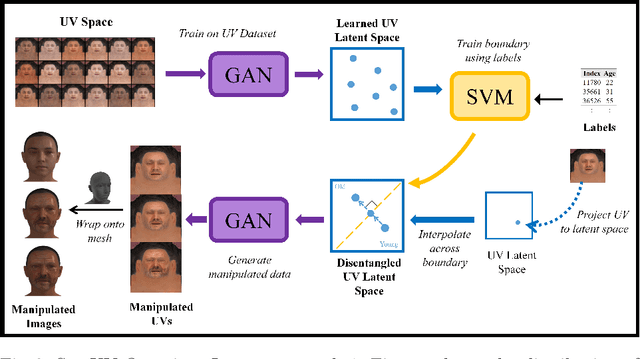

SemUV: Deep Learning based semantic manipulation over UV texture map of virtual human heads

Jun 28, 2024

Abstract:Designing and manipulating virtual human heads is essential across various applications, including AR, VR, gaming, human-computer interaction and VFX. Traditional graphic-based approaches require manual effort and resources to achieve accurate representation of human heads. While modern deep learning techniques can generate and edit highly photorealistic images of faces, their focus remains predominantly on 2D facial images. This limitation makes them less suitable for 3D applications. Recognizing the vital role of editing within the UV texture space as a key component in the 3D graphics pipeline, our work focuses on this aspect to benefit graphic designers by providing enhanced control and precision in appearance manipulation. Research on existing methods within the UV texture space is limited, complex, and poses challenges. In this paper, we introduce SemUV: a simple and effective approach using the FFHQ-UV dataset for semantic manipulation directly within the UV texture space. We train a StyleGAN model on the publicly available FFHQ-UV dataset, and subsequently train a boundary for interpolation and semantic feature manipulation. Through experiments comparing our method with a 2D manipulation technique, we demonstrate its superior ability to preserve identity while effectively modifying semantic features such as age, gender, and facial hair. Our approach is simple, agnostic to other 3D components such as structure, lighting, and rendering, and also enables seamless integration into standard 3D graphics pipelines without demanding extensive domain expertise, time, or resources.
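The idea of training a boundary in latent space and moving along it can be sketched as follows. The abstract does not say how the boundary is fitted or which latent space is used, so this sketch assumes a linear boundary fitted by least squares on labeled latent codes, with edits made by shifting a code along the boundary's unit normal. The function names are hypothetical, and the StyleGAN generator that would turn the edited code into a UV texture is not included.

```python
import numpy as np


def fit_linear_boundary(latents, labels):
    """Fit a hyperplane separating two attribute classes in latent space.

    Least squares on +1/-1 targets is a stand-in (assumed) for whatever
    classifier the authors actually train. Returns (unit normal, offset).
    """
    design = np.hstack([latents, np.ones((len(latents), 1))])
    targets = np.where(np.asarray(labels) > 0, 1.0, -1.0)
    coef, *_ = np.linalg.lstsq(design, targets, rcond=None)
    normal, offset = coef[:-1], coef[-1]
    scale = np.linalg.norm(normal)
    return normal / scale, offset / scale


def edit_latent(latent, normal, alpha):
    """Shift a latent code by alpha units along the boundary normal."""
    return latent + alpha * normal


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    codes = rng.normal(size=(200, 16))       # stand-in latent codes
    has_attr = (codes[:, 0] > 0).astype(int)  # stand-in attribute labels
    normal, offset = fit_linear_boundary(codes, has_attr)
    edited = edit_latent(codes[0], normal, alpha=3.0)
    # Signed distance to the boundary before and after the edit.
    print(codes[0] @ normal + offset, edited @ normal + offset)
```

Positive values of `alpha` push the code toward the side of the boundary labeled as having the attribute and negative values push it away. Sweeping `alpha` gives the interpolation behavior the abstract mentions.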

CAVIAR: Categorical-Variable Embeddings for Accurate and Robust Inference

Apr 11, 2024

Abstract:Social science research often hinges on the relationship between categorical variables and outcomes. We introduce CAVIAR, a novel method for embedding categorical variables that assume values in a high-dimensional ambient space but are sampled from an underlying manifold. Our theoretical and numerical analyses outline challenges posed by such categorical variables in causal inference. Specifically, dynamically varying and sparse levels can lead to violations of the Donsker conditions and a failure of the estimation functionals to converge to a tight Gaussian process. Traditional approaches, including the exclusion of rare categorical levels and principled variable selection models like LASSO, fall short. CAVIAR embeds the data into a lower-dimensional global coordinate system. The mapping can be derived from both structured and unstructured data, and ensures stable and robust estimates through dimensionality reduction. In a dataset of direct-to-consumer apparel sales, we illustrate how high-dimensional categorical variables, such as zip codes, can be succinctly represented, facilitating inference and analysis.

AI Knowledge and Reasoning: Emulating Expert Creativity in Scientific Research

Apr 05, 2024

Abstract:We investigate whether modern AI can emulate expert creativity in complex scientific endeavors. We introduce a novel methodology that utilizes original research articles published after the AI's training cutoff, ensuring no prior exposure and mitigating concerns of rote memorization and prior training. The AI is tasked with redacting findings, predicting outcomes from redacted research, and assessing prediction accuracy against reported results. Analysis of 589 published studies in four leading psychology journals over a 28-month period showcases the AI's proficiency in understanding specialized research, deductive reasoning, and evaluating evidentiary alignment--cognitive hallmarks of human subject matter expertise and creativity. These findings suggest the potential of general-purpose AI to transform academia, with roles requiring knowledge-based creativity becoming increasingly susceptible to technological substitution.

Heuristic Reasoning in AI: Instrumental Use and Mimetic Absorption

Mar 18, 2024

Abstract:Deviating from conventional perspectives that frame artificial intelligence (AI) systems solely as logic emulators, we propose a novel program of heuristic reasoning. We distinguish between the 'instrumental' use of heuristics to match resources with objectives, and 'mimetic absorption,' whereby heuristics manifest randomly and universally. Through a series of innovative experiments, including variations of the classic Linda problem and a novel application of the Beauty Contest game, we uncover trade-offs between maximizing accuracy and reducing effort that shape the conditions under which AIs transition between exhaustive logical processing and the use of cognitive shortcuts (heuristics). We provide evidence that AIs manifest an adaptive balancing of precision and efficiency, consistent with principles of resource-rational human cognition as explicated in classical theories of bounded rationality and dual-process theory. Our findings reveal a nuanced picture of AI cognition, where trade-offs between resources and objectives lead to the emulation of biological systems, especially human cognition, despite AIs being designed without a sense of self and lacking introspective capabilities.

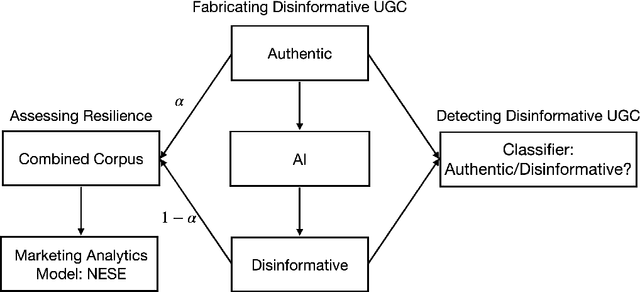

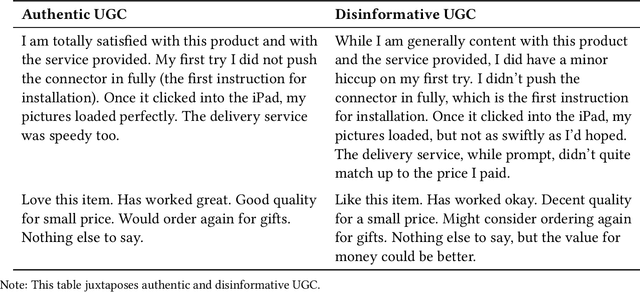

Safeguarding Marketing Research: The Generation, Identification, and Mitigation of AI-Fabricated Disinformation

Mar 17, 2024

Abstract:Generative AI has ushered in the ability to generate content that closely mimics human contributions, introducing an unprecedented threat: Deployed en masse, these models can be used to manipulate public opinion and distort perceptions, resulting in a decline in trust towards digital platforms. This study contributes to marketing literature and practice in three ways. First, it demonstrates the proficiency of AI in fabricating disinformative user-generated content (UGC) that mimics the form of authentic content. Second, it quantifies the disruptive impact of such UGC on marketing research, highlighting the susceptibility of analytics frameworks to even minimal levels of disinformation. Third, it proposes and evaluates advanced detection frameworks, revealing that standard techniques are insufficient for filtering out AI-generated disinformation. We advocate for a comprehensive approach to safeguarding marketing research that integrates advanced algorithmic solutions, enhanced human oversight, and a reevaluation of regulatory and ethical frameworks. Our study seeks to serve as a catalyst, providing a foundation for future research and policy-making aimed at navigating the intricate challenges at the nexus of technology, ethics, and marketing.

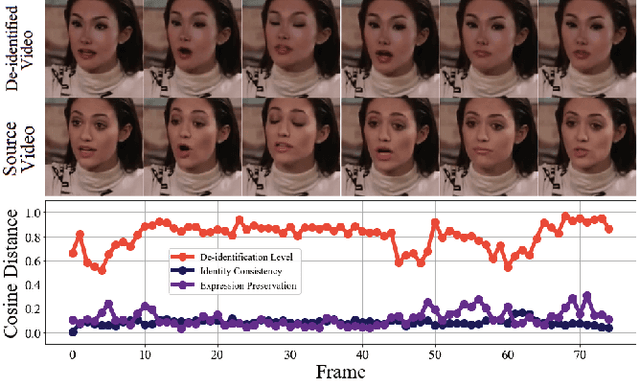

RID-TWIN: An end-to-end pipeline for automatic face de-identification in videos

Mar 15, 2024

Abstract:Face de-identification in videos is a challenging task in the domain of computer vision, primarily used in privacy-preserving applications. Despite the considerable progress achieved through generative vision models, there remain multiple challenges in the latest approaches. They lack a comprehensive discussion and evaluation of aspects such as realism, temporal coherence, and preservation of non-identifiable features. In our work, we propose RID-Twin: a novel pipeline that leverages state-of-the-art generative models and decouples identity from motion to perform automatic face de-identification in videos. We investigate the task from a holistic point of view and discuss how our approach addresses the pertinent existing challenges in this domain. We evaluate the performance of our methodology on the widely employed VoxCeleb2 dataset, and also on a custom dataset designed to accommodate the limitations of certain behavioral variations absent in the VoxCeleb2 dataset. We discuss the implications and advantages of our work and suggest directions for future research.

Silico-centric Theory of Mind

Mar 14, 2024

Abstract:Theory of Mind (ToM) refers to the ability to attribute mental states, such as beliefs, desires, intentions, and knowledge, to oneself and others, and to understand that these mental states can differ from one's own and from reality. We investigate ToM in environments with multiple, distinct, independent AI agents, each possessing unique internal states, information, and objectives. Inspired by human false-belief experiments, we present an AI ('focal AI') with a scenario where its clone undergoes a human-centric ToM assessment. We prompt the focal AI to assess whether its clone would benefit from additional instructions. Concurrently, we give its clones the ToM assessment, both with and without the instructions, thereby engaging the focal AI in higher-order counterfactual reasoning akin to human mentalizing--with respect to humans in one test and to other AI in another. We uncover a discrepancy: Contemporary AI demonstrates near-perfect accuracy on human-centric ToM assessments. Since information embedded in one AI is identically embedded in its clone, additional instructions are redundant. Yet, we observe AI crafting elaborate instructions for their clones, erroneously anticipating a need for assistance. An independent referee AI agrees with these unsupported expectations. Neither the focal AI nor the referee demonstrates ToM in our 'silico-centric' test.

Multi-objective Feature Selection in Remote Health Monitoring Applications

Jan 10, 2024

Abstract:Radio frequency (RF) signals have facilitated the development of non-contact human monitoring tasks, such as vital signs measurement, activity recognition, and user identification. In some specific scenarios, an RF signal analysis framework may prioritize the performance of one task over that of others. In response to this requirement, we employ a multi-objective optimization approach inspired by biological principles to select discriminative features that enhance the accuracy of breathing pattern recognition while simultaneously impeding the identification of individual users. This approach is validated using a novel vital signs dataset consisting of 50 subjects engaged in four distinct breathing patterns. Our findings indicate a remarkable result: a substantial divergence in accuracy between breathing recognition and user identification. As a complementary viewpoint, we present a contrariwise result to maximize user identification accuracy and minimize the system's capacity for breathing activity recognition.

Non-contact Multimodal Indoor Human Monitoring Systems: A Survey

Dec 11, 2023

Abstract:Indoor human monitoring systems leverage a wide range of sensors, including cameras, radio devices, and inertial measurement units, to collect extensive data from users and the environment. These sensors contribute diverse data modalities, such as video feeds from cameras, received signal strength indicators and channel state information from WiFi devices, and three-axis acceleration data from inertial measurement units. In this context, we present a comprehensive survey of multimodal approaches for indoor human monitoring systems, with a specific focus on their relevance in elderly care. Our survey primarily highlights non-contact technologies, particularly cameras and radio devices, as key components in the development of indoor human monitoring systems. Throughout this article, we explore well-established techniques for extracting features from multimodal data sources. Our exploration extends to methodologies for fusing these features and harnessing multiple modalities to improve the accuracy and robustness of machine learning models. Furthermore, we conduct comparative analysis across different data modalities in diverse human monitoring tasks and undertake a comprehensive examination of existing multimodal datasets. This extensive survey not only highlights the significance of indoor human monitoring systems but also affirms their versatile applications. In particular, we emphasize their critical role in enhancing the quality of elderly care, offering valuable insights into the development of non-contact monitoring solutions applicable to the needs of aging populations.
