Michal Yemini

Confidence Boosts Trust-Based Resilience in Cooperative Multi-Robot Systems

Jun 10, 2025

Abstract:Wireless communication-based multi-robot systems open the door to cyberattacks that can disrupt safety and performance of collaborative robots. The physical channel supporting inter-robot communication offers an attractive opportunity to decouple the detection of malicious robots from task-relevant data exchange between legitimate robots. Yet, trustworthiness indications coming from physical channels are uncertain and must be handled with this in mind. In this paper, we propose a resilient protocol for multi-robot operation wherein a parameter $\lambda_t$ accounts for how confident a robot is about the legitimacy of nearby robots that the physical channel indicates. Analytical results prove that our protocol achieves resilient coordination with arbitrarily many malicious robots under mild assumptions. Tuning $\lambda_t$ allows a designer to trade between near-optimal inter-robot coordination and quick task execution; see Fig. 1. This is a fundamental performance tradeoff and must be carefully evaluated based on the task at hand. The effectiveness of our approach is numerically verified with experiments involving platoons of autonomous cars where some vehicles are maliciously spoofed.

Fast Distributed Optimization over Directed Graphs under Malicious Attacks using Trust

Jul 09, 2024

Abstract:In this work, we introduce the Resilient Projected Push-Pull (RP3) algorithm designed for distributed optimization in multi-agent cyber-physical systems with directed communication graphs and the presence of malicious agents. Our algorithm leverages stochastic inter-agent trust values and gradient tracking to achieve geometric convergence rates in expectation even in adversarial environments. We introduce growing constraint sets to limit the impact of the malicious agents without compromising the geometric convergence rate of the algorithm. We prove that RP3 converges to the nominal optimal solution almost surely and in the $r$-th mean for any $r\geq 1$, provided the step sizes are sufficiently small and the constraint sets are appropriately chosen. We validate our approach with numerical studies on average consensus and multi-robot target tracking problems, demonstrating that RP3 effectively mitigates the impact of malicious agents and achieves the desired geometric convergence.

Privacy Preserving Semi-Decentralized Mean Estimation over Intermittently-Connected Networks

Jun 06, 2024

Abstract:We consider the problem of privately estimating the mean of vectors distributed across different nodes of an unreliable wireless network, where communications between nodes can fail intermittently. We adopt a semi-decentralized setup, wherein to mitigate the impact of intermittently connected links, nodes can collaborate with their neighbors to compute a local consensus, which they relay to a central server. In such a setting, the communications between any pair of nodes must ensure that the privacy of the nodes is rigorously maintained to prevent unauthorized information leakage. We study the tradeoff between collaborative relaying and privacy leakage due to the data sharing among nodes and, subsequently, propose PriCER: Private Collaborative Estimation via Relaying -- a differentially private collaborative algorithm for mean estimation to optimize this tradeoff. The privacy guarantees of PriCER arise (i) implicitly, by exploiting the inherent stochasticity of the flaky network connections, and (ii) explicitly, by adding Gaussian perturbations to the estimates exchanged by the nodes. Local and central privacy guarantees are provided against eavesdroppers who can observe different signals, such as the communications amongst nodes during local consensus and (possibly multiple) transmissions from the relays to the central server. We substantiate our theoretical findings with numerical simulations. Our implementation is available at https://github.com/rajarshisaha95/private-collaborative-relaying.
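The two privacy sources named in the abstract above (random link failures and explicit Gaussian perturbation) can be illustrated with a minimal sketch. This is not the PriCER implementation from the linked repository; the function names, the norm clipping step, and the parameters `max_norm`, `sigma`, and `link_up_prob` are assumptions made for illustration only.

```python
import math
import random


def clip_l2(vec, max_norm):
    """Scale vec down so its Euclidean norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm <= max_norm:
        return list(vec)
    return [v * max_norm / norm for v in vec]


def noisy_share(vec, max_norm, sigma, link_up_prob, rng):
    """What a neighbor receives from one node in one exchange (hypothetical helper).

    Returns None when the intermittent link fails (implicit privacy source),
    otherwise a clipped copy with Gaussian noise added (explicit privacy source).
    """
    if rng.random() >= link_up_prob:
        return None  # link is down: nothing is observed
    clipped = clip_l2(vec, max_norm)  # bound the sensitivity (assumed step)
    return [v + rng.gauss(0.0, sigma) for v in clipped]


rng = random.Random(0)
received = noisy_share([3.0, 4.0], max_norm=1.0, sigma=0.1, link_up_prob=0.7, rng=rng)
```

A larger `sigma` or a smaller `link_up_prob` leaks less about the node's vector but also degrades the estimate the server can form, which is the tradeoff the abstract refers to.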

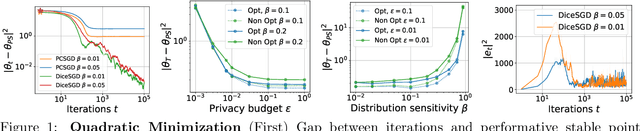

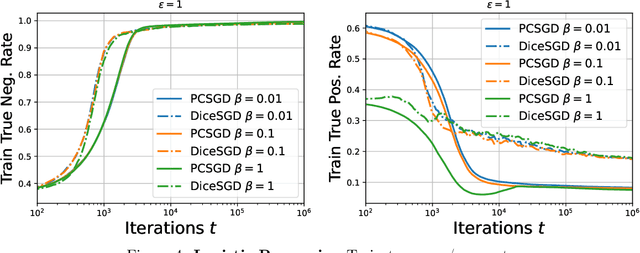

Clipped SGD Algorithms for Privacy Preserving Performative Prediction: Bias Amplification and Remedies

Apr 17, 2024

Abstract:Clipped stochastic gradient descent (SGD) algorithms are among the most popular algorithms for privacy preserving optimization that reduce the leakage of users' identity in model training. This paper studies the convergence properties of these algorithms in a performative prediction setting, where the data distribution may shift due to the deployed prediction model. For example, such a shift can be caused by strategic users during the training of a loan policy for banks. Our contributions are two-fold. First, we show that the straightforward implementation of a projected clipped SGD (PCSGD) algorithm may converge to a biased solution compared to the performative stable solution. We quantify the lower and upper bounds for the magnitude of the bias and demonstrate a bias amplification phenomenon where the bias grows with the sensitivity of the data distribution. Second, we suggest two remedies to the bias amplification effect. The first one utilizes an optimal step size design for PCSGD that takes the privacy guarantee into account. The second one uses the recently proposed DiceSGD algorithm [Zhang et al., 2024]. We show that the latter can successfully remove the bias and converge to the performative stable solution. Numerical experiments verify our analysis.
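A single projected clipped SGD update, as described in the abstract above, might look like the following sketch. The choice of an L2 ball as the constraint set, the noise model, and all names (`pcsgd_step`, `clip_threshold`, `noise_std`, `radius`) are assumptions for illustration and are not taken from the paper.

```python
import math
import random


def scale_to_norm(vec, max_norm):
    """Shrink vec so its Euclidean norm does not exceed max_norm."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm <= max_norm:
        return list(vec)
    return [v * max_norm / norm for v in vec]


def pcsgd_step(theta, grad, step_size, clip_threshold, noise_std, radius, rng):
    """One projected clipped SGD update (illustrative sketch).

    1. Clip the stochastic gradient to norm at most clip_threshold.
    2. Add Gaussian noise for privacy.
    3. Take a gradient step and project onto an L2 ball of the given radius
       (the ball is an assumed constraint set).
    """
    clipped = scale_to_norm(grad, clip_threshold)
    noisy = [g + rng.gauss(0.0, noise_std) for g in clipped]
    updated = [t - step_size * g for t, g in zip(theta, noisy)]
    return scale_to_norm(updated, radius)


rng = random.Random(1)
theta = pcsgd_step([0.0, 0.0], [3.0, 4.0], step_size=0.5,
                   clip_threshold=1.0, noise_std=0.05, radius=10.0, rng=rng)
```

Because clipping changes the direction-weighted average of the gradients whenever their norms exceed `clip_threshold`, the fixed point of this update can differ from that of unclipped SGD, which is the source of the bias the abstract discusses.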

The Role of Confidence for Trust-based Resilient Consensus

Apr 11, 2024

Abstract:We consider a multi-agent system where agents aim to achieve a consensus despite interactions with malicious agents that communicate misleading information. Physical channels supporting communication in cyberphysical systems offer attractive opportunities to detect malicious agents; nevertheless, trustworthiness indications coming from the channel are subject to uncertainty and need to be treated with this in mind. We propose a resilient consensus protocol that incorporates trust observations from the channel and weighs them with a parameter that accounts for how confident an agent is regarding its understanding of the legitimacy of other agents in the network, with no need for the initial observation window $T_0$ that has been utilized in previous works. Analytical and numerical results show that (i) our protocol achieves a resilient consensus in the presence of malicious agents and (ii) the steady-state deviation from nominal consensus can be minimized by a suitable choice of the confidence parameter that depends on the statistics of trust observations.
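One way to picture a confidence-weighted consensus step is the sketch below. The specific update form (anchoring to the agent's own initial value with weight `lam` and averaging over currently trusted neighbors with weight `1 - lam`) is an assumption chosen for illustration; the abstract does not state the protocol's equations, and all names here are hypothetical.

```python
def confidence_weighted_update(own_initial, own_current, neighbor_values, trusted, lam):
    """One consensus step for a single agent (illustrative sketch).

    own_initial     -- the agent's value at time 0
    own_current     -- the agent's current value
    neighbor_values -- dict mapping neighbor id to the value it transmitted
    trusted         -- set of neighbor ids currently classified as legitimate
    lam             -- in [0, 1]; larger values mean less confidence in the
                       trust classification, so the agent leans on its own
                       initial value (assumed interpretation)
    """
    values = [own_current] + [v for j, v in neighbor_values.items() if j in trusted]
    neighborhood_average = sum(values) / len(values)
    return lam * own_initial + (1.0 - lam) * neighborhood_average


# Neighbor "b" sends an outlier but is not trusted, so it is ignored.
new_value = confidence_weighted_update(
    own_initial=1.0,
    own_current=2.0,
    neighbor_values={"a": 4.0, "b": 100.0},
    trusted={"a"},
    lam=0.5,
)
```

Under this assumed form, `lam = 1` freezes the agent at its initial value (fully robust, no coordination) while `lam = 0` reduces to plain averaging over trusted neighbors, which mirrors the tradeoff the confidence parameter is meant to tune.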

How Physicality Enables Trust: A New Era of Trust-Centered Cyberphysical Systems

Nov 13, 2023

Abstract:Multi-agent cyberphysical systems enable new capabilities in efficiency, resilience, and security. The unique characteristics of these systems prompt a reevaluation of their security concepts, including their vulnerabilities, and mechanisms to mitigate these vulnerabilities. This survey paper examines how advancements in wireless networking, coupled with the sensing and computing in cyberphysical systems, can foster novel security capabilities. This study delves into three main themes related to securing multi-agent cyberphysical systems. First, we discuss the threats that are particularly relevant to multi-agent cyberphysical systems given the potential lack of trust between agents. Second, we present prospects for sensing, contextual awareness, and authentication, enabling the inference and measurement of "inter-agent trust" for these systems. Third, we elaborate on the application of quantifiable trust notions to enable "resilient coordination," where "resilient" signifies sustained functionality amid attacks on multi-agent cyberphysical systems. We refer to the capability of cyberphysical systems to self-organize and coordinate to achieve a task as autonomy. This survey unveils the cyberphysical character of future interconnected systems as a pivotal catalyst for realizing robust, trust-centered autonomy in tomorrow's world.

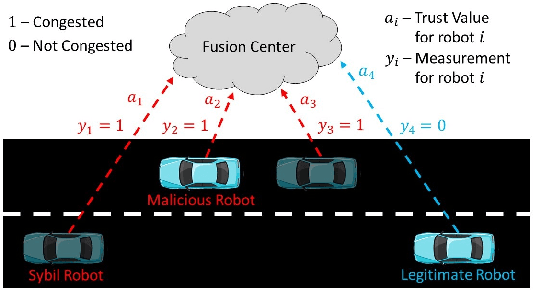

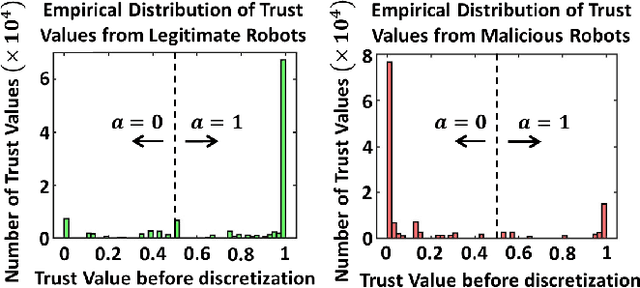

Exploiting Trust for Resilient Hypothesis Testing with Malicious Robots (evolved version)

Mar 07, 2023

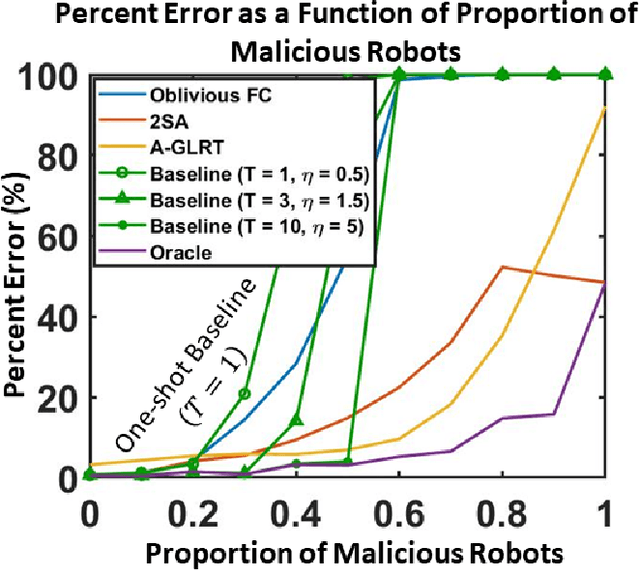

Abstract:We develop a resilient binary hypothesis testing framework for decision making in adversarial multi-robot crowdsensing tasks. This framework exploits stochastic trust observations between robots to arrive at tractable, resilient decision making at a centralized Fusion Center (FC) even when i) there exist malicious robots in the network and their number may be larger than the number of legitimate robots, and ii) the FC uses one-shot noisy measurements from all robots. We derive two algorithms to achieve this. The first is the Two Stage Approach (2SA) that estimates the legitimacy of robots based on received trust observations, and provably minimizes the probability of detection error in the worst-case malicious attack. Here, the proportion of malicious robots is known but arbitrary. For the case of an unknown proportion of malicious robots, we develop the Adversarial Generalized Likelihood Ratio Test (A-GLRT) that uses both the reported robot measurements and trust observations to estimate the trustworthiness of robots, their reporting strategy, and the correct hypothesis simultaneously. We exploit special problem structure to show that this approach remains computationally tractable despite several unknown problem parameters. We deploy both algorithms in a hardware experiment where a group of robots conducts crowdsensing of traffic conditions on a mock-up road network similar in spirit to Google Maps, subject to a Sybil attack. We extract the trust observations for each robot from actual communication signals which provide statistical information on the uniqueness of the sender. We show that even when the malicious robots are in the majority, the FC can reduce the probability of detection error to 30.5% and 29% for the 2SA and the A-GLRT respectively.
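The two-stage idea described above (first estimate which robots are legitimate from trust observations, then test the hypothesis using only those robots) can be sketched as follows. This is a simplified illustration, not the 2SA from the paper: the fixed trust threshold, the assumption that legitimate robots share known detection and false-alarm probabilities, and the zero decision threshold are all assumptions, and every name is hypothetical.

```python
import math


def two_stage_decision(measurements, trust_obs, p_detect, p_false_alarm,
                       trust_threshold=0.5):
    """Simplified two-stage binary hypothesis test (illustrative sketch).

    measurements  -- list of 0/1 reports, one per robot
    trust_obs     -- list of trust observations in [0, 1], one per robot
    p_detect      -- P(report 1 | H1) for a legitimate robot (assumed known)
    p_false_alarm -- P(report 1 | H0) for a legitimate robot (assumed known)
    """
    # Stage 1: keep only robots whose trust observation clears the threshold.
    trusted = [i for i, a in enumerate(trust_obs) if a >= trust_threshold]

    # Stage 2: log-likelihood ratio test over the trusted robots' reports.
    llr = 0.0
    for i in trusted:
        if measurements[i] == 1:
            llr += math.log(p_detect / p_false_alarm)
        else:
            llr += math.log((1.0 - p_detect) / (1.0 - p_false_alarm))
    decision = 1 if llr >= 0.0 else 0
    return decision, trusted


# Three low-trust robots report 0, two high-trust robots report 1.
decision, trusted = two_stage_decision(
    measurements=[1, 1, 0, 0, 0],
    trust_obs=[0.9, 0.8, 0.2, 0.1, 0.3],
    p_detect=0.9,
    p_false_alarm=0.1,
)
```

In this toy input the low-trust robots form the majority, yet the decision follows the two trusted reports, which is the kind of behavior the abstract describes when malicious robots outnumber legitimate ones.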

Collaborative Mean Estimation over Intermittently Connected Networks with Peer-To-Peer Privacy

Feb 28, 2023

Abstract:This work considers the problem of Distributed Mean Estimation (DME) over networks with intermittent connectivity, where the goal is to learn a global statistic over the data samples localized across distributed nodes with the help of a central server. To mitigate the impact of intermittent links, nodes can collaborate with their neighbors to compute local consensus which they forward to the central server. In such a setup, the communications between any pair of nodes must satisfy local differential privacy constraints. We study the tradeoff between collaborative relaying and privacy leakage due to the additional data sharing among nodes and, subsequently, propose a novel differentially private collaborative algorithm for DME to achieve the optimal tradeoff. Finally, we present numerical simulations to substantiate our theoretical findings.

Resilient Distributed Optimization for Multi-Agent Cyberphysical Systems

Dec 05, 2022

Abstract:Enhancing resilience in distributed networks in the face of malicious agents is an important problem for which many key theoretical results and applications require further development and characterization. This work focuses on the problem of distributed optimization in multi-agent cyberphysical systems, where a legitimate agent's dynamic is influenced both by the values it receives from potentially malicious neighboring agents, and by its own self-serving target function. We develop a new algorithmic and analytical framework to achieve resilience for the class of problems where stochastic values of trust between agents exist and can be exploited. In this case we show that convergence to the true global optimal point can be recovered, both in mean and almost surely, even in the presence of malicious agents. Furthermore, we provide expected convergence rate guarantees in the form of upper bounds on the expected squared distance to the optimal value. Finally, we present numerical results that validate the analytical convergence guarantees we present in this paper even when the malicious agents compose the majority of agents in the network.
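A common way to exploit stochastic trust values is to accumulate them over time so that individual noisy observations average out. The sketch below assumes trust observations lie in [0, 1], tend to exceed 0.5 for legitimate neighbors, and tend to fall below 0.5 for malicious ones; these assumptions, the 0.5 offset, and the function names are illustrative and are not stated in the abstract.

```python
def accumulated_trust(observations):
    """Sum of (observation - 0.5) over time for one neighbor (assumed statistic)."""
    return sum(a - 0.5 for a in observations)


def is_trusted(observations):
    """Classify a neighbor as legitimate when its accumulated trust is nonnegative."""
    return accumulated_trust(observations) >= 0.0


# One misleading low reading does not flip the classification of a mostly-trusted neighbor.
legit_history = [0.9, 0.8, 0.3]
malicious_history = [0.1, 0.6, 0.2]
trusted_flags = (is_trusted(legit_history), is_trusted(malicious_history))
```

Under the stated assumptions, the accumulated sum drifts upward for legitimate neighbors and downward for malicious ones, so misclassifications become rarer as more observations arrive, and an agent can restrict its optimization updates to the neighbors it currently trusts.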

Exploiting Trust for Resilient Hypothesis Testing with Malicious Robots

Sep 25, 2022

Abstract:We develop a resilient binary hypothesis testing framework for decision making in adversarial multi-robot crowdsensing tasks. This framework exploits stochastic trust observations between robots to arrive at tractable, resilient decision making at a centralized Fusion Center (FC) even when i) there exist malicious robots in the network and their number may be larger than the number of legitimate robots, and ii) the FC uses one-shot noisy measurements from all robots. We derive two algorithms to achieve this. The first is the Two Stage Approach (2SA) that estimates the legitimacy of robots based on received trust observations, and provably minimizes the probability of detection error in the worst-case malicious attack. Here, the proportion of malicious robots is known but arbitrary. For the case of an unknown proportion of malicious robots, we develop the Adversarial Generalized Likelihood Ratio Test (A-GLRT) that uses both the reported robot measurements and trust observations to estimate the trustworthiness of robots, their reporting strategy, and the correct hypothesis simultaneously. We exploit special problem structure to show that this approach remains computationally tractable despite several unknown problem parameters. We deploy both algorithms in a hardware experiment where a group of robots conducts crowdsensing of traffic conditions on a mock-up road network similar in spirit to Google Maps, subject to a Sybil attack. We extract the trust observations for each robot from actual communication signals which provide statistical information on the uniqueness of the sender. We show that even when the malicious robots are in the majority, the FC can reduce the probability of detection error to 30.5% and 29% for the 2SA and the A-GLRT respectively.
