Mohamed Seif

Adversary-Aware Private Inference over Wireless Channels

Oct 23, 2025

Abstract:AI-based sensing at wireless edge devices has the potential to significantly enhance Artificial Intelligence (AI) applications, particularly for vision and perception tasks such as in autonomous driving and environmental monitoring. AI systems rely both on efficient model learning and inference. In the inference phase, features extracted from sensing data are utilized for prediction tasks (e.g., classification or regression). In edge networks, sensors and model servers are often not co-located, which requires communication of features. As sensitive personal data can be reconstructed by an adversary, transformation of the features is required to reduce the risk of privacy violations. While differential privacy mechanisms provide a means of protecting finite datasets, protection of individual features has not been addressed. In this paper, we propose a novel framework for privacy-preserving AI-based sensing, where devices apply transformations of extracted features before transmission to a model server.

Spectral Graph Clustering under Differential Privacy: Balancing Privacy, Accuracy, and Efficiency

Oct 08, 2025

Abstract:We study the problem of spectral graph clustering under edge differential privacy (DP). Specifically, we develop three mechanisms: (i) graph perturbation via randomized edge flipping combined with adjacency matrix shuffling, which enforces edge privacy while preserving key spectral properties of the graph. Importantly, shuffling considerably amplifies the guarantees: whereas flipping edges with a fixed probability alone provides only a constant epsilon edge DP guarantee as the number of nodes grows, the shuffled mechanism achieves (epsilon, delta) edge DP with parameters that tend to zero as the number of nodes increases; (ii) private graph projection with additive Gaussian noise in a lower-dimensional space to reduce dimensionality and computational complexity; and (iii) a noisy power iteration method that distributes Gaussian noise across iterations to ensure edge DP while maintaining convergence. Our analysis provides rigorous privacy guarantees and a precise characterization of the misclassification error rate. Experiments on synthetic and real-world networks validate our theoretical analysis and illustrate the practical privacy-utility trade-offs.
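
The randomized edge flipping step in mechanism (i) can be illustrated with a minimal sketch. It assumes the standard randomized-response flip probability 1 / (1 + e^epsilon), which gives epsilon-edge DP for independent per-edge flips. The function names `flip_probability` and `perturb_graph` are hypothetical, and the shuffling step described in the abstract is not shown, so this is not the paper's full mechanism.

```python
import math
import random


def flip_probability(epsilon):
    """Randomized-response flip probability (assumed here): 1 / (1 + e^epsilon)."""
    return 1.0 / (1.0 + math.exp(epsilon))


def perturb_graph(adj, epsilon, rng=None):
    """Flip each potential edge of an undirected simple graph independently.

    adj is a symmetric 0/1 adjacency matrix (list of lists) with a zero diagonal.
    Returns a new symmetric matrix; the input is left unchanged.
    """
    rng = rng or random.Random()
    n = len(adj)
    mu = flip_probability(epsilon)
    noisy = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):  # visit each unordered pair once
            bit = adj[i][j]
            if rng.random() < mu:
                bit = 1 - bit  # flip edge <-> non-edge
            noisy[i][j] = noisy[j][i] = bit
    return noisy


if __name__ == "__main__":
    triangle_plus_isolated = [
        [0, 1, 1, 0],
        [1, 0, 1, 0],
        [1, 1, 0, 0],
        [0, 0, 0, 0],
    ]
    print(perturb_graph(triangle_plus_isolated, epsilon=1.0, rng=random.Random(7)))
```

Smaller values of epsilon push the flip probability toward 1/2, at which point the released graph carries almost no information about the original edges.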

Detecting Post-generation Edits to Watermarked LLM Outputs via Combinatorial Watermarking

Oct 02, 2025

Abstract:Watermarking has become a key technique for proprietary language models, enabling the distinction between AI-generated and human-written text. However, in many real-world scenarios, LLM-generated content may undergo post-generation edits, such as human revisions or even spoofing attacks, making it critical to detect and localize such modifications. In this work, we introduce a new task: detecting post-generation edits locally made to watermarked LLM outputs. To this end, we propose a combinatorial pattern-based watermarking framework, which partitions the vocabulary into disjoint subsets and embeds the watermark by enforcing a deterministic combinatorial pattern over these subsets during generation. We accompany the combinatorial watermark with a global statistic that can be used to detect the watermark. Furthermore, we design lightweight local statistics to flag and localize potential edits. We introduce two task-specific evaluation metrics, Type-I error rate and detection accuracy, and evaluate our method on open-source LLMs across a variety of editing scenarios, demonstrating strong empirical performance in edit localization.
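
As a toy illustration of the idea of a deterministic pattern over vocabulary subsets, the sketch below makes several assumptions that are not taken from the paper: the vocabulary is partitioned by token id modulo `k`, the pattern is a simple cycle over the subsets, the global statistic is the fraction of positions that match the pattern, and the local statistic is a per-position mismatch flag. All function names are hypothetical, and only token substitutions are handled (insertions or deletions would shift the pattern).

```python
def subset_of(token_id, k):
    """Assumed partition: token ids are split into k disjoint subsets by id mod k."""
    return token_id % k


def expected_subset(position, k):
    """Assumed pattern: position i must use a token from subset i mod k."""
    return position % k


def match_rate(tokens, k):
    """Global statistic (illustrative): fraction of positions following the pattern."""
    hits = sum(subset_of(t, k) == expected_subset(i, k) for i, t in enumerate(tokens))
    return hits / len(tokens)


def flag_edits(tokens, k):
    """Local statistic (illustrative): positions whose token breaks the pattern."""
    return [i for i, t in enumerate(tokens) if subset_of(t, k) != expected_subset(i, k)]


if __name__ == "__main__":
    k = 3
    watermarked = [0, 4, 8, 3, 7]  # subsets 0, 1, 2, 0, 1 follow the cycle
    edited = list(watermarked)
    edited[2] = 9                  # substitution: subset 0 where subset 2 is expected
    print(match_rate(watermarked, k), flag_edits(watermarked, k))
    print(match_rate(edited, k), flag_edits(edited, k))
```

A substituted token still lands in the expected subset with probability roughly 1/k under this toy partition, so a practical local statistic would aggregate over a window rather than rely on a single position.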

Game-Theoretic Joint Incentive and Cut Layer Selection Mechanism in Split Federated Learning

Dec 10, 2024

Abstract:To alleviate the training burden in federated learning while enhancing convergence speed, Split Federated Learning (SFL) has emerged as a promising approach by combining the advantages of federated and split learning. However, recent studies have largely overlooked competitive situations. In this framework, the SFL model owner can choose the cut layer to balance the training load between the server and clients, ensuring the necessary level of privacy for the clients. Additionally, the SFL model owner sets incentives to encourage client participation in the SFL process. The optimization strategies employed by the SFL model owner influence clients' decisions regarding the amount of data they contribute, taking into account the shared incentives over clients and anticipated energy consumption during SFL. To address this framework, we model the problem using a hierarchical decision-making approach, formulated as a single-leader multi-follower Stackelberg game. We demonstrate the existence and uniqueness of the Nash equilibrium among clients and analyze the Stackelberg equilibrium by examining the leader's game. Furthermore, we discuss privacy concerns related to differential privacy and the criteria for selecting the minimum required cut layer. Our findings show that the Stackelberg equilibrium solution maximizes the utility for both the clients and the SFL model owner.

Collaborative Inference over Wireless Channels with Feature Differential Privacy

Oct 25, 2024

Abstract:Collaborative inference among multiple wireless edge devices has the potential to significantly enhance Artificial Intelligence (AI) applications, particularly for sensing and computer vision. This approach typically involves a three-stage process: a) data acquisition through sensing, b) feature extraction, and c) feature encoding for transmission. However, transmitting the extracted features poses a significant privacy risk, as sensitive personal data can be exposed during the process. To address this challenge, we propose a novel privacy-preserving collaborative inference mechanism, wherein each edge device in the network secures the privacy of extracted features before transmitting them to a central server for inference. Our approach is designed to achieve two primary objectives: 1) reducing communication overhead and 2) ensuring strict privacy guarantees during feature transmission, while maintaining effective inference performance. Additionally, we introduce an over-the-air pooling scheme specifically designed for classification tasks, which provides formal guarantees on the privacy of transmitted features and establishes a lower bound on classification accuracy.

Privacy Preserving Semi-Decentralized Mean Estimation over Intermittently-Connected Networks

Jun 06, 2024

Abstract:We consider the problem of privately estimating the mean of vectors distributed across different nodes of an unreliable wireless network, where communications between nodes can fail intermittently. We adopt a semi-decentralized setup, wherein to mitigate the impact of intermittently connected links, nodes can collaborate with their neighbors to compute a local consensus, which they relay to a central server. In such a setting, the communications between any pair of nodes must ensure that the privacy of the nodes is rigorously maintained to prevent unauthorized information leakage. We study the tradeoff between collaborative relaying and privacy leakage due to the data sharing among nodes and, subsequently, propose PriCER: Private Collaborative Estimation via Relaying -- a differentially private collaborative algorithm for mean estimation to optimize this tradeoff. The privacy guarantees of PriCER arise (i) implicitly, by exploiting the inherent stochasticity of the flaky network connections, and (ii) explicitly, by adding Gaussian perturbations to the estimates exchanged by the nodes. Local and central privacy guarantees are provided against eavesdroppers who can observe different signals, such as the communications amongst nodes during local consensus and (possibly multiple) transmissions from the relays to the central server. We substantiate our theoretical findings with numerical simulations. Our implementation is available at https://github.com/rajarshisaha95/private-collaborative-relaying.
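
The two sources of randomness named in the abstract, intermittent links and Gaussian perturbations, can be illustrated with a deliberately simplified sketch. This is not PriCER: it omits relaying between neighbors entirely and assumes each node sends its own noisy vector directly to the server over a link that succeeds independently with a known probability `p_success`. The names `noisy_share` and `estimate_mean`, and the 1 / (n * p_success) rescaling used to keep the estimate unbiased, are assumptions for illustration.

```python
import random


def noisy_share(vec, sigma, rng):
    """Add independent zero-mean Gaussian noise (std sigma) to each coordinate."""
    return [x + rng.gauss(0.0, sigma) for x in vec]


def estimate_mean(vectors, sigma, p_success, rng=None):
    """Server-side mean estimate over unreliable links (illustrative only).

    Each node's noisy vector arrives with probability p_success. Dividing the
    received sum by n * p_success compensates for dropped links in expectation.
    """
    rng = rng or random.Random()
    n = len(vectors)
    dim = len(vectors[0])
    total = [0.0] * dim
    for vec in vectors:
        if rng.random() < p_success:  # link to the server is up this round
            shared = noisy_share(vec, sigma, rng)
            total = [t + s for t, s in zip(total, shared)]
    return [t / (n * p_success) for t in total]


if __name__ == "__main__":
    data = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(estimate_mean(data, sigma=0.1, p_success=0.8, rng=random.Random(3)))
```

Larger `sigma` strengthens the explicit privacy protection at the cost of a noisier estimate, while lower `p_success` increases the variance introduced by dropped transmissions.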

Clustering Mixtures of Discrete Distributions: A Note on Mitra's Algorithm

May 29, 2024

Abstract:In this note, we provide a refined analysis of Mitra's algorithm \cite{mitra2008clustering} for classifying general discrete mixture distribution models. Built upon spectral clustering \cite{mcsherry2001spectral}, this algorithm offers compelling conditions for probability distributions. We enhance this analysis by tailoring the model to bipartite stochastic block models, resulting in more refined conditions. The separation conditions we obtain improve on those derived in \cite{mitra2008clustering}.

Exploring the Privacy-Energy Consumption Tradeoff for Split Federated Learning

Nov 15, 2023

Abstract:Split Federated Learning (SFL) has recently emerged as a promising distributed learning technology, leveraging the strengths of both federated learning and split learning. It emphasizes the advantages of rapid convergence while addressing privacy concerns. As a result, this innovation has received significant attention from both industry and academia. However, since the model is split at a specific layer, known as a cut layer, into client-side and server-side models, the choice of the cut layer can have a substantial impact on the energy consumption of clients and their privacy, as it influences the training burden and the output of the client-side models. Moreover, the design challenge of determining the cut layer is highly intricate, primarily due to the inherent heterogeneity in the computing and networking capabilities of clients. In this article, we provide a comprehensive overview of the SFL process and conduct a thorough analysis of energy consumption and privacy. This analysis takes into account the influence of various system parameters on the cut layer selection strategy. Additionally, we provide an illustrative example of the cut layer selection, aiming to minimize the risk of clients' raw data being reconstructed at the server while keeping energy consumption within the required energy budget, two goals that involve trade-offs. Finally, we address open challenges in this field, including applications to 6G technology. These directions represent promising avenues for future research and development.

Collaborative Mean Estimation over Intermittently Connected Networks with Peer-To-Peer Privacy

Feb 28, 2023

Abstract:This work considers the problem of Distributed Mean Estimation (DME) over networks with intermittent connectivity, where the goal is to learn a global statistic over the data samples localized across distributed nodes with the help of a central server. To mitigate the impact of intermittent links, nodes can collaborate with their neighbors to compute a local consensus, which they forward to the central server. In such a setup, the communications between any pair of nodes must satisfy local differential privacy constraints. We study the tradeoff between collaborative relaying and privacy leakage due to the additional data sharing among nodes and, subsequently, propose a novel differentially private collaborative algorithm for DME to achieve the optimal tradeoff. Finally, we present numerical simulations to substantiate our theoretical findings.

Differentially Private Community Detection for Stochastic Block Models

Jan 31, 2022

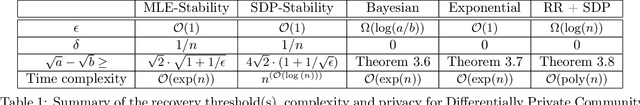

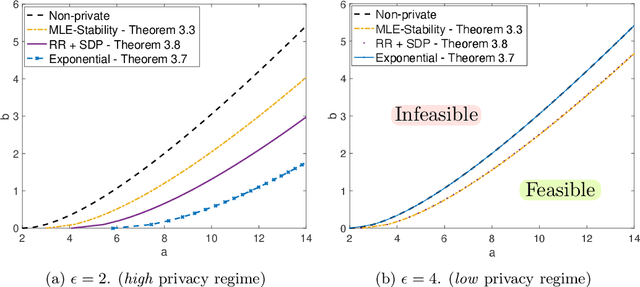

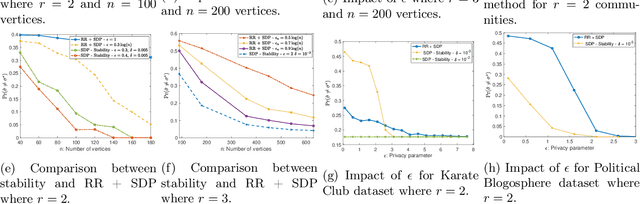

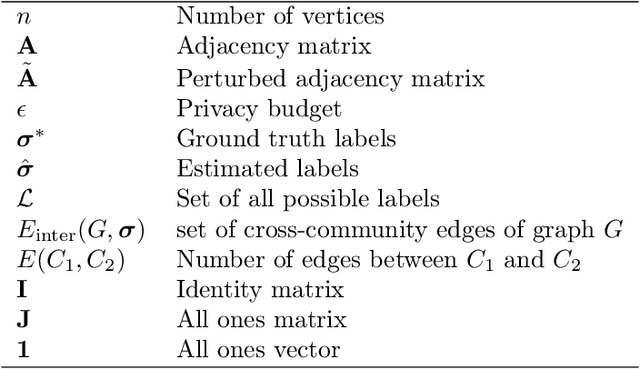

Abstract:The goal of community detection over graphs is to recover underlying labels/attributes of users (e.g., political affiliation) given the connectivity between users (represented by the adjacency matrix of a graph). There has been significant recent progress on understanding the fundamental limits of community detection when the graph is generated from a stochastic block model (SBM). Specifically, sharp information-theoretic limits and efficient algorithms have been obtained for SBMs as a function of $p$ and $q$, which represent the intra-community and inter-community connection probabilities. In this paper, we study the community detection problem while preserving the privacy of the individual connections (edges) between the vertices. Focusing on the notion of $(\epsilon, \delta)$-edge differential privacy (DP), we seek to understand the fundamental tradeoffs between $(p, q)$, DP budget $(\epsilon, \delta)$, and computational efficiency for exact recovery of the community labels. To this end, we present and analyze the associated information-theoretic tradeoffs for three broad classes of differentially private community recovery mechanisms: a) stability-based mechanisms; b) sampling-based mechanisms; and c) graph perturbation mechanisms. Our main findings are that stability-based and sampling-based mechanisms lead to a superior tradeoff between $(p,q)$ and the privacy budget $(\epsilon, \delta)$; however, this comes at the expense of higher computational complexity. On the other hand, despite their low complexity, graph perturbation mechanisms require the privacy budget $\epsilon$ to scale as $\Omega(\log(n))$ for exact recovery. To the best of our knowledge, this is the first work to study the impact of privacy constraints on the fundamental limits for community detection.
