Andrea Goldsmith

Exploiting Trust for Resilient Hypothesis Testing with Malicious Robots (evolved version)

Mar 07, 2023

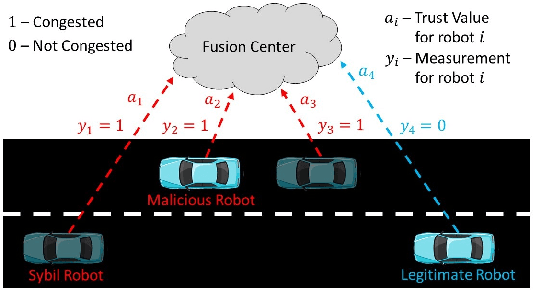

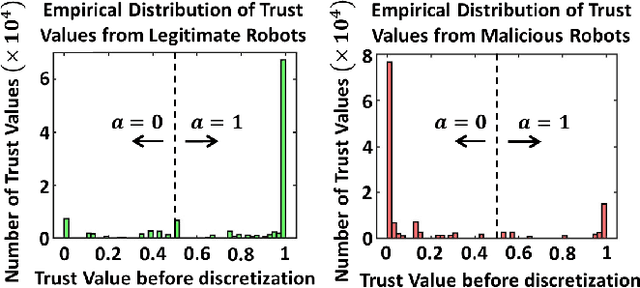

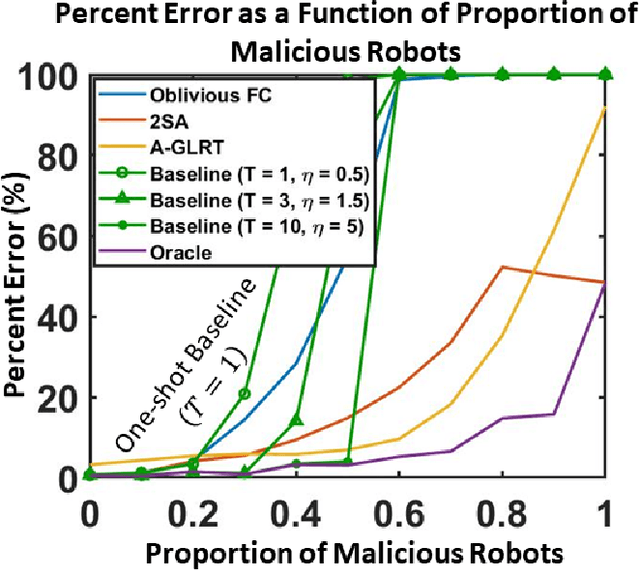

Abstract: We develop a resilient binary hypothesis testing framework for decision making in adversarial multi-robot crowdsensing tasks. This framework exploits stochastic trust observations between robots to arrive at tractable, resilient decision making at a centralized Fusion Center (FC) even when i) there exist malicious robots in the network and their number may be larger than the number of legitimate robots, and ii) the FC uses one-shot noisy measurements from all robots. We derive two algorithms to achieve this. The first is the Two Stage Approach (2SA) that estimates the legitimacy of robots based on received trust observations, and provably minimizes the probability of detection error in the worst-case malicious attack. Here, the proportion of malicious robots is known but arbitrary. For the case of an unknown proportion of malicious robots, we develop the Adversarial Generalized Likelihood Ratio Test (A-GLRT) that uses both the reported robot measurements and trust observations to estimate the trustworthiness of robots, their reporting strategy, and the correct hypothesis simultaneously. We exploit special problem structure to show that this approach remains computationally tractable despite several unknown problem parameters. We deploy both algorithms in a hardware experiment where a group of robots conducts crowdsensing of traffic conditions on a mock-up road network similar in spirit to Google Maps, subject to a Sybil attack. We extract the trust observations for each robot from actual communication signals which provide statistical information on the uniqueness of the sender. We show that even when the malicious robots are in the majority, the FC can reduce the probability of detection error to 30.5% and 29% for the 2SA and the A-GLRT respectively.

Exploiting Trust for Resilient Hypothesis Testing with Malicious Robots

Sep 25, 2022

Abstract: We develop a resilient binary hypothesis testing framework for decision making in adversarial multi-robot crowdsensing tasks. This framework exploits stochastic trust observations between robots to arrive at tractable, resilient decision making at a centralized Fusion Center (FC) even when i) there exist malicious robots in the network and their number may be larger than the number of legitimate robots, and ii) the FC uses one-shot noisy measurements from all robots. We derive two algorithms to achieve this. The first is the Two Stage Approach (2SA) that estimates the legitimacy of robots based on received trust observations, and provably minimizes the probability of detection error in the worst-case malicious attack. Here, the proportion of malicious robots is known but arbitrary. For the case of an unknown proportion of malicious robots, we develop the Adversarial Generalized Likelihood Ratio Test (A-GLRT) that uses both the reported robot measurements and trust observations to estimate the trustworthiness of robots, their reporting strategy, and the correct hypothesis simultaneously. We exploit special problem structure to show that this approach remains computationally tractable despite several unknown problem parameters. We deploy both algorithms in a hardware experiment where a group of robots conducts crowdsensing of traffic conditions on a mock-up road network similar in spirit to Google Maps, subject to a Sybil attack. We extract the trust observations for each robot from actual communication signals which provide statistical information on the uniqueness of the sender. We show that even when the malicious robots are in the majority, the FC can reduce the probability of detection error to 30.5% and 29% for the 2SA and the A-GLRT respectively.

Partner-Aware Algorithms in Decentralized Cooperative Bandit Teams

Oct 02, 2021

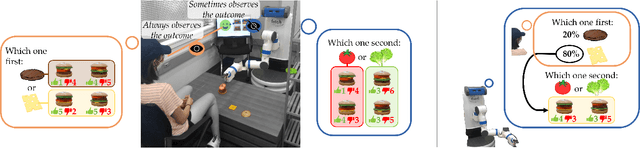

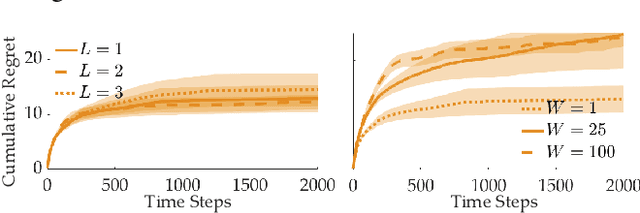

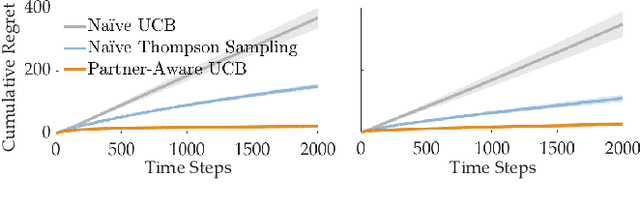

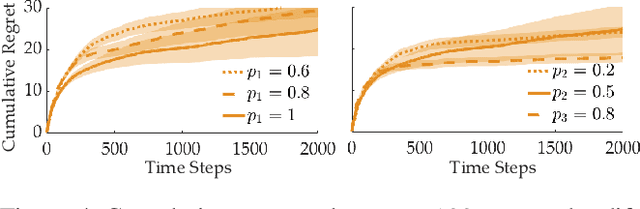

Abstract: When humans collaborate with each other, they often make decisions by observing others and considering the consequences that their actions may have on the entire team, instead of greedily doing what is best for just themselves. We would like our AI agents to effectively collaborate in a similar way by capturing a model of their partners. In this work, we propose and analyze a decentralized Multi-Armed Bandit (MAB) problem with coupled rewards as an abstraction of more general multi-agent collaboration. We demonstrate that naive extensions of single-agent optimal MAB algorithms fail when applied to decentralized bandit teams. Instead, we propose a Partner-Aware strategy for joint sequential decision-making that extends the well-known single-agent Upper Confidence Bound algorithm. We analytically show that our proposed strategy achieves logarithmic regret, and provide extensive experiments involving human-AI and human-robot collaboration to validate our theoretical findings. Our results show that the proposed partner-aware strategy outperforms other known methods, and our human subject studies suggest humans prefer to collaborate with AI agents implementing our partner-aware strategy.
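
For readers unfamiliar with the single-agent Upper Confidence Bound algorithm that the abstract says the Partner-Aware strategy extends, the following is a minimal sketch of the standard UCB1 rule on Bernoulli arms. It is not the paper's Partner-Aware algorithm, and it does not model coupled rewards or partners; the function name `ucb1`, the arm means, and the horizon are all illustrative assumptions.

```python
import math
import random


def ucb1(arm_means, horizon, seed=0):
    """Run standard single-agent UCB1 on simulated Bernoulli arms.

    arm_means: hypothetical success probabilities, one per arm.
    Returns the number of times each arm was pulled.
    """
    rng = random.Random(seed)
    k = len(arm_means)
    counts = [0] * k   # pulls per arm
    sums = [0.0] * k   # total reward per arm

    for t in range(1, horizon + 1):
        if t <= k:
            # Pull every arm once so each empirical mean is defined.
            arm = t - 1
        else:
            # Pick the arm with the largest mean-plus-exploration-bonus index.
            arm = max(
                range(k),
                key=lambda a: sums[a] / counts[a]
                + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
    return counts


if __name__ == "__main__":
    # Two hypothetical arms; the second has the higher mean reward.
    print(ucb1([0.2, 0.8], horizon=2000))
```

The exploration bonus shrinks as an arm is pulled more often, so pulls concentrate on the arm with the higher empirical mean while every arm is still sampled occasionally.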

Characterizing Trust and Resilience in Distributed Consensus for Cyberphysical Systems

Mar 09, 2021

Abstract: This work considers the problem of resilient consensus where stochastic values of trust between agents are available. Specifically, we derive a unified mathematical framework to characterize convergence, deviation of the consensus from the true consensus value, and expected convergence rate, when there exists additional information about trust between agents. We show that under certain conditions on the stochastic trust values and consensus protocol: 1) almost sure convergence to a common limit value is possible even when malicious agents constitute more than half of the network connectivity, 2) the deviation of the converged limit from the case where there is no attack, i.e., the true consensus value, can be bounded with probability that approaches 1 exponentially, and 3) correct classification of malicious and legitimate agents can be attained in finite time almost surely. Further, the expected convergence rate decays exponentially with the quality of the trust observations between agents.

Bayesian Algorithms for Decentralized Stochastic Bandits

Oct 28, 2020

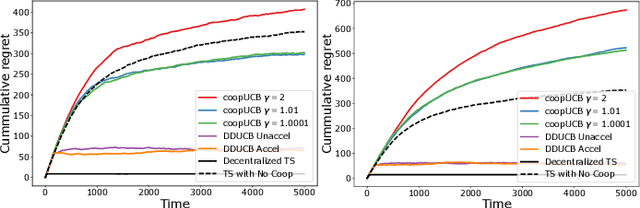

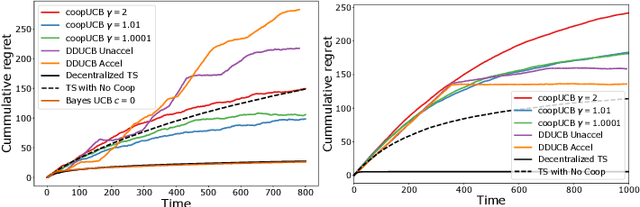

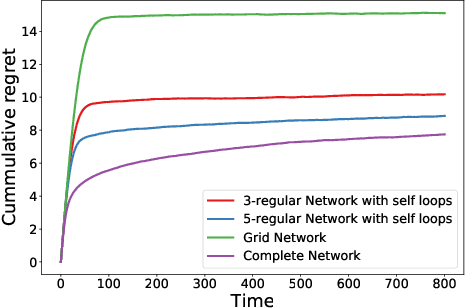

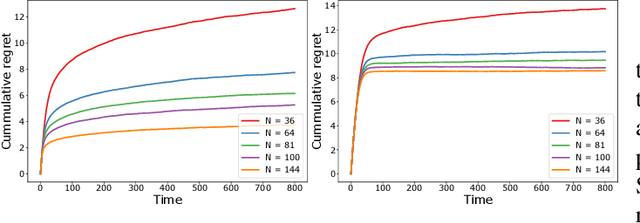

Abstract: We study a decentralized cooperative multi-agent multi-armed bandit problem with $K$ arms and $N$ agents connected over a network. In our model, each arm's reward distribution is the same for all agents, and rewards are drawn independently across agents and over time steps. In each round, agents choose an arm to play and subsequently send a message to their neighbors. The goal is to minimize cumulative regret averaged over the entire network. We propose a decentralized Bayesian multi-armed bandit framework that extends single-agent Bayesian bandit algorithms to the decentralized setting. Specifically, we study an information assimilation algorithm that can be combined with existing Bayesian algorithms, and using this, we propose a decentralized Thompson Sampling algorithm and a decentralized Bayes-UCB algorithm. We analyze the decentralized Thompson Sampling algorithm under Bernoulli rewards and establish a problem-dependent upper bound on the cumulative regret. We show that the regret incurred scales logarithmically over the time horizon with constants that match those of an optimal centralized agent with access to all observations across the network. Our analysis also characterizes the cumulative regret in terms of the network structure. Through extensive numerical studies, we show that our extensions of Thompson Sampling and Bayes-UCB incur lower cumulative regret than the state-of-the-art algorithms inspired by the Upper Confidence Bound algorithm. We implement our proposed decentralized Thompson Sampling under a gossip protocol and over time-varying networks, where each communication link has a fixed probability of failure.
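
As background for the single-agent Bayesian algorithms that the abstract says the decentralized framework extends, here is a minimal sketch of standard Thompson Sampling with Bernoulli rewards and Beta(1, 1) priors. It covers only the single-agent baseline: the paper's information assimilation step, the network, and message passing are not modeled. The function name `thompson_bernoulli`, the arm means, and the horizon are illustrative assumptions.

```python
import random


def thompson_bernoulli(arm_means, horizon, seed=0):
    """Run single-agent Thompson Sampling on simulated Bernoulli arms.

    arm_means: hypothetical success probabilities, one per arm.
    Returns the number of times each arm was pulled.
    """
    rng = random.Random(seed)
    k = len(arm_means)
    alpha = [1] * k  # Beta posterior parameter: 1 + observed successes
    beta = [1] * k   # Beta posterior parameter: 1 + observed failures
    pulls = [0] * k

    for _ in range(horizon):
        # Draw one plausible mean per arm from its current posterior.
        samples = [rng.betavariate(alpha[a], beta[a]) for a in range(k)]
        # Play the arm whose sampled mean is largest.
        arm = max(range(k), key=samples.__getitem__)
        reward = 1 if rng.random() < arm_means[arm] else 0
        # Conjugate Beta-Bernoulli posterior update.
        alpha[arm] += reward
        beta[arm] += 1 - reward
        pulls[arm] += 1
    return pulls


if __name__ == "__main__":
    # Two hypothetical arms; the second has the higher mean reward.
    print(thompson_bernoulli([0.2, 0.8], horizon=2000))
```

In a decentralized variant, each agent would additionally fold information received from its neighbors into its posterior counts; how that assimilation is done is specific to the paper and is not reproduced here.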

Construction of Polar Codes with Reinforcement Learning

Sep 19, 2020

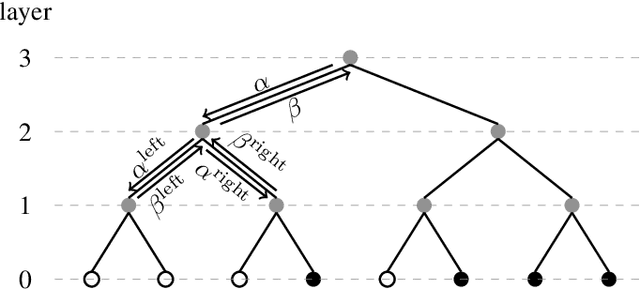

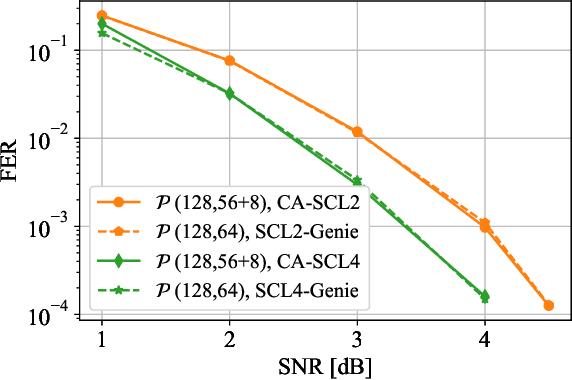

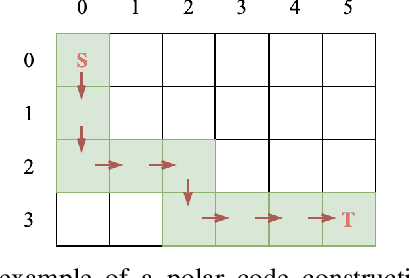

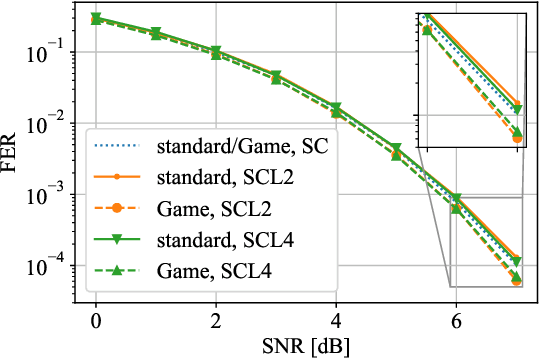

Abstract: This paper formulates the polar-code construction problem for the successive-cancellation list (SCL) decoder as a maze-traversing game, which can be solved by reinforcement learning techniques. The proposed method provides a novel technique for polar-code construction that no longer depends on sorting and selecting bit-channels by reliability. Instead, this technique decides whether the input bits should be frozen in a purely sequential manner. We establish the equivalence between optimizing the polar-code construction for the SCL decoder under this technique and maximizing the expected reward of traversing a maze. Simulation results show that the standard polar-code constructions that are designed for the successive-cancellation decoder are no longer optimal for the SCL decoder with respect to the frame error rate. In contrast, the simulations show that, with a reasonable amount of training, the game-based construction method finds code constructions that have a lower frame error rate for various code lengths and decoders compared to standard constructions.

Distributed Convex Optimization With Limited Communications

Oct 29, 2018

Abstract: In this paper, a distributed convex optimization algorithm, termed the *distributed coordinate dual averaging* (DCDA) algorithm, is proposed. The DCDA algorithm addresses the scenario of a large distributed optimization problem with limited communication among nodes in the network. Currently known distributed subgradient methods, such as the distributed dual averaging or the distributed alternating direction method of multipliers algorithms, assume that nodes can exchange messages of large cardinality. Such network communication capabilities are not available in many scenarios of practical relevance. In the DCDA algorithm, on the other hand, communication of each coordinate of the optimization variable is restricted over time. For the proposed algorithm, we bound the rate of convergence under different communication protocols and network architectures. We also consider the extensions to the case of imperfect gradient knowledge and the case in which transmitted messages are corrupted by additive noise or are quantized. Relevant numerical simulations are also provided.

Neural Network Detection of Data Sequences in Communication Systems

Aug 28, 2018

Abstract: We consider detection based on deep learning, and show it is possible to train detectors that perform well without any knowledge of the underlying channel models. Moreover, when the channel model is known, we demonstrate that it is possible to train detectors that do not require channel state information (CSI). In particular, a technique we call a sliding bidirectional recurrent neural network (SBRNN) is proposed for detection where, after training, the detector estimates the data in real-time as the signal stream arrives at the receiver. We evaluate this algorithm, as well as other neural network (NN) architectures, using the Poisson channel model, which is applicable to both optical and molecular communication systems. In addition, we evaluate the performance of this detection method applied to data sent over a molecular communication platform, where the channel is difficult to model analytically. We show that SBRNN is computationally efficient, and can perform detection under various channel conditions without knowing the underlying channel model. We also demonstrate that the bit error rate (BER) performance of the proposed SBRNN detector is better than that of a Viterbi detector with imperfect CSI as well as that of other NN detectors that have been previously proposed. Finally, we show that the SBRNN can perform well in rapidly changing channels, where the coherence time is on the order of a single symbol duration.

Sliding Bidirectional Recurrent Neural Networks for Sequence Detection in Communication Systems

Feb 19, 2018

Abstract: The design and analysis of communication systems typically rely on the development of mathematical models that describe the underlying communication channel. However, in some systems, such as molecular communication systems where chemical signals are used for transfer of information, the underlying channel models are unknown. In these scenarios, a completely new approach to design and analysis is required. In this work, we focus on one important aspect of communication systems, the detection algorithms, and demonstrate that by using tools from deep learning, it is possible to train detectors that perform well without any knowledge of the underlying channel models. We propose a technique we call sliding bidirectional recurrent neural network (SBRNN) for real-time sequence detection. We evaluate this algorithm using experimental data collected by a chemical communication platform, where the channel is unknown and difficult to model analytically. We show that deep learning algorithms perform significantly better than a detector proposed in previous works, and the SBRNN outperforms other techniques considered in this work.

Deep Learning for Joint Source-Channel Coding of Text

Feb 19, 2018

Abstract: We consider the problem of joint source and channel coding of structured data such as natural language over a noisy channel. The typical approach to this problem in both theory and practice involves performing source coding to first compress the text and then channel coding to add robustness for the transmission across the channel. This approach is optimal in terms of minimizing end-to-end distortion with arbitrarily large block lengths of both the source and channel codes when transmission is over discrete memoryless channels. However, the optimality of this approach is no longer ensured for documents of finite length and under limitations on the length of the encoding. We show that in this scenario we can achieve lower word error rates by developing a deep learning based encoder and decoder. While the approach of separate source and channel coding would minimize bit error rates, our approach preserves semantic information of sentences by first embedding sentences in a semantic space where sentences closer in meaning are located closer together, and then performing joint source and channel coding on these embeddings.
