Md. Mostofa Ali Patwary

Deep Learning Scaling is Predictable, Empirically

Dec 01, 2017

Abstract: Deep learning (DL) creates impactful advances following a virtuous recipe: model architecture search, creating large training data sets, and scaling computation. It is widely believed that growing training sets and models should improve accuracy and result in better products. As DL application domains grow, we would like a deeper understanding of the relationships between training set size, computational scale, and model accuracy improvements to advance the state-of-the-art. This paper presents a large scale empirical characterization of generalization error and model size growth as training sets grow. We introduce a methodology for this measurement and test four machine learning domains: machine translation, language modeling, image processing, and speech recognition. Our empirical results show power-law generalization error scaling across a breadth of factors, resulting in power-law exponents (the "steepness" of the learning curve) yet to be explained by theoretical work. Further, model improvements only shift the error but do not appear to affect the power-law exponent. We also show that model size scales sublinearly with data size. These scaling relationships have significant implications for deep learning research, practice, and systems. They can assist model debugging, setting accuracy targets, and decisions about data set growth. They can also guide computing system design and underscore the importance of continued computational scaling.
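To make the power-law claim concrete, the following is a minimal sketch of how a learning curve of the form error ≈ alpha * m^beta (with m the training set size and beta negative) might be fitted and extrapolated. The function names `fit_power_law` and `predict_error`, and the use of a log-log least-squares fit, are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def fit_power_law(train_sizes, errors):
    """Fit errors ~ alpha * train_sizes**beta by least squares in log-log space.

    Hypothetical helper: a power law is a straight line in log-log space,
    so an ordinary degree-1 polynomial fit recovers (log alpha, beta).
    """
    log_m = np.log(np.asarray(train_sizes, dtype=float))
    log_e = np.log(np.asarray(errors, dtype=float))
    beta, log_alpha = np.polyfit(log_m, log_e, 1)  # slope first, then intercept
    return float(np.exp(log_alpha)), float(beta)

def predict_error(alpha, beta, train_size):
    """Extrapolate the fitted curve to a new training set size."""
    return alpha * float(train_size) ** beta
```

With a fit like this, a steeper (more negative) `beta` means each doubling of the data buys a larger relative reduction in error, which is the sense in which the abstract calls the exponent the "steepness" of the learning curve.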

A New Parallel Algorithm for Two-Pass Connected Component Labeling

Jun 20, 2016

Abstract: Connected Component Labeling (CCL) is an important step in pattern recognition and image processing. It assigns labels to the pixels such that adjacent pixels sharing the same features are assigned the same label. Typically, CCL requires several passes over the data. We focus on the two-pass technique, where each pixel is given a provisional label in the first pass and an actual label is assigned in the second pass. We present a scalable parallel two-pass CCL algorithm, called PAREMSP, which employs a scan strategy and the best union-find technique called REMSP, which uses REM's algorithm for storing label equivalence information of pixels in a 2-D image. In the first pass, we divide the image among threads and each thread runs the scan phase along with REMSP simultaneously. In the second pass, we assign the final labels to the pixels. As REMSP is easily parallelizable, we use the parallel version of REMSP for merging the pixels on the boundary. Our experiments show the scalability of PAREMSP, achieving speedups of up to $20.1$ using $24$ cores on a shared memory architecture using OpenMP for an image of size $465.20$ MB. We find that our proposed parallel algorithm achieves linear scaling for a large resolution fixed problem size as the number of processing elements is increased. Additionally, the parallel algorithm does not make use of any hardware specific routines, and thus is highly portable.
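The two-pass idea can be illustrated with a small sequential sketch. This is not PAREMSP itself: it runs on a single thread, assumes a binary image with 4-connectivity, and uses a simplified scan that only inspects the upper and left neighbours. The `merge` function is written in the style of Rem's union-find with splicing, keeping the smaller label as the parent; the names `merge` and `label_components` are hypothetical.

```python
def merge(parent, x, y):
    """Union the sets containing x and y, Rem-style with splicing.

    Invariant: parent[i] <= i, so roots are the smallest index on their path.
    Returns a label that belongs to the merged set.
    """
    root_x, root_y = x, y
    while parent[root_x] != parent[root_y]:
        if parent[root_x] > parent[root_y]:
            if root_x == parent[root_x]:          # root_x is a root: link it
                parent[root_x] = parent[root_y]
                return parent[root_x]
            nxt = parent[root_x]                  # splice and climb
            parent[root_x] = parent[root_y]
            root_x = nxt
        else:
            if root_y == parent[root_y]:          # root_y is a root: link it
                parent[root_y] = parent[root_x]
                return parent[root_x]
            nxt = parent[root_y]
            parent[root_y] = parent[root_x]
            root_y = nxt
    return parent[root_x]

def label_components(image):
    """Two-pass labeling of a binary image given as a list of rows."""
    rows = len(image)
    cols = len(image[0]) if rows else 0
    labels = [[0] * cols for _ in range(rows)]
    parent = [0]                                  # label 0 is the background

    # First pass: provisional labels plus recorded equivalences.
    for r in range(rows):
        for c in range(cols):
            if not image[r][c]:
                continue
            up = labels[r - 1][c] if r > 0 else 0
            left = labels[r][c - 1] if c > 0 else 0
            if up and left:
                labels[r][c] = merge(parent, up, left)
            elif up or left:
                labels[r][c] = up or left
            else:
                parent.append(len(parent))        # new provisional label
                labels[r][c] = len(parent) - 1

    # Flatten: parents have smaller indices, so they are already final.
    next_label = 1
    for i in range(1, len(parent)):
        if parent[i] < i:
            parent[i] = parent[parent[i]]
        else:
            parent[i] = next_label
            next_label += 1

    # Second pass: replace provisional labels with final ones.
    for r in range(rows):
        for c in range(cols):
            labels[r][c] = parent[labels[r][c]]
    return labels, next_label - 1
```

In a parallel variant along the lines the abstract describes, each thread would run the first pass on its own block of rows and the equivalences along block boundaries would be merged afterwards; that step is omitted here.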

Scalable Bayesian Optimization Using Deep Neural Networks

Jul 13, 2015

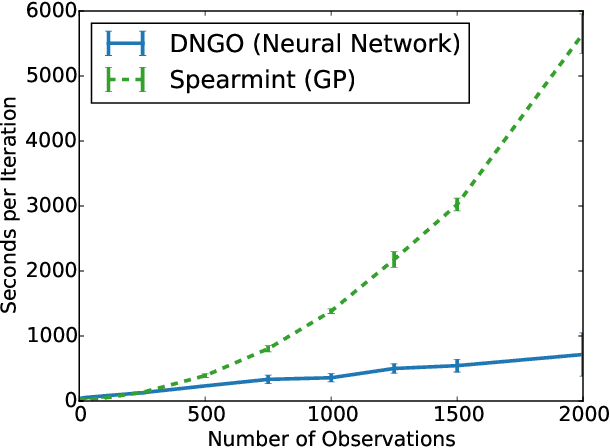

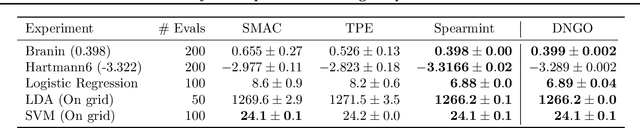

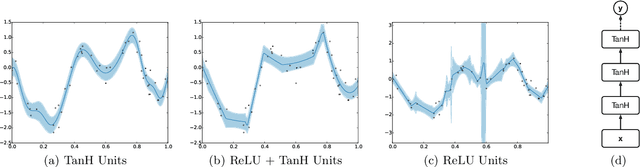

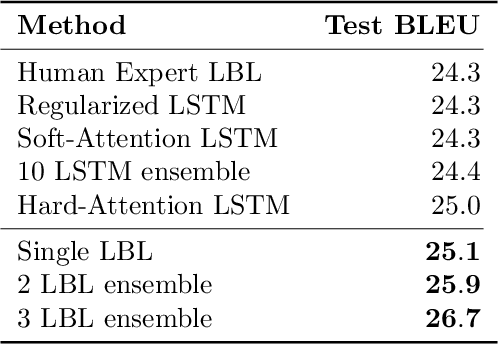

Abstract: Bayesian optimization is an effective methodology for the global optimization of functions with expensive evaluations. It relies on querying a distribution over functions defined by a relatively cheap surrogate model. An accurate model for this distribution over functions is critical to the effectiveness of the approach, and is typically fit using Gaussian processes (GPs). However, since GPs scale cubically with the number of observations, it has been challenging to handle objectives whose optimization requires many evaluations and, as a result, to massively parallelize the optimization. In this work, we explore the use of neural networks as an alternative to GPs to model distributions over functions. We show that performing adaptive basis function regression with a neural network as the parametric form performs competitively with state-of-the-art GP-based approaches, but scales linearly with the number of observations rather than cubically. This allows us to achieve a previously intractable degree of parallelism, which we apply to large scale hyperparameter optimization, rapidly finding competitive models on benchmark object recognition tasks using convolutional networks, and image caption generation using neural language models.
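The linear scaling can be seen in a minimal sketch of Bayesian linear regression on top of fixed basis functions. Here the basis is a random tanh layer standing in for the last hidden layer of a trained network, which is an assumption made purely for illustration; the names `basis`, `fit_blr`, and `predict`, and the precision hyperparameters `alpha` (prior) and `beta` (noise), are likewise hypothetical and not taken from the paper.

```python
import numpy as np

def basis(x, W, b):
    """Map inputs (N x d) to D basis features; stand-in for a network's last hidden layer."""
    return np.tanh(x @ W + b)

def fit_blr(Phi, y, alpha=1.0, beta=100.0):
    """Posterior over linear weights given features Phi (N x D) and targets y (N,).

    The only N-dependent work is forming Phi.T @ Phi and Phi.T @ y,
    which costs O(N * D^2); the solve is O(D^3), independent of N.
    """
    D = Phi.shape[1]
    A = beta * Phi.T @ Phi + alpha * np.eye(D)    # D x D posterior precision
    m = beta * np.linalg.solve(A, Phi.T @ y)      # posterior mean of the weights
    return m, A

def predict(Phi_star, m, A, beta=100.0):
    """Predictive mean and variance at new feature rows Phi_star (M x D)."""
    mean = Phi_star @ m
    solved = np.linalg.solve(A, Phi_star.T).T     # rows are A^{-1} phi
    var = np.sum(Phi_star * solved, axis=1) + 1.0 / beta
    return mean, var
```

The predictive mean and variance returned by `predict` are what an acquisition function would consume in a Bayesian optimization loop; because the matrix being inverted is D x D rather than N x N, the cost grows linearly with the number of observations, in contrast to the cubic cost of an exact GP.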
