Mattes Mollenhauer

Float8@2bits: Entropy Coding Enables Data-Free Model Compression

Jan 30, 2026

Abstract:Post-training compression is currently divided into two contrasting regimes. On the one hand, fast, data-free, and model-agnostic methods (e.g., NF4 or HQQ) offer maximum accessibility but suffer from functional collapse at extreme bit-rates below 4 bits. On the other hand, techniques leveraging calibration data or extensive recovery training achieve superior fidelity but impose high computational constraints and face uncertain robustness under data distribution shifts. We introduce EntQuant, the first framework to unite the advantages of these distinct paradigms. By matching the performance of data-dependent methods with the speed and universality of data-free techniques, EntQuant enables practical utility in the extreme compression regime. Our method decouples numerical precision from storage cost via entropy coding, compressing a 70B parameter model in less than 30 minutes. We demonstrate that EntQuant not only achieves state-of-the-art results on standard evaluation sets and models, but also retains functional performance on more complex benchmarks with instruction-tuned models, all at modest inference overhead.
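The idea that entropy coding decouples numerical precision from storage cost can be illustrated with a small sketch. This is not EntQuant itself: it assumes a plain uniform 8-bit quantizer (the hypothetical `quantize_uniform`, standing in for a Float8 grid) and synthetic Gaussian weights. It only measures the empirical Shannon entropy of the quantized symbols, which lower-bounds the average bits per weight needed by an ideal entropy coder that treats symbols independently.

```python
import numpy as np

def empirical_entropy_bits(symbols: np.ndarray) -> float:
    """Shannon entropy (bits per symbol) of the empirical symbol distribution."""
    _, counts = np.unique(symbols, return_counts=True)
    probs = counts / counts.sum()
    return float(-(probs * np.log2(probs)).sum())

def quantize_uniform(weights: np.ndarray, step: float) -> np.ndarray:
    """Hypothetical quantizer: round to integer multiples of `step`, clipped to the int8 range."""
    return np.clip(np.round(weights / step), -128, 127).astype(np.int8)

# Synthetic stand-in for a weight tensor (assumption: roughly Gaussian weights).
rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.02, size=100_000)

# Every symbol nominally occupies 8 bits, but a coarser grid concentrates the
# distribution on fewer values, so the entropy (and thus the coded size) drops.
for step in (0.002, 0.01, 0.03):
    q = quantize_uniform(weights, step)
    print(f"step={step}: nominal 8 bits, entropy ~ {empirical_entropy_bits(q):.2f} bits/weight")
```

In this toy setting the storage format stays fixed at 8 bits per symbol while the achievable compressed size is governed by the entropy of the symbol distribution.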

Regularized least squares learning with heavy-tailed noise is minimax optimal

May 20, 2025

Abstract:This paper examines the performance of ridge regression in reproducing kernel Hilbert spaces in the presence of noise that exhibits a finite number of higher moments. We establish excess risk bounds consisting of subgaussian and polynomial terms based on the well-known integral operator framework. The dominant subgaussian component makes it possible to achieve convergence rates that have previously only been derived under subexponential noise - a prevalent assumption in related work from the last two decades. These rates are optimal under standard eigenvalue decay conditions, demonstrating the asymptotic robustness of regularized least squares against heavy-tailed noise. Our derivations are based on a Fuk-Nagaev inequality for Hilbert-space valued random variables.

Choose Your Model Size: Any Compression by a Single Gradient Descent

Feb 03, 2025

Abstract:The adoption of Foundation Models in resource-constrained environments remains challenging due to their large size and inference costs. A promising way to overcome these limitations is post-training compression, which aims to balance reduced model size against performance degradation. This work presents Any Compression via Iterative Pruning (ACIP), a novel algorithmic approach to determine a compression-performance trade-off from a single stochastic gradient descent run. To ensure parameter efficiency, we use an SVD-reparametrization of linear layers and iteratively prune their singular values with a sparsity-inducing penalty. The resulting pruning order gives rise to a global parameter ranking that allows us to materialize models of any target size. Importantly, the compressed models exhibit strong predictive downstream performance without the need for costly fine-tuning. We evaluate ACIP on a large selection of open-weight LLMs and tasks, and demonstrate state-of-the-art results compared to existing factorisation-based compression methods. We also show that ACIP seamlessly complements common quantization-based compression techniques.
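The SVD reparametrization of a linear layer mentioned in the abstract can be sketched as follows. This is a simplified illustration, not the ACIP algorithm: instead of learning a pruning order with a sparsity-inducing penalty during gradient descent, it simply drops the smallest singular values of a random matrix. The helper name `truncate_linear_layer` and the matrix sizes are assumptions made for the example.

```python
import numpy as np

def truncate_linear_layer(weight: np.ndarray, rank: int):
    """Return factors (a, b) such that a @ b is the best rank-`rank` approximation of `weight`."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # scale the retained left singular vectors
    b = vt[:rank, :]            # retained right singular vectors
    return a, b

# Random stand-in for the weight matrix of a linear layer (assumption).
rng = np.random.default_rng(0)
weight = rng.normal(size=(64, 48))

# Keeping fewer singular values trades reconstruction error for parameter count.
for rank in (48, 24, 8):
    a, b = truncate_linear_layer(weight, rank)
    rel_err = np.linalg.norm(weight - a @ b) / np.linalg.norm(weight)
    n_params = a.size + b.size
    print(f"rank={rank}: params={n_params} (dense {weight.size}), relative error={rel_err:.3f}")
```

Note that the factorized form only saves parameters once the retained rank is small enough; at full rank the two factors together hold more entries than the dense matrix.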

Optimal Rates for Vector-Valued Spectral Regularization Learning Algorithms

May 23, 2024

Abstract:We study theoretical properties of a broad class of regularized algorithms with vector-valued output. These spectral algorithms include kernel ridge regression, kernel principal component regression, various implementations of gradient descent and many more. Our contributions are twofold. First, we rigorously confirm the so-called saturation effect for ridge regression with vector-valued output by deriving a novel lower bound on learning rates; this bound is shown to be suboptimal when the smoothness of the regression function exceeds a certain level. Second, we present an upper bound for the finite-sample risk of general vector-valued spectral algorithms, applicable to both well-specified and misspecified scenarios (where the true regression function lies outside of the hypothesis space), which is minimax optimal in various regimes. All of our results explicitly allow the case of infinite-dimensional output variables, proving consistency of recent practical applications.

Towards Optimal Sobolev Norm Rates for the Vector-Valued Regularized Least-Squares Algorithm

Dec 13, 2023

Abstract:We present the first optimal rates for infinite-dimensional vector-valued ridge regression on a continuous scale of norms that interpolate between $L_2$ and the hypothesis space, which we consider as a vector-valued reproducing kernel Hilbert space. These rates allow us to treat the misspecified case in which the true regression function is not contained in the hypothesis space. We combine standard assumptions on the capacity of the hypothesis space with a novel tensor product construction of vector-valued interpolation spaces in order to characterize the smoothness of the regression function. Our upper bound not only attains the same rate as real-valued kernel ridge regression, but also removes the assumption that the target regression function is bounded. For the lower bound, we reduce the problem to the scalar setting using a projection argument. We show that these rates are optimal in most cases and independent of the dimension of the output space. We illustrate our results for the special case of vector-valued Sobolev spaces.

Learning linear operators: Infinite-dimensional regression as a well-behaved non-compact inverse problem

Nov 16, 2022

Abstract:We consider the problem of learning a linear operator $\theta$ between two Hilbert spaces from empirical observations, which we interpret as least squares regression in infinite dimensions. We show that this goal can be reformulated as an inverse problem for $\theta$ with the undesirable feature that its forward operator is generally non-compact (even if $\theta$ is assumed to be compact or of $p$-Schatten class). However, we prove that, in terms of spectral properties and regularisation theory, this inverse problem is equivalent to the known compact inverse problem associated with scalar response regression. Our framework allows for the elegant derivation of dimension-free rates for generic learning algorithms under Hölder-type source conditions. The proofs rely on the combination of techniques from kernel regression with recent results on concentration of measure for sub-exponential Hilbertian random variables. The obtained rates hold for a variety of practically-relevant scenarios in functional regression as well as nonlinear regression with operator-valued kernels and match those of classical kernel regression with scalar response.

Optimal Rates for Regularized Conditional Mean Embedding Learning

Aug 02, 2022

Abstract:We address the consistency of a kernel ridge regression estimate of the conditional mean embedding (CME), which is an embedding of the conditional distribution of $Y$ given $X$ into a target reproducing kernel Hilbert space $\mathcal{H}_Y$. The CME allows us to take conditional expectations of target RKHS functions, and has been employed in nonparametric causal and Bayesian inference. We address the misspecified setting, where the target CME is in the space of Hilbert-Schmidt operators acting from an input interpolation space between $\mathcal{H}_X$ and $L_2$, to $\mathcal{H}_Y$. This space of operators is shown to be isomorphic to a newly defined vector-valued interpolation space. Using this isomorphism, we derive a novel and adaptive statistical learning rate for the empirical CME estimator under the misspecified setting. Our analysis reveals that our rates match the optimal $O(\log n / n)$ rates without assuming $\mathcal{H}_Y$ to be finite dimensional. We further establish a lower bound on the learning rate, which shows that the obtained upper bound is optimal.
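The kernel ridge regression estimate of the CME mentioned above can be sketched in a few lines. The snippet uses the standard form in which $\mathbb{E}[f(Y) \mid X = x]$ is approximated by $\sum_i \beta_i(x) f(y_i)$ with $\beta(x) = (K + n\lambda I)^{-1} k_X(x)$. The Gaussian kernel, the bandwidth, the regularization value, the synthetic data, and the helper names are all assumptions chosen for illustration, and the test functions $f(y) = y$ and $f(y) = y^2$ are used only because they are easy to check.

```python
import numpy as np

def gaussian_kernel(a: np.ndarray, b: np.ndarray, bandwidth: float) -> np.ndarray:
    """Gaussian kernel matrix between two sets of 1-D inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return np.exp(-sq_dist / (2.0 * bandwidth ** 2))

def cme_weights(x_train, x_query, reg, bandwidth):
    """Ridge weights beta(x) = (K + n*reg*I)^{-1} k_X(x), one column per query point."""
    n = x_train.shape[0]
    gram = gaussian_kernel(x_train, x_train, bandwidth)
    k_query = gaussian_kernel(x_train, x_query, bandwidth)
    return np.linalg.solve(gram + n * reg * np.eye(n), k_query)

# Synthetic data (assumption): Y = sin(X) + small Gaussian noise.
rng = np.random.default_rng(0)
x = rng.uniform(-2.0, 2.0, size=300)
y = np.sin(x) + 0.1 * rng.normal(size=300)

x_query = np.array([-1.0, 0.0, 1.0])
beta = cme_weights(x, x_query, reg=1e-3, bandwidth=0.5)

# Conditional expectations of test functions are weighted sums over training outputs.
cond_mean = beta.T @ y                  # estimate of E[Y | X = x]
cond_second_moment = beta.T @ (y ** 2)  # estimate of E[Y^2 | X = x]
print(cond_mean, cond_second_moment)
```

The same weight matrix `beta` is reused for every test function, which reflects the point that the CME encodes the whole conditional distribution rather than a single regression target.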

Nonparametric approximation of conditional expectation operators

Jan 07, 2021

Abstract:Given the joint distribution of two random variables $X,Y$ on some second countable locally compact Hausdorff space, we investigate the statistical approximation of the $L^2$-operator defined by $[Pf](x) := \mathbb{E}[ f(Y) \mid X = x ]$ under minimal assumptions. By modifying its domain, we prove that $P$ can be arbitrarily well approximated in operator norm by Hilbert-Schmidt operators acting on a reproducing kernel Hilbert space. This fact allows us to estimate $P$ uniformly by finite-rank operators over a dense subspace even when $P$ is not compact. In terms of modes of convergence, we thereby obtain the superiority of kernel-based techniques over classically used parametric projection approaches such as Galerkin methods. This also provides a novel perspective on which limiting object the nonparametric estimate of $P$ converges to. As an application, we show that these results are particularly important for a large family of spectral analysis techniques for Markov transition operators. Our investigation also gives a new asymptotic perspective on the so-called kernel conditional mean embedding, which is the theoretical foundation of a wide variety of techniques in kernel-based nonparametric inference.

Kernel autocovariance operators of stationary processes: Estimation and convergence

Apr 02, 2020

Abstract:We consider autocovariance operators of a stationary stochastic process on a Polish space that is embedded into a reproducing kernel Hilbert space. We investigate how empirical estimates of these operators converge along realizations of the process under various conditions. In particular, we examine ergodic and strongly mixing processes and prove several asymptotic results as well as finite sample error bounds with a detailed analysis for the Gaussian kernel. We provide applications of our theory in terms of consistency results for kernel PCA with dependent data and the conditional mean embedding of transition probabilities. Finally, we use our approach to examine the nonparametric estimation of Markov transition operators and highlight how our theory can give a consistency analysis for a large family of spectral analysis methods including kernel-based dynamic mode decomposition.

Kernel Conditional Density Operators

May 27, 2019

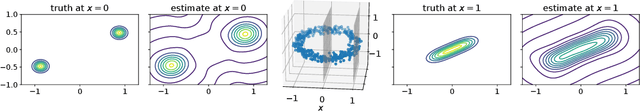

Abstract:We introduce a conditional density estimation model termed the conditional density operator. It naturally captures multivariate, multimodal output densities and is competitive with recent neural conditional density models and Gaussian processes. To derive the model, we propose a novel approach to the reconstruction of probability densities from their kernel mean embeddings by drawing connections to estimation of Radon-Nikodym derivatives in the reproducing kernel Hilbert space (RKHS). We prove finite sample error bounds which are independent of problem dimensionality. Furthermore, the resulting conditional density model is applied to real-world data and we demonstrate its versatility and competitive performance.
