Massimiliano de Leoni

Exploring LLM Features in Predictive Process Monitoring for Small-Scale Event-Logs

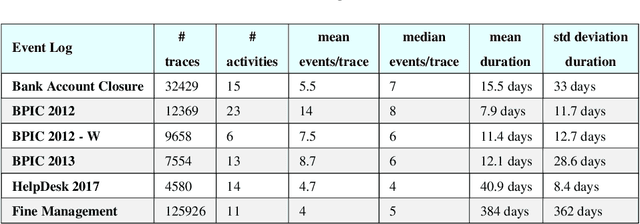

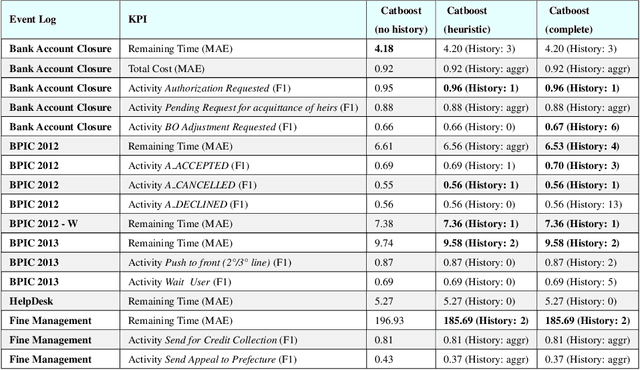

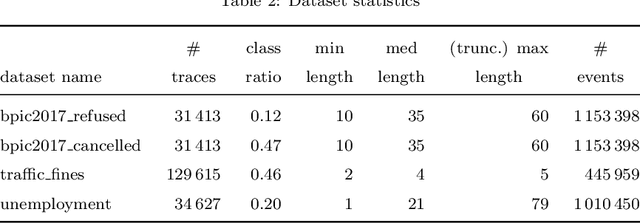

Jan 16, 2026

Abstract:Predictive Process Monitoring is a branch of process mining that aims to predict the outcome of an ongoing process. Recently, it has leveraged machine learning and deep learning architectures. In this paper, we extend our prior LLM-based Predictive Process Monitoring framework, which was initially focused on total time prediction via prompting. The extension consists of comprehensively evaluating its generality, semantic leverage, and reasoning mechanisms, also across multiple Key Performance Indicators. Empirical evaluations conducted on three distinct event logs and across the Key Performance Indicators of Total Time and Activity Occurrence prediction indicate that, in data-scarce settings with only 100 traces, the LLM surpasses the benchmark methods. Furthermore, the experiments also show that the LLM exploits both its embodied prior knowledge and the internal correlations among training traces. Finally, we examine the reasoning strategies employed by the model, demonstrating that the LLM does not merely replicate existing predictive methods but performs higher-order reasoning to generate the predictions.

Online Discovery of Simulation Models for Evolving Business Processes (Extended Version)

Jun 11, 2025

Abstract:Business Process Simulation (BPS) refers to techniques designed to replicate the dynamic behavior of a business process. Many approaches have been proposed to automatically discover simulation models from historical event logs, reducing the cost and time to manually design them. However, in dynamic business environments, organizations continuously refine their processes to enhance efficiency, reduce costs, and improve customer satisfaction. Existing techniques for process simulation discovery lack adaptability to real-time operational changes. In this paper, we propose a streaming process simulation discovery technique that integrates Incremental Process Discovery with Online Machine Learning methods. This technique prioritizes recent data while preserving historical information, ensuring adaptation to evolving process dynamics. Experiments conducted on four different event logs demonstrate the importance in simulation of giving more weight to recent data while retaining historical knowledge. Our technique not only produces more stable simulations but also exhibits robustness in handling concept drift, as highlighted in one of the use cases.

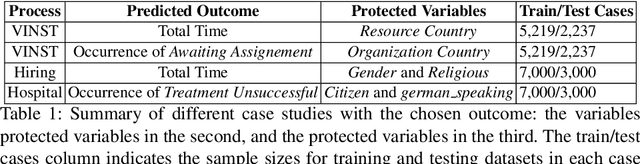

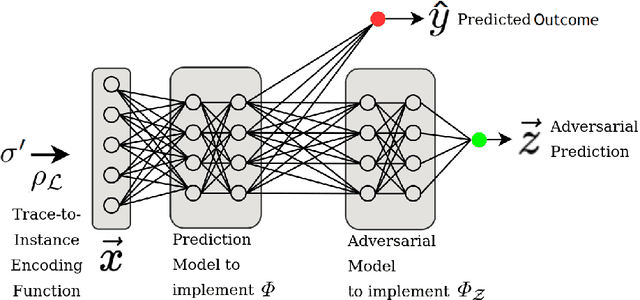

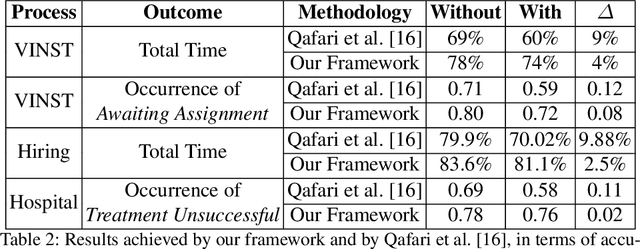

Achieving Fairness in Predictive Process Analytics via Adversarial Learning (Extended Version)

Oct 03, 2024

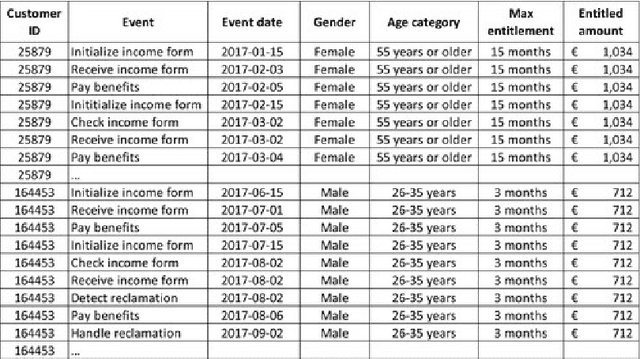

Abstract:Predictive business process analytics has become important for organizations, offering real-time operational support for their processes. However, these algorithms often perform unfair predictions because they are based on biased variables (e.g., gender or nationality), namely variables embodying discrimination. This paper addresses the challenge of integrating a debiasing phase into predictive business process analytics to ensure that predictions are not influenced by biased variables. Our framework leverages adversarial debiasing and is evaluated on four case studies, showing a significant reduction in the contribution of biased variables to the predicted value. The proposed technique is also compared with the state of the art in fairness in process mining, illustrating that our framework allows for a higher level of fairness, while retaining a better prediction quality.

An Explainable Decision Support System for Predictive Process Analytics

Jul 26, 2022

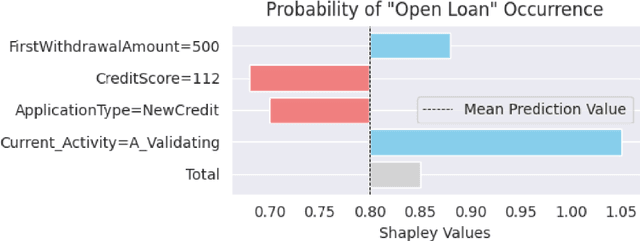

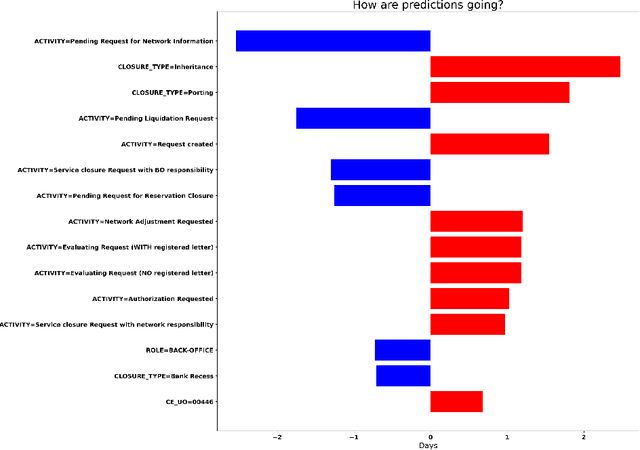

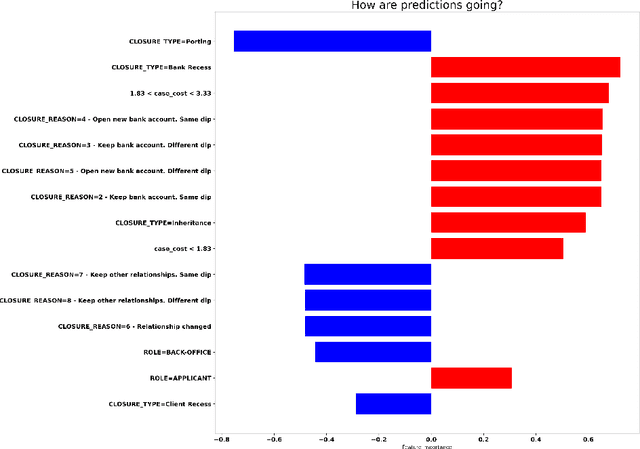

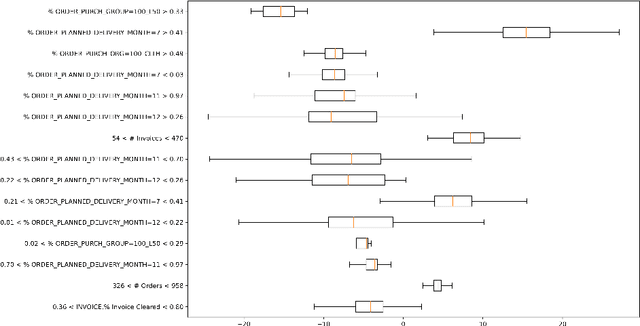

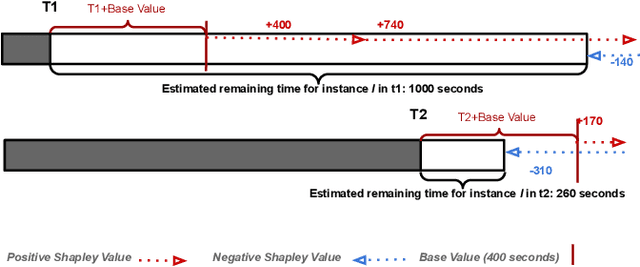

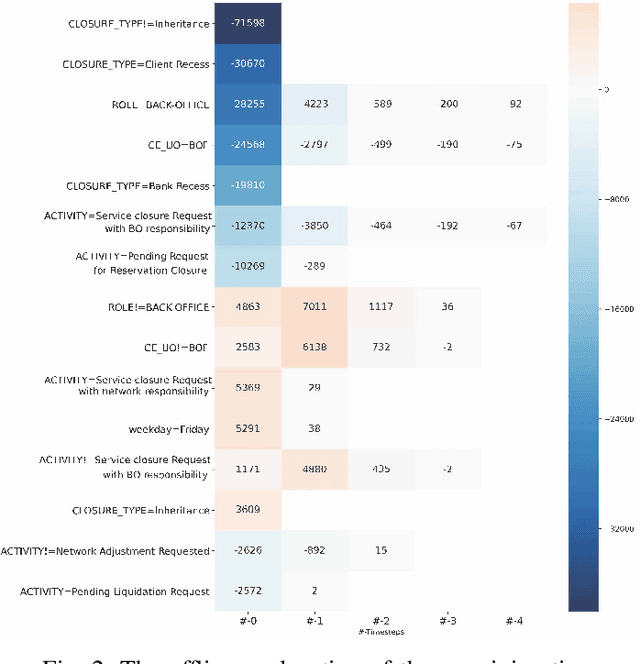

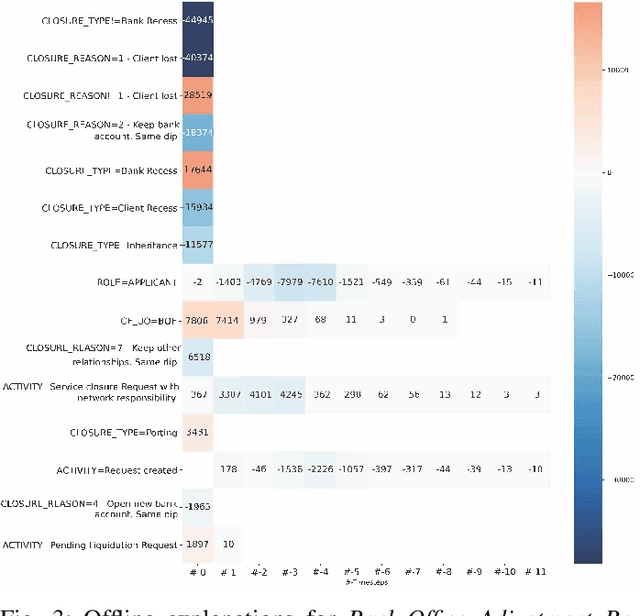

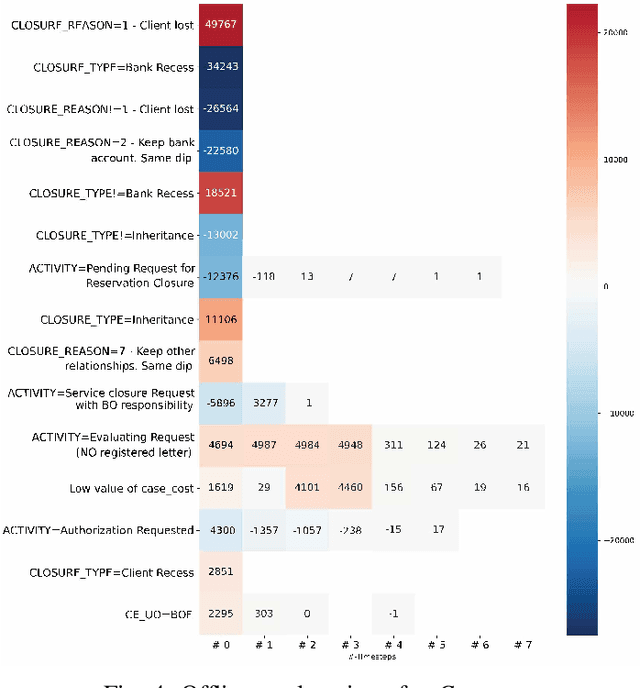

Abstract:Predictive Process Analytics is becoming an essential aid for organizations, providing online operational support of their processes. However, process stakeholders need to be provided with an explanation of the reasons why a given process execution is predicted to behave in a certain way. Otherwise, they will be unlikely to trust the predictive monitoring technology and, hence, adopt it. This paper proposes a predictive analytics framework that is also equipped with explanation capabilities based on the game theory of Shapley Values. The framework has been implemented in the IBM Process Mining suite and commercialized for business users. The framework has been tested on real-life event data to assess the quality of the predictions and the corresponding evaluations. In particular, a user evaluation has been performed in order to understand if the explanations provided by the system were intelligible to process stakeholders.

Object-centric Process Predictive Analytics

Mar 05, 2022

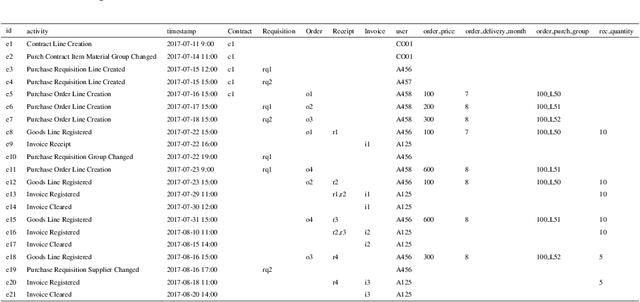

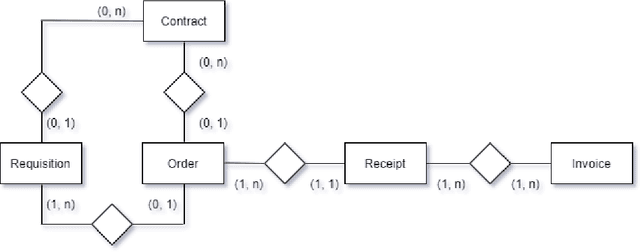

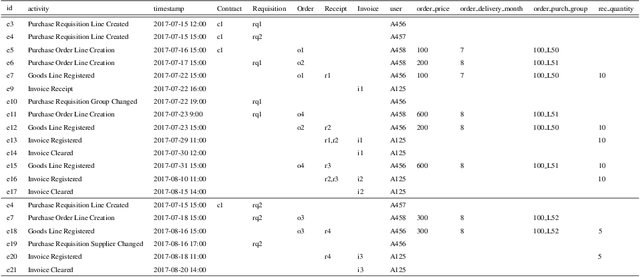

Abstract:Object-centric processes (a.k.a. Artifact-centric processes) are implementations of a paradigm where an instance of one process is not executed in isolation but interacts with other instances of the same or other processes. Interactions take place through bridging events where instances exchange data. Object-centric processes are recently gaining popularity in academia and industry, because their nature is observed in many application scenarios. This poses significant challenges in predictive analytics due to the complex intricacy of the process instances that relate to each other via many-to-many associations. Existing research is unable to directly exploit the benefits of these interactions, thus limiting the prediction quality. This paper proposes an approach to incorporate the information about the object interactions into the predictive models. The approach is assessed on real-life object-centric process event data, using different KPIs. The results are compared with a naive approach that overlooks the object interactions, thus illustrating the benefits of their use on the prediction quality.

Explainable Predictive Process Monitoring

Sep 16, 2020

Abstract:Predictive Business Process Monitoring is becoming an essential aid for organizations, providing online operational support of their processes. This paper tackles the fundamental problem of equipping predictive business process monitoring with explanation capabilities, so that not only the what but also the why is reported when predicting generic KPIs like remaining time, or activity execution. We use the game theory of Shapley Values to obtain robust explanations of the predictions. The approach has been implemented and tested on real-life benchmarks, showing for the first time how explanations can be given in the field of predictive business process monitoring.
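
As an illustration of the Shapley-value idea mentioned in the abstract, the following is a minimal sketch of an exact Shapley computation over a small feature set. It is not the paper's implementation: the function `shapley_values`, the toy predictor `toy_value`, and the feature names `activity` and `resource` are all hypothetical and chosen only to show how a prediction is split into per-feature contributions.

```python
from itertools import combinations
from math import factorial


def shapley_values(features, value):
    """Exact Shapley values for a small list of feature names.

    `value` maps a frozenset of known features to a model output.
    The cost is exponential in len(features), so this suits toy cases only.
    """
    n = len(features)
    result = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Standard Shapley weight for a coalition of size k.
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                # Marginal contribution of f when added to coalition s.
                total += weight * (value(s | {f}) - value(s))
        result[f] = total
    return result


def toy_value(known):
    """Hypothetical remaining-time predictor (hours), for illustration only."""
    hours = 10.0  # baseline prediction with no feature known
    if "activity" in known:
        hours += 4.0
    if "resource" in known:
        hours += 2.0
    if "activity" in known and "resource" in known:
        hours += 1.0  # interaction effect, shared between the two features
    return hours


phi = shapley_values(["activity", "resource"], toy_value)
print(phi)
```

In this toy case the contributions sum to the difference between the full prediction and the baseline, which is the property that makes Shapley values usable as an explanation of a single prediction.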

Fire Now, Fire Later: Alarm-Based Systems for Prescriptive Process Monitoring

May 23, 2019

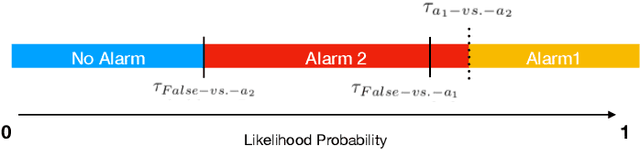

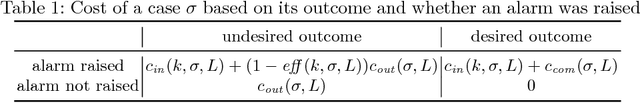

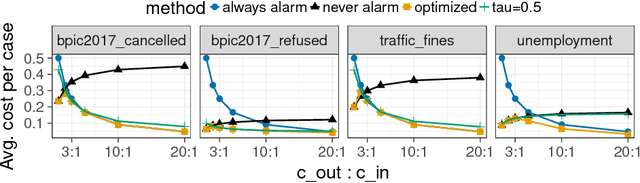

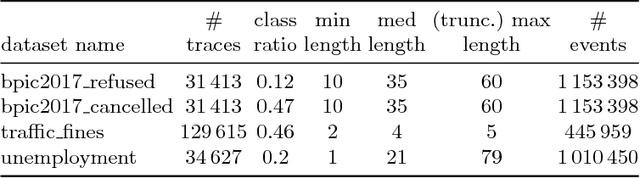

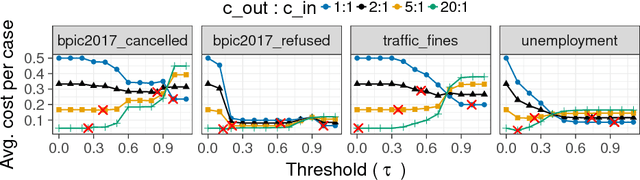

Abstract:Predictive process monitoring is a family of techniques to analyze events produced during the execution of a business process in order to predict the future state or the final outcome of running process instances. Existing techniques in this field are able to predict, at each step of a process instance, the likelihood that it will lead to an undesired outcome. These techniques, however, focus on generating predictions and do not prescribe when and how process workers should intervene to decrease the cost of undesired outcomes. This paper proposes a framework for prescriptive process monitoring, which extends predictive monitoring with the ability to generate alarms that trigger interventions to prevent an undesired outcome or mitigate its effect. The framework incorporates a parameterized cost model to assess the cost-benefit trade-off of generating alarms. We show how to optimize the generation of alarms given an event log of past process executions and a set of cost model parameters. The proposed approaches are empirically evaluated using a range of real-life event logs. The experimental results show that the net cost of undesired outcomes can be minimized by changing the threshold for generating alarms, as the process instance progresses. Moreover, introducing delays for triggering alarms, instead of triggering them as soon as the probability of an undesired outcome exceeds a threshold, leads to lower net costs.
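
The difference between firing an alarm immediately and firing it with a delay can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's framework: the `patience` parameter (the number of consecutive events above the threshold before firing), the function names, and the three-parameter cost model (`c_in`, `c_out`, `mitigation`) are hypothetical stand-ins for the parameterized cost model the abstract refers to.

```python
def first_alarm(probabilities, threshold, patience=1):
    """Return the 0-based event index at which the alarm fires, or None.

    The alarm fires once the predicted probability of an undesired outcome
    has exceeded `threshold` for `patience` consecutive events.
    patience=1 fires immediately; larger values delay the alarm.
    """
    streak = 0
    for i, p in enumerate(probabilities):
        streak = streak + 1 if p > threshold else 0
        if streak >= patience:
            return i
    return None


def net_cost(alarm_index, undesired, c_in, c_out, mitigation):
    """Simplified, assumed cost of one case.

    c_in: cost of the intervention triggered by an alarm.
    c_out: cost of an undesired outcome.
    mitigation: fraction of c_out avoided when an alarm was raised.
    """
    cost = 0.0
    if alarm_index is not None:
        cost += c_in
    if undesired:
        avoided = mitigation if alarm_index is not None else 0.0
        cost += (1.0 - avoided) * c_out
    return cost


# Hypothetical per-event probabilities for one running case.
probs = [0.2, 0.7, 0.4, 0.8, 0.9]
immediate = first_alarm(probs, threshold=0.6, patience=1)
delayed = first_alarm(probs, threshold=0.6, patience=2)
print(immediate, delayed)
```

With the delayed rule, the brief spike at the second event does not trigger an intervention; the alarm is raised only once the probability stays above the threshold. Whether that lowers the net cost depends on the cost parameters and on how often early spikes turn out to be false alarms.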

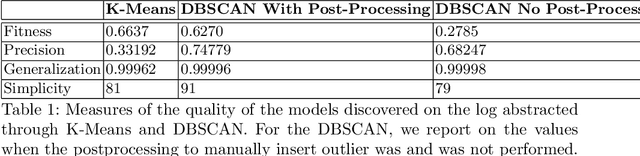

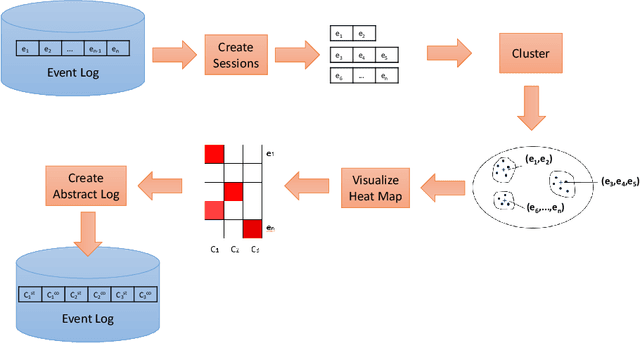

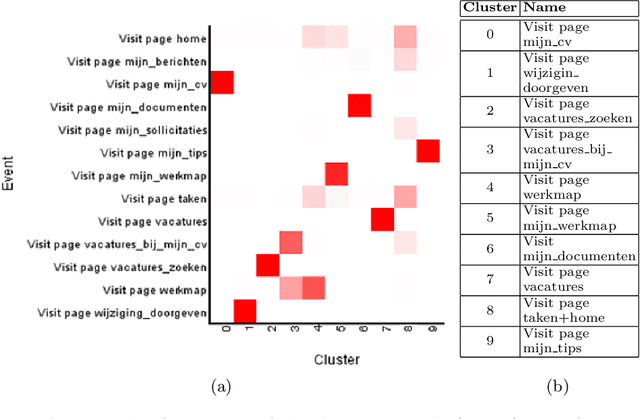

From Low-Level Events to Activities -- A Session-Based Approach

Mar 15, 2019

Abstract:Process-Mining techniques aim to use event data about past executions to gain insight into how processes are executed. While these techniques are proven to be very valuable, they are less successful in reaching their goal if the process is flexible and, hence, events can potentially occur in any order. Furthermore, information systems can record events at a very low level, which do not match the high-level concepts known at business level. Without abstracting sequences of events to high-level concepts, the results of applying process mining (e.g., discovered models) easily become very complex and difficult to interpret, which ultimately means that they are of little use. A large body of research exists on event abstraction but typically a large amount of domain knowledge is required to be fed in, which is often not readily available. Other abstraction techniques are unsupervised, which give lower accuracy. This paper puts forward a technique that requires limited domain knowledge that can be easily provided. Traces are divided into sessions, and each session is abstracted as one single high-level activity execution. The abstraction is based on a combination of automatic clustering and visualization methods. The technique was assessed on two case studies that evidently exhibit a large amount of behavior. The results clearly illustrate the benefits of the abstraction to convey knowledge to stakeholders.

Alarm-Based Prescriptive Process Monitoring

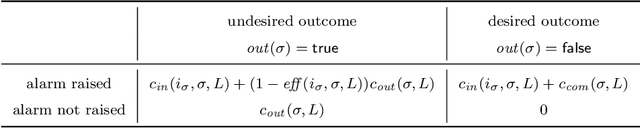

Jun 19, 2018

Abstract:Predictive process monitoring is concerned with the analysis of events produced during the execution of a process in order to predict the future state of ongoing cases thereof. Existing techniques in this field are able to predict, at each step of a case, the likelihood that the case will end up in an undesired outcome. These techniques, however, do not take into account what process workers may do with the generated predictions in order to decrease the likelihood of undesired outcomes. This paper proposes a framework for prescriptive process monitoring, which extends predictive process monitoring approaches with the concepts of alarms, interventions, compensations, and mitigation effects. The framework incorporates a parameterized cost model to assess the cost-benefit tradeoffs of applying prescriptive process monitoring in a given setting. The paper also outlines an approach to optimize the generation of alarms given a dataset and a set of cost model parameters. The proposed approach is empirically evaluated using a range of real-life event logs.

Time and Activity Sequence Prediction of Business Process Instances

Feb 24, 2016

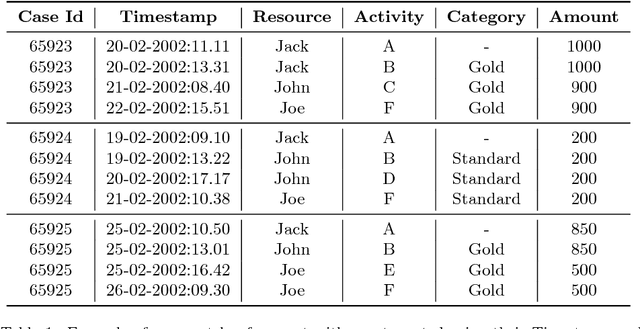

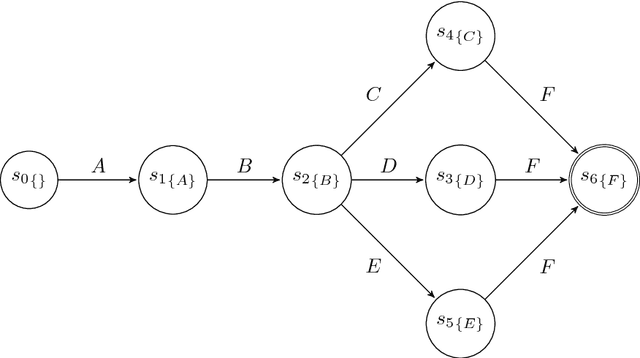

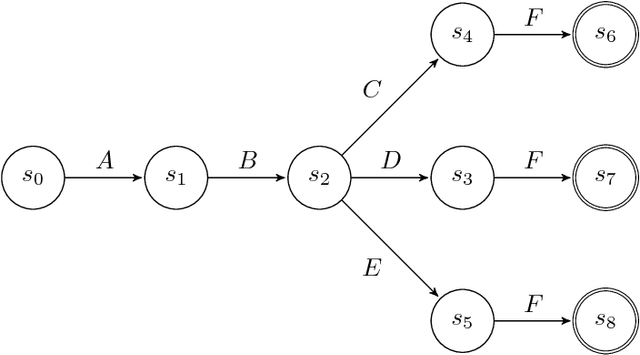

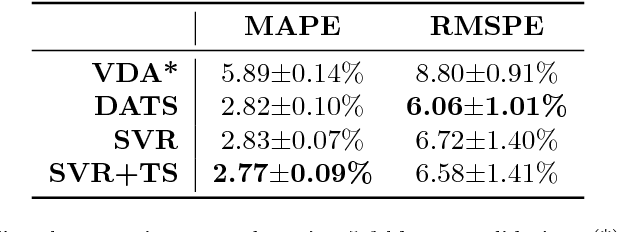

Abstract:The ability to know in advance the trend of running process instances, with respect to different features, such as the expected completion time, would allow business managers to counteract undesired situations in a timely manner, in order to prevent losses. Therefore, the ability to accurately predict future features of running business process instances would be a very helpful aid when managing processes, especially under service level agreement constraints. However, making such accurate forecasts is not easy: many factors may influence the predicted features. Many approaches have been proposed to cope with this problem but all of them assume that the underlying process is stationary. However, in real cases this assumption is not always true. In this work we present new methods for predicting the remaining time of running cases. In particular we propose a method, assuming process stationarity, which outperforms the state-of-the-art and two other methods which are able to make predictions even with non-stationary processes. We also describe an approach able to predict the full sequence of activities that a running case is going to take. All these methods are extensively evaluated on two real case studies.
