Stephan A. Fahrenkrog-Petersen

Fairness in Healthcare Processes: A Quantitative Analysis of Decision Making in Triage

Jan 16, 2026

Abstract:Fairness in automated decision-making has become a critical concern, particularly in high-pressure healthcare scenarios such as emergency triage, where fast and equitable decisions are essential. Process mining research increasingly investigates fairness, and a growing body of work focuses on fairness-aware algorithms. So far, less is known about how these concepts perform on empirical healthcare data or how they cover aspects of justice theory. This study addresses this research problem and proposes a process mining approach to assess fairness in triage by linking real-life event logs with conceptual dimensions of justice. Using the MIMICEL event log (derived from MIMIC-IV ED), we analyze time, re-do, deviation and decision as process outcomes, and evaluate the influence of age, gender, race, language and insurance using Kruskal-Wallis and Chi-square tests together with effect size measures. These outcomes are mapped to justice dimensions to support the development of a conceptual framework. The results show which aspects of potential unfairness surface in high-acuity and sub-acute cases. In this way, this study contributes empirical insights that support further research in responsible, fairness-aware process mining in healthcare.

SHAining on Process Mining: Explaining Event Log Characteristics Impact on Algorithms

Sep 10, 2025

Abstract:Process mining aims to extract and analyze insights from event logs, yet algorithm metric results vary widely depending on structural event log characteristics. Existing work often evaluates algorithms on a fixed set of real-world event logs but lacks a systematic analysis of how event log characteristics impact algorithms individually. Moreover, since event logs are generated from processes, where characteristics co-occur, we focus on associational rather than causal effects to assess how strongly each overlapping characteristic affects evaluation metrics without assuming isolated causal effects, a factor often neglected by prior work. We introduce SHAining, the first approach to quantify the marginal contribution of varying event log characteristics to process mining algorithms' metrics. Using process discovery as a downstream task, we analyze over 22,000 event logs covering a wide span of characteristics to uncover which of them most affect algorithms across metrics (e.g., fitness, precision, complexity). Furthermore, we offer novel insights about how the value of event log characteristics correlates with their contributed impact, assessing the algorithm's robustness.

Control-flow Reconstruction Attacks on Business Process Models

Sep 17, 2024

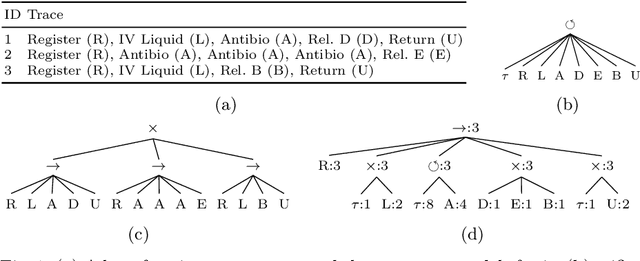

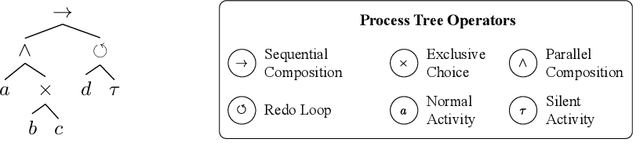

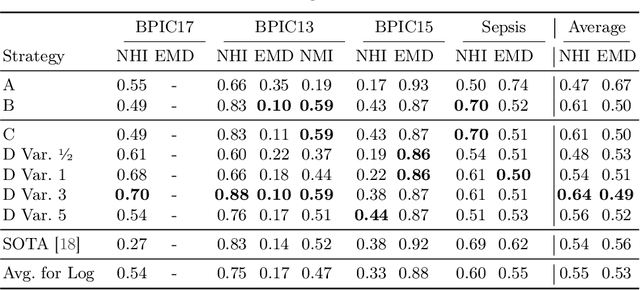

Abstract:Process models may be automatically generated from event logs that contain as-is data of a business process. While such models generalize over the control-flow of specific, recorded process executions, they are often also annotated with behavioural statistics, such as execution frequencies. Based thereon, once a model is published, certain insights about the original process executions may be reconstructed, so that an external party may extract confidential information about the business process. This work is the first to empirically investigate such reconstruction attempts based on process models. To this end, we propose different play-out strategies that reconstruct the control-flow from process trees, potentially exploiting frequency annotations. To assess the potential success of such reconstruction attacks on process models, and hence the risks imposed by publishing them, we compare the reconstructed process executions with those of the original log for several real-world datasets.

Unraveling the Never-Ending Story of Lifecycles and Vitalizing Processes

Jul 25, 2024

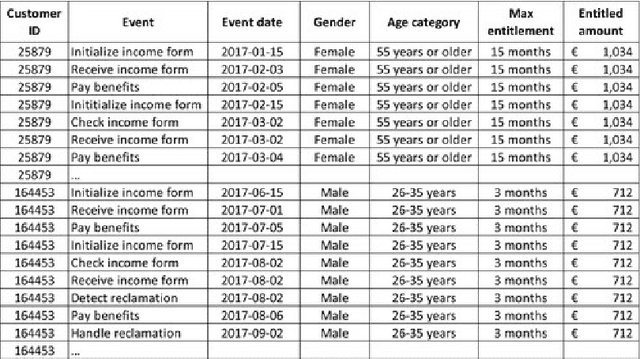

Abstract:Business process management (BPM) has been widely used to discover, model, analyze, and optimize organizational processes. BPM looks at these processes with analysis techniques that assume a clearly defined start and end. However, not all processes adhere to this logic, with the consequence that their behavior cannot be appropriately captured by BPM analysis techniques. This paper addresses this research problem at a conceptual level. More specifically, we introduce the notion of vitalizing business processes that target the lifecycle process of one or more entities. We show the existence of lifecycle processes in many industries and that their appropriate conceptualizations pave the way for the definition of suitable modeling and analysis techniques. This paper provides a set of requirements for their analysis, and a conceptualization of lifecycle and vitalizing processes.

SaCoFa: Semantics-aware Control-flow Anonymization for Process Mining

Sep 17, 2021

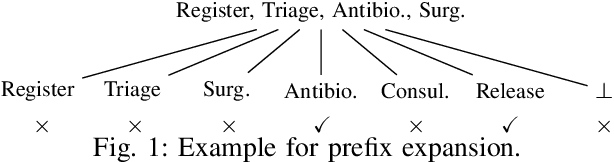

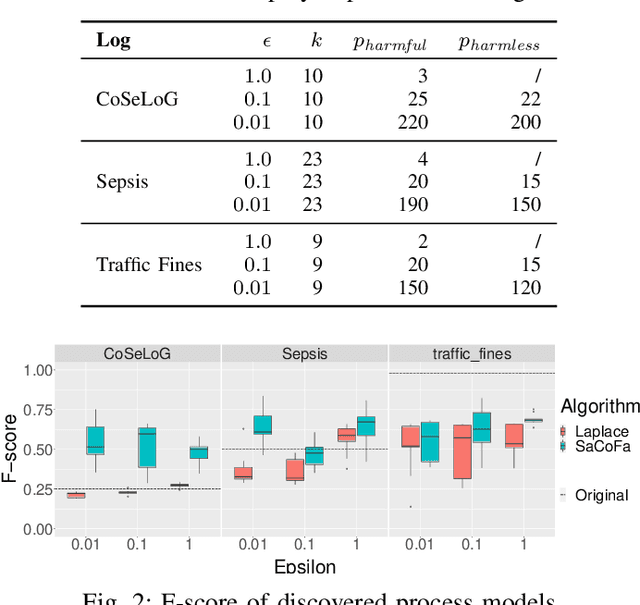

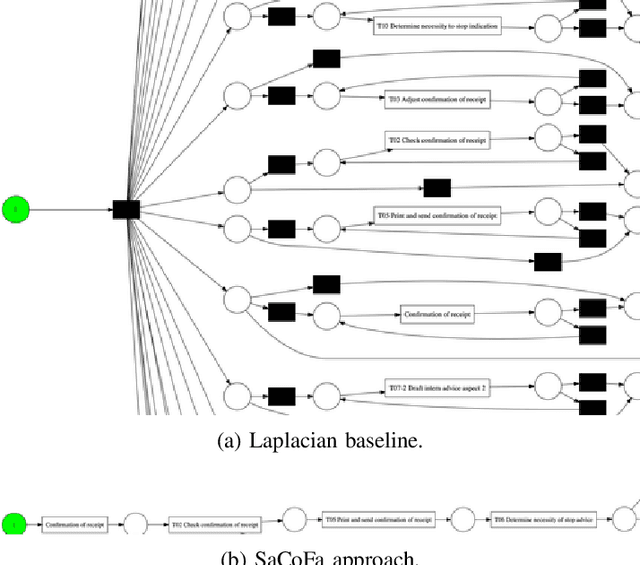

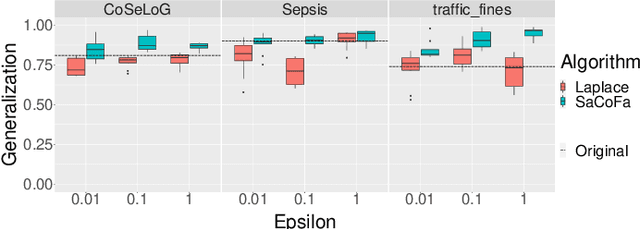

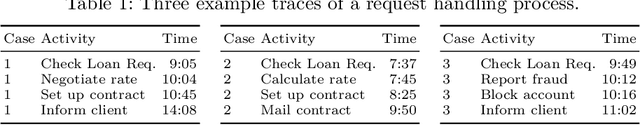

Abstract:Privacy-preserving process mining enables the analysis of business processes using event logs, while giving guarantees on the protection of sensitive information on process stakeholders. To this end, existing approaches add noise to the results of queries that extract properties of an event log, such as the frequency distribution of trace variants, for analysis. Noise insertion neglects the semantics of the process, though, and may generate traces not present in the original log. This is problematic. It lowers the utility of the published data and makes noise easily identifiable, as some traces will violate well-known semantic constraints. In this paper, we therefore argue for privacy preservation that incorporates a process semantics. For common trace-variant queries, we show how, based on the exponential mechanism, semantic constraints are incorporated to ensure differential privacy of the query result. Experiments demonstrate that our semantics-aware anonymization yields event logs of significantly higher utility than existing approaches.
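For readers unfamiliar with the exponential mechanism named in this abstract, the following is a minimal, generic sketch of it, not the SaCoFa implementation. The function names, the score values, and the idea of giving semantically invalid candidates a lower score are all illustrative assumptions; the abstract does not specify how the scoring is defined.

```python
import math
import random


def selection_probabilities(scores, epsilon, sensitivity=1.0):
    """Generic exponential mechanism: P(candidate i) is proportional to
    exp(epsilon * score_i / (2 * sensitivity)).

    `scores` and `sensitivity` are placeholders chosen for illustration.
    """
    top = max(scores)
    # Subtracting the maximum score keeps exp() numerically stable and
    # does not change the normalised probabilities.
    weights = [math.exp(epsilon * (s - top) / (2.0 * sensitivity)) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def sample_candidate(candidates, scores, epsilon, sensitivity=1.0, rng=None):
    """Draw one candidate according to the exponential-mechanism distribution."""
    rng = rng or random.Random()
    probs = selection_probabilities(scores, epsilon, sensitivity)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical example: two candidate trace variants, where the first respects
# an assumed semantic constraint (score 1.0) and the second violates it (0.0).
candidates = [("register", "triage", "treat"), ("treat", "register", "triage")]
scores = [1.0, 0.0]
probs = selection_probabilities(scores, epsilon=2.0)
chosen = sample_candidate(candidates, scores, epsilon=2.0, rng=random.Random(0))
```

With a larger `epsilon`, the higher-scored candidate is chosen more often; with `epsilon` close to zero, the choice approaches a uniform draw.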

A Distance Measure for Privacy-preserving Process Mining based on Feature Learning

Aug 10, 2021

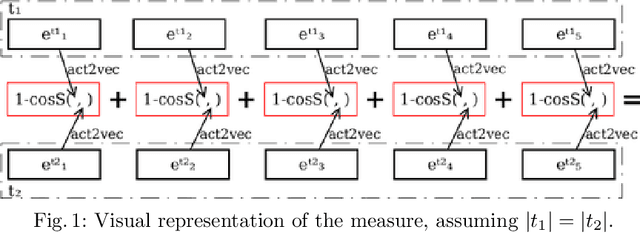

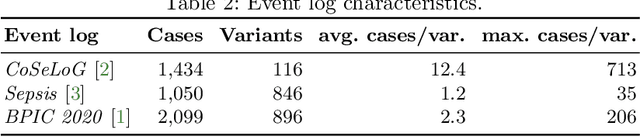

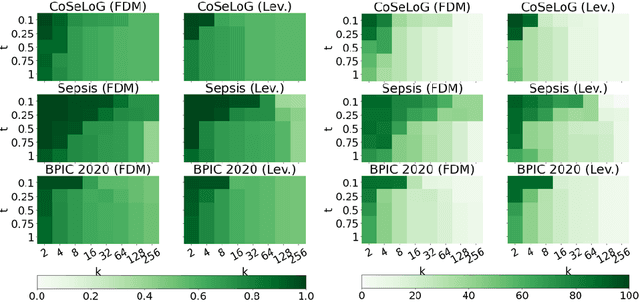

Abstract:To enable process analysis based on an event log without compromising the privacy of individuals involved in process execution, a log may be anonymized. Such anonymization strives to transform a log so that it satisfies provable privacy guarantees, while largely maintaining its utility for process analysis. Existing techniques perform anonymization using simple, syntactic measures to identify suitable transformation operations. This way, the semantics of the activities referenced by the events in a trace are neglected, potentially leading to transformations in which events of unrelated activities are merged. To avoid this and incorporate the semantics of activities during anonymization, we propose to instead incorporate a distance measure based on feature learning. Specifically, we show how embeddings of events enable the definition of a distance measure for traces to guide event log anonymization. Our experiments with real-world data indicate that anonymization using this measure, compared to a syntactic one, yields logs that are closer to the original log in various dimensions and, hence, have higher utility for process analysis.

Fire Now, Fire Later: Alarm-Based Systems for Prescriptive Process Monitoring

May 23, 2019

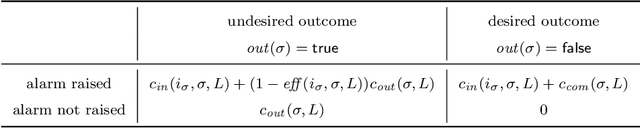

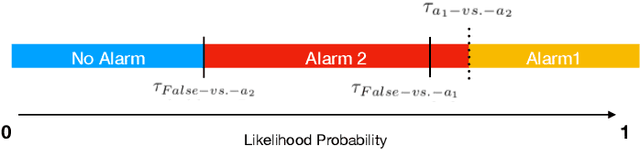

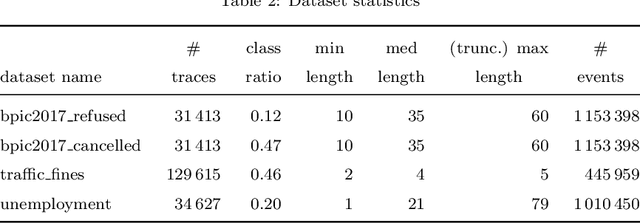

Abstract:Predictive process monitoring is a family of techniques to analyze events produced during the execution of a business process in order to predict the future state or the final outcome of running process instances. Existing techniques in this field are able to predict, at each step of a process instance, the likelihood that it will lead to an undesired outcome. These techniques, however, focus on generating predictions and do not prescribe when and how process workers should intervene to decrease the cost of undesired outcomes. This paper proposes a framework for prescriptive process monitoring, which extends predictive monitoring with the ability to generate alarms that trigger interventions to prevent an undesired outcome or mitigate its effect. The framework incorporates a parameterized cost model to assess the cost-benefit trade-off of generating alarms. We show how to optimize the generation of alarms given an event log of past process executions and a set of cost model parameters. The proposed approaches are empirically evaluated using a range of real-life event logs. The experimental results show that the net cost of undesired outcomes can be minimized by changing the threshold for generating alarms, as the process instance progresses. Moreover, introducing delays for triggering alarms, instead of triggering them as soon as the probability of an undesired outcome exceeds a threshold, leads to lower net costs.
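The threshold optimization described in this abstract can be illustrated with a small sketch. It assumes a deliberately simplified cost model with three hypothetical parameters (`c_in` for the intervention cost, `c_out` for the cost of an undesired outcome, `eff` for the fraction of that cost an intervention avoids) and a single fixed threshold per run; the paper's actual cost model and optimization procedure may differ.

```python
def case_cost(probabilities, undesired, threshold, c_in, c_out, eff):
    """Cost of one historical case under a fixed alarm threshold.

    `probabilities` holds the predicted likelihood of an undesired outcome
    after each event of the case; an alarm fires once any value exceeds
    `threshold`. All cost parameters are illustrative assumptions.
    """
    alarm = any(p > threshold for p in probabilities)
    if alarm:
        # Pay for the intervention, plus the unmitigated share of the outcome cost.
        return c_in + ((1.0 - eff) * c_out if undesired else 0.0)
    return c_out if undesired else 0.0


def best_threshold(cases, thresholds, c_in, c_out, eff):
    """Pick the candidate threshold with the lowest average cost on past cases."""
    def average_cost(t):
        return sum(case_cost(p, u, t, c_in, c_out, eff) for p, u in cases) / len(cases)
    return min(thresholds, key=average_cost)


# Hypothetical log: (per-event predicted probabilities, actual undesired outcome).
cases = [
    ([0.2, 0.9], True),
    ([0.1, 0.3], False),
    ([0.4, 0.6], False),
]
chosen = best_threshold(cases, thresholds=[0.5, 0.8, 1.1], c_in=1.0, c_out=10.0, eff=1.0)
```

In this toy log, a threshold of 0.5 raises a needless alarm on the third case, a threshold of 1.1 never fires and misses the first case, and 0.8 avoids both errors.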
