Martin Huber

Learning From a Steady Hand: A Weakly Supervised Agent for Robot Assistance under Microscopy

Jan 28, 2026

Abstract:This paper rethinks steady-hand robotic manipulation by using a weakly supervised framework that fuses calibration-aware perception with admittance control. Unlike conventional automation that relies on labor-intensive 2D labeling, our framework leverages reusable warm-up trajectories to extract implicit spatial information, thereby achieving calibration-aware, depth-resolved perception without the need for external fiducials or manual depth annotation. By explicitly characterizing residuals from observation and calibration models, the system establishes a task-space error budget from recorded warm-ups. The uncertainty budget yields a lateral closed-loop accuracy of approximately 49 micrometers at 95% confidence (worst-case testing subset) and a depth accuracy of at most 291 micrometers (95% confidence bound) during large in-plane moves. In a within-subject user study (N=8), the learned agent reduces overall NASA-TLX workload by 77.1% relative to the simple steady-hand assistance baseline. These results demonstrate that the weakly supervised agent improves the reliability of microscope-guided biomedical micromanipulation without introducing complex setup requirements, offering a practical framework for microscope-guided intervention.

Hydra: Marker-Free RGB-D Hand-Eye Calibration

Apr 29, 2025

Abstract:This work presents an RGB-D imaging-based approach to marker-free hand-eye calibration using a novel implementation of the iterative closest point (ICP) algorithm with a robust point-to-plane (PTP) objective formulated on a Lie algebra. Its applicability is demonstrated through comprehensive experiments using three well-known serial manipulators and two RGB-D cameras. With only three randomly chosen robot configurations, our approach achieves approximately 90% successful calibrations, demonstrating 2-3x higher convergence rates to the global optimum compared to both marker-based and marker-free baselines. We also report two orders of magnitude faster convergence time (0.8 +/- 0.4 s) for 9 robot configurations over other marker-free methods. Our method exhibits significantly improved accuracy (5 mm in task space) over classical approaches (7 mm in task space) whilst being marker-free. The benchmarking dataset and code are open-sourced under the Apache 2.0 License, and a ROS 2 integration with robot abstraction is provided to facilitate deployment.

Evaluating Robotic Approach Techniques for the Insertion of a Straight Instrument into a Vitreoretinal Surgery Trocar

Jan 13, 2025

Abstract:Advances in vitreoretinal robotic surgery enable precise techniques for gene therapies. This study evaluates three robotic approaches for docking a micro-precise tool to a trocar using a 7-DoF robotic arm: fully co-manipulated, hybrid co-manipulated/teleoperated, and hybrid with camera assistance. The fully co-manipulated approach was the fastest but had a 42% success rate. Hybrid methods showed higher success rates (91.6% and 100%) and completed tasks within 2 minutes. NASA Task Load Index (TLX) assessments indicated lower physical demand and effort for hybrid approaches.

Difference-in-Differences with Time-varying Continuous Treatments using Double/Debiased Machine Learning

Oct 28, 2024

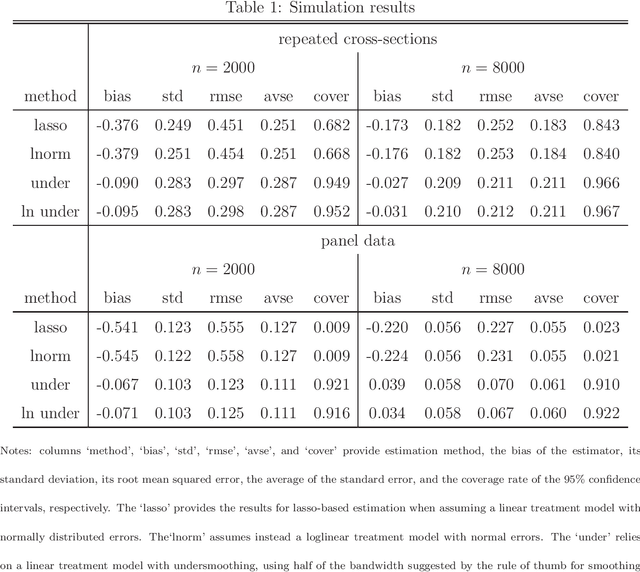

Abstract:We propose a difference-in-differences (DiD) method for a time-varying continuous treatment and multiple time periods. Our framework assesses the average treatment effect on the treated (ATET) when comparing two non-zero treatment doses. The identification is based on a conditional parallel trend assumption imposed on the mean potential outcome under the lower dose, given observed covariates and past treatment histories. We employ kernel-based ATET estimators for repeated cross-sections and panel data adopting the double/debiased machine learning framework to control for covariates and past treatment histories in a data-adaptive manner. We also demonstrate the asymptotic normality of our estimation approach under specific regularity conditions. In a simulation study, we find a compelling finite sample performance of undersmoothed versions of our estimators in setups with several thousand observations.

Vision and Contact based Optimal Control for Autonomous Trocar Docking

Jul 31, 2024

Abstract:Future operating theatres will be equipped with robots to perform various surgical tasks including, for example, endoscope control. Human-in-the-loop supervisory control architectures, where the surgeon selects from several autonomous sequences, are already being successfully applied in preclinical tests. Inserting an endoscope into a trocar or introducer is a key step for every keyhole surgical procedure; hereafter, we refer to this device simply as a "trocar". Our goal is to develop a controller for autonomous trocar docking. Autonomous trocar docking is a version of the peg-in-hole problem. Extensive work in the robotics literature addresses this problem. The peg-in-hole problem has been widely studied in the context of assembly where, typically, the hole is considered static and rigid to interaction. In our case, however, the trocar is not fixed and responds to interaction. We consider a variety of surgical procedures where surgeons will utilize contact between the endoscope and trocar in order to complete the insertion successfully. To the best of our knowledge, no prior literature explores this particular generalization of the problem directly. Our primary contribution in this work is an optimal control formulation for automated trocar docking. We use a nonlinear optimization program to model the task, minimizing a cost function subject to constraints to find optimal joint configurations. The controller incorporates a geometric model for insertion and a force-feedback (FF) term to ensure patient safety by preventing excessive interaction forces with the trocar. Experiments on a real hardware lab setup validate the approach. Our method successfully achieves trocar insertion on our real robot lab setup, and simulation trials demonstrate its ability to reduce interaction forces.

Semi-Autonomous Laparoscopic Robot Docking with Learned Hand-Eye Information Fusion

May 09, 2024

Abstract:In this study, we introduce a novel shared-control system for key-hole docking operations, combining a commercial camera with occlusion-robust pose estimation and a hand-eye information fusion technique. This system is used to enhance docking precision and force-compliance safety. To train a hand-eye information fusion network model, we generated a self-supervised dataset using this docking system. After training, our pose estimation method showed improved accuracy compared to traditional methods, including observation-only approaches, hand-eye calibration, and conventional state estimation filters. In real-world phantom experiments, our approach demonstrated its effectiveness with reduced position dispersion (1.23 ± 0.81 mm vs. 2.47 ± 1.22 mm) and force dispersion (0.78 ± 0.57 N vs. 1.15 ± 0.97 N) compared to the control group. These advancements in semi-autonomous co-manipulation scenarios enhance interaction and stability. The study presents an anti-interference, steady, and precise solution with potential applications extending beyond laparoscopic surgery to other minimally invasive procedures.

Excitation Trajectory Optimization for Dynamic Parameter Identification Using Virtual Constraints in Hands-on Robotic System

Jan 29, 2024

Abstract:This paper proposes a novel, more computationally efficient method for optimizing robot excitation trajectories for dynamic parameter identification, emphasizing self-collision avoidance. This addresses the system identification challenge of obtaining high-quality training data for co-manipulated robotic arms that can be equipped with a variety of tools, a common scenario in industrial as well as clinical and research contexts. Using the Unified Robotics Description Format (URDF) to build a symbolic Python implementation of the Recursive Newton-Euler Algorithm (RNEA), the approach aids in dynamically estimating parameters such as inertia using regression analyses on data from real robots. The excitation trajectory was evaluated and achieved criteria on par with state-of-the-art reported results, which did not consider self-collision and tool calibrations. Furthermore, physical Human-Robot Interaction (pHRI) admittance control experiments were conducted in a surgical context to evaluate the derived inverse dynamics model, showing a 30.1% workload reduction as measured by the NASA TLX questionnaire.

LBR-Stack: ROS 2 and Python Integration of KUKA FRI for Med and IIWA Robots

Nov 21, 2023

Abstract:The LBR-Stack is a collection of packages that simplify the usage and extend the capabilities of KUKA's Fast Robot Interface (FRI). It is designed for mission critical hard real-time applications. Supported are the KUKA LBR Med7/14 and KUKA LBR iiwa7/14 robots in the Gazebo simulation and for communication with real hardware.

Deep Homography Prediction for Endoscopic Camera Motion Imitation Learning

Jul 24, 2023

Abstract:In this work, we investigate laparoscopic camera motion automation through imitation learning from retrospective videos of laparoscopic interventions. A novel method is introduced that learns to augment a surgeon's behavior in image space through object motion invariant image registration via homographies. Contrary to existing approaches, no geometric assumptions are made and no depth information is necessary, enabling immediate translation to a robotic setup. Deviating from the dominant approach in the literature, which consists of following a surgical tool, we do not handcraft the objective and no priors are imposed on the surgical scene, allowing the method to discover unbiased policies. In this new research field, significant improvements are demonstrated over two baselines on the Cholec80 and HeiChole datasets, showcasing an improvement of 47% over camera motion continuation. The method is further shown to indeed predict camera motion correctly on the public motion classification labels of the AutoLaparo dataset. All code is made accessible on GitHub.

Deep Reinforcement Learning Based System for Intraoperative Hyperspectral Video Autofocusing

Jul 21, 2023

Abstract:Hyperspectral imaging (HSI) captures a greater level of spectral detail than traditional optical imaging, making it a potentially valuable intraoperative tool when precise tissue differentiation is essential. Hardware limitations of current optical systems used for handheld real-time video HSI result in a limited focal depth, thereby posing usability issues for integration of the technology into the operating room. This work integrates a focus-tunable liquid lens into a video HSI exoscope, and proposes novel video autofocusing methods based on deep reinforcement learning. A first-of-its-kind robotic focal-time scan was performed to create a realistic and reproducible testing dataset. We benchmarked our proposed autofocus algorithm against traditional policies, and found our novel approach to perform significantly ($p<0.05$) better than traditional techniques ($0.070\pm0.098$ mean absolute focal error compared to $0.146\pm0.148$). In addition, we performed a blinded usability trial by having two neurosurgeons compare the system with different autofocus policies, and found our novel approach to be the most favourable, making our system a desirable addition for intraoperative HSI.
