Marco Saerens

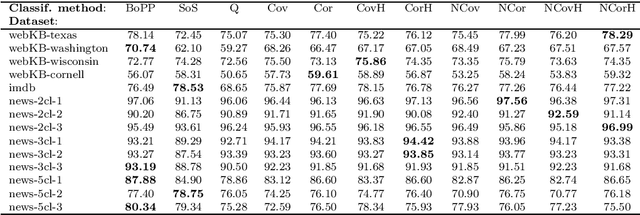

Relative Entropy-Regularized Optimal Transport on a Graph: a new algorithm and an experimental comparison

Aug 23, 2021

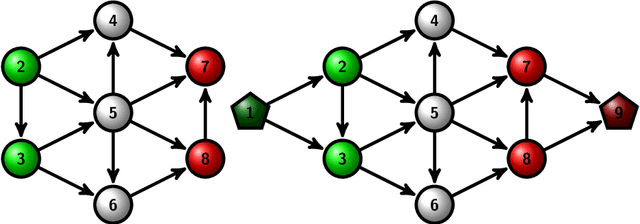

Abstract: Following [21, 23], the present work investigates a new relative entropy-regularized algorithm for solving the optimal transport problem on a graph within the randomized shortest paths formalism. More precisely, a unit flow is injected into a set of input nodes and collected from a set of output nodes while minimizing the expected transportation cost together with a paths relative entropy regularization term, providing a randomized routing policy. The main advantage of this new formulation is that it easily accommodates edge flow capacity constraints, which commonly occur in real-world problems. The resulting optimal routing policy, i.e., the probability distribution of following an edge in each node, is Markovian and is computed by constraining the input and output flows to the prescribed marginal probabilities thanks to a variant of the algorithm developed in [8]. In addition, experimental comparisons with other recently developed techniques show that the distance measure between nodes derived from the introduced model provides competitive results on semi-supervised classification tasks.

Sparse Randomized Shortest Paths Routing with Tsallis Divergence Regularization

Jul 01, 2020

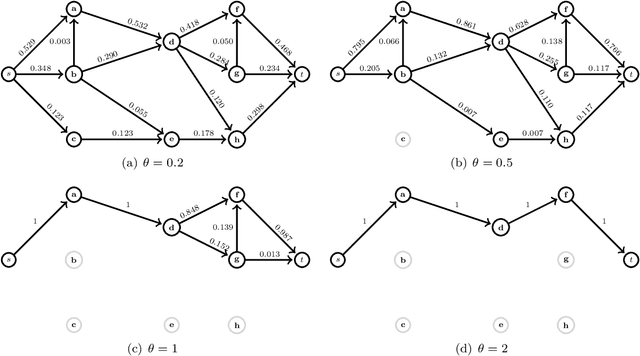

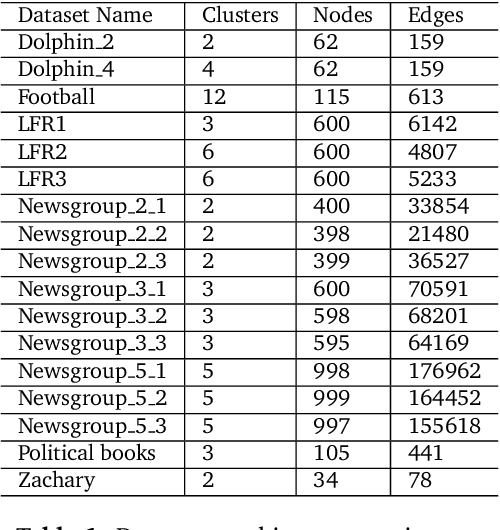

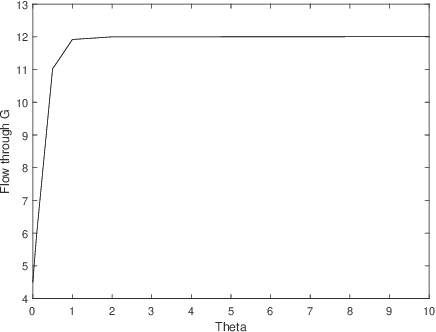

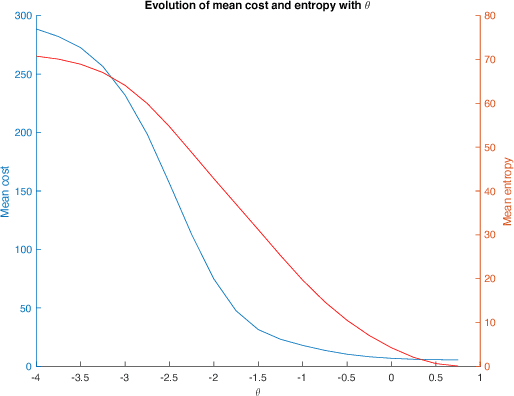

Abstract: This work elaborates on the important problem of (1) designing optimal randomized routing policies for reaching a target node t from a source node s on a weighted directed graph G and (2) defining distance measures between nodes interpolating between the least cost (based on optimal movements) and the commute-cost (based on a random walk on G), depending on a temperature parameter T. To this end, the randomized shortest path formalism (RSP, [2,99,124]) is rephrased in terms of Tsallis divergence regularization, instead of Kullback-Leibler divergence. The main consequence of this change is that the resulting routing policy (local transition probabilities) becomes sparser when T decreases, therefore inducing a sparse random walk on G converging to the least-cost directed acyclic graph when T tends to 0. Experimental comparisons on node clustering and semi-supervised classification tasks show that the derived dissimilarity measures based on expected routing costs provide state-of-the-art results. The sparse RSP is therefore a promising model of movements on a graph, balancing sparse exploitation and exploration in an optimal way.

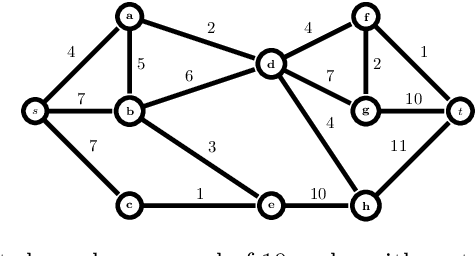

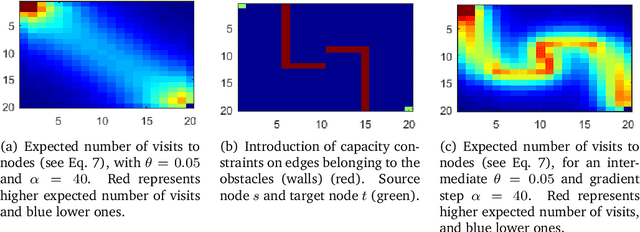

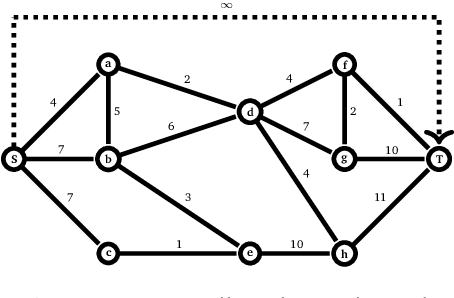

Randomized Shortest Paths with Net Flows and Capacity Constraints

Oct 04, 2019

Abstract: This work extends the randomized shortest paths model (RSP) by investigating the net flow RSP and adding capacity constraints on edge flows. The standard RSP is a model of movement, or spread, through a network interpolating between a random walk and a shortest path behavior. This framework assumes a unit flow injected into a source node and collected from a target node with flows minimizing the expected transportation cost together with a relative entropy regularization term. In this context, the present work first develops the net flow RSP model considering that edge flows in opposite directions neutralize each other (as in electrical networks) and proposes an algorithm for computing the expected routing costs between all pairs of nodes. This quantity is called the net flow RSP dissimilarity measure between nodes. Experimental comparisons on node clustering tasks show that the net flow RSP dissimilarity is competitive with other state-of-the-art techniques. The second part of the paper shows how to introduce capacity constraints on edge flows and develops a procedure that solves this constrained problem using Lagrangian duality. These two extensions significantly broaden the scope of applications of the RSP framework.

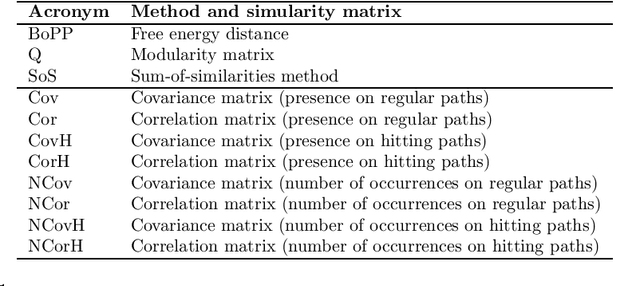

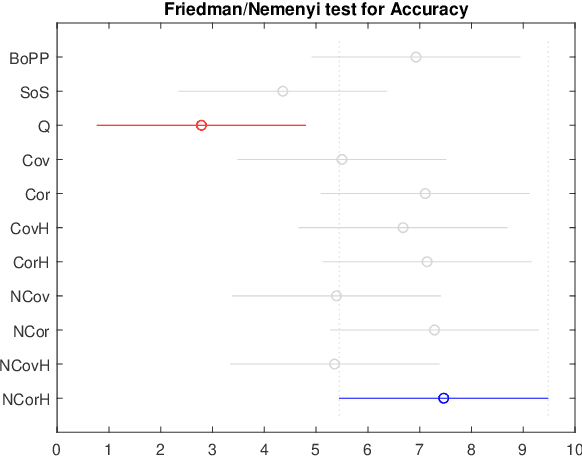

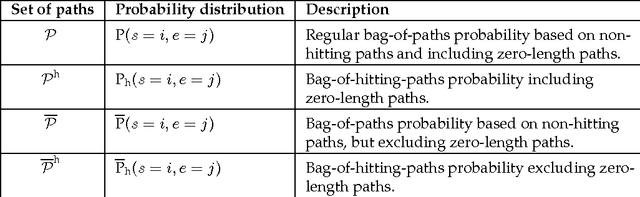

Covariance and Correlation Kernels on a Graph in the Generalized Bag-of-Paths Formalism

Feb 08, 2019

Abstract: This work derives closed-form expressions computing the expectation of co-presences and of the number of co-occurrences of nodes on paths sampled from a network according to general path weights (a bag of paths). The underlying idea is that two nodes are considered as similar when they appear together on (preferably short) paths of the network. The results are obtained for both regular and hitting paths and serve as a basis for computing new covariance and correlation measures between nodes. Experiments on semi-supervised classification show that the introduced similarity measures provide competitive performance compared to other state-of-the-art distances and similarities.

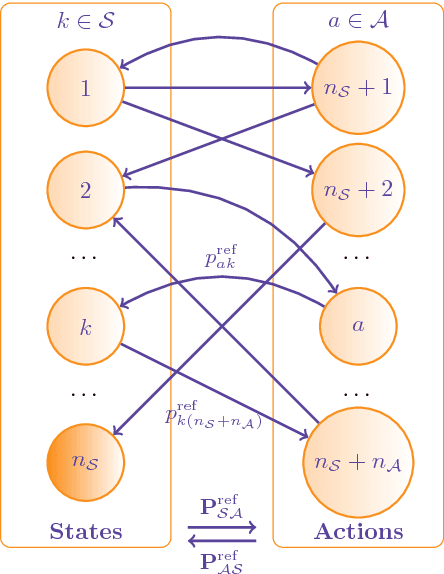

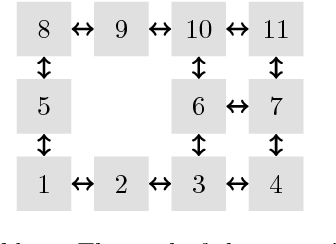

A Constrained Randomized Shortest-Paths Framework for Optimal Exploration

Jul 12, 2018

Abstract: The present work extends the randomized shortest-paths framework (RSP), interpolating between shortest-path and random-walk routing in a network, in three directions. First, it shows how to deal with equality constraints on a subset of transition probabilities and develops a generic algorithm for solving this constrained RSP problem using Lagrangian duality. Second, it derives a surprisingly simple iterative procedure to compute the optimal, randomized, routing policy generalizing the previously developed "soft" Bellman-Ford algorithm. The resulting algorithm allows balancing exploitation and exploration in an optimal way by interpolating between a pure random behavior and the deterministic, optimal, policy (least-cost paths) while satisfying the constraints. Finally, the two algorithms are applied to Markov decision problems by considering the process as a constrained RSP on a bipartite state-action graph. In this context, the derived "soft" value iteration algorithm appears to be closely related to dynamic policy programming as well as Kullback-Leibler and path integral control, and similar to a recently introduced reinforcement learning exploration strategy. This shows that this strategy is optimal in the RSP sense: it minimizes expected path cost subject to a relative entropy constraint. Simulation results on illustrative examples show that the model behaves as expected.
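As a rough illustration of the "soft" Bellman-Ford idea mentioned in the abstract above, the following minimal sketch iterates the unconstrained recurrence as it is usually written in the RSP literature: the free energy of a node is the soft minimum, weighted by the reference random walk, of the edge cost plus the free energy of the successor. It does not handle the equality constraints that the paper adds. The function name `soft_bellman_ford`, its parameters, and the toy graph in the usage note are assumptions made for illustration only; the sketch also assumes positive edge costs, that every non-target node has at least one outgoing edge, and that the target is reachable from every node.

```python
import math


def soft_bellman_ford(cost, p_ref, target, theta, max_iter=10_000, tol=1e-12):
    """Unconstrained soft Bellman-Ford sketch (illustrative, not the paper's algorithm).

    cost[i][j]  : cost of edge i -> j (ignored where p_ref[i][j] == 0)
    p_ref[i][j] : reference random-walk transition probabilities
    theta       : inverse temperature (theta = 1/T)
    Returns (phi, policy): phi[i] is the directed free energy from i to `target`,
    policy[i][j] is the randomized routing probability of moving i -> j.
    """
    n = len(cost)
    phi = [0.0] * n  # start from zero; values increase toward the fixed point
    for _ in range(max_iter):
        new_phi = []
        for i in range(n):
            if i == target:
                new_phi.append(0.0)  # boundary condition at the target
                continue
            # log of each term p_ref_ij * exp(-theta * (c_ij + phi_j))
            terms = [math.log(p_ref[i][j]) - theta * (cost[i][j] + phi[j])
                     for j in range(n) if p_ref[i][j] > 0]
            m = max(terms)  # log-sum-exp shift for numerical stability
            log_sum = m + math.log(sum(math.exp(t - m) for t in terms))
            new_phi.append(-log_sum / theta)  # soft minimum
        delta = max(abs(a - b) for a, b in zip(new_phi, phi))
        phi = new_phi
        if delta < tol:
            break

    policy = [[0.0] * n for _ in range(n)]
    for i in range(n):
        if i == target:
            continue  # the target is treated as absorbing
        weights = [p_ref[i][j] * math.exp(-theta * (cost[i][j] + phi[j] - phi[i]))
                   if p_ref[i][j] > 0 else 0.0
                   for j in range(n)]
        total = sum(weights)  # equals 1 at the fixed point; renormalize anyway
        policy[i] = [w / total for w in weights]
    return phi, policy
```

On a toy graph with edges 0→1 (cost 1), 1→2 (cost 1) and 0→2 (cost 5), a large `theta` concentrates the policy of node 0 on the cheap route through node 1, while a `theta` close to zero leaves the policy close to the reference random walk.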

Randomized Optimal Transport on a Graph: Framework and New Distance Measures

Jun 07, 2018

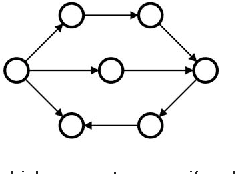

Abstract: The recently developed bag-of-paths framework consists in setting a Gibbs-Boltzmann distribution on all feasible paths of a graph. This probability distribution favors short paths over long ones, with a free parameter (the temperature $T > 0$) controlling the entropic level of the distribution. This formalism enables the computation of new distances or dissimilarities, interpolating between the shortest-path and the resistance distance, which have been shown to perform well in clustering and classification tasks. In this work, the bag-of-paths formalism is extended by adding two independent equality constraints fixing the distributions of the starting and ending nodes of paths. When the temperature is low, this formalism is shown to be equivalent to a relaxation of the optimal transport problem on a network where paths carry a flow between two discrete distributions on nodes. The randomization is achieved by considering free energy minimization instead of traditional cost minimization. Algorithms computing the optimal free energy solution are developed for two types of paths: hitting (or absorbing) paths and non-hitting, regular paths, and require the inversion of an $n \times n$ matrix with $n$ being the number of nodes. Interestingly, for regular paths, the resulting optimal policy interpolates between the deterministic optimal transport policy ($T \rightarrow 0^{+}$) and the solution to the corresponding electrical circuit ($T \rightarrow \infty$). Two distance measures between nodes and a dissimilarity between groups of nodes, both integrating weights on nodes, are derived from this framework.

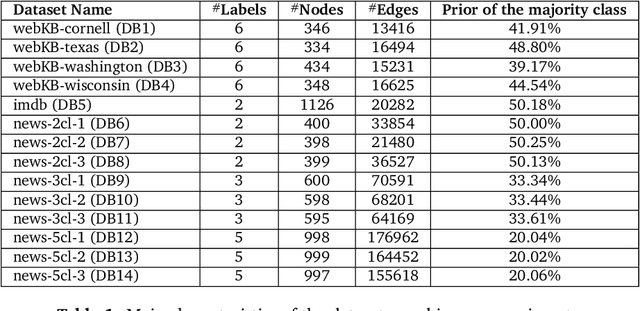

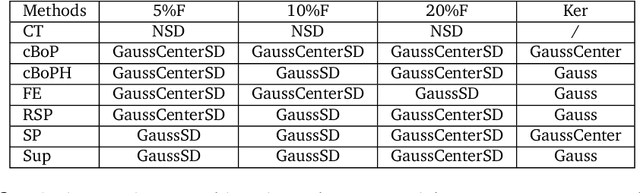

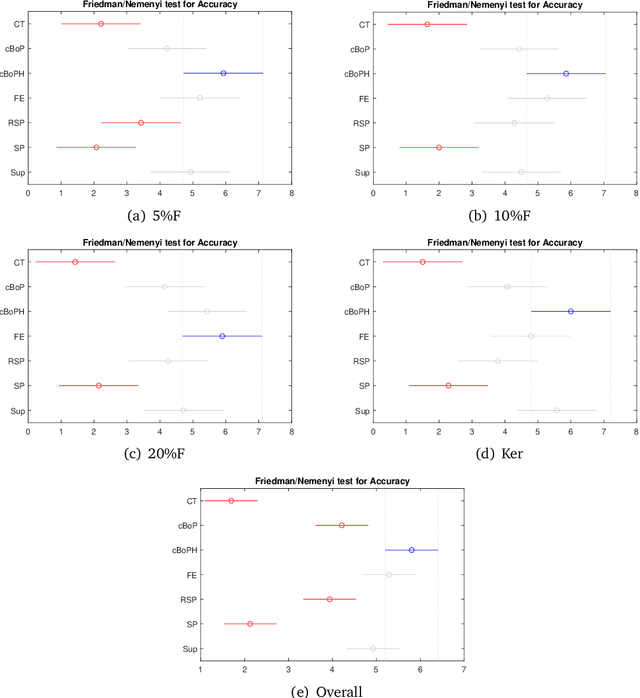

An experimental study of graph-based semi-supervised classification with additional node information

May 24, 2017

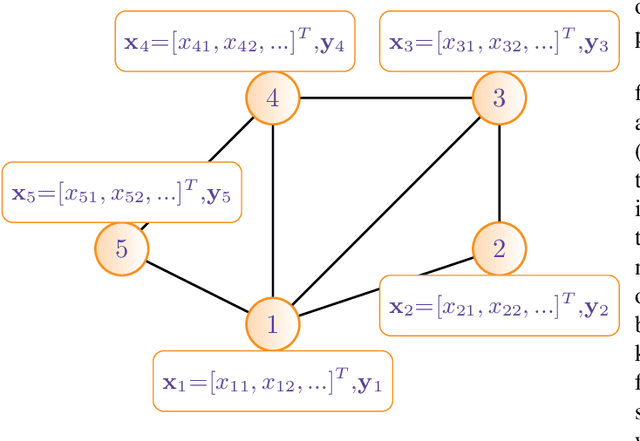

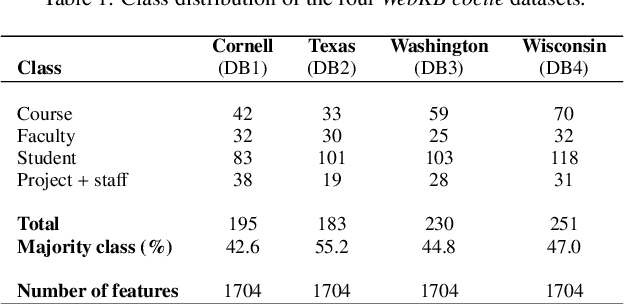

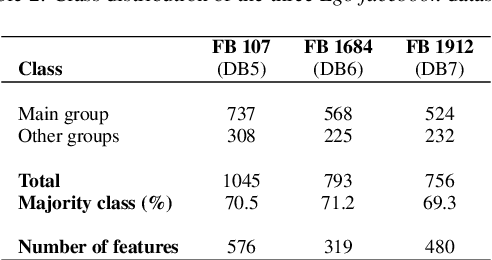

Abstract: The volume of data generated by the internet and social networks is increasing every day, and there is a clear need for efficient ways of extracting useful information from them. As those data can take different forms, it is important to use all the available data representations for prediction. In this paper, we focus our attention on supervised classification using both regular tabular data and structural information coming from a network structure. Fourteen techniques are investigated and compared in this study and can be divided into three classes: the first one uses only the plain data to build a classification model, the second uses only the graph structure and the last uses both information sources. The relative performances in these three cases are investigated. Furthermore, the effect of using a graph embedding and well-known indicators in spatial statistics is also studied. Possible applications are automatic classification of web pages or other linked documents, of people in a social network or of proteins in a biological complex system, to name a few. Based on our comparison, we draw some general conclusions and advice for tackling this particular classification task: some datasets can be better explained by their graph structure (graph-driven), or by their feature set (features-driven). The most efficient methods are discussed in both cases.

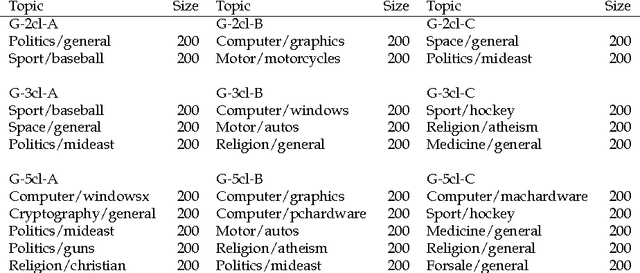

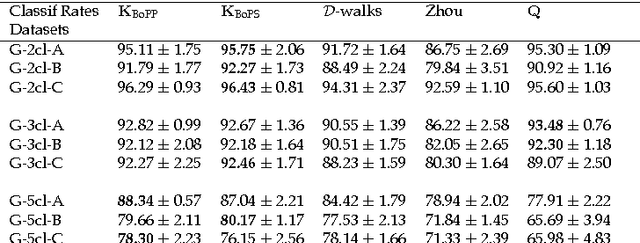

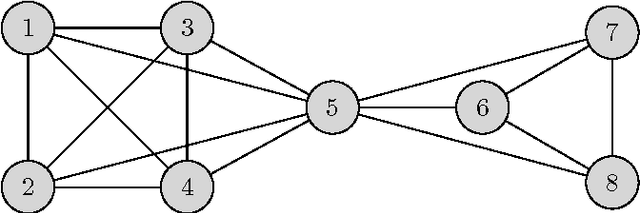

A bag-of-paths framework for network data analysis

Dec 14, 2016

Abstract: This work develops a generic framework, called the bag-of-paths (BoP), for link and network data analysis. The central idea is to assign a probability distribution on the set of all paths in a network. More precisely, a Gibbs-Boltzmann distribution is defined over a bag of paths in a network, that is, on a representation that considers all paths independently. We show that, under this distribution, the probability of drawing a path connecting two nodes can easily be computed in closed form by simple matrix inversion. This probability captures a notion of relatedness between nodes of the graph: two nodes are considered as highly related when they are connected by many, preferably low-cost, paths. As an application, two families of distances between nodes are derived from the BoP probabilities. Interestingly, the second distance family interpolates between the shortest path distance and the resistance distance. In addition, it extends the Bellman-Ford formula for computing the shortest path distance in order to integrate sub-optimal paths by simply replacing the minimum operator by the soft minimum operator. Experimental results on semi-supervised classification show that both of the new distance families are competitive with other state-of-the-art approaches. In addition to the distance measures studied in this paper, the bag-of-paths framework enables straightforward computation of many other relevant network measures.
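The closed-form computation mentioned in the abstract above can be sketched as follows for regular (non-hitting) paths, under assumptions that the abstract does not spell out: the reference walk is the natural random walk on the adjacency matrix, costs are positive, and `theta` denotes the inverse temperature. The function name `bag_of_paths_probabilities` and the variable names are hypothetical, chosen only for this sketch.

```python
import numpy as np


def bag_of_paths_probabilities(A, C, theta):
    """Sketch of bag-of-paths probabilities P(start = i, end = j) for regular paths.

    A     : (n, n) nonnegative adjacency matrix (every node needs an outgoing edge)
    C     : (n, n) positive edge costs (values on non-edges are ignored)
    theta : inverse temperature, theta > 0
    """
    A = np.asarray(A, dtype=float)
    C = np.asarray(C, dtype=float)
    # Reference transition probabilities: natural random walk (an assumption).
    P_ref = A / A.sum(axis=1, keepdims=True)
    # Each edge is weighted by its reference probability times exp(-theta * cost).
    W = P_ref * np.exp(-theta * C)
    # Z = I + W + W^2 + ... accumulates the weights of all paths between node pairs.
    Z = np.linalg.inv(np.eye(A.shape[0]) - W)
    # Normalizing by the total weight gives a distribution over (start, end) pairs.
    return Z / Z.sum()
```

With positive costs and `theta > 0`, every row of `W` sums to less than one, so the series defining `Z` converges and the inverse exists. On a path graph, for example, the probability of drawing a path between adjacent nodes comes out larger than between the two end nodes, matching the intuition that nodes connected by many low-cost paths are highly related.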

* Manuscript submitted for publication

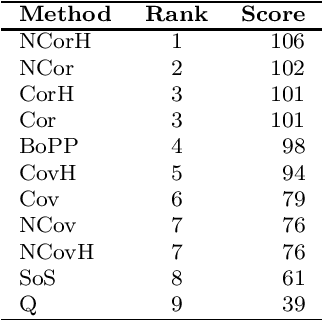

Developments in the theory of randomized shortest paths with a comparison of graph node distances

Oct 03, 2013

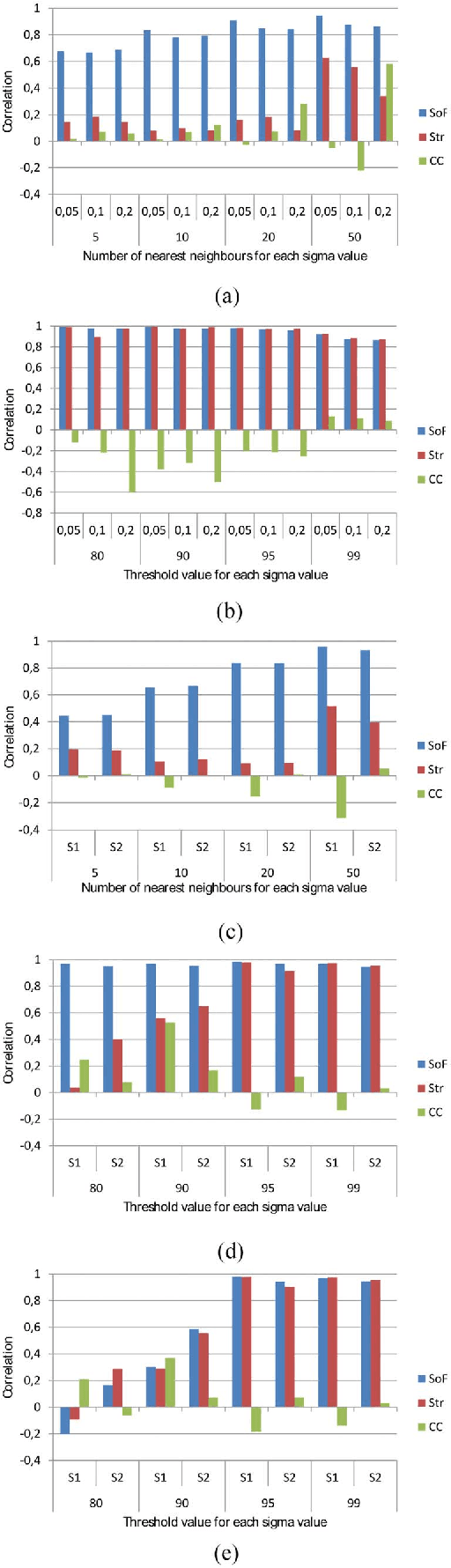

Abstract: There have lately been several suggestions for parametrized distances on a graph that generalize the shortest path distance and the commute time or resistance distance. The need for developing such distances has arisen from the observation that the above-mentioned common distances in many situations fail to take into account the global structure of the graph. In this article, we develop the theory of one family of graph node distances, known as the randomized shortest path dissimilarity, which has its foundation in statistical physics. We show that the randomized shortest path dissimilarity can be easily computed in closed form for all pairs of nodes of a graph. Moreover, we come up with a new definition of a distance measure that we call the free energy distance. The free energy distance can be seen as an upgrade of the randomized shortest path dissimilarity as it defines a metric and, in addition, satisfies the graph-geodetic property. The derivation and computation of the free energy distance are also straightforward. We then make a comparison between a set of generalized distances that interpolate between the shortest path distance and the commute time, or resistance distance. This comparison focuses on the applicability of the distances in graph node clustering and classification. The comparison, in general, shows that the parametrized distances perform well in the tasks. In particular, we see that the results obtained with the free energy distance are among the best in all the experiments.
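A minimal sketch of how the free energy distance can be computed, following the definition commonly given in the RSP literature (the symmetrized directed free energy obtained from the fundamental matrix of hitting paths), is shown below. The abstract itself does not give the formula, so the natural random walk reference, the inverse temperature `theta`, and the name `free_energy_distance` should all be read as assumptions of this sketch.

```python
import numpy as np


def free_energy_distance(A, C, theta):
    """Sketch of the free energy distance between all pairs of nodes.

    A     : (n, n) adjacency matrix of a strongly connected graph
    C     : (n, n) positive edge costs (values on non-edges are ignored)
    theta : inverse temperature, theta > 0
    """
    A = np.asarray(A, dtype=float)
    C = np.asarray(C, dtype=float)
    P_ref = A / A.sum(axis=1, keepdims=True)   # natural random walk (assumption)
    W = P_ref * np.exp(-theta * C)             # killed random walk weights
    Z = np.linalg.inv(np.eye(A.shape[0]) - W)  # sums over all regular paths
    Z_h = Z / np.diag(Z)                       # hitting paths: column j divided by z_jj
    Phi = -np.log(Z_h) / theta                 # directed free energies phi(i, j)
    return 0.5 * (Phi + Phi.T)                 # symmetrize to obtain the distance


# Path graph 0 - 1 - 2 with unit costs: node 1 separates nodes 0 and 2.
A_path = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
D_path = free_energy_distance(A_path, A_path, theta=5.0)
```

In this small example node 1 is a cut node between nodes 0 and 2, so the graph-geodetic property mentioned in the abstract means that `D_path[0, 2]` should equal `D_path[0, 1] + D_path[1, 2]`; for large `theta` the distance also approaches the shortest path distance.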

The Sum-over-Forests density index: identifying dense regions in a graph

Jan 04, 2013

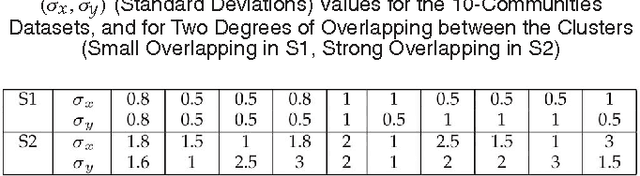

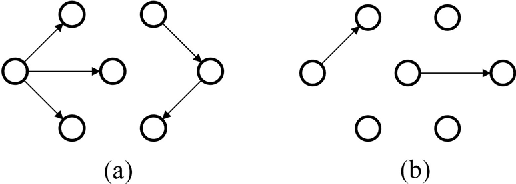

Abstract: This work introduces a novel nonparametric density index defined on graphs, the Sum-over-Forests (SoF) density index. It is based on a clear and intuitive idea: high-density regions in a graph are characterized by the fact that they contain a large number of low-cost trees with high outdegrees while low-density regions contain few. Therefore, a Boltzmann probability distribution on the countable set of forests in the graph is defined so that large (high-cost) forests occur with a low probability while short (low-cost) forests occur with a high probability. Then, the SoF density index of a node is defined as the expected outdegree of this node in a non-trivial tree of the forest, thus providing a measure of density around that node. Following the matrix-forest theorem, and a statistical physics framework, it is shown that the SoF density index can be easily computed in closed form through a simple matrix inversion. Experiments on artificial and real data sets show that the proposed index performs well at finding dense regions, for graphs of various origins.
