Marcelo Pereyra

Bayesian model selection and misspecification testing in imaging inverse problems only from noisy and partial measurements

Oct 31, 2025

Abstract: Modern imaging techniques heavily rely on Bayesian statistical models to address difficult image reconstruction and restoration tasks. This paper addresses the objective evaluation of such models in settings where ground truth is unavailable, with a focus on model selection and misspecification diagnosis. Existing unsupervised model evaluation methods are often unsuitable for computational imaging due to their high computational cost and incompatibility with modern image priors defined implicitly via machine learning models. We herein propose a general methodology for unsupervised model selection and misspecification detection in Bayesian imaging sciences, based on a novel combination of Bayesian cross-validation and data fission, a randomized measurement splitting technique. The approach is compatible with any Bayesian imaging sampler, including diffusion and plug-and-play samplers. We demonstrate the methodology through experiments involving various scoring rules and types of model misspecification, where we achieve excellent selection and detection accuracy with a low computational cost.

Learning few-step posterior samplers by unfolding and distillation of diffusion models

Jul 03, 2025

Abstract: Diffusion models (DMs) have emerged as powerful image priors in Bayesian computational imaging. Two primary strategies have been proposed for leveraging DMs in this context: Plug-and-Play methods, which are zero-shot and highly flexible but rely on approximations; and specialized conditional DMs, which achieve higher accuracy and faster inference for specific tasks through supervised training. In this work, we introduce a novel framework that integrates deep unfolding and model distillation to transform a DM image prior into a few-step conditional model for posterior sampling. A central innovation of our approach is the unfolding of a Markov chain Monte Carlo (MCMC) algorithm - specifically, the recently proposed LATINO Langevin sampler (Spagnoletti et al., 2025) - representing the first known instance of deep unfolding applied to a Monte Carlo sampling scheme. We demonstrate our proposed unfolded and distilled samplers through extensive experiments and comparisons with the state of the art, where they achieve excellent accuracy and computational efficiency, while retaining the flexibility to adapt to variations in the forward model at inference time.

Hypothesis Testing in Imaging Inverse Problems

May 28, 2025

Abstract: This paper proposes a framework for semantic hypothesis testing tailored to imaging inverse problems. Modern imaging methods struggle to support hypothesis testing, a core component of the scientific method that is essential for the rigorous interpretation of experiments and robust interfacing with decision-making processes. There are three main reasons why image-based hypothesis testing is challenging. First, the difficulty of using a single observation to simultaneously reconstruct an image, formulate hypotheses, and quantify their statistical significance. Second, the hypotheses encountered in imaging are mostly of semantic nature, rather than quantitative statements about pixel values. Third, it is challenging to control test error probabilities because the null and alternative distributions are often unknown. Our proposed approach addresses these difficulties by leveraging concepts from self-supervised computational imaging, vision-language models, and non-parametric hypothesis testing with e-values. We demonstrate our proposed framework through numerical experiments related to image-based phenotyping, where we achieve excellent power while robustly controlling Type I errors.

Efficient Bayesian Computation Using Plug-and-Play Priors for Poisson Inverse Problems

Mar 20, 2025

Abstract: This paper introduces a novel plug-and-play (PnP) Langevin sampling methodology for Bayesian inference in low-photon Poisson imaging problems, a challenging class of problems with significant applications in astronomy, medicine, and biology. PnP Langevin sampling algorithms offer a powerful framework for Bayesian image restoration, enabling accurate point estimation as well as advanced inference tasks, including uncertainty quantification and visualization analyses, and empirical Bayesian inference for automatic model parameter tuning. However, existing PnP Langevin algorithms are not well-suited for low-photon Poisson imaging due to high solution uncertainty and poor regularity properties, such as exploding gradients and non-negativity constraints. To address these challenges, we propose two strategies for extending Langevin PnP sampling to Poisson imaging models: (i) an accelerated PnP Langevin method that incorporates boundary reflections and a Poisson likelihood approximation and (ii) a mirror sampling algorithm that leverages a Riemannian geometry to handle the constraints and the poor regularity of the likelihood without approximations. The effectiveness of these approaches is demonstrated through extensive numerical experiments and comparisons with state-of-the-art methods.

LATINO-PRO: LAtent consisTency INverse sOlver with PRompt Optimization

Mar 16, 2025

Abstract: Text-to-image latent diffusion models (LDMs) have recently emerged as powerful generative models with great potential for solving inverse problems in imaging. However, leveraging such models in a Plug & Play (PnP), zero-shot manner remains challenging because it requires identifying a suitable text prompt for the unknown image of interest. Also, existing text-to-image PnP approaches are highly computationally expensive. We herein address these challenges by proposing a novel PnP inference paradigm specifically designed for embedding generative models within stochastic inverse solvers, with special attention to Latent Consistency Models (LCMs), which distill LDMs into fast generators. We leverage our framework to propose LAtent consisTency INverse sOlver (LATINO), the first zero-shot PnP framework to solve inverse problems with priors encoded by LCMs. Our conditioning mechanism avoids automatic differentiation and reaches SOTA quality in as little as 8 neural function evaluations. As a result, LATINO delivers remarkably accurate solutions and is significantly more memory and computationally efficient than previous approaches. We then embed LATINO within an empirical Bayesian framework that automatically calibrates the text prompt from the observed measurements by marginal maximum likelihood estimation. Extensive experiments show that prompt self-calibration greatly improves estimation, allowing LATINO with PRompt Optimization to define new SOTAs in image reconstruction quality and computational efficiency.

Self-supervised Conformal Prediction for Uncertainty Quantification in Imaging Problems

Feb 07, 2025

Abstract: Most image restoration problems are ill-conditioned or ill-posed and hence involve significant uncertainty. Quantifying this uncertainty is crucial for reliably interpreting experimental results, particularly when reconstructed images inform critical decisions and science. However, most existing image restoration methods either fail to quantify uncertainty or provide estimates that are highly inaccurate. Conformal prediction has recently emerged as a flexible framework to equip any estimator with uncertainty quantification capabilities that, by construction, have nearly exact marginal coverage. To achieve this, conformal prediction relies on abundant ground truth data for calibration. However, in image restoration problems, reliable ground truth data is often expensive or not possible to acquire. Also, reliance on ground truth data can introduce large biases in situations of distribution shift between calibration and deployment. This paper seeks to develop a more robust approach to conformal prediction for image restoration problems by proposing a self-supervised conformal prediction method that leverages Stein's Unbiased Risk Estimator (SURE) to self-calibrate directly from the observed noisy measurements, bypassing the need for ground truth. The method is suitable for any linear imaging inverse problem that is ill-conditioned, and it is especially powerful when used with modern self-supervised image restoration techniques that can also be trained directly from measurement data. The proposed approach is demonstrated through numerical experiments on image denoising and deblurring, where it delivers results that are remarkably accurate and comparable to those obtained by supervised conformal prediction with ground truth data.

Empirical Bayesian image restoration by Langevin sampling with a denoising diffusion implicit prior

Sep 06, 2024

Abstract: Score-based diffusion methods provide a powerful strategy to solve image restoration tasks by flexibly combining a pre-trained foundational prior model with a likelihood function specified during test time. Such methods are predominantly derived from two stochastic processes: reversing Ornstein-Uhlenbeck, which underpins the celebrated denoising diffusion probabilistic models (DDPM) and denoising diffusion implicit models (DDIM), and the Langevin diffusion process. The solutions delivered by DDPM and DDIM are often remarkably realistic, but they are not always consistent with measurements because of likelihood intractability issues and the associated required approximations. Alternatively, using a Langevin process circumvents the intractable likelihood issue, but usually leads to restoration results of inferior quality and longer computing times. This paper presents a novel and highly computationally efficient image restoration method that carefully embeds a foundational DDPM denoiser within an empirical Bayesian Langevin algorithm, which jointly calibrates key model hyper-parameters as it estimates the model's posterior mean. Extensive experimental results on three canonical tasks (image deblurring, super-resolution, and inpainting) demonstrate that the proposed approach improves on state-of-the-art strategies both in image estimation accuracy and computing time.

Do Bayesian imaging methods report trustworthy probabilities?

May 13, 2024

Abstract: Bayesian statistics is a cornerstone of imaging sciences, underpinning many and varied approaches from Markov random fields to score-based denoising diffusion models. In addition to powerful image estimation methods, the Bayesian paradigm also provides a framework for uncertainty quantification and for using image data as quantitative evidence. These probabilistic capabilities are important for the rigorous interpretation of experimental results and for robust interfacing of quantitative imaging pipelines with scientific and decision-making processes. However, are the probabilities delivered by existing Bayesian imaging methods meaningful under replication of an experiment, or are they only meaningful as subjective measures of belief? This paper presents a Monte Carlo method to explore this question. We then leverage the proposed Monte Carlo method and run a large experiment requiring 1,000 GPU-hours to probe the accuracy of five canonical Bayesian imaging methods that are representative of some of the main Bayesian imaging strategies from the past decades (a score-based denoising diffusion technique, a plug-and-play Langevin algorithm utilising a Lipschitz-regularised DnCNN denoiser, a Bayesian method with a dictionary-based prior trained subject to a log-concavity constraint, an empirical Bayesian method with a total-variation prior, and a hierarchical Bayesian Gibbs sampler based on a Gaussian Markov random field model). We find that, in a few cases, the probabilities reported by modern Bayesian imaging techniques are in broad agreement with long-term averages as observed over a large number of replications of an experiment, but existing Bayesian imaging methods are generally not able to deliver reliable uncertainty quantification results.

Unsupervised Training of Convex Regularizers using Maximum Likelihood Estimation

Apr 08, 2024

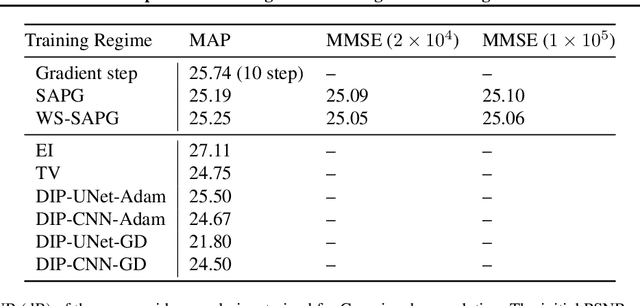

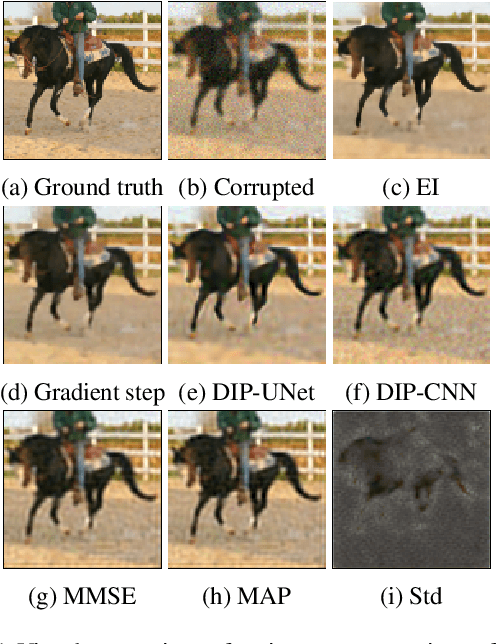

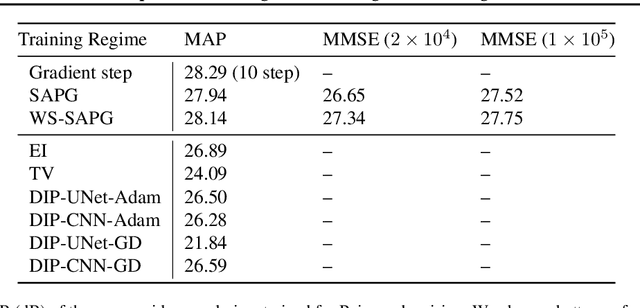

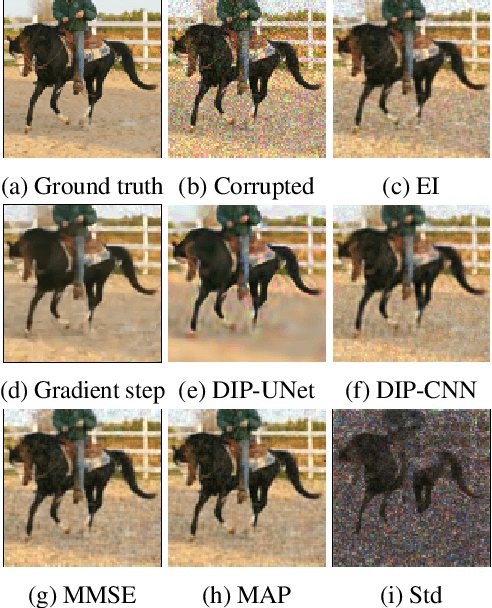

Abstract: Unsupervised learning is a training approach for situations where ground truth data is unavailable, as in imaging inverse problems. We present an unsupervised Bayesian training approach to learning convex neural network regularizers using a fixed noisy dataset, based on a dual Markov chain estimation method. Compared to classical supervised adversarial regularization methods, where there is access to both clean images and unlimited noisy copies, we demonstrate close performance on natural image Gaussian deconvolution and Poisson denoising tasks.

Statistical modelling and Bayesian inversion for a Compton imaging system: application to radioactive source localisation

Feb 16, 2024

Abstract: This paper presents a statistical forward model for a Compton imaging system, called Compton imager. This system, under development at the University of Illinois Urbana Champaign, is a variant of Compton cameras with a single type of sensors which can simultaneously act as scatterers and absorbers. This imager is convenient for imaging situations requiring a wide field of view. The proposed statistical forward model is then used to solve the inverse problem of estimating the location and energy of point-like sources from observed data. This inverse problem is formulated and solved in a Bayesian framework by using a Metropolis within Gibbs algorithm for the estimation of the location, and an expectation-maximization algorithm for the estimation of the energy. This approach leads to more accurate estimation when compared with the deterministic standard back-projection approach, with the additional benefit of uncertainty quantification in the low photon imaging setting.
