Manabu Tsukada

Trust, Don't Trust, or Flip: Robust Preference-Based Reinforcement Learning with Multi-Expert Feedback

Jan 26, 2026

Abstract:Preference-based reinforcement learning (PBRL) offers a promising alternative to explicit reward engineering by learning from pairwise trajectory comparisons. However, real-world preference data often comes from heterogeneous annotators with varying reliability: some accurate, some noisy, and some systematically adversarial. Existing PBRL methods either treat all feedback equally or attempt to filter out unreliable sources, but both approaches fail when faced with adversarial annotators who systematically provide incorrect preferences. We introduce TriTrust-PBRL (TTP), a unified framework that jointly learns a shared reward model and expert-specific trust parameters from multi-expert preference feedback. The key insight is that trust parameters naturally evolve during gradient-based optimization to be positive (trust), near zero (ignore), or negative (flip), enabling the model to automatically invert adversarial preferences and recover useful signal rather than merely discarding corrupted feedback. We provide theoretical analysis establishing identifiability guarantees and detailed gradient analysis that explains how expert separation emerges naturally during training without explicit supervision. Empirically, we evaluate TTP on four diverse domains spanning manipulation tasks (MetaWorld) and locomotion (DM Control) under various corruption scenarios. TTP achieves state-of-the-art robustness, maintaining near-oracle performance under adversarial corruption while standard PBRL methods fail catastrophically. Notably, TTP outperforms existing baselines by successfully learning from mixed expert pools containing both reliable and adversarial annotators, all while requiring no expert features beyond identification indices and integrating seamlessly with existing PBRL pipelines.
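The trust / ignore / flip behaviour described in the abstract can be illustrated with a small sketch. The abstract does not state the exact likelihood, so the form below is an assumption: a Bradley-Terry style preference probability whose logit is multiplied by a per-annotator scalar. The function name `preference_probability` and the parameter `trust` are hypothetical and not taken from the paper.

```python
import math

def preference_probability(return_a, return_b, trust):
    """Probability that an annotator prefers segment A over segment B.

    Assumed form (not confirmed by the abstract): a Bradley-Terry logit
    on the difference of predicted returns, scaled by the annotator's
    scalar trust parameter.
    """
    logit = trust * (return_a - return_b)
    return 1.0 / (1.0 + math.exp(-logit))

# Segment A has the higher predicted return under the shared reward model.
for trust in (2.0, 0.0, -2.0):
    p = preference_probability(return_a=1.5, return_b=0.5, trust=trust)
    print(f"trust={trust:+.1f} -> P(A preferred) = {p:.3f}")
```

Under this assumed form, a positive trust value makes the annotator agree with the reward model, a value of zero makes every label uninformative (probability 0.5), and a negative value inverts the predicted preference, which is one way an adversarial annotator's labels could still carry signal.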

SUG-Occ: An Explicit Semantics and Uncertainty Guided Sparse Learning Framework for Real-Time 3D Occupancy Prediction

Jan 16, 2026

Abstract:As autonomous driving moves toward full scene understanding, 3D semantic occupancy prediction has emerged as a crucial perception task, offering voxel-level semantics beyond traditional detection and segmentation paradigms. However, such a refined representation for scene understanding incurs prohibitive computation and memory overhead, posing a major barrier to practical real-time deployment. To address this, we propose SUG-Occ, an explicit Semantics and Uncertainty Guided Sparse Learning Enabled 3D Occupancy Prediction Framework, which exploits the inherent sparsity of 3D scenes to reduce redundant computation while maintaining geometric and semantic completeness. Specifically, we first utilize semantic and uncertainty priors to suppress projections from free space during view transformation while employing an explicit unsigned distance encoding to enhance geometric consistency, producing a structurally consistent sparse 3D representation. Secondly, we design a cascade sparse completion module via hyper cross sparse convolution and generative upsampling to enable efficient coarse-to-fine reasoning. Finally, we devise an object contextual representation (OCR) based mask decoder that aggregates global semantic context from sparse features and refines voxel-wise predictions via lightweight query-context interactions, avoiding expensive attention operations over volumetric features. Extensive experiments on the SemanticKITTI benchmark demonstrate that the proposed approach outperforms the baselines, achieving a 7.34% improvement in accuracy and a 57.8% gain in efficiency.

Trajectory Planning for UAV-Based Smart Farming Using Imitation-Based Triple Deep Q-Learning

Dec 21, 2025

Abstract:Unmanned aerial vehicles (UAVs) have emerged as a promising auxiliary platform for smart agriculture, capable of simultaneously performing weed detection, recognition, and data collection from wireless sensors. However, trajectory planning for UAV-based smart agriculture is challenging due to the high uncertainty of the environment, partial observations, and limited battery capacity of UAVs. To address these issues, we formulate the trajectory planning problem as a Markov decision process (MDP) and leverage multi-agent reinforcement learning (MARL) to solve it. Furthermore, we propose a novel imitation-based triple deep Q-network (ITDQN) algorithm, which employs an elite imitation mechanism to reduce exploration costs and utilizes a mediator Q-network over a double deep Q-network (DDQN) to accelerate and stabilize training and improve performance. Experimental results in both simulated and real-world environments demonstrate the effectiveness of our solution. Moreover, our proposed ITDQN outperforms DDQN by 4.43% in weed recognition rate and 6.94% in data collection rate.

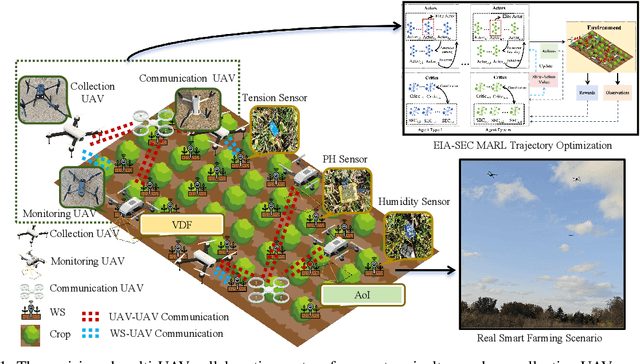

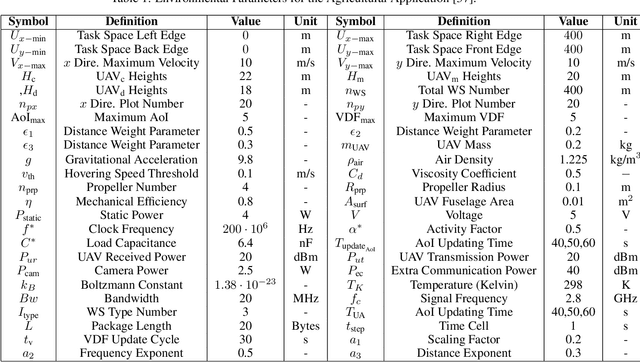

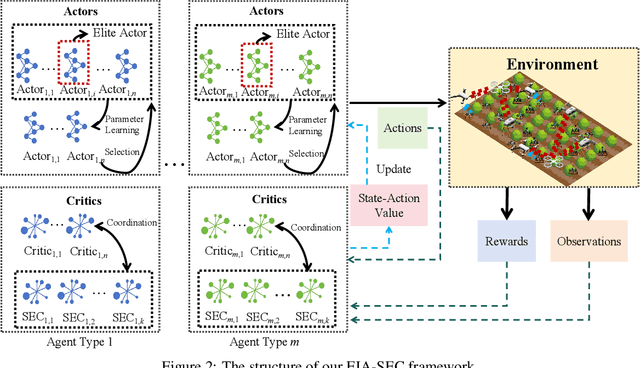

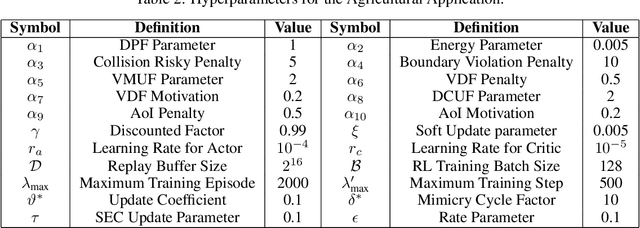

EIA-SEC: Improved Actor-Critic Framework for Multi-UAV Collaborative Control in Smart Agriculture

Dec 21, 2025

Abstract:The widespread application of wireless communication technology has promoted the development of smart agriculture, where unmanned aerial vehicles (UAVs) play a multifunctional role. We target a multi-UAV smart agriculture system where UAVs cooperatively perform data collection, image acquisition, and communication tasks. In this context, we model a Markov decision process to solve the multi-UAV trajectory planning problem. Moreover, we propose a novel Elite Imitation Actor-Shared Ensemble Critic (EIA-SEC) framework, where agents adaptively learn from the elite agent to reduce trial-and-error costs, and a shared ensemble critic collaborates with each agent's local critic to ensure unbiased objective value estimates and prevent overestimation. Experimental results demonstrate that EIA-SEC outperforms state-of-the-art baselines in terms of reward performance, training stability, and convergence speed.

FM-EAC: Feature Model-based Enhanced Actor-Critic for Multi-Task Control in Dynamic Environments

Dec 17, 2025

Abstract:Model-based reinforcement learning (MBRL) and model-free reinforcement learning (MFRL) evolve along distinct paths but converge in the design of Dyna-Q [1]. However, modern RL methods still struggle with effective transferability across tasks and scenarios. Motivated by this limitation, we propose a generalized algorithm, Feature Model-Based Enhanced Actor-Critic (FM-EAC), that integrates planning, acting, and learning for multi-task control in dynamic environments. FM-EAC combines the strengths of MBRL and MFRL and improves generalizability through the use of novel feature-based models and an enhanced actor-critic framework. Simulations in both urban and agricultural applications demonstrate that FM-EAC consistently outperforms many state-of-the-art MBRL and MFRL methods. More importantly, different sub-networks can be customized within FM-EAC according to user-specific requirements.

You Share Beliefs, I Adapt: Progressive Heterogeneous Collaborative Perception

Sep 11, 2025

Abstract:Collaborative perception enables vehicles to overcome individual perception limitations by sharing information, allowing them to see further and through occlusions. In real-world scenarios, models on different vehicles are often heterogeneous due to manufacturer variations. Existing methods for heterogeneous collaborative perception address this challenge by fine-tuning adapters or the entire network to bridge the domain gap. However, these methods are impractical in real-world applications, as each new collaborator must undergo joint training with the ego vehicle on a dataset before inference, or the ego vehicle stores models for all potential collaborators in advance. Therefore, we pose a new question: Can we tackle this challenge directly during inference, eliminating the need for joint training? To answer this, we introduce Progressive Heterogeneous Collaborative Perception (PHCP), a novel framework that formulates the problem as few-shot unsupervised domain adaptation. Unlike previous work, PHCP dynamically aligns features by self-training an adapter during inference, eliminating the need for labeled data and joint training. Extensive experiments on the OPV2V dataset demonstrate that PHCP achieves strong performance across diverse heterogeneous scenarios. Notably, PHCP achieves performance comparable to SOTA methods trained on the entire dataset while using only a small amount of unlabeled data.

Towards Efficient Roadside LiDAR Deployment: A Fast Surrogate Metric Based on Entropy-Guided Visibility

Apr 09, 2025

Abstract:The deployment of roadside LiDAR sensors plays a crucial role in the development of Cooperative Intelligent Transport Systems (C-ITS). However, the high cost of LiDAR sensors necessitates efficient placement strategies to maximize detection performance. Traditional roadside LiDAR deployment methods rely on expert insight, making them time-consuming. Automating this process, however, demands extensive computation, as it requires not only visibility evaluation but also assessing detection performance across different LiDAR placements. To address this challenge, we propose a fast surrogate metric, the Entropy-Guided Visibility Score (EGVS), based on information gain to evaluate object detection performance in roadside LiDAR configurations. EGVS leverages Traffic Probabilistic Occupancy Grids (TPOG) to prioritize critical areas and employs entropy-based calculations to quantify the information captured by LiDAR beams. This eliminates the need for direct detection performance evaluation, which typically requires extensive labeling and computational resources. By integrating EGVS into the optimization process, we significantly accelerate the search for optimal LiDAR configurations. Experimental results using the AWSIM simulator demonstrate that EGVS strongly correlates with Average Precision (AP) scores and effectively predicts object detection performance. This approach offers a computationally efficient solution for roadside LiDAR deployment, facilitating scalable smart infrastructure development.

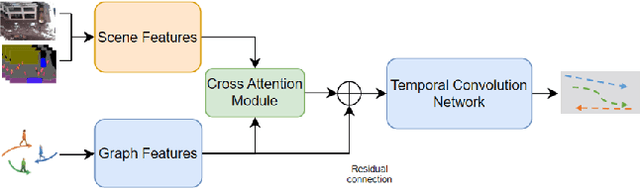

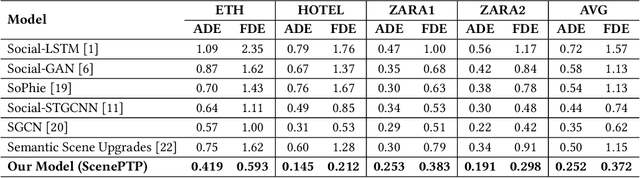

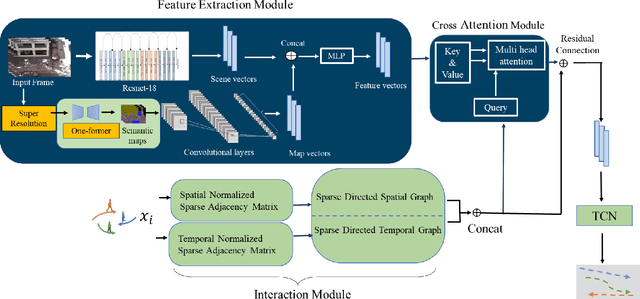

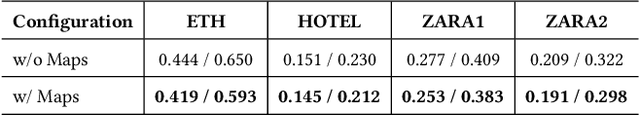

Where Do You Go? Pedestrian Trajectory Prediction using Scene Features

Jan 23, 2025

Abstract:Accurate prediction of pedestrian trajectories is crucial for enhancing the safety of autonomous vehicles and reducing traffic fatalities involving pedestrians. While numerous studies have focused on modeling interactions among pedestrians to forecast their movements, the influence of environmental factors and scene-object placements has been comparatively underexplored. In this paper, we present a novel trajectory prediction model that integrates both pedestrian interactions and environmental context to improve prediction accuracy. Our approach captures spatial and temporal interactions among pedestrians within a sparse graph framework. To account for pedestrian-scene interactions, we employ advanced image enhancement and semantic segmentation techniques to extract detailed scene features. These scene and interaction features are then fused through a cross-attention mechanism, enabling the model to prioritize relevant environmental factors that influence pedestrian movements. Finally, a temporal convolutional network processes the fused features to predict future pedestrian trajectories. Experimental results demonstrate that our method significantly outperforms existing state-of-the-art approaches, achieving ADE and FDE values of 0.252 and 0.372 meters, respectively, underscoring the importance of incorporating both social interactions and environmental context in pedestrian trajectory prediction.
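For readers unfamiliar with the two metrics quoted above, here is a minimal sketch of how ADE (average displacement error) and FDE (final displacement error) are commonly computed for a single trajectory. The function name `ade_fde` and the input layout (lists of `(x, y)` positions in meters) are illustrative assumptions; the paper's own evaluation code is not shown in the abstract.

```python
import math

def ade_fde(predicted, ground_truth):
    """Return (ADE, FDE) for two equal-length lists of (x, y) positions in meters."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("trajectories must be non-empty and of equal length")
    # Euclidean distance between prediction and ground truth at each time step.
    errors = [math.dist(p, g) for p, g in zip(predicted, ground_truth)]
    ade = sum(errors) / len(errors)  # mean error over all predicted steps
    fde = errors[-1]                 # error at the final predicted step
    return ade, fde

# Toy example: the prediction drifts away from the true path over time.
pred = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
truth = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
print(ade_fde(pred, truth))
```

Benchmark numbers are typically averaged over many pedestrians and scenes, and some protocols report the best of several sampled predictions, so a single-trajectory value like this one is only the building block.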

Neural Error Covariance Estimation for Precise LiDAR Localization

Jan 05, 2025

Abstract:Autonomous vehicles have gained significant attention due to technological advancements and their potential to transform transportation. A critical challenge in this domain is precise localization, particularly in LiDAR-based map matching, which is prone to errors due to degeneracy in the data. Most sensor fusion techniques, such as the Kalman filter, rely on accurate error covariance estimates for each sensor to improve localization accuracy. However, obtaining reliable covariance values for map matching remains a complex task. To address this challenge, we propose a neural network-based framework for predicting localization error covariance in LiDAR map matching. To achieve this, we introduce a novel dataset generation method specifically designed for error covariance estimation. In our evaluation using a Kalman filter, we achieved a 2 cm improvement in localization accuracy, a significant enhancement in this domain.
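To see why the measurement error covariance matters for fusion, the sketch below runs the textbook one-dimensional Kalman measurement update with two different measurement variances. It is a generic illustration, not the paper's pipeline: the function name `kalman_update` and all numeric values are made up, and in the paper's setting the variance `r` would presumably be supplied by the learned covariance predictor.

```python
def kalman_update(x_prior, p_prior, z, r):
    """Scalar Kalman measurement update.

    x_prior, p_prior: prior state estimate and its variance
    z, r:             measurement and its (assumed known) error variance
    Returns the posterior estimate, posterior variance, and Kalman gain.
    """
    gain = p_prior / (p_prior + r)
    x_post = x_prior + gain * (z - x_prior)
    p_post = (1.0 - gain) * p_prior
    return x_post, p_post, gain

# Same prior and measurement, different assumed measurement variances.
for r in (0.01, 1.0, 100.0):
    x, p, k = kalman_update(x_prior=0.0, p_prior=1.0, z=1.0, r=r)
    print(f"r={r:>6}: gain={k:.3f}, estimate={x:.3f}, variance={p:.3f}")
```

A small `r` pulls the estimate toward the measurement and a large `r` leaves it near the prior, so an overconfident covariance for a degenerate map-matching result would let a bad measurement corrupt the fused pose.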

LiDAR-Camera Fusion for Video Panoptic Segmentation without Video Training

Dec 30, 2024

Abstract:Panoptic segmentation, which combines instance and semantic segmentation, has gained a lot of attention in autonomous vehicles due to its comprehensive representation of the scene. This task can be applied to cameras and LiDAR sensors, but there has been limited focus on combining both sensors to enhance image panoptic segmentation (PS). Although previous research has acknowledged the benefit of 3D data for camera-based scene perception, no specific study has explored the influence of 3D data on image and video panoptic segmentation (VPS). This work introduces a feature fusion module that enhances PS and VPS by fusing LiDAR and image data for autonomous vehicles. We also show that, in addition to this fusion, our proposed model, which utilizes two simple modifications, can deliver higher-quality VPS without being trained on video data. The results demonstrate a substantial improvement in both the image and video panoptic segmentation evaluation metrics, by up to 5 points.
