Mahak Kothari

FRCSyn Challenge at WACV 2024: Face Recognition Challenge in the Era of Synthetic Data

Nov 17, 2023

Abstract: Despite the widespread adoption of face recognition technology around the world, and its remarkable performance on current benchmarks, several challenges still need to be examined in more detail. This paper offers an overview of the Face Recognition Challenge in the Era of Synthetic Data (FRCSyn) organized at WACV 2024. This is the first international challenge aiming to explore the use of synthetic data in face recognition to address existing limitations in the technology. Specifically, the FRCSyn Challenge targets concerns related to data privacy issues, demographic biases, generalization to unseen scenarios, and performance limitations in challenging scenarios, including significant age disparities between enrollment and testing, pose variations, and occlusions. The results achieved in the FRCSyn Challenge, together with the proposed benchmark, contribute significantly to the application of synthetic data to improve face recognition technology.

Deep Neural Networks on EEG Signals to Predict Auditory Attention Score Using Gramian Angular Difference Field

Oct 24, 2021

Abstract: Auditory attention is a selective type of hearing in which people intentionally focus on a specific sound source or on spoken words whilst ignoring or inhibiting other auditory stimuli. In some sense, the auditory attention score of an individual shows how well the person can focus in auditory tasks. The recent advancements in deep learning and in non-invasive technologies for recording neural activity raise the question: can deep learning, along with technologies such as electroencephalography (EEG), be used to predict the auditory attention score of an individual? In this paper, we focus on this very problem of estimating a person's auditory attention level based on their brain's electrical activity captured using 14-channel EEG signals. More specifically, we treat attention estimation as a regression problem. The work has been performed on the publicly available Phyaat dataset. The Gramian Angular Difference Field (GADF) has been used to convert time-series EEG data into an image having 14 channels, enabling us to train various deep learning models such as 2D CNN, 3D CNN, and convolutional autoencoders. Their performances have been compared amongst themselves as well as with previous work. Amongst the different models we tried, 2D CNN gave the best performance. It outperformed the existing methods by a margin of 0.22 mean absolute error (MAE).
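The GADF step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the standard definition (rescale each series to [-1, 1], take the angle φ = arccos(x), and form the matrix sin(φ_i − φ_j)); the function names `gadf` and `gadf_multichannel`, the window length, and the random input are hypothetical and are not taken from the paper.

```python
import numpy as np

def gadf(series):
    """Gramian Angular Difference Field of a 1-D series (standard definition)."""
    x = np.asarray(series, dtype=float)
    lo, hi = x.min(), x.max()
    if hi == lo:
        raise ValueError("series must not be constant")
    # Rescale to [-1, 1] so that arccos is defined; clip guards against rounding.
    x = np.clip(2.0 * (x - lo) / (hi - lo) - 1.0, -1.0, 1.0)
    phi = np.arccos(x)
    # Entry (i, j) is sin(phi_i - phi_j).
    return np.sin(phi[:, None] - phi[None, :])

def gadf_multichannel(eeg):
    """Map an array of shape (channels, samples) to (channels, samples, samples)."""
    return np.stack([gadf(channel) for channel in eeg])

# Hypothetical input: 14 channels, 64 samples per window (random stand-in data).
rng = np.random.default_rng(0)
eeg_window = rng.standard_normal((14, 64))
image = gadf_multichannel(eeg_window)
```

Each channel yields one square matrix, so a 14-channel window becomes a 14-channel image that a 2D CNN can consume; how the paper windows or resamples the signal is not stated in the abstract.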

Bayesian Optimisation for a Biologically Inspired Population Neural Network

Apr 13, 2021

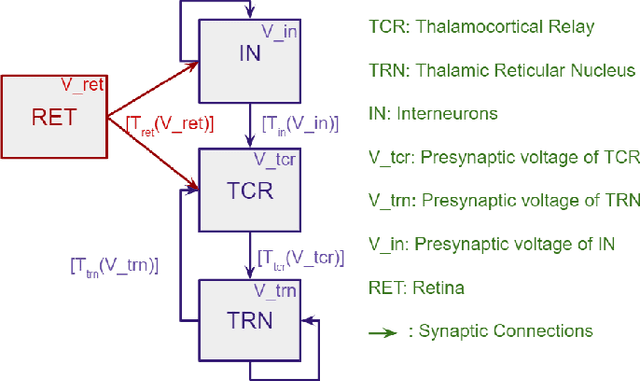

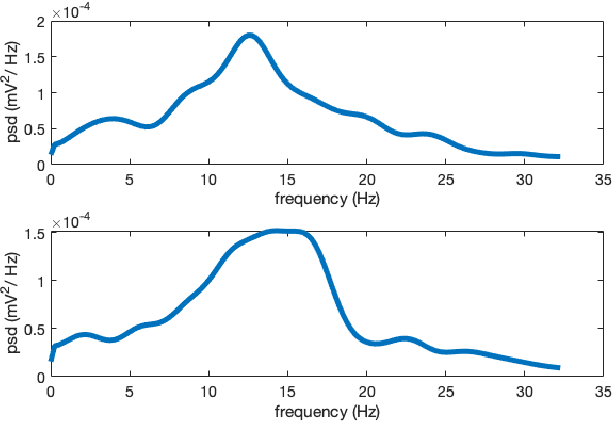

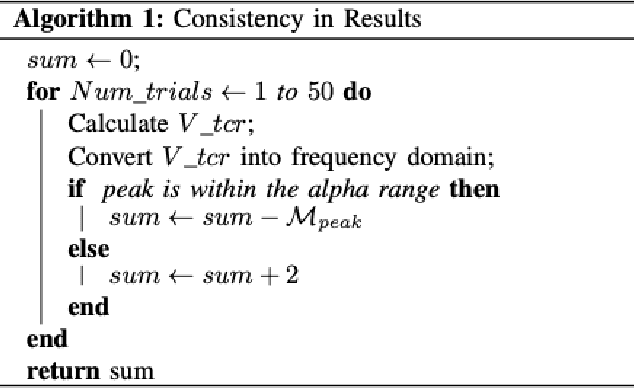

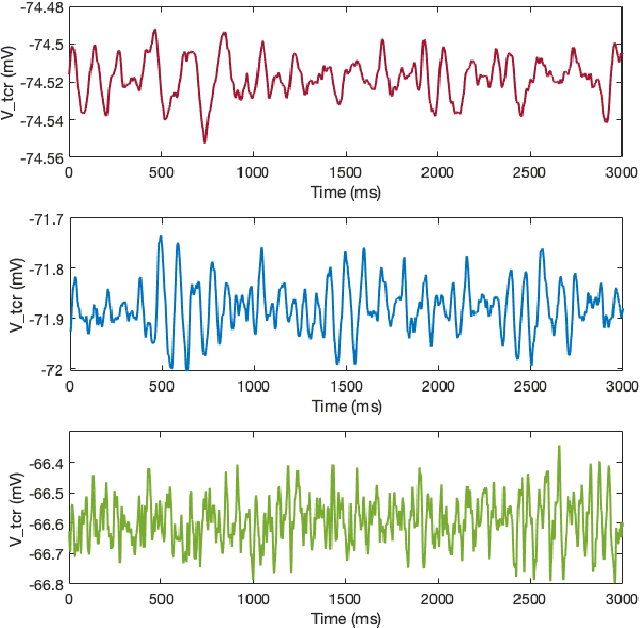

Abstract: We have used Bayesian Optimisation (BO) to find hyper-parameters in an existing biologically plausible population neural network. The 8-dimensional optimal hyper-parameter combination should be such that the network dynamics simulate the resting state alpha rhythm (8-13 Hz rhythms in brain signals). Each combination of these eight hyper-parameters constitutes a 'datapoint' in the parameter space. The best combination of these parameters leads to the neural network's output power spectral peak being constrained within the alpha band. Further, constraints were introduced to the BO algorithm based on qualitative observation of the network output time series, so that high-amplitude pseudo-periodic oscillations are removed. Upon successful implementation for the alpha band, we further optimised the network to oscillate within the theta (4-8 Hz) and beta (13-30 Hz) bands. The changing rhythms in the model can now be studied using the identified optimal hyper-parameters for the respective frequency bands. We have previously tuned parameters in the existing neural network by a trial-and-error approach; however, due to time and computational constraints, we could not vary more than three parameters at once. The approach detailed here allows an automatic hyper-parameter search, producing reliable parameter sets for the network.
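The requirement that the output's power spectral peak lie within a target band can be expressed as a score that an optimiser could minimise. The sketch below is an illustration only: the functions `peak_frequency` and `band_penalty`, the 256 Hz sampling rate, and the synthetic sine input are assumptions, and the abstract does not specify the objective actually used.

```python
import numpy as np

def peak_frequency(signal, fs):
    """Frequency (Hz) of the largest spectral peak, after removing the mean."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                       # drop the DC component
    power = np.abs(np.fft.rfft(x)) ** 2    # unnormalised power spectrum
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return float(freqs[np.argmax(power)])

def band_penalty(peak_hz, low_hz, high_hz):
    """Distance (Hz) of the peak from the band; zero when the peak is inside."""
    if peak_hz < low_hz:
        return low_hz - peak_hz
    if peak_hz > high_hz:
        return peak_hz - high_hz
    return 0.0

# Hypothetical network output: a 10 Hz oscillation sampled at 256 Hz for 4 s.
fs = 256
t = np.arange(4 * fs) / fs
output = np.sin(2 * np.pi * 10.0 * t) + 3.0

peak = peak_frequency(output, fs)
alpha_score = band_penalty(peak, 8.0, 13.0)   # alpha band from the abstract
theta_score = band_penalty(peak, 4.0, 8.0)    # theta band from the abstract
```

A score of this kind would be evaluated once per candidate set of eight hyper-parameters; retargeting the search to the theta or beta band then only changes the band limits.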
