Luca Rossi

LoRAP: Low-Rank Aggregation Prompting for Quantized Graph Neural Networks Training

Jan 21, 2026

Abstract: Graph Neural Networks (GNNs) are neural networks that process graph data, capturing the relationships and interactions between nodes through the message-passing mechanism. GNN quantization has emerged as a promising approach for reducing model size and accelerating inference in resource-constrained environments. Compared with LLM quantization, GNN quantization places greater emphasis on quantizing graph features. Inspired by this, we propose to leverage prompt learning, which manipulates the input data, to improve the performance of quantization-aware training (QAT) for GNNs. To mitigate the issue that prompting the node features alone can only make part of the quantized aggregation result optimal, we introduce Low-Rank Aggregation Prompting (LoRAP), which injects lightweight, input-dependent prompts into each aggregated feature to optimize the results of quantized aggregations. Extensive evaluations on 4 leading QAT frameworks over 9 graph datasets demonstrate that LoRAP consistently enhances the performance of low-bit quantized GNNs while introducing minimal computational overhead.
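
The abstract does not give the exact form of the prompts, so the following is only a minimal sketch of the general idea, not the authors' implementation. It assumes sum aggregation over neighbours, symmetric uniform fake-quantization, and an additive prompt computed from the aggregated feature through two small matrices (`down` and `up`, hypothetical names) whose product has low rank.

```python
from typing import Dict, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def quantize(x: Sequence[float], bits: int = 4, scale: float = 0.1) -> Vector:
    """Symmetric uniform fake-quantization: snap to a grid, clamp, rescale."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax - 1, min(qmax, round(v / scale))) * scale for v in x]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def prompted_aggregation(
    features: Sequence[Sequence[float]],
    neighbours: Dict[int, List[int]],
    node: int,
    down: Matrix,  # shape (r, d): projects the aggregate to rank r (assumed)
    up: Matrix,    # shape (d, r): projects back to feature size d (assumed)
    bits: int = 4,
    scale: float = 0.1,
) -> Vector:
    """Sum quantized neighbour features, then add an input-dependent prompt."""
    dim = len(features[node])
    agg = [0.0] * dim
    for j in neighbours[node]:
        q = quantize(features[j], bits, scale)
        agg = [a + b for a, b in zip(agg, q)]
    # The prompt depends on the aggregate itself and has rank at most r.
    prompt = matvec(up, matvec(down, agg))
    return [a + p for a, p in zip(agg, prompt)]


# Toy usage: node 0 aggregates from nodes 1 and 2 with a rank-1 prompt.
features = [[0.12, 0.31], [0.18, -0.22], [0.40, 0.07]]
neighbours = {0: [1, 2]}
down = [[1.0, 1.0]]
up = [[0.5], [0.0]]
out = prompted_aggregation(features, neighbours, 0, down, up)
```

In a real QAT setup the two matrices would be trained jointly with the quantized network; here they are fixed only to keep the sketch runnable.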

RealHarm: A Collection of Real-World Language Model Application Failures

Apr 14, 2025

Abstract: Language model deployments in consumer-facing applications introduce numerous risks. While existing research on harms and hazards of such applications follows top-down approaches derived from regulatory frameworks and theoretical analyses, empirical evidence of real-world failure modes remains underexplored. In this work, we introduce RealHarm, a dataset of annotated problematic interactions with AI agents built from a systematic review of publicly reported incidents. Analyzing harms, causes, and hazards specifically from the deployer's perspective, we find that reputational damage constitutes the predominant organizational harm, while misinformation emerges as the most common hazard category. We empirically evaluate state-of-the-art guardrails and content moderation systems to probe whether such systems would have prevented the incidents, revealing a significant gap in the protection of AI applications.

BioX-CPath: Biologically-driven Explainable Diagnostics for Multistain IHC Computational Pathology

Mar 26, 2025

Abstract: The development of biologically interpretable and explainable models remains a key challenge in computational pathology, particularly for multistain immunohistochemistry (IHC) analysis. We present BioX-CPath, an explainable graph neural network architecture for whole slide image (WSI) classification that leverages both spatial and semantic features across multiple stains. At its core, BioX-CPath introduces a novel Stain-Aware Attention Pooling (SAAP) module that generates biologically meaningful, stain-aware patient embeddings. Our approach achieves state-of-the-art performance on both Rheumatoid Arthritis and Sjogren's Disease multistain datasets. Beyond performance metrics, BioX-CPath provides interpretable insights through stain attention scores, entropy measures, and stain interaction scores that permit measuring model alignment with known pathological mechanisms. This biological grounding, combined with strong classification performance, makes BioX-CPath particularly suitable for clinical applications where interpretability is key. Source code and documentation can be found at: https://github.com/AmayaGS/BioX-CPath.

PHGNN: A Novel Prompted Hypergraph Neural Network to Diagnose Alzheimer's Disease

Mar 18, 2025

Abstract: The accurate diagnosis of Alzheimer's disease (AD) and prognosis of mild cognitive impairment (MCI) conversion are crucial for early intervention. However, existing multimodal methods face several challenges, including the heterogeneity of input data, underexplored modality interactions, missing data due to patient dropouts, and limited data caused by the time-consuming and costly data collection process. In this paper, we propose a novel Prompted Hypergraph Neural Network (PHGNN) framework that addresses these limitations by integrating hypergraph-based learning with prompt learning. Hypergraphs capture higher-order relationships between different modalities, while our prompt learning approach for hypergraphs, adapted from NLP, enables efficient training with limited data. Our model is validated through extensive experiments on the ADNI dataset, outperforming SOTA methods in both AD diagnosis and the prediction of MCI conversion.

SuperCap: Multi-resolution Superpixel-based Image Captioning

Mar 11, 2025

Abstract: It has been a longstanding goal within image captioning to move beyond a dependence on object detection. We investigate using superpixels coupled with Vision Language Models (VLMs) to bridge the gap between detector-based captioning architectures and those that solely pretrain on large datasets. Our novel superpixel approach ensures that the model receives object-like features whilst the use of VLMs provides our model with open set object understanding. Furthermore, we extend our architecture to make use of multi-resolution inputs, allowing our model to view images in different levels of detail, and use an attention mechanism to determine which parts are most relevant to the caption. We demonstrate our model's performance with multiple VLMs and through a range of ablations detailing the impact of different architectural choices. Our full model achieves a competitive CIDEr score of 136.9 on the COCO Karpathy split.

Graph Generation via Spectral Diffusion

Feb 29, 2024

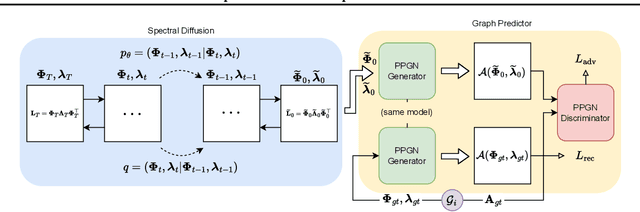

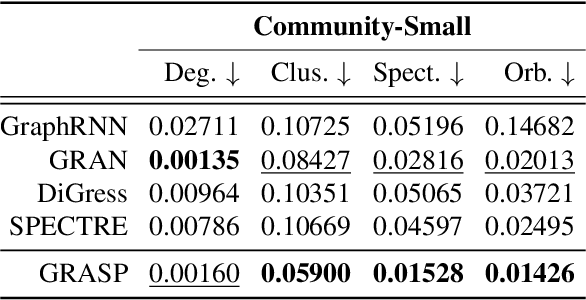

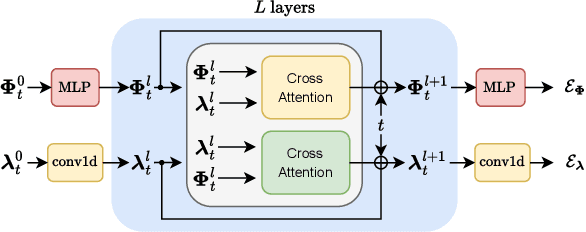

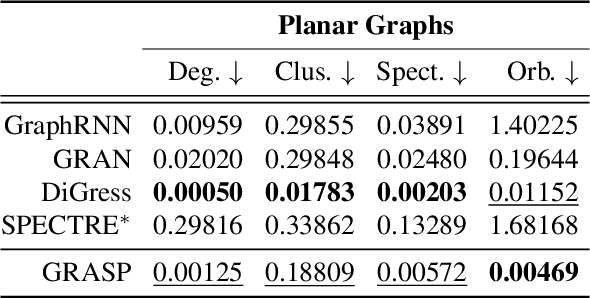

Abstract: In this paper, we present GRASP, a novel graph generative model based on 1) the spectral decomposition of the graph Laplacian matrix and 2) a diffusion process. Specifically, we propose to use a denoising model to sample eigenvectors and eigenvalues from which we can reconstruct the graph Laplacian and adjacency matrix. Our permutation invariant model can also handle node features by concatenating them to the eigenvectors of each node. Using the Laplacian spectrum allows us to naturally capture the structural characteristics of the graph and work directly in the node space while avoiding the quadratic complexity bottleneck that limits the applicability of other methods. This is achieved by truncating the spectrum, which, as we show in our experiments, results in a faster yet accurate generative process. An extensive set of experiments on both synthetic and real-world graphs demonstrates the strengths of our model against state-of-the-art alternatives.
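
The reconstruction step mentioned in the abstract can be illustrated with a small sketch. It is not the GRASP code: it assumes the combinatorial Laplacian L = D - A, takes the eigenpairs as given rather than sampling them with a denoising model, and recovers edges by thresholding the negated off-diagonal entries at 0.5 (an arbitrary choice made here). The function names are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def reconstruct_laplacian(
    eigvals: Sequence[float], eigvecs: Sequence[Sequence[float]]
) -> Matrix:
    """Approximate L as the sum of lambda_k * u_k u_k^T over retained pairs."""
    n = len(eigvecs[0])
    lap = [[0.0] * n for _ in range(n)]
    for lam, u in zip(eigvals, eigvecs):
        for i in range(n):
            for j in range(n):
                lap[i][j] += lam * u[i] * u[j]
    return lap


def laplacian_to_adjacency(lap: Matrix, threshold: float = 0.5) -> List[List[int]]:
    """For L = D - A the off-diagonal entries equal -A, so threshold -L."""
    n = len(lap)
    return [
        [1 if i != j and -lap[i][j] > threshold else 0 for j in range(n)]
        for i in range(n)
    ]


# Path graph on 3 nodes: Laplacian eigenvalues are 0, 1 and 3. The zero
# eigenvalue contributes nothing to the sum, so only two pairs are passed.
s2, s6 = math.sqrt(2.0), math.sqrt(6.0)
eigvals = [1.0, 3.0]
eigvecs = [
    [1 / s2, 0.0, -1 / s2],
    [1 / s6, -2 / s6, 1 / s6],
]
adjacency = laplacian_to_adjacency(reconstruct_laplacian(eigvals, eigvecs))
```

Passing fewer eigenpairs gives a truncated, approximate Laplacian at lower cost, which is the trade-off the abstract describes.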

GNN-LoFI: a Novel Graph Neural Network through Localized Feature-based Histogram Intersection

Jan 17, 2024

Abstract: Graph neural networks are increasingly becoming the framework of choice for graph-based machine learning. In this paper, we propose a new graph neural network architecture that substitutes classical message passing with an analysis of the local distribution of node features. To this end, we extract the distribution of features in the egonet for each local neighbourhood and compare them against a set of learned label distributions by taking the histogram intersection kernel. The similarity information is then propagated to other nodes in the network, effectively creating a message passing-like mechanism where the message is determined by the ensemble of the features. We perform an ablation study to evaluate the network's performance under different choices of its hyper-parameters. Finally, we test our model on standard graph classification and regression benchmarks, and we find that it outperforms widely used alternative approaches, including both graph kernels and graph neural networks.
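
As a rough illustration of the comparison step described above, the sketch below computes a histogram intersection between the feature histogram of a node's egonet and a set of reference histograms. It is a simplification under stated assumptions, not the GNN-LoFI implementation: node features are scalars, the egonet is the node plus its direct neighbours, the binning is fixed, and the reference histograms are hard-coded rather than learned. All names are hypothetical.

```python
from typing import Dict, List, Sequence


def histogram(values: Sequence[float], bins: int, lo: float, hi: float) -> List[float]:
    """Normalised histogram of scalar values over the range [lo, hi]."""
    counts = [0.0] * bins
    width = (hi - lo) / bins
    for v in values:
        # Clamp the index so out-of-range values land in the edge bins.
        idx = min(int((v - lo) / width), bins - 1)
        counts[max(idx, 0)] += 1.0
    total = sum(counts)
    return [c / total for c in counts] if total else counts


def histogram_intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Histogram intersection kernel: sum of bin-wise minima."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def egonet_similarities(
    features: Sequence[float],
    adjacency: Dict[int, List[int]],
    node: int,
    references: Sequence[Sequence[float]],
    bins: int = 4,
    lo: float = 0.0,
    hi: float = 1.0,
) -> List[float]:
    """Compare the egonet feature histogram with each reference histogram."""
    ego = [node] + adjacency[node]
    h = histogram([features[i] for i in ego], bins, lo, hi)
    return [histogram_intersection(h, ref) for ref in references]


# Toy path graph 0-1-2-3 with one scalar feature per node.
features = [0.1, 0.2, 0.9, 0.8]
adjacency = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
references = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
sims_node0 = egonet_similarities(features, adjacency, 0, references)
sims_node1 = egonet_similarities(features, adjacency, 1, references)
```

The resulting similarity vectors play the role of the per-node messages that the abstract says are propagated to other nodes.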

Multi-Stain Self-Attention Graph Multiple Instance Learning Pipeline for Histopathology Whole Slide Images

Sep 19, 2023

Abstract: Whole Slide Images (WSIs) present a challenging computer vision task due to their gigapixel size and the presence of numerous artefacts. Yet they are a valuable resource for patient diagnosis and stratification, often representing the gold standard for diagnostic tasks. Real-world clinical datasets tend to come as sets of heterogeneous WSIs with labels present at the patient-level, with poor to no annotations. Weakly supervised attention-based multiple instance learning approaches have been developed in recent years to address these challenges, but can fail to resolve both long and short-range dependencies. Here we propose an end-to-end multi-stain self-attention graph (MUSTANG) multiple instance learning pipeline, which is designed to solve a weakly-supervised gigapixel multi-image classification task, where the label is assigned at the patient-level, but no slide-level labels or region annotations are available. The pipeline uses a self-attention based approach by restricting the operations to a highly sparse k-Nearest Neighbour Graph of embedded WSI patches based on the Euclidean distance. We show this approach achieves a state-of-the-art F1-score/AUC of 0.89/0.92, outperforming the widely used CLAM model. Our approach is highly modular and can easily be modified to suit different clinical datasets, as it only requires a patient-level label without annotations and accepts WSI sets of different sizes, as the graphs can be of varying sizes and structures. The source code can be found at https://github.com/AmayaGS/MUSTANG.
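
The k-nearest-neighbour graph over patch embeddings can be sketched as follows. This is a brute-force illustration, not the MUSTANG code: it assumes embeddings are plain lists of floats, emits directed edges from each patch to its k closest patches, and breaks distance ties by index. The function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def knn_graph(embeddings: Sequence[Sequence[float]], k: int) -> List[Tuple[int, int]]:
    """Directed edges (i, j) where j is one of the k nearest patches to i."""
    edges: List[Tuple[int, int]] = []
    for i, x in enumerate(embeddings):
        # Euclidean distance to every other patch, sorted ascending.
        dists = sorted(
            (math.dist(x, y), j) for j, y in enumerate(embeddings) if j != i
        )
        edges.extend((i, j) for _, j in dists[:k])
    return edges


# Two well-separated pairs of 2-D "patch embeddings".
patches = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
edges = knn_graph(patches, k=1)
```

With n patches the graph has only n * k edges, so attention restricted to these edges is far sparser than attention over all patch pairs. The pairwise search shown here is quadratic in n; a practical pipeline would likely use an approximate or tree-based neighbour search instead.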

Graph Neural Networks in Vision-Language Image Understanding: A Survey

Mar 07, 2023

Abstract: 2D image understanding is a complex problem within Computer Vision, but it holds the key to providing human-level scene comprehension. It goes further than identifying the objects in an image, and instead it attempts to understand the scene. Solutions to this problem form the underpinning of a range of tasks, including image captioning, Visual Question Answering (VQA), and image retrieval. Graphs provide a natural way to represent the relational arrangement between objects in an image, and thus in recent years Graph Neural Networks (GNNs) have become a standard component of many 2D image understanding pipelines, becoming a core architectural component especially in the VQA group of tasks. In this survey, we review this rapidly evolving field and we provide a taxonomy of graph types used in 2D image understanding approaches, a comprehensive list of the GNN models used in this domain, and a roadmap of future potential developments. To the best of our knowledge, this is the first comprehensive survey that covers image captioning, visual question answering, and image retrieval techniques that focus on using GNNs as the main part of their architecture.

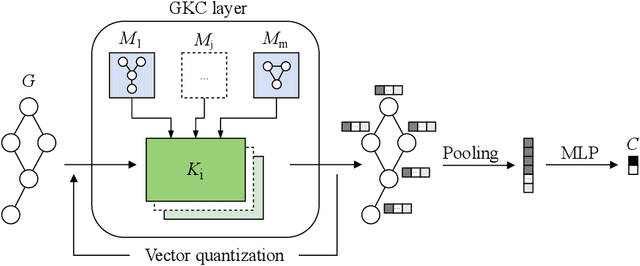

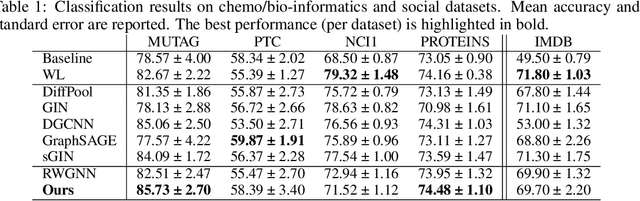

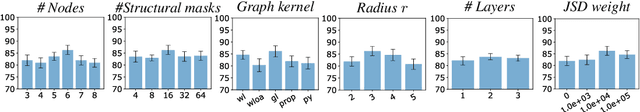

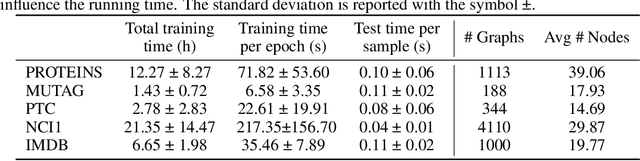

Graph Kernel Neural Networks

Dec 14, 2021

Abstract: The convolution operator at the core of many modern neural architectures can effectively be seen as performing a dot product between an input matrix and a filter. While this is readily applicable to data such as images, which can be represented as regular grids in the Euclidean space, extending the convolution operator to work on graphs proves more challenging, due to their irregular structure. In this paper, we propose to use graph kernels, i.e., kernel functions that compute an inner product on graphs, to extend the standard convolution operator to the graph domain. This allows us to define an entirely structural model that does not require computing the embedding of the input graph. Our architecture allows plugging in any type and number of graph kernels and has the added benefit of providing some interpretability in terms of the structural masks that are learned during the training process, similarly to what happens for convolutional masks in traditional convolutional neural networks. We perform an extensive ablation study to investigate the impact of the model hyper-parameters and we show that our model achieves competitive performance on standard graph classification datasets.
