Lorena Escudero Sanchez

Developing Predictive and Robust Radiomics Models for Chemotherapy Response in High-Grade Serous Ovarian Carcinoma

Jan 13, 2026

Abstract: Objectives: High-grade serous ovarian carcinoma (HGSOC) is typically diagnosed at an advanced stage with extensive peritoneal metastases, making treatment challenging. Neoadjuvant chemotherapy (NACT) is often used to reduce tumor burden before surgery, but about 40% of patients show limited response. Radiomics, combined with machine learning (ML), offers a promising non-invasive method for predicting NACT response by analyzing computed tomography (CT) imaging data. This study aimed to improve response prediction in HGSOC patients undergoing NACT by integrating different feature selection methods. Materials and methods: A framework for selecting robust radiomics features was introduced, employing an automated randomisation algorithm to mimic inter-observer variability and thereby balance feature robustness against prediction accuracy. Four response metrics were used: chemotherapy response score (CRS), RECIST, volume reduction (VolR), and diameter reduction (DiaR). Lesions in different anatomical sites were studied. Pre- and post-NACT CT scans were used for feature extraction and model training on one cohort, and an independent cohort was used for external testing. Results: The best prediction performance was achieved using all lesions combined for VolR prediction, with an AUC of 0.83. Omental lesions provided the best results for CRS prediction (AUC 0.77), while pelvic lesions performed best for DiaR (AUC 0.76). Conclusion: Integrating robustness into the feature selection process supports the development of reliable models and thus facilitates the implementation of radiomics models in clinical applications for HGSOC patients. Future work should explore further applications of radiomics in ovarian cancer, particularly in real-time clinical settings.
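As a rough illustration of what robustness-based feature selection can look like, the sketch below keeps only features whose values stay stable when the segmentation is randomly perturbed. The abstract does not state which stability criterion the authors use, so the coefficient-of-variation threshold, the function name `robust_features`, and the feature names are all assumptions made for this example.

```python
import statistics

def robust_features(feature_values, max_cv=0.1):
    """Return names of features that are stable across perturbed segmentations.

    feature_values maps a feature name to the list of values obtained from
    several randomised versions of the same lesion mask (hypothetical input).
    A feature is kept when its coefficient of variation is at most max_cv.
    """
    keep = []
    for name, values in feature_values.items():
        mean = statistics.mean(values)
        if mean == 0:
            continue  # coefficient of variation is undefined for a zero mean
        cv = statistics.stdev(values) / abs(mean)
        if cv <= max_cv:
            keep.append(name)
    return keep

# Toy values: "volume" barely changes, "texture_entropy" varies a lot.
toy = {
    "volume": [100.0, 102.0, 98.0],
    "texture_entropy": [1.0, 2.0, 0.5],
}
selected = robust_features(toy)
```

In practice the retained features would then be passed to a conventional feature selection and model training step; the threshold trades robustness against the number of features left for prediction.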

Submanifold Sparse Convolutional Networks for Automated 3D Segmentation of Kidneys and Kidney Tumours in Computed Tomography

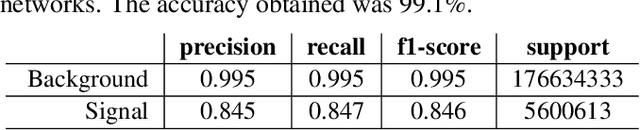

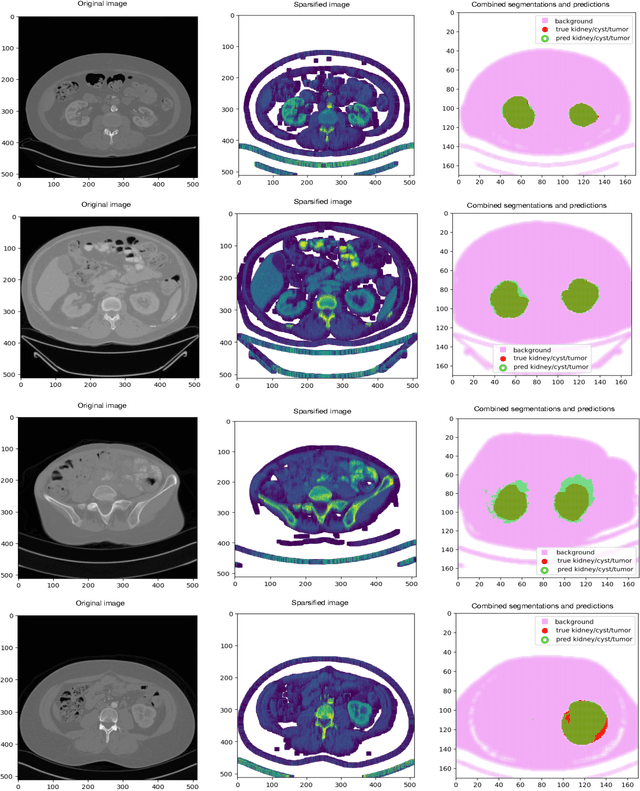

Nov 06, 2025

Abstract: The accurate delineation of tumours in radiological images such as Computed Tomography is a highly specialised and time-consuming task, and is currently a bottleneck preventing quantitative analyses from being performed routinely in the clinical setting. For this reason, developing methods for the automated segmentation of tumours in medical imaging is of the utmost importance and has driven significant efforts in recent years. However, the large number of voxels in a 3D scan makes analysing the full volume impractical, so such images usually have to be downsampled or split into patches when applying traditional convolutional neural networks. To overcome this problem, in this paper we propose a new methodology, divided into two stages, that uses voxel sparsification and submanifold sparse convolutional networks. This method allows segmentations to be performed with high-resolution inputs and a native 3D model architecture, obtaining state-of-the-art accuracies while significantly reducing the computational resources needed in terms of GPU memory and time. We studied the deployment of this methodology in the context of Computed Tomography images of renal cancer patients from the KiTS23 challenge, and our method achieved results competitive with the challenge winners, with Dice similarity coefficients of 95.8% for kidneys + masses, 85.7% for tumours + cysts, and 80.3% for tumours alone. Crucially, our method also offers significant computational improvements, achieving up to a 60% reduction in inference time and up to a 75% reduction in VRAM usage compared to an equivalent dense architecture, across both the CPU and the various GPU cards tested.
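For readers unfamiliar with the metric quoted above, the Dice similarity coefficient between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch on sets of voxel coordinates; the function name and the toy masks are illustrative and are not taken from the paper.

```python
def dice_coefficient(pred, truth):
    """Dice similarity coefficient between two sets of voxel coordinates."""
    pred, truth = set(pred), set(truth)
    if not pred and not truth:
        return 1.0  # convention used here: two empty masks agree perfectly
    return 2 * len(pred & truth) / (len(pred) + len(truth))

# Toy masks with three voxels each, two of which overlap.
pred_mask = {(0, 0, 0), (0, 0, 1), (0, 1, 1)}
true_mask = {(0, 0, 1), (0, 1, 1), (1, 1, 1)}
score = dice_coefficient(pred_mask, true_mask)
```

Here the score is 2 × 2 / (3 + 3), roughly 0.67; the percentages in the abstract are the same quantity multiplied by 100.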

Automated Segmentation of Computed Tomography Images with Submanifold Sparse Convolutional Networks

Dec 06, 2022

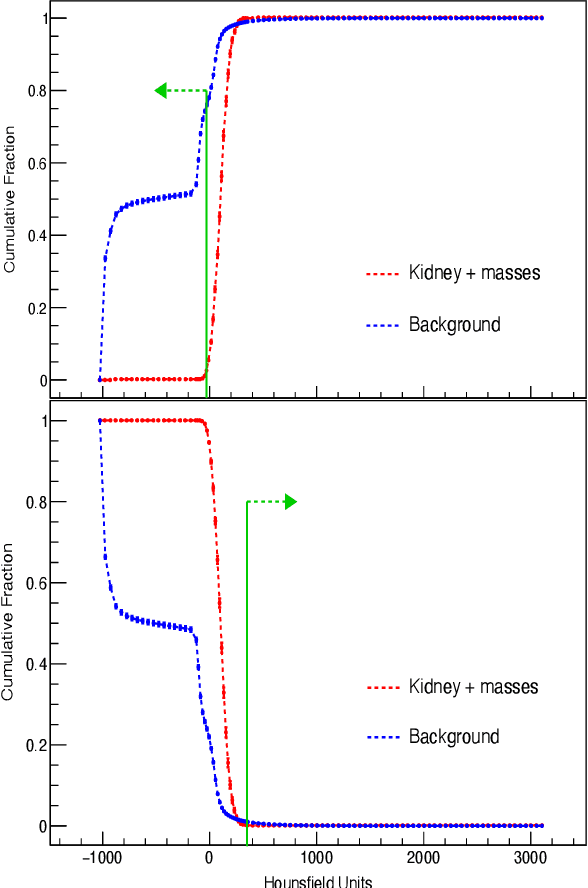

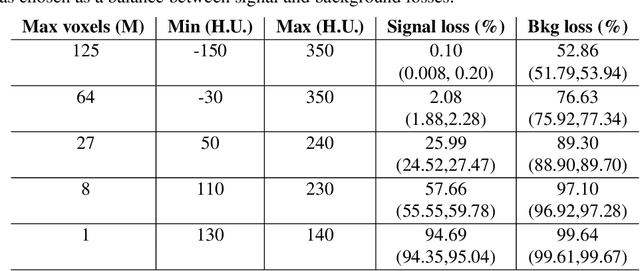

Abstract: Quantitative cancer image analysis relies on the accurate delineation of tumours, a highly specialised and time-consuming task. For this reason, methods for automated segmentation of tumours in medical imaging have been extensively developed in recent years, with Computed Tomography being one of the most popular imaging modalities explored. However, the large number of 3D voxels in a typical scan makes it prohibitive to analyse the entire volume at once on conventional hardware. To overcome this issue, downsampling and/or resampling are generally applied when using traditional convolutional neural networks in medical imaging. In this paper, we propose a new methodology that introduces sparsification of the input images and submanifold sparse convolutional networks as an alternative to downsampling. As a proof of concept, we applied this new methodology to Computed Tomography images of renal cancer patients, obtaining segmentation performance for kidneys and tumours competitive with previous methods (~84.6% Dice similarity coefficient), while achieving a significant improvement in computation time (2-3 min per training epoch).
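The idea of sparsifying an input volume can be sketched as follows: instead of storing every voxel in a dense grid, keep only the coordinates and values of voxels that pass some relevance test, which is the kind of input sparse convolutional networks operate on. The abstract does not say which criterion the authors apply, so the intensity window used here, along with the name `sparsify`, is purely an assumption for illustration.

```python
def sparsify(volume, lo, hi):
    """Convert a dense 3D volume (nested lists indexed [z][y][x]) into a
    sparse representation: a list of coordinates and a list of values.

    Only voxels with intensity in [lo, hi] are kept. The window is a
    hypothetical criterion, not the one used in the paper.
    """
    coords, values = [], []
    for z, plane in enumerate(volume):
        for y, row in enumerate(plane):
            for x, value in enumerate(row):
                if lo <= value <= hi:
                    coords.append((z, y, x))
                    values.append(value)
    return coords, values

# Toy 2x2x2 volume with CT-like intensities; most voxels are air (-1000).
toy_volume = [
    [[-1000, 30], [40, -1000]],
    [[-1000, -1000], [50, 200]],
]
coords, values = sparsify(toy_volume, lo=-100, hi=100)
kept_fraction = len(coords) / 8
```

Only three of the eight voxels survive in this toy case, which hints at why a sparse representation can avoid downsampling: memory and computation scale with the number of retained voxels rather than with the full grid.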

Calibrating Ensembles for Scalable Uncertainty Quantification in Deep Learning-based Medical Segmentation

Sep 20, 2022

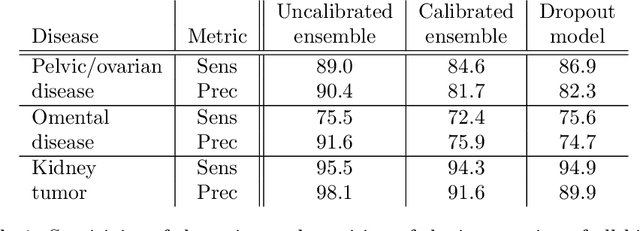

Abstract: Uncertainty quantification in automated image analysis is highly desired in many applications. Typically, machine learning models for classification or segmentation are developed to provide only binary answers; however, quantifying the uncertainty of the models can play a critical role, for example in active learning or human-machine interaction. Uncertainty quantification is especially difficult when using deep learning-based models, which are the state of the art in many imaging applications. Current uncertainty quantification approaches do not scale well to high-dimensional real-world problems. Scalable solutions often rely on classical techniques, such as dropout during inference or training ensembles of identical models with different random seeds, to obtain a posterior distribution. In this paper, we show that these approaches fail to approximate the classification probability. Instead, we propose a scalable and intuitive framework to calibrate ensembles of deep learning models to produce uncertainty quantification measurements that approximate the classification probability. On unseen test data, we demonstrate improved calibration, sensitivity (in two out of three cases) and precision compared with the standard approaches. We further motivate the use of our method in active learning, in creating pseudo-labels to learn from unlabeled images, and in human-machine collaboration.
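To make "calibration" concrete: a model is well calibrated when, among cases it assigns probability p, roughly a fraction p are actually positive. The sketch below averages the outputs of an ensemble and measures the gap with a binned expected calibration error. This is a generic, commonly used diagnostic and not the calibration framework proposed in the paper, whose details the abstract does not give; the function names and numbers are illustrative.

```python
def ensemble_mean(member_probs):
    """Average per-sample positive-class probabilities over ensemble members."""
    return [sum(ps) / len(ps) for ps in zip(*member_probs)]

def expected_calibration_error(probs, labels, n_bins=5):
    """Binned gap between mean predicted probability and observed frequency."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    ece = 0.0
    for members in bins:
        if members:
            confidence = sum(p for p, _ in members) / len(members)
            frequency = sum(y for _, y in members) / len(members)
            ece += len(members) / len(probs) * abs(confidence - frequency)
    return ece

# Two ensemble members scoring four samples (toy numbers).
member_probs = [
    [0.9, 0.3, 0.7, 0.1],
    [0.8, 0.2, 0.6, 0.2],
]
labels = [1, 0, 1, 0]
mean_probs = ensemble_mean(member_probs)
ece = expected_calibration_error(mean_probs, labels)
```

A lower value indicates that the averaged ensemble output can be read more directly as a classification probability.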

Advancing COVID-19 Diagnosis with Privacy-Preserving Collaboration in Artificial Intelligence

Nov 18, 2021

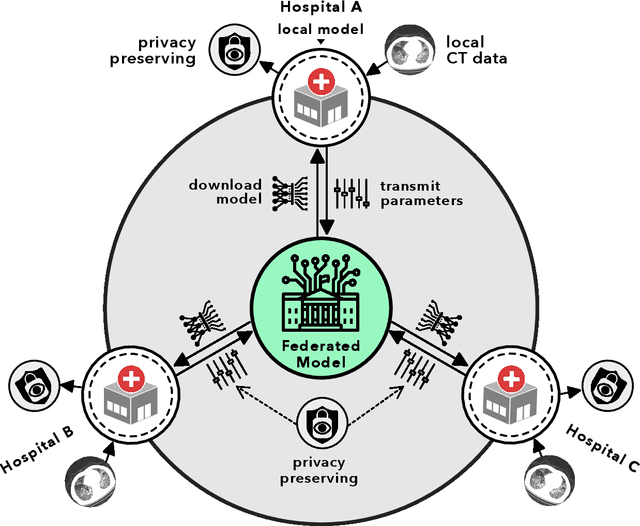

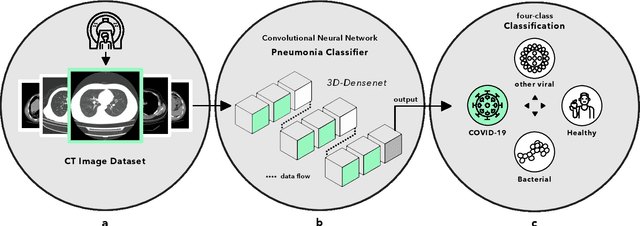

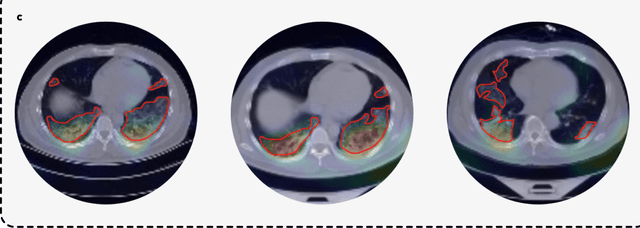

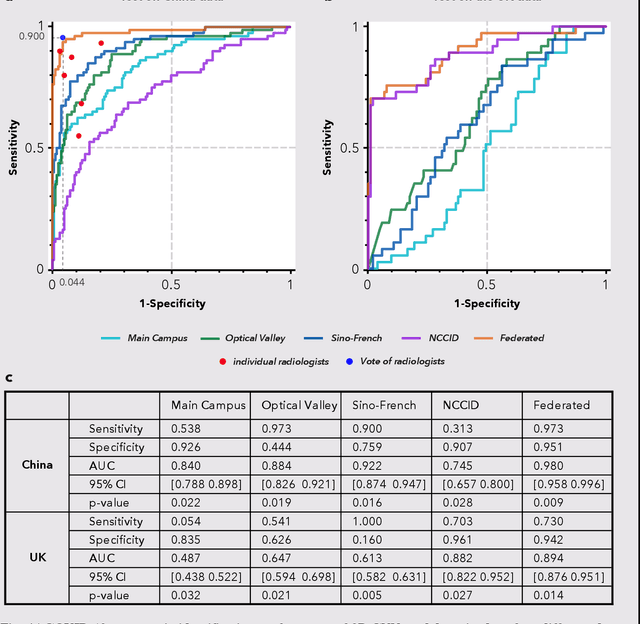

Abstract: Artificial intelligence (AI) offers a promising alternative for streamlining COVID-19 diagnoses. However, concerns surrounding security and trustworthiness impede the collection of large-scale representative medical data, posing a considerable challenge for training a well-generalised model for clinical practice. To address this, we launched the Unified CT-COVID AI Diagnostic Initiative (UCADI), in which the AI model can be trained in a distributed fashion and executed independently at each host institution under a federated learning (FL) framework without data sharing. Here we show that our FL model outperformed all the local models by a large margin (test sensitivity/specificity in China: 0.973/0.951, in the UK: 0.730/0.942), achieving performance comparable to that of a panel of professional radiologists. We further evaluated the model on hold-out data (collected from two other hospitals that did not take part in the FL) and heterogeneous data (acquired with contrast materials), provided visual explanations for decisions made by the model, and analysed the trade-offs between model performance and communication costs in the federated training process. Our study is based on 9,573 chest computed tomography scans (CTs) from 3,336 patients collected from 23 hospitals located in China and the UK. Collectively, our work advances the prospects of utilising federated learning for privacy-preserving AI in digital health.
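A common way to train a shared model without moving patient data is for each institution to train locally and send only model parameters to a coordinator, which combines them. The sketch below shows the widely used sample-size-weighted average (often called federated averaging). The abstract does not specify the aggregation rule used in UCADI, so this is an assumed, generic illustration with hypothetical names and toy numbers.

```python
def federated_average(client_weights, client_sizes):
    """Combine per-client parameter vectors into one global vector.

    client_weights: list of equal-length lists of floats, one per institution.
    client_sizes: number of local training samples at each institution,
    used to weight that institution's contribution.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical hospitals with 1 and 3 local samples respectively.
global_weights = federated_average(
    client_weights=[[1.0, 2.0], [3.0, 4.0]],
    client_sizes=[1, 3],
)
```

Each round exchanges only these parameter vectors, which is where the trade-off between model performance and communication cost mentioned in the abstract arises.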
