Jiehua Yang

Advancing COVID-19 Diagnosis with Privacy-Preserving Collaboration in Artificial Intelligence

Nov 18, 2021

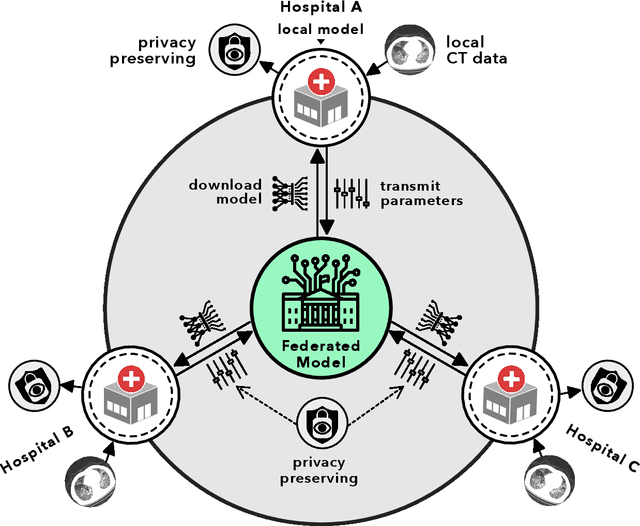

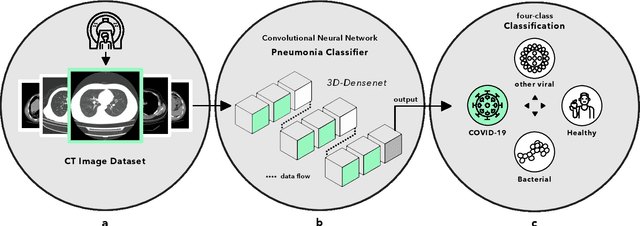

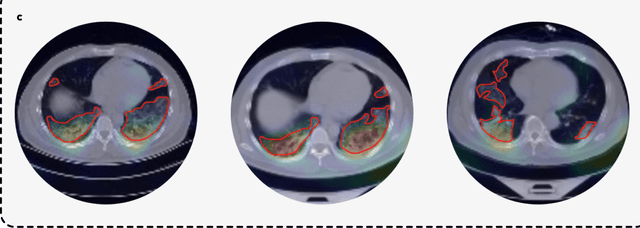

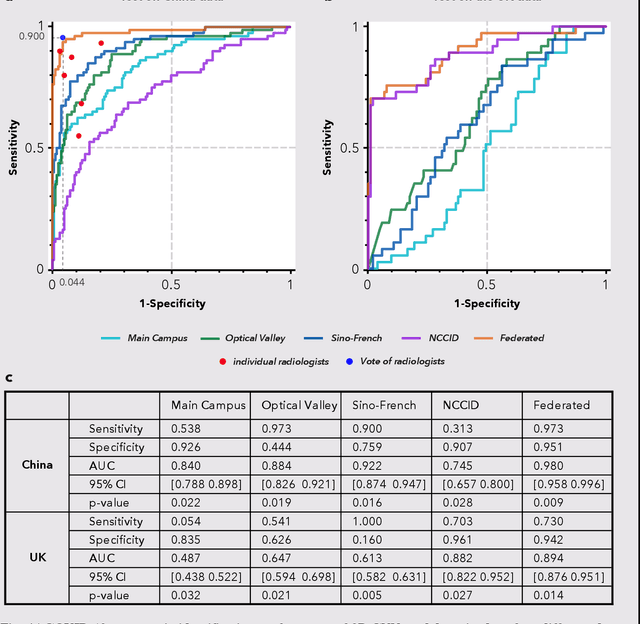

Abstract: Artificial intelligence (AI) provides a promising substitute for streamlining COVID-19 diagnoses. However, concerns surrounding security and trustworthiness impede the collection of large-scale representative medical data, posing a considerable challenge for training a well-generalised model in clinical practice. To address this, we launch the Unified CT-COVID AI Diagnostic Initiative (UCADI), where the AI model can be trained in a distributed manner and executed independently at each host institution under a federated learning (FL) framework without data sharing. Here we show that our FL model outperformed all the local models by a large margin (test sensitivity/specificity in China: 0.973/0.951, in the UK: 0.730/0.942), achieving performance comparable to that of a panel of professional radiologists. We further evaluated the model on hold-out data (collected from another two hospitals that did not take part in the FL) and heterogeneous data (acquired with contrast materials), provided visual explanations for decisions made by the model, and analysed the trade-offs between model performance and communication costs in the federated training process. Our study is based on 9,573 chest computed tomography (CT) scans from 3,336 patients collected from 23 hospitals located in China and the UK. Collectively, our work advances the prospects of utilising federated learning for privacy-preserving AI in digital health.

Affinity Space Adaptation for Semantic Segmentation Across Domains

Sep 26, 2020

Abstract: Semantic segmentation with dense pixel-wise annotation has achieved excellent performance thanks to deep learning. However, the generalization of semantic segmentation in the wild remains challenging. In this paper, we address the problem of unsupervised domain adaptation (UDA) in semantic segmentation. Motivated by the fact that the source and target domains have invariant semantic structures, we propose to exploit this invariance across domains by leveraging co-occurring patterns between pairwise pixels in the output of structured semantic segmentation. This differs from most existing approaches, which attempt to adapt domains based on individual pixel-wise information at the image, feature, or output level. Specifically, we perform domain adaptation on the affinity relationship between adjacent pixels, termed the affinity space, of the source and target domains. To this end, we develop two affinity space adaptation strategies: affinity space cleaning and adversarial affinity space alignment. Extensive experiments demonstrate that the proposed method achieves superior performance against some state-of-the-art methods on several challenging benchmarks for semantic segmentation across domains. The code is available at https://github.com/idealwei/ASANet.
