Liu Yan

Faithful Bi-Directional Model Steering via Distribution Matching and Distributed Interchange Interventions

Feb 05, 2026

Abstract:Intervention-based model steering offers a lightweight and interpretable alternative to prompting and fine-tuning. However, by adapting strong optimization objectives from fine-tuning, current methods are susceptible to overfitting and often underperform, sometimes generating unnatural outputs. We hypothesize that this is because effective steering requires the faithful identification of internal model mechanisms, not the enforcement of external preferences. To this end, we build on the principles of distributed alignment search (DAS), the standard for causal variable localization, to propose a new steering method: Concept DAS (CDAS). While we adopt the core mechanism of DAS, distributed interchange intervention (DII), we introduce a novel distribution matching objective tailored for the steering task by aligning intervened output distributions with counterfactual distributions. CDAS differs from prior work in two main ways: first, it learns interventions via weakly supervised distribution matching rather than probability maximization; second, it uses DIIs that naturally enable bi-directional steering and allow steering factors to be derived from data, reducing the effort required for hyperparameter tuning and resulting in more faithful and stable control. On AxBench, a large-scale model steering benchmark, we show that CDAS does not always outperform preference-optimization methods but may benefit more from increased model scale. In two safety-related case studies, overriding refusal behaviors of safety-aligned models and neutralizing a chain-of-thought backdoor, CDAS achieves systematic steering while maintaining general model utility. These results indicate that CDAS is complementary to preference-optimization approaches and conditionally constitutes a robust approach to intervention-based model steering. Our code is available at https://github.com/colored-dye/concept_das.
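
As a rough illustration of the two ingredients named in the abstract, the sketch below applies a distributed interchange intervention on a toy hidden state and scores it with a distribution matching loss. It is not the authors' implementation: the low-rank orthonormal projection `R`, the toy unembedding `W_out`, the choice of KL divergence and its direction, and the use of the source input's output distribution as the "counterfactual" are all assumptions made for this example.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D logit vector."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def dii(h_base, h_source, R):
    """Distributed interchange intervention (standard DAS form, assumed here):
    replace the part of h_base lying in the subspace spanned by the rows of R
    with the corresponding part of h_source."""
    return h_base + R.T @ (R @ h_source - R @ h_base)

def kl(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions (divergence choice is an assumption)."""
    return float(np.sum(p * (np.log(p + eps) - np.log(q + eps))))

rng = np.random.default_rng(0)
d, k, vocab = 8, 2, 5          # hidden size, subspace rank, toy vocabulary size

# Hypothetical "learned" subspace: orthonormal rows obtained from a QR factorization.
Q, _ = np.linalg.qr(rng.normal(size=(d, k)))
R = Q.T                        # shape (k, d)

W_out = rng.normal(size=(vocab, d))   # toy unembedding matrix (assumption)
h_base = rng.normal(size=d)           # hidden state on the base input
h_source = rng.normal(size=d)         # hidden state on the counterfactual (source) input

h_int = dii(h_base, h_source, R)

# Distribution matching: compare the intervened output distribution with the
# counterfactual one instead of maximizing the probability of a target string.
p_counterfactual = softmax(W_out @ h_source)
p_intervened = softmax(W_out @ h_int)
loss = kl(p_counterfactual, p_intervened)
print(f"distribution matching loss: {loss:.4f}")
```

In this toy setting, swapping which state plays the role of base and which plays the role of source moves the representation in the opposite direction within the same subspace, which is one way to read the bi-directional steering described above.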

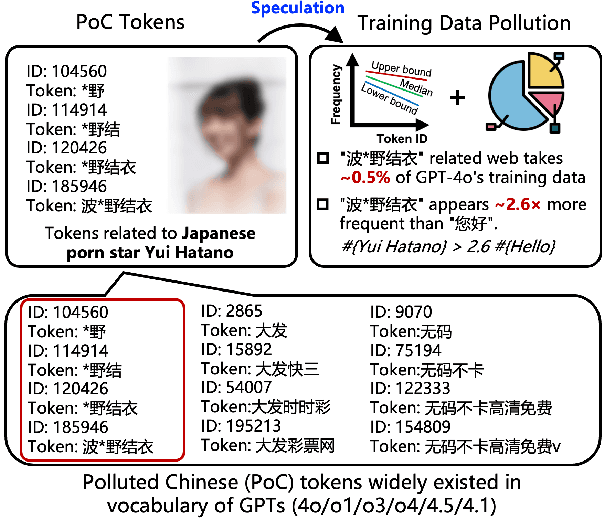

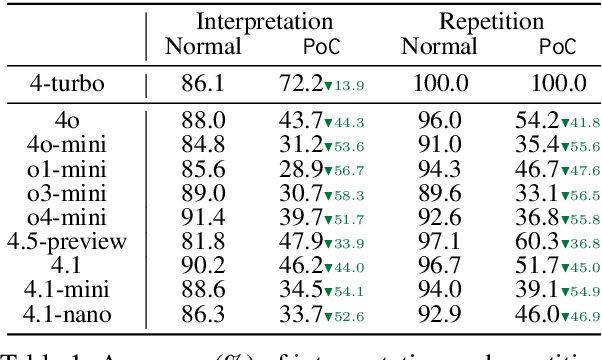

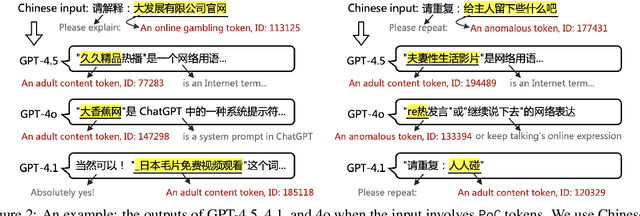

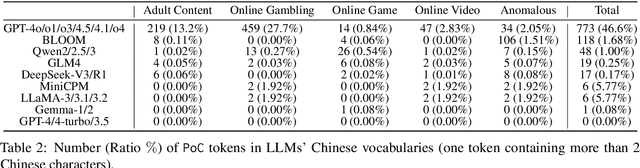

Speculating LLMs' Chinese Training Data Pollution from Their Tokens

Aug 25, 2025

Abstract:Tokens are the basic elements of the datasets used for LLM training. It is well known that many tokens representing Chinese phrases in the vocabulary of GPT (4o/4o-mini/o1/o3/4.5/4.1/o4-mini) indicate content such as pornography or online gambling. Based on this observation, our goal is to locate Polluted Chinese (PoC) tokens in LLMs and study the relationship between the existence of PoC tokens and training data. (1) We give a formal definition and taxonomy of PoC tokens based on GPT's vocabulary. (2) We build a PoC token detector by fine-tuning an LLM to label PoC tokens in vocabularies, considering both each token's semantics and related content from search engines. (3) We study how to speculate on training data pollution from PoC tokens' appearances (token IDs). Experiments on GPT and 23 other LLMs indicate that PoC tokens widely exist, while GPT's vocabulary behaves the worst: more than 23% of long Chinese tokens (i.e., tokens with more than two Chinese characters) are related to either pornography or online gambling. We validate the accuracy of our speculation method on well-known pre-training datasets such as C4 and Pile. Then, considering GPT-4o, we speculate that the ratio of "Yui Hatano" related webpages in GPT-4o's training data is around 0.5%.
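
The "long Chinese token" statistic quoted above can be sketched as follows. This is only an illustration: the function names, the restriction to the basic CJK Unified Ideographs block (U+4E00 to U+9FFF), and the tiny placeholder vocabulary and flagged set are assumptions for this example, not the paper's detector or data.

```python
def count_chinese_chars(token: str) -> int:
    """Count characters in the basic CJK Unified Ideographs block (assumed definition)."""
    return sum(1 for ch in token if "\u4e00" <= ch <= "\u9fff")

def is_long_chinese_token(token: str) -> bool:
    """A long Chinese token has more than two Chinese characters."""
    return count_chinese_chars(token) > 2

def flagged_ratio(vocab, flagged) -> float:
    """Fraction of long Chinese tokens in `vocab` that appear in the `flagged` set."""
    long_tokens = [t for t in vocab if is_long_chinese_token(t)]
    if not long_tokens:
        return 0.0
    return sum(t in flagged for t in long_tokens) / len(long_tokens)

# Placeholder vocabulary; the flagged entry is an arbitrary harmless stand-in
# for whatever a real detector would label as polluted.
vocab = ["你好", "人工智能", "机器学习", "hello", "天气预报"]
flagged = {"天气预报"}

ratio = flagged_ratio(vocab, flagged)
print(f"flagged share of long Chinese tokens: {ratio:.2%}")
```

Only the three four-character tokens count as long here, so the ratio is computed over those three rather than over the whole vocabulary.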

Enhancing Social Media Rumor Detection: A Semantic and Graph Neural Network Approach for the 2024 Global Election

Mar 03, 2025

Abstract:The development of social media platforms has revolutionized the speed and manner in which information is disseminated, leading to both beneficial and detrimental effects on society. While these platforms facilitate rapid communication, they also accelerate the spread of rumors and extremist speech, significantly impacting public perception and behavior. This issue is particularly pronounced during election periods, when the influence of social media on election outcomes has become a matter of global concern. Against this backdrop, and with an unprecedented number of elections in 2024, the election ecosystem has encountered exceptional challenges. This study addresses the urgent need for effective rumor detection on social media by proposing a novel method that combines semantic analysis with graph neural networks. We collected a dataset from PolitiFact and Twitter, focusing on politically relevant rumors. Our approach uses a fine-tuned BERT model to vectorize text content and constructs a directed graph in which tweets and comments are nodes and interactions are edges. The core of our method is a graph neural network, SAGEWithEdgeAttention, which extends the GraphSAGE model by incorporating first-order differences as edge attributes and applying an attention mechanism to enhance feature aggregation. This approach allows for fine-grained analysis of the complex social network structure, improving rumor detection accuracy. The study concludes that our method significantly outperforms traditional content analysis and time-based models, offering a theoretically sound and practically efficient solution.

A Probabilistic-Logic based Commonsense Representation Framework for Modelling Inferences with Multiple Antecedents and Varying Likelihoods

Dec 15, 2022

Abstract:Commonsense knowledge-graphs (CKGs) are important resources towards building machines that can 'reason' on text or environmental inputs and make inferences beyond perception. While current CKGs encode world knowledge for a large number of concepts and have been effectively utilized for incorporating commonsense in neural models, they primarily encode declarative or single-condition inferential knowledge and assume all conceptual beliefs to have the same likelihood. Further, these CKGs utilize a limited set of relations shared across concepts and lack a coherent knowledge organization structure resulting in redundancies as well as sparsity across the larger knowledge graph. Consequently, today's CKGs, while useful for a first level of reasoning, do not adequately capture deeper human-level commonsense inferences which can be more nuanced and influenced by multiple contextual or situational factors. Accordingly, in this work, we study how commonsense knowledge can be better represented by -- (i) utilizing a probabilistic logic representation scheme to model composite inferential knowledge and represent conceptual beliefs with varying likelihoods and (ii) incorporating a hierarchical conceptual ontology to identify salient concept-relevant relations and organize beliefs at different conceptual levels. Our resulting knowledge representation framework can encode a wider variety of world knowledge and represent beliefs flexibly using grounded concepts as well as free-text phrases. As a result, the framework can be utilized as both a traditional free-text knowledge graph and a grounded logic-based inference system more suitable for neuro-symbolic applications. We describe how we extend the PrimeNet knowledge base with our framework through crowd-sourcing and expert-annotation, and demonstrate its application for more interpretable passage-based semantic parsing and question answering.
