Lilun Deng

MambaVF: State Space Model for Efficient Video Fusion

Feb 05, 2026

Abstract:Video fusion is a fundamental technique in various video processing tasks. However, existing video fusion methods rely heavily on optical flow estimation and feature warping, resulting in severe computational overhead and limited scalability. This paper presents MambaVF, an efficient video fusion framework based on state space models (SSMs) that performs temporal modeling without explicit motion estimation. First, by reformulating video fusion as a sequential state update process, MambaVF captures long-range temporal dependencies with linear complexity while significantly reducing computation and memory costs. Second, MambaVF proposes a lightweight SSM-based fusion module that replaces conventional flow-guided alignment with a spatio-temporal bidirectional scanning mechanism. This module enables efficient information aggregation across frames. Extensive experiments across multiple benchmarks demonstrate that MambaVF achieves state-of-the-art performance in multi-exposure, multi-focus, infrared-visible, and medical video fusion tasks. MambaVF is also highly efficient: compared to existing methods, it reduces parameters by up to 92.25% and computational FLOPs by up to 88.79%, and achieves a 2.1x speedup. Project page: https://mambavf.github.io
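The "sequential state update" idea can be illustrated with a toy linear state space recurrence. The sketch below is not the MambaVF module: it assumes a scalar state, fixed (non-learned) coefficients `a`, `b`, `c`, and a sequence of scalar values standing in for one pixel tracked across frames. The function names `ssm_scan` and `bidirectional_scan` are hypothetical.

```python
def ssm_scan(frames, a=0.9, b=0.1, c=1.0):
    """Run h_t = a * h_{t-1} + b * x_t, y_t = c * h_t over a sequence.

    One pass over the sequence, so the cost grows linearly with its length.
    """
    h = 0.0
    outputs = []
    for x in frames:
        h = a * h + b * x        # state update carries information forward
        outputs.append(c * h)    # readout of the current state
    return outputs


def bidirectional_scan(frames, a=0.9, b=0.1, c=1.0):
    """Average a forward scan and a backward scan (assumed combination rule)."""
    forward = ssm_scan(frames, a, b, c)
    backward = ssm_scan(frames[::-1], a, b, c)[::-1]
    return [0.5 * (f + g) for f, g in zip(forward, backward)]


if __name__ == "__main__":
    pixel_over_time = [1.0, 0.0, 0.0, 1.0]
    print(bidirectional_scan(pixel_over_time))
```

Each output position sees past frames through the forward scan and future frames through the backward scan, without any explicit motion estimate. Averaging the two directions is only one simple way to merge them.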

A Unified Solution to Video Fusion: From Multi-Frame Learning to Benchmarking

May 26, 2025

Abstract:The real world is dynamic, yet most image fusion methods process static frames independently, ignoring temporal correlations in videos and leading to flickering and temporal inconsistency. To address this, we propose Unified Video Fusion (UniVF), a novel framework that leverages multi-frame learning and optical flow-based feature warping for informative, temporally coherent video fusion. To support its development, we also introduce Video Fusion Benchmark (VF-Bench), the first comprehensive benchmark covering four video fusion tasks: multi-exposure, multi-focus, infrared-visible, and medical fusion. VF-Bench provides high-quality, well-aligned video pairs obtained through synthetic data generation and rigorous curation from existing datasets, with a unified evaluation protocol that jointly assesses the spatial quality and temporal consistency of video fusion. Extensive experiments show that UniVF achieves state-of-the-art results across all tasks on VF-Bench. Project page: https://vfbench.github.io.

Retinex-MEF: Retinex-based Glare Effects Aware Unsupervised Multi-Exposure Image Fusion

Mar 10, 2025

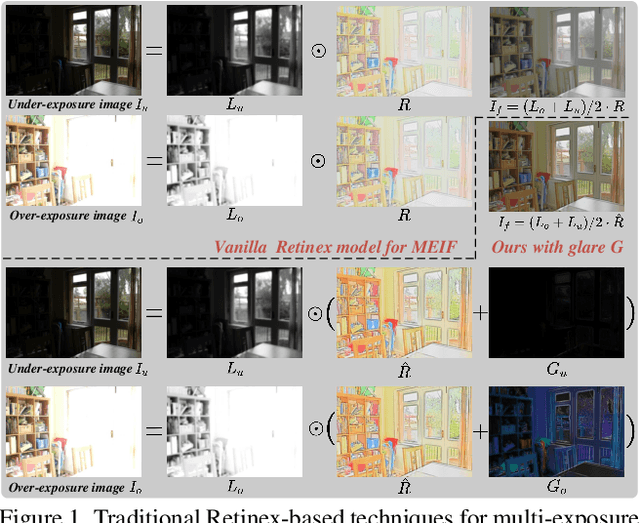

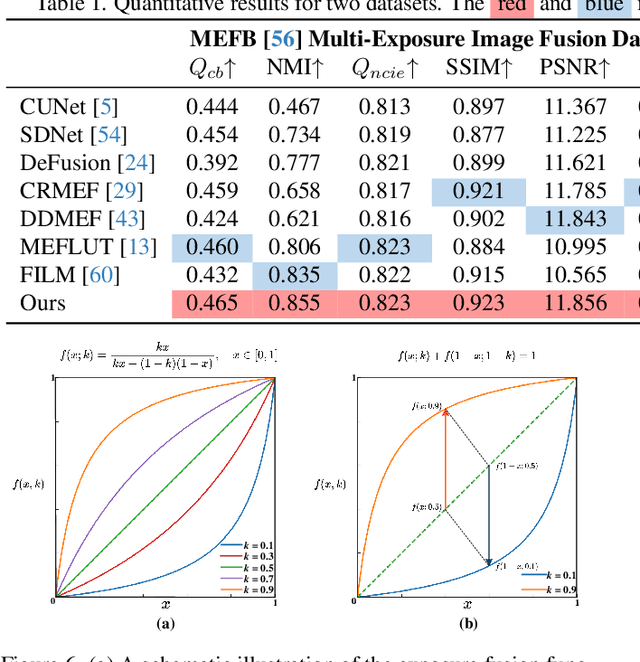

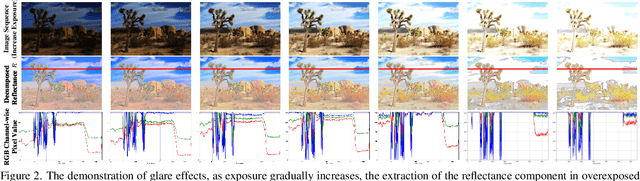

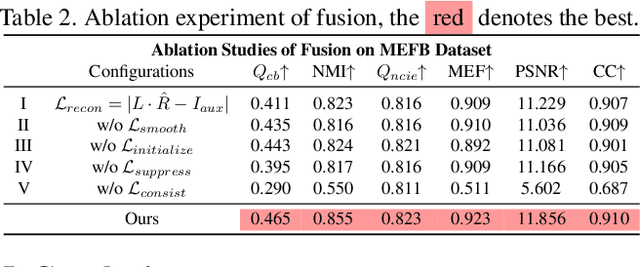

Abstract:Multi-exposure image fusion consolidates multiple low dynamic range images of the same scene into a single high dynamic range image. Retinex theory, which separates image illumination from scene reflectance, is naturally adopted to ensure consistent scene representation and effective information fusion across varied exposure levels. However, the conventional pixel-wise multiplication of illumination and reflectance inadequately models the glare effect induced by overexposure. To better adapt this theory for multi-exposure image fusion, we introduce an unsupervised and controllable method termed Retinex-MEF. Specifically, our method decomposes multi-exposure images into separate illumination components and a shared reflectance component, and effectively models the glare induced by overexposure. Employing a bidirectional loss constraint to learn the common reflectance component, our approach effectively mitigates the glare effect. Furthermore, we establish a controllable exposure fusion criterion, enabling global exposure adjustments while preserving contrast, thus overcoming the constraints of fixed-level fusion. A series of experiments across multiple datasets, including underexposure-overexposure fusion, exposure control fusion, and homogeneous extreme exposure fusion, demonstrate the effective decomposition and flexible fusion capability of our model.
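For orientation, the classical Retinex model that the abstract starts from can be sketched in a few lines. This is a minimal illustration, not the Retinex-MEF method: it assumes images are flat lists of values in [0, 1], that the shared reflectance and per-image illuminations are already known, and that a global `exposure` factor scales the averaged illumination. The names `compose` and `fuse_with_exposure` are hypothetical, and clipping stands in only as the simplest overexposure artifact, not as the paper's glare model.

```python
def compose(reflectance, illumination):
    """Classical Retinex: pixel-wise product, clipped to the displayable range."""
    return [min(1.0, r * l) for r, l in zip(reflectance, illumination)]


def fuse_with_exposure(reflectance, illuminations, exposure=1.0):
    """Recompose one image from a shared reflectance and several illuminations.

    Assumed rule: average the illuminations per pixel, then scale them by a
    single global exposure factor before multiplying with the reflectance.
    """
    n = len(illuminations)
    mean_illum = [sum(column) / n for column in zip(*illuminations)]
    target_illum = [exposure * l for l in mean_illum]
    return compose(reflectance, target_illum)


if __name__ == "__main__":
    reflectance = [0.5, 0.8]
    under = [0.2, 0.2]   # illumination of the underexposed shot
    over = [1.0, 1.0]    # illumination of the overexposed shot
    print(fuse_with_exposure(reflectance, [under, over], exposure=1.0))
    print(fuse_with_exposure(reflectance, [under, over], exposure=1.5))
```

Because the reflectance is shared, changing `exposure` brightens or darkens the result globally while the ratio between pixels stays the same until clipping sets in.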

Deep Unfolding Multi-modal Image Fusion Network via Attribution Analysis

Feb 03, 2025

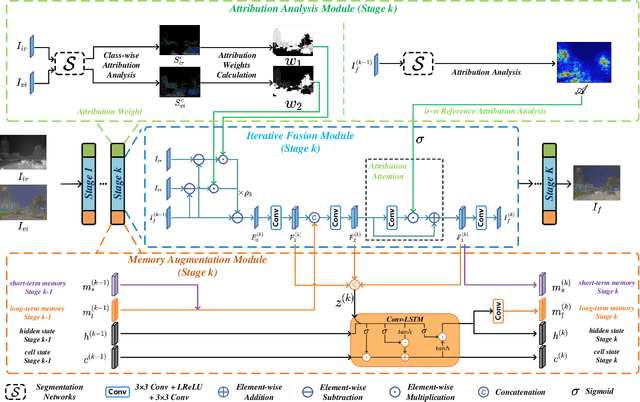

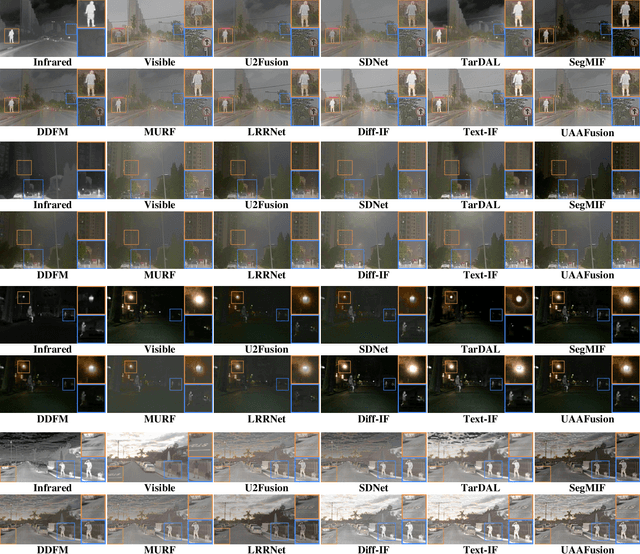

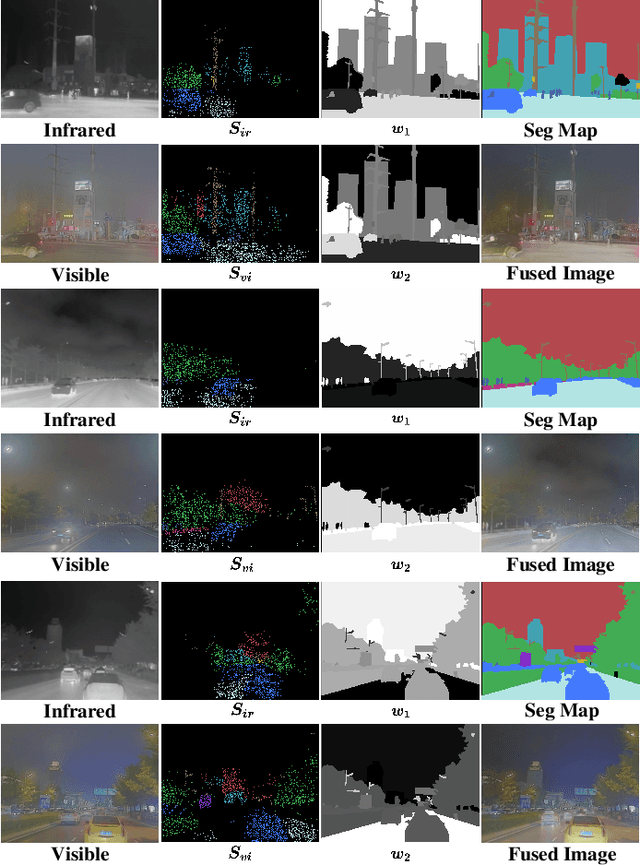

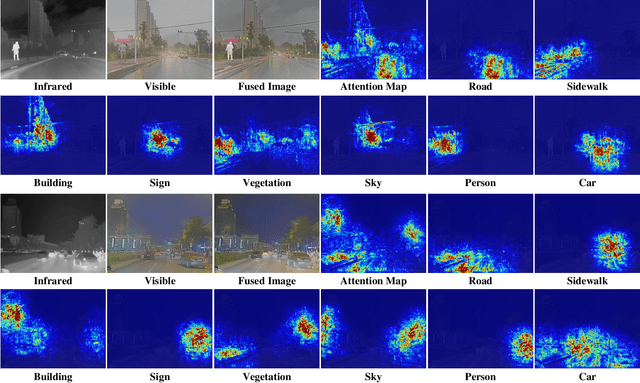

Abstract:Multi-modal image fusion synthesizes information from multiple sources into a single image, facilitating downstream tasks such as semantic segmentation. Current approaches primarily focus on acquiring informative fusion images at the visual display stratum through intricate mappings. Although some approaches attempt to jointly optimize image fusion and downstream tasks, these efforts often lack direct guidance or interaction, serving only to assist with a predefined fusion loss. To address this, we propose an "Unfolding Attribution Analysis Fusion network" (UAAFusion), using attribution analysis to tailor fused images more effectively for semantic segmentation, enhancing the interaction between the fusion and segmentation. Specifically, we utilize attribution analysis techniques to explore the contributions of semantic regions in the source images to task discrimination. At the same time, our fusion algorithm incorporates more beneficial features from the source images, thereby allowing the segmentation to guide the fusion process. Our method constructs a model-driven unfolding network that uses optimization objectives derived from attribution analysis, with an attribution fusion loss calculated from the current state of the segmentation network. We also develop a new pathway function for attribution analysis, specifically tailored to the fusion tasks in our unfolding network. An attribution attention mechanism is integrated at each network stage, allowing the fusion network to prioritize areas and pixels crucial for high-level recognition tasks. Additionally, to mitigate the information loss in traditional unfolding networks, a memory augmentation module is incorporated into our network to improve the information flow across various network layers. Extensive experiments demonstrate our method's superiority in image fusion and applicability to semantic segmentation.

Task-driven Image Fusion with Learnable Fusion Loss

Dec 04, 2024

Abstract:Multi-modal image fusion aggregates information from multiple sensor sources, achieving superior visual quality and perceptual characteristics compared to any single source, often enhancing downstream tasks. However, current fusion methods for downstream tasks still use predefined fusion objectives that potentially mismatch the downstream tasks, limiting adaptive guidance and reducing model flexibility. To address this, we propose Task-driven Image Fusion (TDFusion), a fusion framework incorporating a learnable fusion loss guided by task loss. Specifically, our fusion loss includes learnable parameters modeled by a neural network called the loss generation module. This module is supervised by the loss of downstream tasks in a meta-learning manner. The learning objective is to minimize the task loss of the fused images, once the fusion module has been optimized by the fusion loss. Iterative updates between the fusion module and the loss module ensure that the fusion network evolves toward minimizing task loss, guiding the fusion process toward the task objectives. TDFusion's training relies solely on the loss of downstream tasks, making it adaptable to any specific task. It can be applied to any architecture of fusion and task networks. Experiments demonstrate TDFusion's performance in both fusion and task-related applications, including four public fusion datasets, semantic segmentation, and object detection. The code will be released.
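The alternating scheme described above (update the fusion module with the learnable loss, then update the loss so that the updated fusion module does better on the task) can be sketched with scalars. This is a toy under stated assumptions, not TDFusion: the "fusion module" is a single blend weight `w`, the "loss generation module" is a single parameter `theta`, the "task" is matching a target value, and gradients come from central finite differences rather than backpropagation. All function names are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def fuse(w, a, b):
    """Toy fusion module: blend two source values with weight w."""
    return w * a + (1.0 - w) * b


def fusion_loss(w, theta, a, b):
    """Learnable fusion loss: theta sets how strongly each source is matched."""
    s = sigmoid(theta)
    f = fuse(w, a, b)
    return s * (f - a) ** 2 + (1.0 - s) * (f - b) ** 2


def task_loss(f, target):
    """Stand-in for a downstream task loss."""
    return (f - target) ** 2


def grad(fn, x, eps=1e-5):
    """Central finite-difference derivative of a scalar function."""
    return (fn(x + eps) - fn(x - eps)) / (2.0 * eps)


def inner_step(w, theta, a, b, lr_w):
    """One gradient step on the fusion weight under the current fusion loss."""
    return w - lr_w * grad(lambda v: fusion_loss(v, theta, a, b), w)


def train(a=1.0, b=0.0, target=0.8, steps=200, lr_w=0.25, lr_theta=5.0):
    w, theta = 0.5, 0.0
    for _ in range(steps):
        # Meta objective: task loss of the fusion weight AFTER one inner step.
        def meta_objective(t):
            return task_loss(fuse(inner_step(w, t, a, b, lr_w), a, b), target)

        new_theta = theta - lr_theta * grad(meta_objective, theta)
        w = inner_step(w, theta, a, b, lr_w)  # fusion update with current loss
        theta = new_theta                     # loss-module update
    return w, theta


if __name__ == "__main__":
    w, theta = train()
    print(fuse(w, 1.0, 0.0), sigmoid(theta))
```

The fusion weight never sees the target directly; it only follows the fusion loss, and the fusion loss is steered by the task loss through the inner step. In this toy the fused value ends up close to the task target.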

Image Fusion via Vision-Language Model

Feb 03, 2024

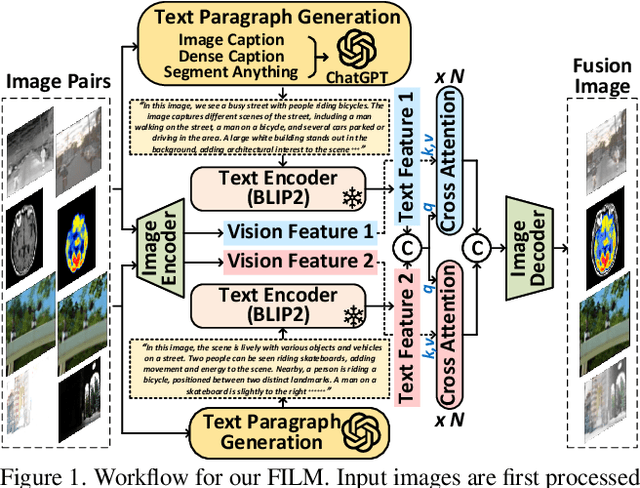

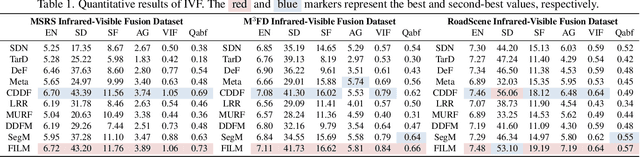

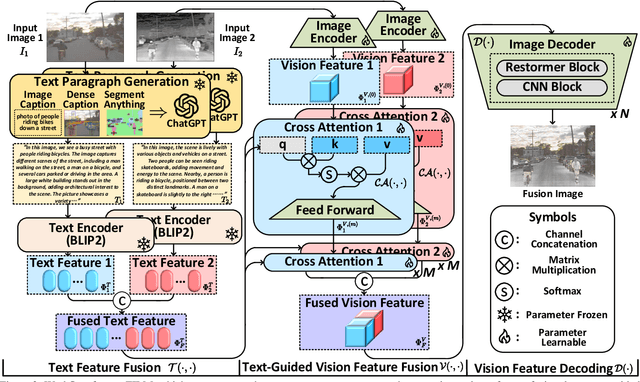

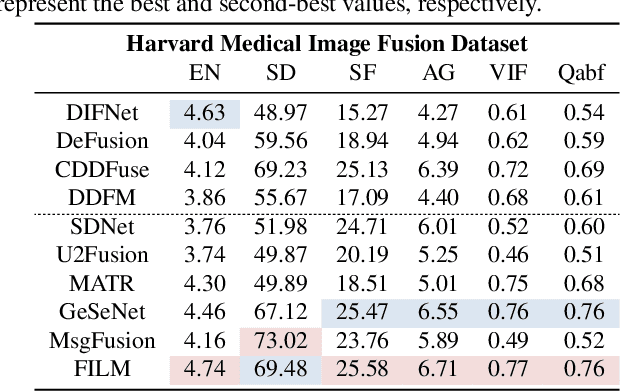

Abstract:Image fusion integrates essential information from multiple source images into a single composite, highlighting structure and textures and refining imperfect areas. Existing methods predominantly focus on pixel-level and semantic visual features for recognition. However, they insufficiently explore the deeper, text-level semantic information beyond vision. Therefore, we introduce a novel fusion paradigm named image Fusion via vIsion-Language Model (FILM), which for the first time utilizes explicit textual information from different source images to guide image fusion. In FILM, input images are first processed to generate semantic prompts, which are then fed into ChatGPT to obtain rich textual descriptions. These descriptions are fused in the textual domain and guide the extraction of crucial visual features from the source images through cross-attention, resulting in a deeper level of contextual understanding directed by textual semantic information. The final fused image is created by a vision feature decoder. This paradigm achieves satisfactory results in four image fusion tasks: infrared-visible, medical, multi-exposure, and multi-focus image fusion. We also propose a vision-language dataset containing ChatGPT-based paragraph descriptions for the ten image fusion datasets in four fusion tasks, facilitating future research in vision-language model-based image fusion. Code and dataset will be released.

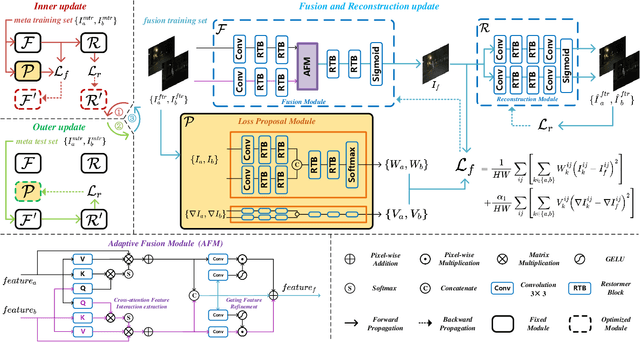

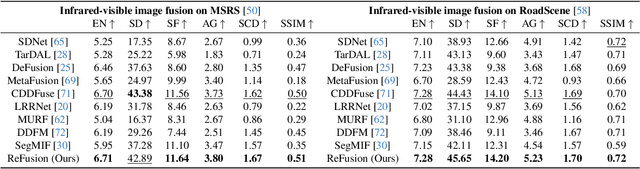

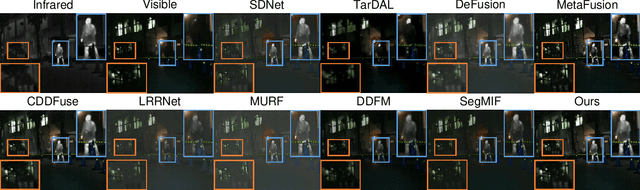

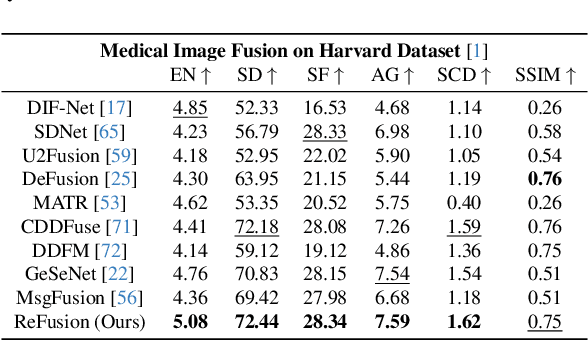

ReFusion: Learning Image Fusion from Reconstruction with Learnable Loss via Meta-Learning

Dec 13, 2023

Abstract:Image fusion aims to combine information from multiple source images into a single and more informative image. A major challenge for deep learning-based image fusion algorithms is the absence of a definitive ground truth and distance measurement. Thus, the manually specified loss functions that steer model learning include hyperparameters that need to be manually tuned, thereby limiting the model's flexibility and generalizability to unseen tasks. To overcome the limitations of designing loss functions for specific fusion tasks, we propose a unified meta-learning based fusion framework named ReFusion, which learns an optimal fusion loss from reconstructing source images. ReFusion consists of a fusion module, a loss proposal module, and a reconstruction module. Compared with conventional methods with fixed loss functions, ReFusion employs a parameterized loss function, which is dynamically adapted by the loss proposal module based on the specific fusion scene and task. To ensure that the fusion network preserves maximal information from the source images, making it possible to reconstruct the original images from the fused image, a meta-learning strategy is used to make the reconstruction loss continually refine the parameters of the loss proposal module. Adaptive updating is achieved by alternating between inner update, outer update, and fusion update, where the training of the three components facilitates each other. Extensive experiments affirm that our method can successfully adapt to diverse fusion tasks, including infrared-visible, multi-focus, multi-exposure, and medical image fusion problems. The code will be released.
