Lequan Lin

Expanding the Chaos: Neural Operator for Stochastic (Partial) Differential Equations

Jan 03, 2026

Abstract:Stochastic differential equations (SDEs) and stochastic partial differential equations (SPDEs) are fundamental tools for modeling stochastic dynamics across the natural sciences and modern machine learning. Developing deep learning models for approximating their solution operators promises not only fast, practical solvers, but may also inspire models that resolve classical learning tasks from a new perspective. In this work, we build on classical Wiener chaos expansions (WCE) to design neural operator (NO) architectures for SPDEs and SDEs: we project the driving noise paths onto orthonormal Wick Hermite features and parameterize the resulting deterministic chaos coefficients with neural operators, so that full solution trajectories can be reconstructed from noise in a single forward pass. On the theoretical side, we investigate the classical WCE results for the class of multi-dimensional SDEs and semilinear SPDEs considered here by explicitly writing down the associated coupled ODE/PDE systems for their chaos coefficients, which makes the separation between stochastic forcing and deterministic dynamics fully explicit and directly motivates our model designs. On the empirical side, we validate our models on a diverse suite of problems: classical SPDE benchmarks, diffusion one-step sampling on images, topological interpolation on graphs, financial extrapolation, parameter estimation, and manifold SDEs for flood prediction, demonstrating competitive accuracy and broad applicability. Overall, our results indicate that WCE-based neural operators provide a practical and scalable way to learn SDE/SPDE solution operators across diverse domains.

OmniSparse: Training-Aware Fine-Grained Sparse Attention for Long-Video MLLMs

Nov 18, 2025

Abstract:Existing sparse attention methods primarily target inference-time acceleration by selecting critical tokens under predefined sparsity patterns. However, they often fail to bridge the training-inference gap and lack the capacity for fine-grained token selection across multiple dimensions such as queries, key-values (KV), and heads, leading to suboptimal performance and limited acceleration gains. In this paper, we introduce OmniSparse, a training-aware fine-grained sparse attention framework for long-video MLLMs, which operates in both training and inference with dynamic token budget allocation. Specifically, OmniSparse contains three adaptive and complementary mechanisms: (1) query selection via lazy-active classification, retaining active queries that capture broad semantic similarity while discarding most lazy ones that focus on limited local context and exhibit high functional redundancy; (2) KV selection with head-level dynamic budget allocation, where a shared budget is determined based on the flattest head and applied uniformly across all heads to ensure attention recall; and (3) KV cache slimming to reduce head-level redundancy by selectively fetching visual KV cache according to the head-level decoding query pattern. Experimental results show that OmniSparse matches the performance of full attention while achieving up to 2.7x speedup during prefill and 2.4x memory reduction during decoding.

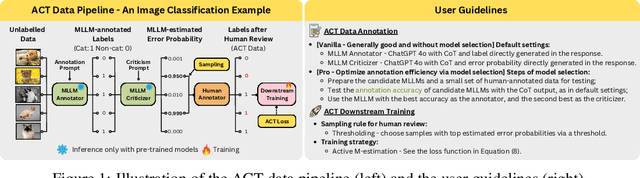

ACT as Human: Multimodal Large Language Model Data Annotation with Critical Thinking

Nov 13, 2025

Abstract:Supervised learning relies on high-quality labeled data, but obtaining such data through human annotation is both expensive and time-consuming. Recent work explores using large language models (LLMs) for annotation, but LLM-generated labels still fall short of human-level quality. To address this problem, we propose the Annotation with Critical Thinking (ACT) data pipeline, where LLMs serve not only as annotators but also as judges to critically identify potential errors. Human effort is then directed towards reviewing only the most "suspicious" cases, significantly improving the human annotation efficiency. Our major contributions are as follows: (1) ACT is applicable to a wide range of domains, including natural language processing (NLP), computer vision (CV), and multimodal understanding, by leveraging multimodal-LLMs (MLLMs). (2) Through empirical studies, we derive 7 insights on how to enhance annotation quality while efficiently reducing the human cost, and then translate these findings into user-friendly guidelines. (3) We theoretically analyze how to modify the loss function so that models trained on ACT data achieve similar performance to those trained on fully human-annotated data. Our experiments show that the performance gap can be reduced to less than 2% on most benchmark datasets while saving up to 90% of human costs.

ZipR1: Reinforcing Token Sparsity in MLLMs

Apr 23, 2025

Abstract:Sparse attention mechanisms aim to reduce computational overhead by selectively processing a subset of salient tokens while preserving model performance. Despite the effectiveness of such designs, how to actively encourage token sparsity of well-posed MLLMs remains under-explored, which fundamentally limits the achievable acceleration effect during inference. In this paper, we propose a simple RL-based post-training method named ZipR1 that treats the token reduction ratio as the efficiency reward and answer accuracy as the performance reward. In this way, our method can jointly alleviate the computation and memory bottlenecks via directly optimizing the inference-consistent efficiency-performance tradeoff. Experimental results demonstrate that ZipR1 can reduce the token ratio of Qwen2/2.5-VL from 80% to 25% with a minimal accuracy reduction on 13 image and video benchmarks.

Graph Pseudotime Analysis and Neural Stochastic Differential Equations for Analyzing Retinal Degeneration Dynamics and Beyond

Feb 10, 2025

Abstract:Understanding disease progression at the molecular pathway level usually requires capturing both structural dependencies between pathways and the temporal dynamics of disease evolution. In this work, we solve the former challenge by developing a biologically informed graph-forming method to efficiently construct pathway graphs for subjects from our newly curated JR5558 mouse transcriptomics dataset. We then develop Graph-level Pseudotime Analysis (GPA) to infer graph-level trajectories that reveal how disease progresses at the population level, rather than in individual subjects. Based on the trajectories estimated by GPA, we identify the most sensitive pathways that drive disease stage transitions. In addition, we measure changes in pathway features using neural stochastic differential equations (SDEs), which enables us to formally define and compute pathway stability and disease bifurcation points (points of no return), two fundamental problems in disease progression research. We further extend our theory to the case when pathways can interact with each other, enabling a more comprehensive and multi-faceted characterization of disease phenotypes. The comprehensive experimental results demonstrate the effectiveness of our framework in reconstructing the dynamics of the pathway, identifying critical transitions, and providing novel insights into the mechanistic understanding of disease evolution.

When Graph Neural Networks Meet Dynamic Mode Decomposition

Oct 08, 2024

Abstract:Graph Neural Networks (GNNs) have emerged as fundamental tools for a wide range of prediction tasks on graph-structured data. Recent studies have drawn analogies between GNN feature propagation and diffusion processes, which can be interpreted as dynamical systems. In this paper, we delve deeper into this perspective by connecting the dynamics in GNNs to modern Koopman theory and its numerical method, Dynamic Mode Decomposition (DMD). We illustrate how DMD can estimate a low-rank, finite-dimensional linear operator based on multiple states of the system, effectively approximating potential nonlinear interactions between nodes in the graph. This approach allows us to capture complex dynamics within the graph accurately and efficiently. We theoretically establish a connection between the DMD-estimated operator and the original dynamic operator between system states. Building upon this foundation, we introduce a family of DMD-GNN models that effectively leverage the low-rank eigenfunctions provided by the DMD algorithm. We further discuss the potential of enhancing our approach by incorporating domain-specific constraints such as symmetry into the DMD computation, allowing the corresponding GNN models to respect known physical properties of the underlying system. Our work paves the path for applying advanced dynamical system analysis tools via GNNs. We validate our approach through extensive experiments on various learning tasks, including directed graphs, large-scale graphs, long-range interactions, and spatial-temporal graphs. We also empirically verify that our proposed models can serve as powerful encoders for link prediction tasks. The results demonstrate that our DMD-enhanced GNNs achieve state-of-the-art performance, highlighting the effectiveness of integrating DMD into GNN frameworks.

Diffusing to the Top: Boost Graph Neural Networks with Minimal Hyperparameter Tuning

Oct 08, 2024

Abstract:Graph Neural Networks (GNNs) are proficient in graph representation learning and achieve promising performance on versatile tasks such as node classification and link prediction. Usually, a comprehensive hyperparameter tuning is essential for fully unlocking GNN's top performance, especially for complicated tasks such as node classification on large graphs and long-range graphs. This is usually associated with high computational and time costs and careful design of appropriate search spaces. This work introduces a graph-conditioned latent diffusion framework (GNN-Diff) to generate high-performing GNNs based on the model checkpoints of sub-optimal hyperparameters selected by a light-tuning coarse search. We validate our method through 166 experiments across four graph tasks: node classification on small, large, and long-range graphs, as well as link prediction. Our experiments involve 10 classic and state-of-the-art target models and 20 publicly available datasets. The results consistently demonstrate that GNN-Diff: (1) boosts the performance of GNNs with efficient hyperparameter tuning; and (2) presents high stability and generalizability on unseen data across multiple generation runs. The code is available at https://github.com/lequanlin/GNN-Diff.

Unleash Graph Neural Networks from Heavy Tuning

May 21, 2024

Abstract:Graph Neural Networks (GNNs) are deep-learning architectures designed for graph-type data, where understanding relationships among individual observations is crucial. However, achieving promising GNN performance, especially on unseen data, requires comprehensive hyperparameter tuning and meticulous training. Unfortunately, these processes come with high computational costs and significant human effort. Additionally, conventional searching algorithms such as grid search may result in overfitting on validation data, diminishing generalization accuracy. To tackle these challenges, we propose a graph conditional latent diffusion framework (GNN-Diff) to generate high-performing GNNs directly by learning from checkpoints saved during a light-tuning coarse search. Our method: (1) unleashes GNN training from heavy tuning and complex search space design; (2) produces GNN parameters that outperform those obtained through comprehensive grid search; and (3) establishes higher-quality generation for GNNs compared to diffusion frameworks designed for general neural networks.

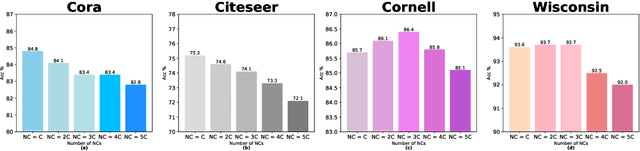

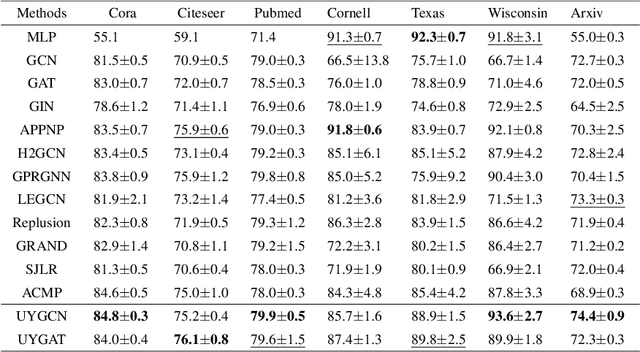

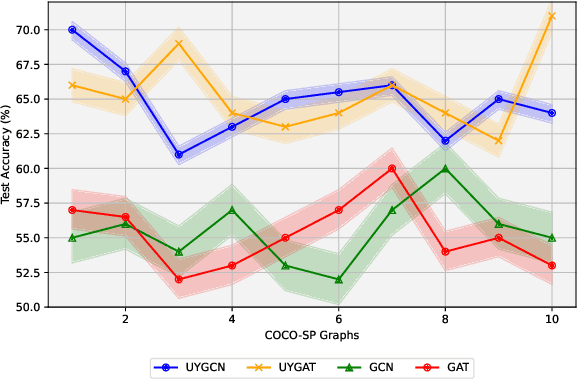

Design Your Own Universe: A Physics-Informed Agnostic Method for Enhancing Graph Neural Networks

Feb 05, 2024

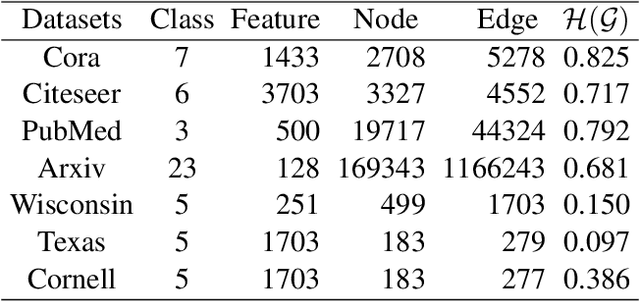

Abstract:Physics-informed Graph Neural Networks have achieved remarkable performance in learning through graph-structured data by mitigating common GNN challenges such as over-smoothing, over-squashing, and heterophily adaption. Despite these advancements, the development of a simple yet effective paradigm that appropriately integrates previous methods for handling all these challenges is still underway. In this paper, we draw an analogy between the propagation of GNNs and particle systems in physics, proposing a model-agnostic enhancement framework. This framework enriches the graph structure by introducing additional nodes and rewiring connections with both positive and negative weights, guided by node labeling information. We theoretically verify that GNNs enhanced through our approach can effectively circumvent the over-smoothing issue and exhibit robustness against over-squashing. Moreover, we conduct a spectral analysis on the rewired graph to demonstrate that the corresponding GNNs can fit both homophilic and heterophilic graphs. Empirical validations on benchmarks for homophilic, heterophilic graphs, and long-term graph datasets show that GNNs enhanced by our method significantly outperform their original counterparts.

SpecSTG: A Fast Spectral Diffusion Framework for Probabilistic Spatio-Temporal Traffic Forecasting

Jan 23, 2024

Abstract:Traffic forecasting, a crucial application of spatio-temporal graph (STG) learning, has traditionally relied on deterministic models for accurate point estimations. Yet, these models fall short of identifying latent risks of unexpected volatility in future observations. To address this gap, probabilistic methods, especially variants of diffusion models, have emerged as uncertainty-aware solutions. However, existing diffusion methods typically focus on generating separate future time series for individual sensors in the traffic network, resulting in insufficient involvement of spatial network characteristics in the probabilistic learning process. To better leverage spatial dependencies and systematic patterns inherent in traffic data, we propose SpecSTG, a novel spectral diffusion framework. Our method generates the Fourier representation of future time series, transforming the learning process into the spectral domain enriched with spatial information. Additionally, our approach incorporates a fast spectral graph convolution designed for Fourier input, alleviating the computational burden associated with existing models. Numerical experiments show that SpecSTG achieves outstanding performance with traffic flow and traffic speed datasets compared to state-of-the-art baselines. The source code for SpecSTG is available at https://anonymous.4open.science/r/SpecSTG.
