Exposition on over-squashing problem on GNNs: Current Methods, Benchmarks and Challenges

Paper and Code

Nov 17, 2023

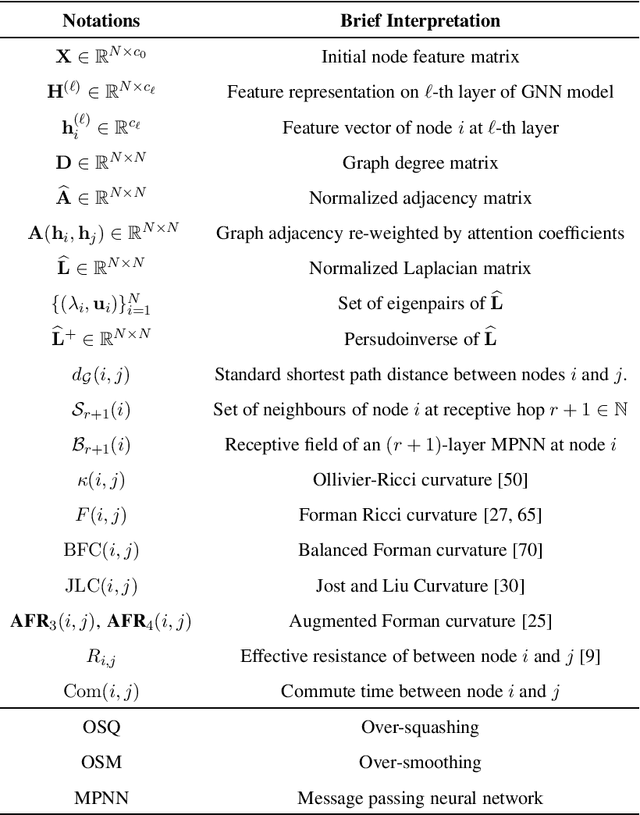

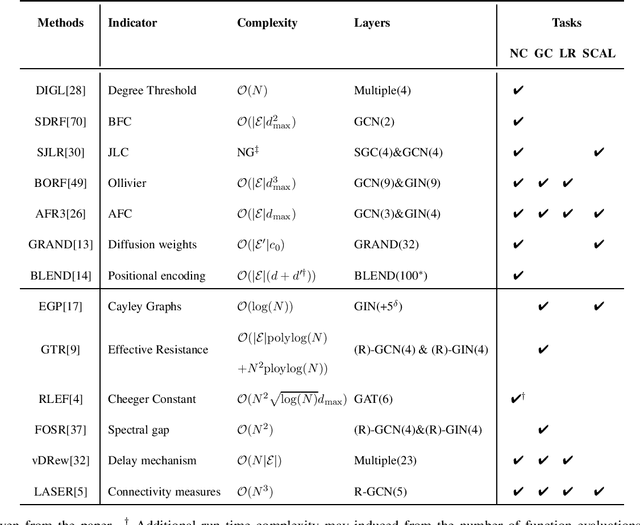

Graph-based message-passing neural networks (MPNNs) have achieved remarkable success in both node and graph-level learning tasks. However, several identified problems, including over-smoothing (OSM), limited expressive power, and over-squashing (OSQ), still limit the performance of MPNNs. In particular, OSQ serves as the latest identified problem, where MPNNs gradually lose their learning accuracy when long-range dependencies between graph nodes are required. In this work, we provide an exposition on the OSQ problem by summarizing different formulations of OSQ from current literature, as well as the three different categories of approaches for addressing the OSQ problem. In addition, we also discuss the alignment between OSQ and expressive power and the trade-off between OSQ and OSM. Furthermore, we summarize the empirical methods leveraged from existing works to verify the efficiency of OSQ mitigation approaches, with illustrations of their computational complexities. Lastly, we list some open questions that are of interest for further exploration of the OSQ problem along with potential directions from the best of our knowledge.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge