Leonard Bauersfeld

Unifying Quadrotor Motion Planning and Control by Chaining Different Fidelity Models

Dec 13, 2025

Abstract:Many aerial tasks involving quadrotors demand both instant reactivity and long-horizon planning. High-fidelity models enable accurate control but are too slow for long horizons; low-fidelity planners scale but degrade closed-loop performance. We present Unique, a unified MPC that cascades models of different fidelity within a single optimization: a short-horizon, high-fidelity model for accurate control, and a long-horizon, low-fidelity model for planning. We align costs across horizons, derive feasibility-preserving thrust and body-rate constraints for the point-mass model, and introduce transition constraints that match the different states, thrust-induced acceleration, and jerk-body-rate relations. To prevent local minima emerging from nonsmooth clutter, we propose a 3D progressive smoothing schedule that morphs norm-based obstacles along the horizon. In addition, we deploy parallel randomly initialized MPC solvers to discover lower-cost local minima on the long, low-fidelity horizon. In simulation and real flights, under equal computational budgets, Unique improves closed-loop position or velocity tracking by up to 75% compared with standard MPC and hierarchical planner-tracker baselines. Ablations and Pareto analyses confirm robust gains across horizon variations, constraint approximations, and smoothing schedules.

HDVIO2.0: Wind and Disturbance Estimation with Hybrid Dynamics VIO

Apr 01, 2025

Abstract:Visual-inertial odometry (VIO) is widely used for state estimation in autonomous micro aerial vehicles using onboard sensors. Current methods improve VIO by incorporating a model of the translational vehicle dynamics, yet their performance degrades when faced with low-accuracy vehicle models or continuous external disturbances, like wind. Additionally, incorporating rotational dynamics in these models is computationally intractable when they are deployed in online applications, e.g., in a closed-loop control system. We present HDVIO2.0, which models full 6-DoF, translational and rotational, vehicle dynamics and tightly incorporates them into a VIO with minimal impact on the runtime. HDVIO2.0 builds upon the previous work, HDVIO, and addresses these challenges through a hybrid dynamics model combining a point-mass vehicle model with a learning-based component, with access to control commands and IMU history, to capture complex aerodynamic effects. The key idea behind modeling the rotational dynamics is to represent them with continuous-time functions. HDVIO2.0 leverages the divergence between the actual motion and the predicted motion from the hybrid dynamics model to estimate external forces as well as the robot state. Our system surpasses the performance of state-of-the-art methods in experiments using public and new drone dynamics datasets, as well as real-world flights in winds up to 25 km/h. Unlike existing approaches, we also show that accurate vehicle dynamics predictions are achievable without precise knowledge of the full vehicle state.

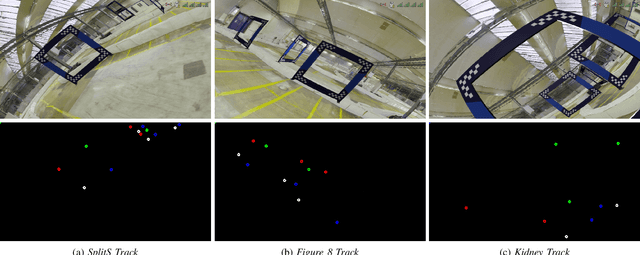

A Monocular Event-Camera Motion Capture System

Feb 17, 2025

Abstract:Motion capture systems are a widespread tool in research to record ground-truth poses of objects. Commercial systems use reflective markers attached to the object and then triangulate the pose of the object from multiple camera views. Consequently, the object must be visible to multiple cameras, which makes such multi-view motion capture systems unsuited for deployments in narrow, confined spaces (e.g., ballast tanks of ships). In this technical report, we describe a monocular event-camera motion capture system which overcomes this limitation and is ideally suited for narrow spaces. Instead of passive markers, it relies on active, blinking LED markers such that each marker can be uniquely identified from its blinking frequency. The markers are placed at known locations on the tracked object. We then solve the PnP (perspective-n-points) problem to obtain the position and orientation of the object. The developed system has millimeter accuracy and millisecond latency, and we demonstrate that its state estimate can be used to fly a small, agile quadrotor.
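As a rough illustration of how a marker could be identified from its blinking frequency, the following Python sketch estimates the frequency from the timestamps of successive LED turn-on events and matches it to the nearest known marker. The function names, the example frequencies, and the tolerance are hypothetical and are not taken from the report; the subsequent PnP step is not shown.

```python
from statistics import median


def estimate_blink_frequency(rising_edge_times_s):
    """Estimate the blink frequency in Hz from LED turn-on timestamps (seconds)."""
    if len(rising_edge_times_s) < 2:
        raise ValueError("need at least two rising edges")
    # Period between consecutive turn-on events; the median resists outliers.
    periods = [t1 - t0 for t0, t1 in zip(rising_edge_times_s, rising_edge_times_s[1:])]
    return 1.0 / median(periods)


def identify_marker(freq_hz, marker_freqs_hz, tolerance_hz=50.0):
    """Return the id of the marker with the closest frequency, or None if too far."""
    best_id = min(marker_freqs_hz, key=lambda m: abs(marker_freqs_hz[m] - freq_hz))
    if abs(marker_freqs_hz[best_id] - freq_hz) > tolerance_hz:
        return None
    return best_id


# Hypothetical marker table: marker id -> blink frequency in Hz (assumed values).
MARKERS = {"A": 1000.0, "B": 1500.0}
freq = estimate_blink_frequency([0.000, 0.001, 0.002, 0.003])
marker = identify_marker(freq, MARKERS)
```

Once every visible blob is labeled this way, the 2D detections and the known 3D marker positions form the correspondences that a PnP solver needs.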

Drift-free Visual SLAM using Digital Twins

Dec 12, 2024

Abstract:Globally-consistent localization in urban environments is crucial for autonomous systems such as self-driving vehicles and drones, as well as assistive technologies for visually impaired people. Traditional Visual-Inertial Odometry (VIO) and Visual Simultaneous Localization and Mapping (VSLAM) methods, though adequate for local pose estimation, suffer from drift in the long term due to reliance on local sensor data. While GPS counteracts this drift, it is unavailable indoors and often unreliable in urban areas. An alternative is to localize the camera to an existing 3D map using visual-feature matching. This can provide centimeter-level accurate localization but is limited by the visual similarities between the current view and the map. This paper introduces a novel approach that achieves accurate and globally-consistent localization by aligning the sparse 3D point cloud generated by the VIO/VSLAM system to a digital twin using point-to-plane matching; no visual data association is needed. The proposed method provides a 6-DoF global measurement tightly integrated into the VIO/VSLAM system. Experiments run on a high-fidelity GPS simulator and real-world data collected from a drone demonstrate that our approach outperforms state-of-the-art VIO-GPS systems and offers superior robustness against viewpoint changes compared to the state-of-the-art Visual SLAM systems.
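The point-to-plane matching mentioned above can be illustrated with a minimal Python sketch, assuming each sparse map point has already been associated with a plane of the digital twin. The function names and the plane representation (a point on the plane plus a normal) are assumptions made for illustration, not the paper's implementation.

```python
def point_to_plane_residual(point, plane_point, plane_normal):
    """Signed distance from a 3D point to a plane given by a point and a normal."""
    norm = sum(c * c for c in plane_normal) ** 0.5
    if norm == 0.0:
        raise ValueError("plane normal must be non-zero")
    # Project the offset from the plane onto the unit normal.
    return sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal)) / norm


def alignment_cost(points, planes):
    """Sum of squared point-to-plane distances over matched (point, plane) pairs."""
    return sum(
        point_to_plane_residual(p, q, n) ** 2 for p, (q, n) in zip(points, planes)
    )


# One point 2 m above a ground plane through the origin (normal need not be unit).
cost = alignment_cost([(0.0, 0.0, 2.0)], [((0.0, 0.0, 0.0), (0.0, 0.0, 2.0))])
```

An optimizer would minimize such a cost over the 6-DoF transform applied to the points; because only geometry enters the residual, no visual data association is involved.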

Demonstrating Agile Flight from Pixels without State Estimation

Jun 18, 2024

Abstract:Quadrotors are among the most agile flying robots. Despite recent advances in learning-based control and computer vision, autonomous drones still rely on explicit state estimation. On the other hand, human pilots only rely on a first-person-view video stream from the drone onboard camera to push the platform to its limits and fly robustly in unseen environments. To the best of our knowledge, we present the first vision-based quadrotor system that autonomously navigates through a sequence of gates at high speeds while directly mapping pixels to control commands. Like professional drone-racing pilots, our system does not use explicit state estimation and leverages the same control commands humans use (collective thrust and body rates). We demonstrate agile flight at speeds up to 40 km/h with accelerations up to 2g. This is achieved by training vision-based policies with reinforcement learning (RL). The training is facilitated using an asymmetric actor-critic with access to privileged information. To overcome the computational complexity during image-based RL training, we use the inner edges of the gates as a sensor abstraction. This simple yet robust, task-relevant representation can be simulated during training without rendering images. During deployment, a Swin-transformer-based gate detector is used. Our approach enables autonomous agile flight with standard, off-the-shelf hardware. Although our demonstration focuses on drone racing, we believe that our method has an impact beyond drone racing and can serve as a foundation for future research into real-world applications in structured environments.

Time-Optimal Flight with Safety Constraints and Data-driven Dynamics

Mar 26, 2024

Abstract:Time-optimal quadrotor flight is an extremely challenging problem due to the limited control authority encountered at the limit of handling. Model Predictive Contouring Control (MPCC) has emerged as a leading model-based approach for time optimization problems such as drone racing. However, the standard MPCC formulation used in quadrotor racing introduces the notion of the gates directly in the cost function, creating a multi-objective optimization that continuously trades off between maximizing progress and tracking the path accurately. This paper introduces three key components that enhance the MPCC approach for drone racing. First and foremost, we provide safety guarantees in the form of a constraint and terminal set. The safety set is designed as a spatial constraint which prevents gate collisions while allowing for time-optimization only in the cost function. Second, we augment the existing first-principles dynamics with a residual term that captures complex aerodynamic effects and thrust forces learned directly from real-world data. Third, we use Trust Region Bayesian Optimization (TuRBO), a state-of-the-art global Bayesian Optimization algorithm, to tune the hyperparameters of the MPC controller given a sparse reward based on lap time minimization. The proposed approach achieves similar lap times to the best state-of-the-art RL and outperforms the best time-optimal controller while satisfying constraints. In both simulation and the real world, our approach consistently prevents gate crashes with a 100% success rate, while pushing the quadrotor to its physical limit, reaching speeds of more than 80 km/h.

Robotics meets Fluid Dynamics: A Characterization of the Induced Airflow around a Quadrotor

Mar 20, 2024

Abstract:The widespread adoption of quadrotors for diverse applications, from agriculture to public safety, necessitates an understanding of the aerodynamic disturbances they create. This paper introduces a computationally lightweight model for estimating the time-averaged magnitude of the induced flow below quadrotors in hover. Unlike related approaches that rely on expensive computational fluid dynamics (CFD) simulations or time-consuming empirical measurements, our method leverages classical theory from turbulent flows. By analyzing over 9 hours of flight data from drones of varying sizes within a large motion capture system, we show that the combined flow from all propellers of the drone is well-approximated by a turbulent jet. Through the use of a novel normalization and scaling, we have developed and experimentally validated a unified model that describes the mean velocity field of the induced flow for different drone sizes. The model accurately describes the far-field airflow in a very large volume below the drone which is difficult to simulate in CFD. Our model, which requires only the drone's mass, propeller size, and drone size for calculations, offers a practical tool for dynamic planning in multi-agent scenarios, ensuring safer operations near humans and optimizing sensor placements.
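For a sense of scale, the induced velocity at the rotor disc in hover can be estimated with classical momentum theory, which needs only the drone's mass and propeller radius. The Python sketch below shows that textbook estimate only; it is not the turbulent-jet model from the paper, and the function name and default values (four propellers, sea-level air density) are assumptions.

```python
from math import pi, sqrt


def hover_induced_velocity(mass_kg, prop_radius_m, num_props=4,
                           air_density=1.225, gravity=9.81):
    """Momentum-theory induced velocity (m/s) at the rotor disc in hover.

    Each propeller carries an equal share of the weight, and
    v_i = sqrt(T / (2 * rho * A)) with disc area A = pi * r^2.
    """
    thrust_per_prop = mass_kg * gravity / num_props
    disc_area = pi * prop_radius_m ** 2
    return sqrt(thrust_per_prop / (2.0 * air_density * disc_area))


# Assumed example: a 1 kg quadrotor with 5-inch (0.0635 m radius) propellers.
v_hover = hover_induced_velocity(1.0, 0.0635)
```

This value describes the flow at the disc only; how the combined flow spreads and decays far below the drone is what the paper's model addresses.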

Bootstrapping Reinforcement Learning with Imitation for Vision-Based Agile Flight

Mar 18, 2024

Abstract:We combine the effectiveness of Reinforcement Learning (RL) and the efficiency of Imitation Learning (IL) in the context of vision-based, autonomous drone racing. We focus on directly processing visual input without explicit state estimation. While RL offers a general framework for learning complex controllers through trial and error, it faces challenges regarding sample efficiency and computational demands due to the high dimensionality of visual inputs. Conversely, IL demonstrates efficiency in learning from visual demonstrations but is limited by the quality of those demonstrations and faces issues like covariate shift. To overcome these limitations, we propose a novel training framework combining the advantages of RL and IL. Our framework involves three stages: initial training of a teacher policy using privileged state information, distilling this policy into a student policy using IL, and performance-constrained adaptive RL fine-tuning. Our experiments in both simulated and real-world environments demonstrate that our approach achieves better performance and robustness than IL or RL alone in navigating a quadrotor through a racing course using only visual information without explicit state estimation.

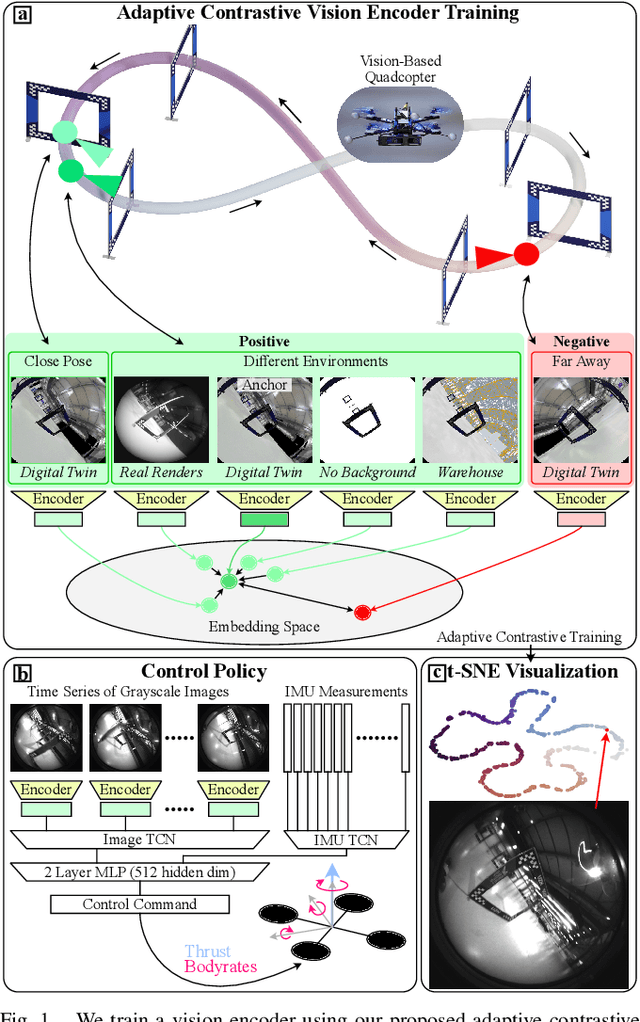

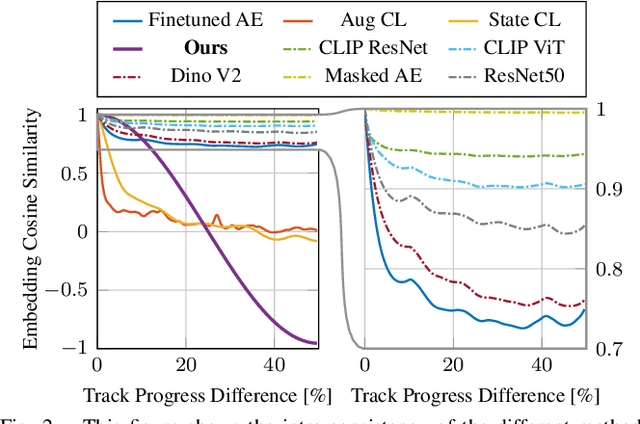

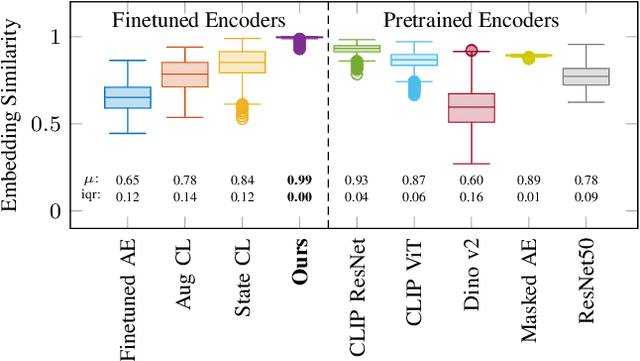

Contrastive Learning for Enhancing Robust Scene Transfer in Vision-based Agile Flight

Sep 29, 2023

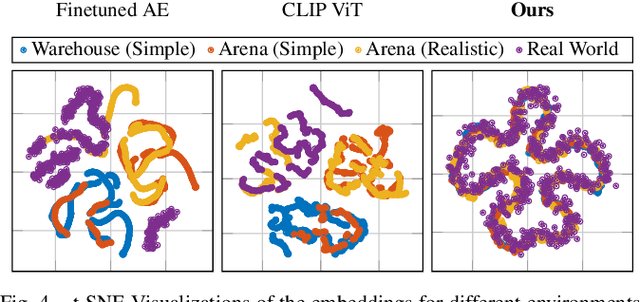

Abstract:Scene transfer for vision-based mobile robotics applications is a highly relevant and challenging problem. The utility of a robot greatly depends on its ability to perform a task in the real world, outside of a well-controlled lab environment. Existing scene transfer end-to-end policy learning approaches often suffer from poor sample efficiency or limited generalization capabilities, making them unsuitable for mobile robotics applications. This work proposes an adaptive multi-pair contrastive learning strategy for visual representation learning that enables zero-shot scene transfer and real-world deployment. Control policies relying on the embedding are able to operate in unseen environments without the need for finetuning in the deployment environment. We demonstrate the performance of our approach on the task of agile, vision-based quadrotor flight. Extensive simulation and real-world experiments demonstrate that our approach successfully generalizes beyond the training domain and outperforms all baselines.

Agilicious: Open-Source and Open-Hardware Agile Quadrotor for Vision-Based Flight

Jul 12, 2023

Abstract:Autonomous, agile quadrotor flight raises fundamental challenges for robotics research in terms of perception, planning, learning, and control. A versatile and standardized platform is needed to accelerate research and let practitioners focus on the core problems. To this end, we present Agilicious, a co-designed hardware and software framework tailored to autonomous, agile quadrotor flight. It is completely open-source and open-hardware and supports both model-based and neural-network-based controllers. Also, it provides high thrust-to-weight and torque-to-inertia ratios for agility, onboard vision sensors, GPU-accelerated compute hardware for real-time perception and neural-network inference, a real-time flight controller, and a versatile software stack. In contrast to existing frameworks, Agilicious offers a unique combination of flexible software stack and high-performance hardware. We compare Agilicious with prior works and demonstrate it on different agile tasks, using both model-based and neural-network-based controllers. Our demonstrators include trajectory tracking at up to 5g and 70 km/h in a motion-capture system, and vision-based acrobatic flight and obstacle avoidance in both structured and unstructured environments using solely onboard perception. Finally, we demonstrate its use for hardware-in-the-loop simulation in virtual-reality environments. Thanks to its versatility, we believe that Agilicious supports the next generation of scientific and industrial quadrotor research.

* 14 pages, 5 figures, 2 tables
