Kyle Rupnow

SkyNet: a Hardware-Efficient Method for Object Detection and Tracking on Embedded Systems

Sep 20, 2019

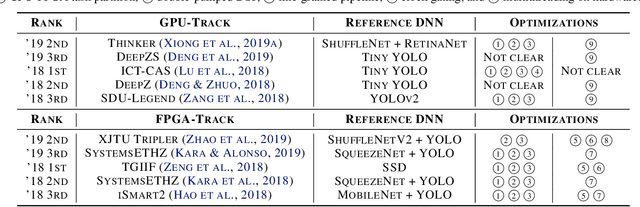

Abstract:Developing object detection and tracking on resource-constrained embedded systems is challenging. While object detection is one of the most compute-intensive tasks from the artificial intelligence domain, it is only allowed to use limited computation and memory resources on embedded devices. In the meanwhile, such resource-constrained implementations are often required to satisfy additional demanding requirements such as real-time response, high-throughput performance, and reliable inference accuracy. To overcome these challenges, we propose SkyNet, a hardware-efficient method to deliver the state-of-the-art detection accuracy and speed for embedded systems. Instead of following the common top-down flow for compact DNN design, SkyNet provides a bottom-up DNN design approach with comprehensive understanding of the hardware constraints at the very beginning to deliver hardware-efficient DNNs. The effectiveness of SkyNet is demonstrated by winning the extremely competitive System Design Contest for low power object detection in the 56th IEEE/ACM Design Automation Conference (DAC-SDC), where our SkyNet significantly outperforms all other 100+ competitors: it delivers 0.731 Intersection over Union (IoU) and 67.33 frames per second (FPS) on a TX2 embedded GPU; and 0.716 IoU and 25.05 FPS on an Ultra96 embedded FPGA. The evaluation of SkyNet is also extended to GOT-10K, a recent large-scale high-diversity benchmark for generic object tracking in the wild. For state-of-the-art object trackers SiamRPN++ and SiamMask, where ResNet-50 is employed as the backbone, implementations using our SkyNet as the backbone DNN are 1.60X and 1.73X faster with better or similar accuracy when running on a 1080Ti GPU, and 37.20X smaller in terms of parameter size for significantly better memory and storage footprint.

SkyNet: A Champion Model for DAC-SDC on Low Power Object Detection

Jul 09, 2019

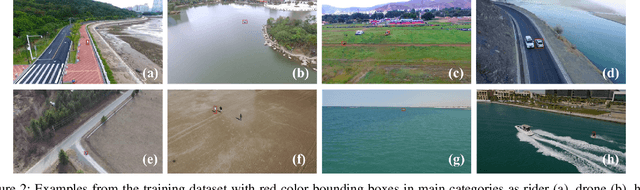

Abstract:Developing artificial intelligence (AI) at the edge is always challenging, since edge devices have limited computation capability and memory resources but need to meet demanding requirements, such as real-time processing, high throughput performance, and high inference accuracy. To overcome these challenges, we propose SkyNet, an extremely lightweight DNN with 12 convolutional (Conv) layers and only 1.82 megabyte (MB) of parameters following a bottom-up DNN design approach. SkyNet is demonstrated in the 56th IEEE/ACM Design Automation Conference System Design Contest (DAC-SDC), a low power object detection challenge in images captured by unmanned aerial vehicles (UAVs). SkyNet won the first place award for both the GPU and FPGA tracks of the contest: we deliver 0.731 Intersection over Union (IoU) and 67.33 frames per second (FPS) on a TX2 GPU and deliver 0.716 IoU and 25.05 FPS on an Ultra96 FPGA.

FPGA/DNN Co-Design: An Efficient Design Methodology for IoT Intelligence on the Edge

Apr 09, 2019

Abstract:While embedded FPGAs are attractive platforms for DNN acceleration on edge-devices due to their low latency and high energy efficiency, the scarcity of resources of edge-scale FPGA devices also makes it challenging for DNN deployment. In this paper, we propose a simultaneous FPGA/DNN co-design methodology with both bottom-up and top-down approaches: a bottom-up hardware-oriented DNN model search for high accuracy, and a top-down FPGA accelerator design considering DNN-specific characteristics. We also build an automatic co-design flow, including an Auto-DNN engine to perform hardware-oriented DNN model search, as well as an Auto-HLS engine to generate synthesizable C code of the FPGA accelerator for explored DNNs. We demonstrate our co-design approach on an object detection task using PYNQ-Z1 FPGA. Results show that our proposed DNN model and accelerator outperform the state-of-the-art FPGA designs in all aspects including Intersection-over-Union (IoU) (6.2% higher), frames per second (FPS) (2.48X higher), power consumption (40% lower), and energy efficiency (2.5X higher). Compared to GPU-based solutions, our designs deliver similar accuracy but consume far less energy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge