Kilian Rambach

Radar Spectra-Language Model for Automotive Scene Parsing

Jun 04, 2024

Abstract: Radar sensors are low cost, long-range, and weather-resilient. Therefore, they are widely used for driver assistance functions, and are expected to be crucial for the success of autonomous driving in the future. In many perception tasks only pre-processed radar point clouds are considered. In contrast, radar spectra are a raw form of radar measurements and contain more information than radar point clouds. However, radar spectra are rather difficult to interpret. In this work, we aim to explore the semantic information contained in spectra in the context of automated driving, thereby moving towards better interpretability of radar spectra. To this end, we create a radar spectra-language model, allowing us to query radar spectra measurements for the presence of scene elements using free text. We overcome the scarcity of radar spectra data by matching the embedding space of an existing vision-language model (VLM). Finally, we explore the benefit of the learned representation for scene parsing, and obtain improvements in free space segmentation and object detection merely by injecting the spectra embedding into a baseline model.

Histogram-based Deep Learning for Automotive Radar

Mar 06, 2023

Abstract: There are various automotive applications that rely on correctly interpreting point cloud data recorded with radar sensors. We present a deep learning approach for histogram-based processing of such point clouds. Compared to existing methods, the design of our approach is extremely simple: it boils down to computing a point cloud histogram and passing it through a multi-layer perceptron. Our approach matches and surpasses state-of-the-art approaches on the task of automotive radar object type classification. It is also robust to noise that often corrupts radar measurements, and can deal with missing features of single radar reflections. Finally, the design of our approach makes it more interpretable than existing methods, allowing insightful analysis of its decisions.
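
The two steps named in the abstract above (compute a point cloud histogram, pass it through a multi-layer perceptron) can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: the 2D point features, the 4x4 grid, the layer sizes, the random untrained weights, and the helper names `point_cloud_histogram`, `mlp_forward`, and `random_layer`.

```python
import math
import random


def point_cloud_histogram(points, bins, lo, hi):
    """Count 2D points into a bins x bins grid over [lo, hi) and flatten it."""
    hist = [0.0] * (bins * bins)
    width = (hi - lo) / bins
    for x, y in points:
        if not (lo <= x < hi and lo <= y < hi):
            continue  # points outside the grid are ignored
        ix = min(int((x - lo) / width), bins - 1)
        iy = min(int((y - lo) / width), bins - 1)
        hist[ix * bins + iy] += 1.0
    total = sum(hist)
    # Normalize so the feature vector does not depend on the number of points.
    return [h / total for h in hist] if total > 0 else hist


def mlp_forward(x, layers):
    """Apply dense layers with ReLU in between; the last layer stays linear."""
    for i, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_in, n_out, rng):
    """Hypothetical untrained layer: random weights, zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(0)
# Toy reflections as (x, y) positions; the last one lies outside the grid.
points = [(0.5, 0.5), (0.6, 0.4), (2.5, 3.5), (9.0, 9.0)]
features = point_cloud_histogram(points, bins=4, lo=0.0, hi=4.0)
layers = [random_layer(16, 8, rng), random_layer(8, 3, rng)]
probs = softmax(mlp_forward(features, layers))
```

Because the histogram has a fixed size regardless of how many reflections were measured, a plain MLP can consume it directly; a trained model would replace the random weights used here.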

DeepHybrid: Deep Learning on Automotive Radar Spectra and Reflections for Object Classification

Feb 17, 2022

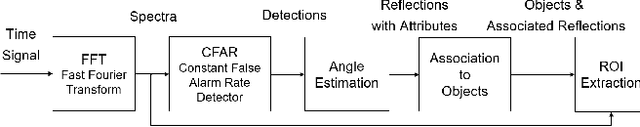

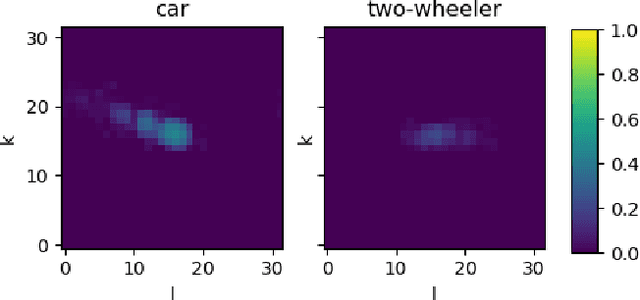

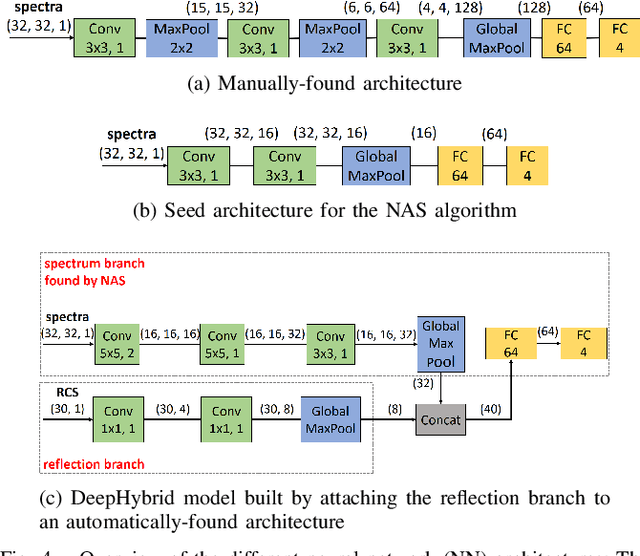

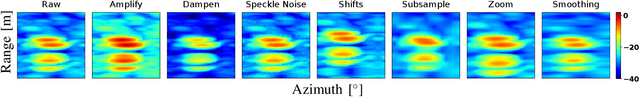

Abstract: Automated vehicles need to detect and classify objects and traffic participants accurately. Reliable object classification using automotive radar sensors has proved to be challenging. We propose a method that combines classical radar signal processing and Deep Learning algorithms. The range-azimuth information on the radar reflection level is used to extract a sparse region of interest from the range-Doppler spectrum. This is used as input to a neural network (NN) that classifies different types of stationary and moving objects. We present a hybrid model (DeepHybrid) that receives both radar spectra and reflection attributes as inputs, e.g. radar cross-section. Experiments show that this improves the classification performance compared to models using only spectra. Moreover, a neural architecture search (NAS) algorithm is applied to find a resource-efficient and high-performing NN. NAS yields an almost one order of magnitude smaller NN than the manually-designed one while preserving the accuracy. The proposed method can be used for example to improve automatic emergency braking or collision avoidance systems.

Improving Uncertainty of Deep Learning-based Object Classification on Radar Spectra using Label Smoothing

Sep 27, 2021

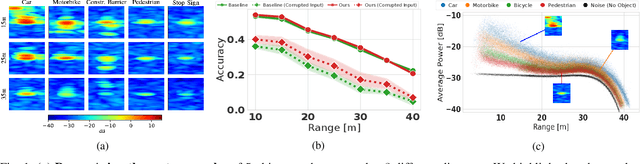

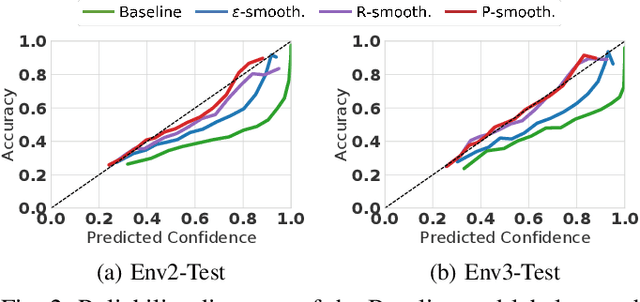

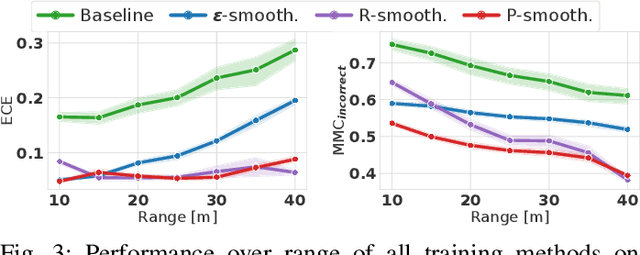

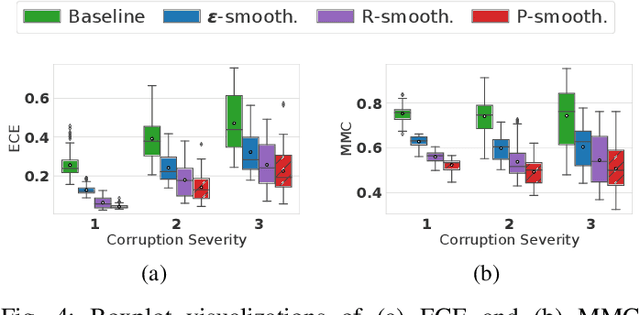

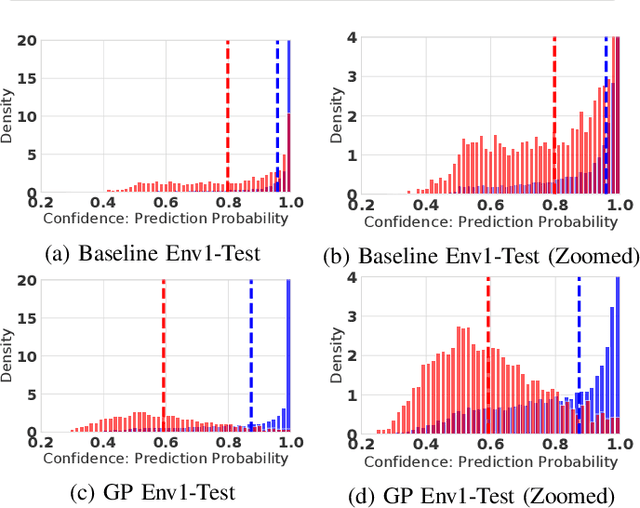

Abstract: Object type classification for automotive radar has greatly improved with recent deep learning (DL) solutions; however, these developments have mostly focused on classification accuracy. Before employing DL solutions in safety-critical applications, such as automated driving, an indispensable prerequisite is the accurate quantification of the classifiers' reliability. Unfortunately, DL classifiers are characterized as black-box systems which output severely over-confident predictions, leading downstream decision-making systems to false conclusions with possibly catastrophic consequences. We find that deep radar classifiers maintain high confidence for ambiguous, difficult samples, e.g. small objects measured at large distances, under domain shift and signal corruptions, regardless of the correctness of the predictions. The focus of this article is to learn deep radar spectra classifiers which offer robust real-time uncertainty estimates using label smoothing during training. Label smoothing is a technique of refining, or softening, the hard labels typically available in classification datasets. In this article, we exploit radar-specific know-how to define soft labels which encourage the classifiers to learn to output high-quality calibrated uncertainty estimates, thereby partially resolving the problem of over-confidence. Our investigations show how simple radar knowledge can easily be combined with complex data-driven learning algorithms to yield safe automotive radar perception.
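
To make the idea of softening hard labels concrete, here is a minimal sketch of standard uniform label smoothing. This is the generic textbook variant, not the radar-specific soft labels the abstract describes; the smoothing factor `eps`, the class count, and the function names are assumptions chosen for illustration.

```python
import math


def smooth_labels(label, num_classes, eps):
    """Uniform label smoothing: move eps of the probability mass off the true class."""
    base = eps / num_classes
    return [base + (1.0 - eps if k == label else 0.0)
            for k in range(num_classes)]


def cross_entropy(target, probs):
    """Cross-entropy between a (soft) target distribution and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0.0)


soft = smooth_labels(label=2, num_classes=4, eps=0.1)

# Two hypothetical predictions that both pick the correct class 2.
overconfident = [0.001, 0.001, 0.997, 0.001]
moderate = [0.025, 0.025, 0.925, 0.025]

loss_overconfident = cross_entropy(soft, overconfident)
loss_moderate = cross_entropy(soft, moderate)
```

With the soft target, the over-confident prediction incurs the larger loss even though both predictions are correct, which is the mechanism that discourages extreme confidence during training.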

DiagViB-6: A Diagnostic Benchmark Suite for Vision Models in the Presence of Shortcut and Generalization Opportunities

Aug 12, 2021

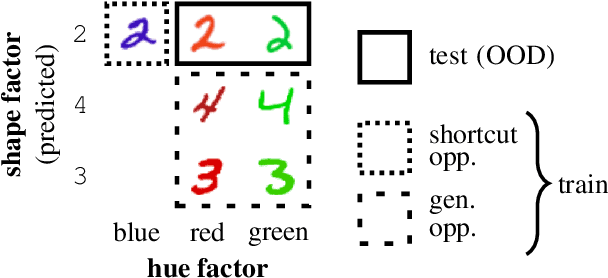

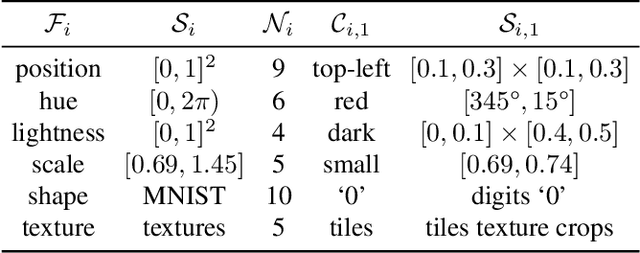

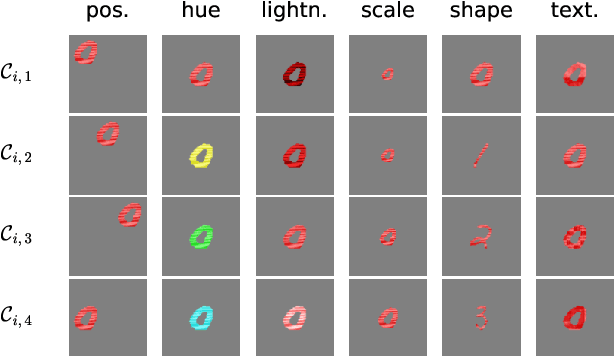

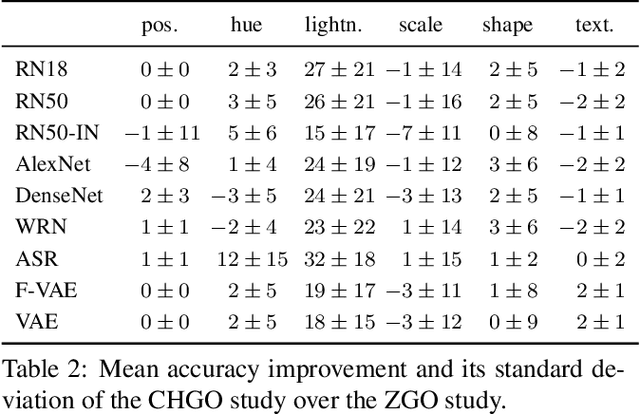

Abstract: Common deep neural networks (DNNs) for image classification have been shown to rely on shortcut opportunities (SO) in the form of predictive and easy-to-represent visual factors. This is known as shortcut learning and leads to impaired generalization. In this work, we show that common DNNs also suffer from shortcut learning when predicting only basic visual object factors of variation (FoV) such as shape, color, or texture. We argue that besides shortcut opportunities, generalization opportunities (GO) are also an inherent part of real-world vision data and arise from partial independence between predicted classes and FoVs. We also argue that it is necessary for DNNs to exploit GO to overcome shortcut learning. Our core contribution is to introduce the Diagnostic Vision Benchmark suite DiagViB-6, which includes datasets and metrics to study a network's shortcut vulnerability and generalization capability for six independent FoV. In particular, DiagViB-6 allows controlling the type and degree of SO and GO in a dataset. We benchmark a wide range of popular vision architectures and show that they can exploit GO only to a limited extent.

Test-Time Adaptation to Distribution Shift by Confidence Maximization and Input Transformation

Jun 28, 2021

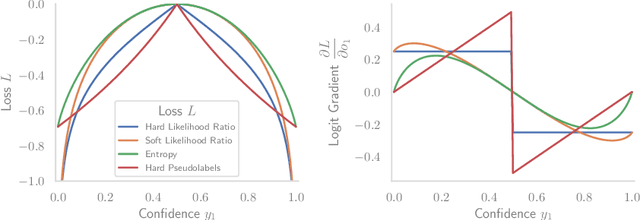

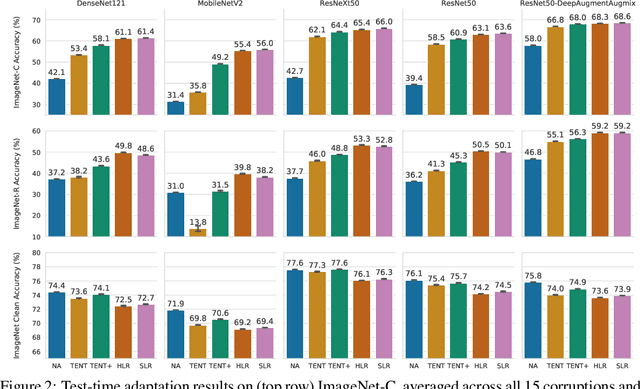

Abstract: Deep neural networks often exhibit poor performance on data that is unlikely under the train-time data distribution, for instance data affected by corruptions. Previous works demonstrate that test-time adaptation to data shift, for instance using entropy minimization, effectively improves performance on such shifted distributions. This paper focuses on the fully test-time adaptation setting, where only unlabeled data from the target distribution is required. This allows adapting arbitrary pretrained networks. Specifically, we propose a novel loss that improves test-time adaptation by addressing both premature convergence and instability of entropy minimization. This is achieved by replacing the entropy by a non-saturating surrogate and adding a diversity regularizer based on batch-wise entropy maximization that prevents convergence to trivial collapsed solutions. Moreover, we propose to prepend an input transformation module to the network that can partially undo test-time distribution shifts. Surprisingly, this preprocessing can be learned solely using the fully test-time adaptation loss in an end-to-end fashion without any target domain labels or source domain data. We show that our approach outperforms previous work in improving the robustness of publicly available pretrained image classifiers to common corruptions on such challenging benchmarks as ImageNet-C.
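
The interplay between per-sample entropy minimization and a batch-wise diversity term can be sketched as follows. This is a simplified illustration, not the loss proposed in the paper: it keeps the plain entropy rather than the non-saturating surrogate mentioned in the abstract, and the weighting `diversity_weight` and the function names are assumptions.

```python
import math


def entropy(probs):
    """Shannon entropy of a discrete distribution, in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def tta_loss(batch_probs, diversity_weight=1.0):
    """Mean per-sample entropy minus the entropy of the batch-averaged prediction.

    Minimizing the first term makes each prediction confident; subtracting the
    second term rewards batches whose predictions spread over several classes.
    """
    n = len(batch_probs)
    num_classes = len(batch_probs[0])
    mean_sample_entropy = sum(entropy(p) for p in batch_probs) / n
    marginal = [sum(p[k] for p in batch_probs) / n for k in range(num_classes)]
    return mean_sample_entropy - diversity_weight * entropy(marginal)


# Both batches are equally confident per sample, but the first one has
# collapsed onto a single class.
collapsed = [[0.98, 0.01, 0.01]] * 3
diverse = [[0.98, 0.01, 0.01], [0.01, 0.98, 0.01], [0.01, 0.01, 0.98]]

loss_collapsed = tta_loss(collapsed)
loss_diverse = tta_loss(diverse)
```

Entropy minimization alone cannot tell these two batches apart, since their per-sample entropies are identical; the diversity term gives the non-collapsed batch the lower loss.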

Investigation of Uncertainty of Deep Learning-based Object Classification on Radar Spectra

Jun 01, 2021

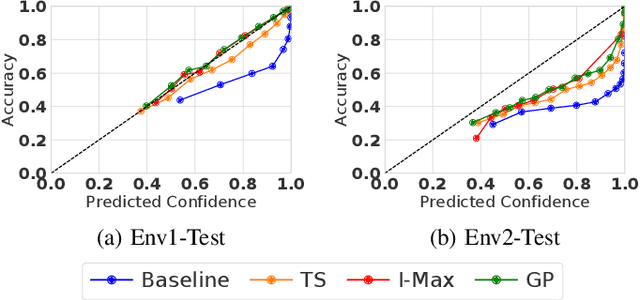

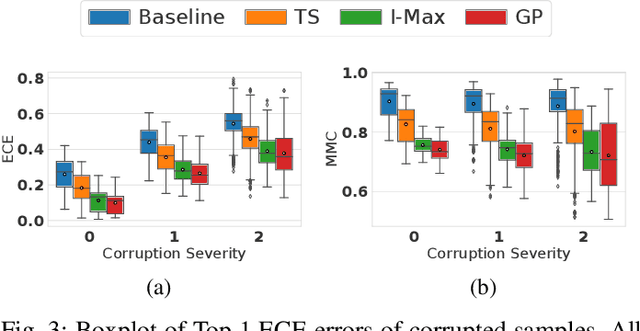

Abstract: Deep learning (DL) has recently attracted increasing interest to improve object type classification for automotive radar. In addition to high accuracy, it is crucial for decision making in autonomous vehicles to evaluate the reliability of the predictions; however, decisions of DL networks are non-transparent. Current DL research has investigated how uncertainties of predictions can be quantified, and in this article, we evaluate the potential of these methods for safe, automotive radar perception. In particular, we evaluate how uncertainty quantification can support radar perception under (1) domain shift, (2) corruptions of input signals, and (3) in the presence of unknown objects. We find that, in agreement with phenomena observed in the literature, deep radar classifiers are overly confident, even in their wrong predictions. This raises concerns about the use of the confidence values for decision making under uncertainty, as the model fails to notify when it cannot handle an unknown situation. Accurate confidence values would allow optimal integration of multiple information sources, e.g. via sensor fusion. We show that by applying state-of-the-art post-hoc uncertainty calibration, the quality of confidence measures can be significantly improved, thereby partially resolving the over-confidence problem. Our investigation shows that further research into training and calibrating DL networks is necessary and offers great potential for safe automotive object classification with radar sensors.

* 6 pages
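
The abstract above does not name the post-hoc calibration method it applies. As one common example of such a method, the sketch below shows temperature scaling, where a single scalar is fitted on held-out data and divides the logits before the softmax. The toy validation logits, the labels, the candidate grid, and the function names are all assumptions made for illustration.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by a scalar temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_batch, labels, temperature):
    """Mean negative log-likelihood of the true labels at a given temperature."""
    losses = [-math.log(softmax_with_temperature(logits, temperature)[y])
              for logits, y in zip(logit_batch, labels)]
    return sum(losses) / len(losses)


def fit_temperature(logit_batch, labels, candidates):
    """Pick the candidate temperature with the lowest validation NLL (grid search)."""
    return min(candidates, key=lambda t: nll(logit_batch, labels, t))


# Toy validation set: every prediction is very confident, but two are wrong.
val_logits = [[8.0, 0.0, 0.0], [7.0, 1.0, 0.0], [6.0, 0.0, 1.0], [0.0, 9.0, 1.0]]
val_labels = [0, 1, 0, 2]
candidates = [0.5 + 0.25 * i for i in range(39)]  # 0.5, 0.75, ..., 10.0

best_t = fit_temperature(val_logits, val_labels, candidates)
conf_before = max(softmax_with_temperature(val_logits[0], 1.0))
conf_after = max(softmax_with_temperature(val_logits[0], best_t))
```

A fitted temperature above 1 flattens the predicted distribution and lowers the reported confidence without changing which class is predicted, so accuracy is unaffected.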
