Keyou You

ReLU Networks for Model Predictive Control: Network Complexity and Performance Guarantees

Jan 23, 2026

Abstract: Recent years have witnessed a resurgence in using ReLU neural networks (NNs) to represent model predictive control (MPC) policies. However, determining the network complexity required to ensure closed-loop performance remains a fundamental open problem. This involves a critical precision-complexity trade-off: undersized networks may fail to capture the MPC policy, while the cost of oversized ones may outweigh the benefits of ReLU network approximation. In this work, we propose a projection-based method to enforce hard constraints and establish a state-dependent Lipschitz continuity property for the optimal MPC cost function, which enables a sharp convergence analysis of the closed-loop system. For the first time, we derive explicit bounds on ReLU network width and depth for approximating MPC policies with guaranteed closed-loop performance. To further reduce network complexity and enhance closed-loop performance, we propose a non-uniform error framework with a state-aware scaling function that adaptively adjusts both the input and output of the ReLU network. Our contributions provide a foundational step toward certifiable ReLU NN-based MPC.
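
The projection step named in this abstract can be pictured with a minimal sketch. It assumes box input constraints $u_{\min} \le u \le u_{\max}$, for which the Euclidean projection reduces to element-wise clipping; the network weights and the helpers `relu_policy` and `project_box` are hypothetical illustrations, not the paper's construction.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in v]


def affine(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Compute W @ x + b with plain lists."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def relu_policy(layers: List[Layer], x: Vector) -> Vector:
    """Evaluate a ReLU network: ReLU on hidden layers, linear output layer."""
    h = x
    for W, b in layers[:-1]:
        h = relu(affine(W, b, h))
    W_out, b_out = layers[-1]
    return affine(W_out, b_out, h)


def project_box(u: Vector, u_min: Vector, u_max: Vector) -> Vector:
    """Euclidean projection onto the box [u_min, u_max] (element-wise clip)."""
    return [min(max(ui, lo), hi) for ui, lo, hi in zip(u, u_min, u_max)]


# Hypothetical 2-state, 1-input network with one hidden layer of width 2.
layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 2.0]], [0.0, -0.5]),  # hidden layer (W1, b1)
    ([[3.0, -1.0]], [0.1]),                    # output layer (W2, b2)
]

x = [1.0, 0.2]
u_raw = relu_policy(layers, x)                     # unconstrained NN output
u = project_box(u_raw, u_min=[-1.0], u_max=[1.0])  # feasible control input
```

With the state above, the raw network output is about 2.1, which violates the bound and is projected to 1.0. For general polyhedral constraints the projection is a small quadratic program rather than a clip.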

Deep Reinforcement Learning for Traveling Purchaser Problems

Apr 11, 2024

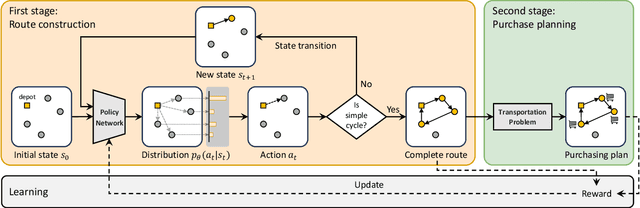

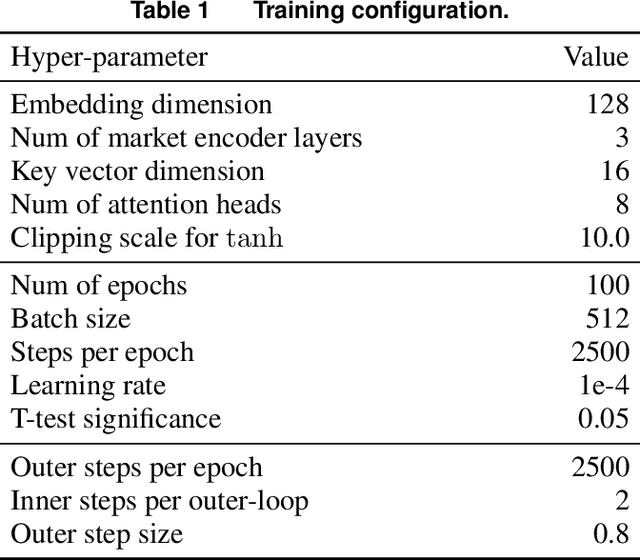

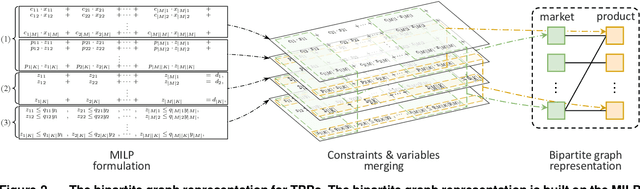

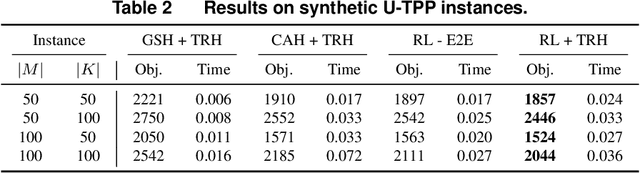

Abstract: The traveling purchaser problem (TPP) is an important combinatorial optimization problem with broad applications. Due to the coupling between routing and purchasing, existing works on TPPs commonly address route construction and purchase planning simultaneously, which leads to exact methods with high computational cost and to heuristics with sophisticated designs but limited performance. In sharp contrast, we propose a novel approach based on deep reinforcement learning (DRL) that addresses route construction and purchase planning separately while evaluating and optimizing the solution from a global perspective. The key components of our approach are a bipartite graph representation of TPPs that captures the market-product relations, and a policy network that extracts information from the bipartite graph and uses it to construct the route sequentially. A significant benefit of this framework is efficiency: the policy network constructs the route, and once the route is determined, the associated purchasing plan can be easily derived through linear programming. Meanwhile, leveraging DRL, we can train the policy network to optimize the global solution objective. Furthermore, by introducing a meta-learning strategy, the policy network can be trained stably on large TPP instances and generalizes well across instances of varying sizes and distributions, even to much larger instances never seen during training. Experiments on various synthetic TPP instances and the TPPLIB benchmark demonstrate that our DRL-based approach significantly outperforms well-established TPP heuristics, reducing the optimality gap by 40%-90%, and also has an advantage in runtime, especially on large instances.
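
Once a route is fixed, the remaining purchase-planning step is a linear program. A minimal sketch, assuming a restricted-supply TPP in which each product $k$ has demand $d_k$ and each visited market $m$ sells it at price $p_{mk}$ with supply $q_{mk}$: the LP then decouples by product, and each per-product LP (a continuous knapsack) is solved optimally by buying from the cheapest visited markets first. The data layout and the names `purchase_plan` and `purchase_cost` are hypothetical, and this is not necessarily the paper's exact LP formulation.

```python
from typing import Dict, List, Optional

# prices[market][product] and supply[market][product]; a missing key means
# the market does not sell that product (hypothetical data layout).
Prices = Dict[str, Dict[str, float]]
Supply = Dict[str, Dict[str, float]]
Plan = Dict[str, Dict[str, float]]


def purchase_plan(route: List[str], demand: Dict[str, float],
                  prices: Prices, supply: Supply) -> Optional[Plan]:
    """Cheapest purchase plan over the markets on `route`, or None if infeasible.

    For a fixed route the LP decouples by product; each per-product LP
        min sum_m p_mk x_mk  s.t.  sum_m x_mk = d_k,  0 <= x_mk <= q_mk
    is solved exactly by filling the demand from the cheapest markets first.
    """
    plan: Plan = {m: {} for m in route}
    for product, need in demand.items():
        offers = sorted((prices[m][product], m) for m in route
                        if product in prices.get(m, {}))
        for _price, market in offers:
            if need <= 0:
                break
            qty = min(need, supply[market][product])
            if qty > 0:
                plan[market][product] = qty
                need -= qty
        if need > 1e-12:
            return None  # visited markets cannot cover this product's demand
    return plan


def purchase_cost(plan: Plan, prices: Prices) -> float:
    """Total purchasing cost of a plan (routing cost is accounted separately)."""
    return sum(prices[m][k] * qty for m, items in plan.items()
               for k, qty in items.items())


prices = {"A": {"milk": 2.0, "egg": 5.0}, "B": {"milk": 1.0}, "C": {"egg": 3.0}}
supply = {"A": {"milk": 10.0, "egg": 10.0}, "B": {"milk": 3.0}, "C": {"egg": 1.0}}
plan = purchase_plan(["A", "B", "C"], {"milk": 5.0, "egg": 2.0}, prices, supply)
```

Here milk is bought from market B up to its supply of 3 and the remaining 2 units from A, and one egg each is bought from C and A, for a total purchase cost of 15.0.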

Innovation Compression for Communication-efficient Distributed Optimization with Linear Convergence

May 14, 2021

Abstract: Information compression is essential for reducing communication cost in distributed optimization over peer-to-peer networks. This paper proposes a communication-efficient linearly convergent distributed (COLD) algorithm to solve strongly convex optimization problems. By compressing innovation vectors, which are the differences between decision vectors and their estimates, COLD achieves linear convergence for a class of $\delta$-contracted compressors. We explicitly quantify how compression affects the convergence rate and show that COLD matches the rate of its uncompressed version. To accommodate a wider class of compressors that includes the binary quantizer, we further design a novel dynamical scaling mechanism and obtain the linearly convergent Dyna-COLD. Importantly, our results strictly improve on existing results for the quantized consensus problem. Numerical experiments demonstrate the advantages of both algorithms under different compressors.
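
Innovation compression can be illustrated in isolation. A minimal sketch, assuming a top-$k$ compressor, which is $\delta$-contracted with $\delta = k/d$ in the sense $\|C(v) - v\|^2 \le (1-\delta)\|v\|^2$ for $v \in \mathbb{R}^d$: a node transmits only $C(x - \hat{x})$, and sender and receiver apply the same update $\hat{x} \leftarrow \hat{x} + C(x - \hat{x})$, so the shared estimate tracks $x$. The optimization updates of COLD itself are omitted, and `top_k` and `track` are hypothetical names.

```python
from typing import List

Vector = List[float]


def top_k(v: Vector, k: int) -> Vector:
    """Keep the k largest-magnitude entries of v and zero out the rest."""
    keep = sorted(range(len(v)), key=lambda i: abs(v[i]), reverse=True)[:k]
    out = [0.0] * len(v)
    for i in keep:
        out[i] = v[i]
    return out


def sq_norm(v: Vector) -> float:
    return sum(x * x for x in v)


def track(x: Vector, k: int, steps: int) -> List[float]:
    """Repeatedly send compressed innovations C(x - x_hat); return the errors.

    Sender and receiver hold the same x_hat and apply the same update, so
    only the k nonzero entries of each compressed innovation are transmitted.
    """
    x_hat = [0.0] * len(x)
    errors = [sq_norm(x)]
    for _ in range(steps):
        innovation = [xi - hi for xi, hi in zip(x, x_hat)]
        q = top_k(innovation, k)
        x_hat = [hi + qi for hi, qi in zip(x_hat, q)]
        errors.append(sq_norm([xi - hi for xi, hi in zip(x, x_hat)]))
    return errors


x = [4.0, -1.0, 0.5, 3.0, -2.0, 0.25]
errors = track(x, k=2, steps=3)
```

For a fixed $x$, each round shrinks the squared error by at least the factor $1 - k/d$ (here it reaches zero after three rounds); in COLD, $x$ keeps changing between rounds, so the estimate tracks a moving target.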

Primal-dual Learning for the Model-free Risk-constrained Linear Quadratic Regulator

Dec 10, 2020

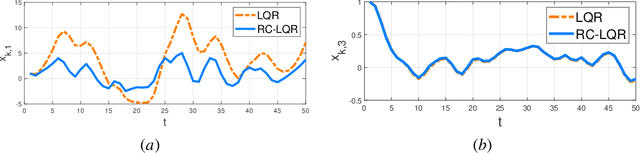

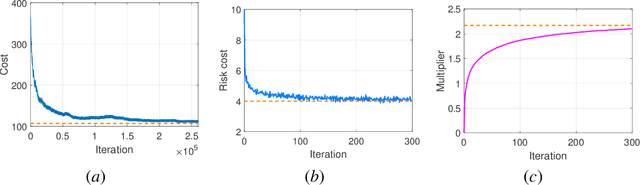

Abstract: Risk-aware control, though promising for tackling unexpected events, requires an exactly known dynamical model. In this work, we propose a model-free framework to learn a risk-aware controller, focusing on linear systems. We formulate the problem as a discrete-time infinite-horizon LQR problem with a state predictive variance constraint. To solve it, we parameterize the policy with a feedback gain pair and leverage primal-dual methods to optimize it using only data. We first study the optimization landscape of the Lagrangian function and establish strong duality despite its non-convex nature. Along the way, we find that the Lagrangian function enjoys an important local gradient dominance property, which we then exploit to develop a convergent random search algorithm for learning the dual function. Furthermore, we propose a primal-dual algorithm with global convergence to learn the optimal policy-multiplier pair. Finally, we validate our results via simulations.
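
The primal-dual idea can be sketched on a hypothetical scalar system $x_{t+1} = a x_t + b u_t + w_t$ with $u_t = -K x_t$ and noise variance $\sigma_w^2$. As an assumption, the stationary state variance $\Sigma(K) = \sigma_w^2 / (1 - (a - bK)^2)$ stands in for the paper's predictive-variance constraint via $\Sigma(K) \le c$, and a single gain $K$ stands in for its feedback gain pair. The primal step uses only evaluations of the Lagrangian $L(K, \lambda) = J(K) + \lambda(\Sigma(K) - c)$, with $J(K) = (q + rK^2)\Sigma(K)$, through a two-point random-search gradient estimate; the dual step is projected ascent on $\lambda \ge 0$. In a model-free setting $J$ and $\Sigma$ would be estimated from trajectory data; here they are computed in closed form for brevity, and all names are illustrative.

```python
import math
import random


def stationary_variance(K, a, b, noise_var):
    """Stationary variance of x under u = -K x; inf if the loop is unstable."""
    s = a - b * K
    return noise_var / (1.0 - s * s) if abs(s) < 1.0 else math.inf


def lagrangian(K, lam, a, b, q, r, noise_var, c):
    """L(K, lam) = J(K) + lam * (Sigma(K) - c), with J = (q + r K^2) Sigma."""
    sigma = stationary_variance(K, a, b, noise_var)
    return (q + r * K * K) * sigma + lam * (sigma - c)


def primal_dual(a=1.2, b=1.0, q=1.0, r=1.0, noise_var=1.0, c=1.05,
                K=1.0, lam=0.0, eta_K=0.01, eta_lam=0.5,
                radius=1e-4, iters=20000, seed=0):
    """Gradient descent on K and projected ascent on lam (hypothetical setup)."""
    rng = random.Random(seed)
    for _ in range(iters):
        # Two-point random-search estimate of dL/dK from function values only.
        u = rng.choice((-1.0, 1.0))  # random unit direction in 1-D
        f_plus = lagrangian(K + radius * u, lam, a, b, q, r, noise_var, c)
        f_minus = lagrangian(K - radius * u, lam, a, b, q, r, noise_var, c)
        grad_K = (f_plus - f_minus) / (2.0 * radius) * u
        K -= eta_K * grad_K                        # primal descent step
        violation = stationary_variance(K, a, b, noise_var) - c
        lam = max(0.0, lam + eta_lam * violation)  # projected dual ascent
    return K, lam


K, lam = primal_dual()
```

With these numbers the unconstrained LQR gain violates the variance bound, so the iterates settle where the constraint is active, at $K = a - \sqrt{1 - \sigma_w^2/c}$ (with $b = 1$) and a positive multiplier. In one dimension the random direction is just a sign, so the estimate coincides with a central difference; for a gain matrix the direction would instead be drawn uniformly from the unit sphere and the estimate scaled by the dimension.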

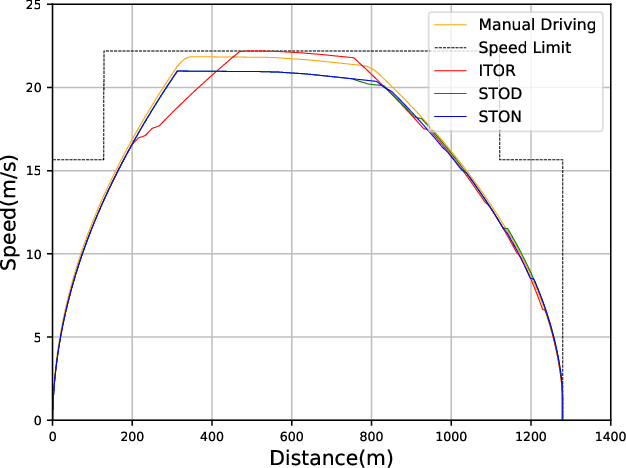

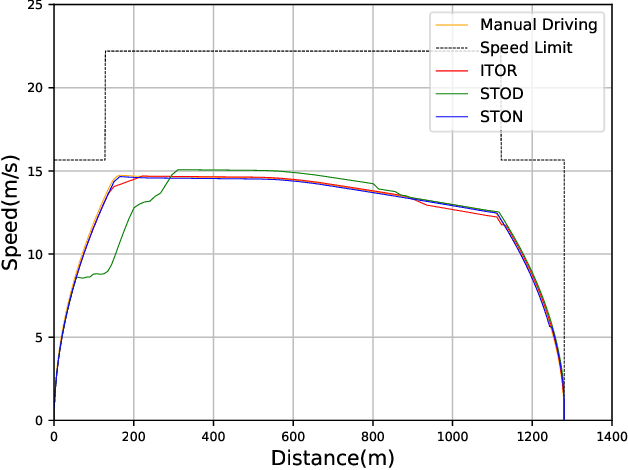

Smart Train Operation Algorithms based on Expert Knowledge and Reinforcement Learning

Mar 06, 2020

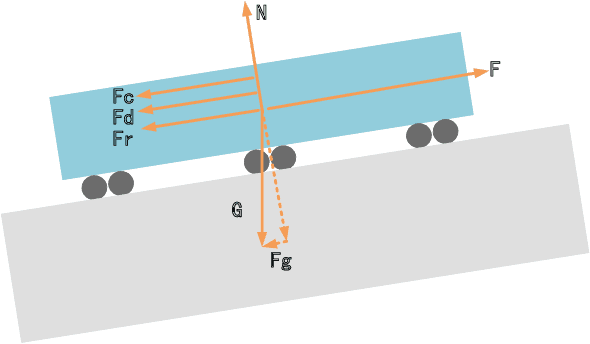

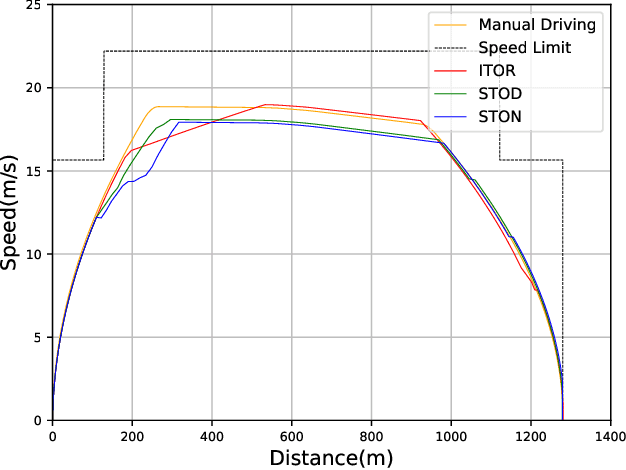

Abstract: Over recent decades, automatic train operation (ATO) systems have been gradually adopted in many subway systems. While ATO is more intelligent than traditional manual driving, it increases energy consumption and decreases the riding comfort of the subway system. This paper proposes two smart train operation algorithms that combine expert knowledge with reinforcement learning. Compared with previous works, the smart train operation algorithms achieve continuous-action control of the subway system and satisfy multiple objectives (safety, punctuality, energy efficiency, and riding comfort) without using an offline optimized speed profile. First, by analyzing historical data from experienced subway drivers, we summarize expert knowledge rules and build inference methods to guarantee riding comfort, punctuality, and safety. Then, we develop two algorithms that realize continuous-action control and ensure the energy efficiency of train operation: STOD, a smart train operation (STO) algorithm based on deep deterministic policy gradient, and STON, an STO algorithm based on the normalized advantage function. Finally, we verify the performance of the proposed algorithms through numerical simulations with real field data collected from the Yizhuang Line of the Beijing Subway and compare them with existing ATO algorithms. The simulation results show that the developed smart train operation systems outperform manual driving and existing ATO algorithms in energy efficiency. In addition, STOD and STON can adapt to different trip times and different resistance conditions.

Asynchronous Policy Evaluation in Distributed Reinforcement Learning over Networks

Mar 01, 2020

Abstract: This paper proposes a *fully asynchronous* scheme for policy evaluation in distributed reinforcement learning (DisRL) over peer-to-peer networks. Without any form of coordination, nodes can communicate with neighbors and compute their local variables at any time using (possibly) delayed information, in sharp contrast to asynchronous gossip. Thus, the proposed scheme takes full advantage of the distributed setting. We prove that our method converges at a linear rate $\mathcal{O}(c^k)$, where $c\in(0,1)$ and $k$ increases by one whenever any node updates, which shows the computational advantage of reducing synchronization. Numerical experiments show that our method speeds up linearly with respect to the number of nodes and is robust to straggler nodes. To the best of our knowledge, our work is the first theoretical analysis of asynchronous updates in DisRL, including the *parallel RL* domain advocated by A3C.

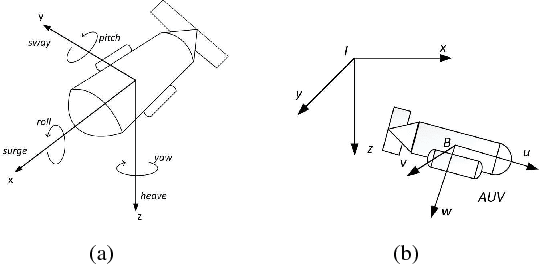

A selected review on reinforcement learning based control for autonomous underwater vehicles

Nov 27, 2019

Abstract: Recently, reinforcement learning (RL) has been extensively studied and has achieved promising results in a wide range of control tasks. Meanwhile, autonomous underwater vehicles (AUVs) are important tools for executing complex and challenging underwater tasks. Advances in RL offer ample opportunities for developing intelligent AUVs. This paper provides a selected review of RL-based control for AUVs, focusing on applications of RL to low-level control tasks for underwater regulation and tracking. To this end, we first present a concise introduction to the RL-based control framework. Then, we provide an overview of RL methods for AUV control problems and discuss the main challenges and recent progress. Finally, we present two representative cases of model-free RL-based controllers for AUVs in detail.

Decentralized Stochastic Gradient Tracking for Empirical Risk Minimization

Sep 06, 2019

Abstract: Recent works have shown the superiority of decentralized SGD over its centralized counterpart in large-scale machine learning, but the theoretical gap between them is still not fully understood. In this paper, we propose a decentralized stochastic gradient tracking (DSGT) algorithm over peer-to-peer networks for empirical risk minimization problems, and explicitly evaluate its convergence rate in terms of key problem parameters, e.g., the algebraic connectivity of the communication network, the mini-batch size, and the gradient variance. Importantly, it is the first theoretical result that can *exactly* recover the rate of centralized SGD, and it has optimal dependence on the algebraic connectivity of the network when using stochastic gradients. Moreover, we explicitly quantify how the network affects the speedup and the rate improvement over existing works. Interestingly, we also point out for the first time that both linear and sublinear speedups are possible. We empirically validate DSGT on neural networks and logistic regression problems and show its advantage over state-of-the-art algorithms.
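
A gradient-tracking recursion of this kind can be sketched as follows, assuming the standard form $x_{k+1} = W(x_k - \alpha y_k)$, $y_{k+1} = W y_k + g_{k+1} - g_k$ with a doubly stochastic mixing matrix $W$, where $y$ tracks the network-average stochastic gradient $g$. The scalar local losses $f_i(x) = \tfrac{1}{2}(x - b_i)^2$, the ring topology, the step size, and all names are hypothetical. With `noise_std=0` the iterates converge to the global minimizer $\bar b$; with gradient noise and a constant step size they only reach a neighborhood of it.

```python
import random
from typing import List


def mix(W: List[List[float]], v: List[float]) -> List[float]:
    """One round of neighbor averaging: returns W @ v."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]


def ring_weights(n: int) -> List[List[float]]:
    """Doubly stochastic weights on a ring (n >= 3): 1/3 to self and each neighbor."""
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i][j % n] += 1.0 / 3.0
    return W


def dsgt(b: List[float], alpha: float = 0.1, iters: int = 500,
         noise_std: float = 0.0, seed: int = 0) -> List[float]:
    """Gradient tracking for min_x (1/n) * sum_i 0.5 * (x - b_i)^2."""
    rng = random.Random(seed)
    n = len(b)
    W = ring_weights(n)

    def grads(x: List[float]) -> List[float]:
        # Local (optionally noisy) gradients g_i = x_i - b_i + noise.
        return [xi - bi + rng.gauss(0.0, noise_std) for xi, bi in zip(x, b)]

    x = [0.0] * n
    g = grads(x)
    y = list(g)  # each tracker starts at its local gradient
    for _ in range(iters):
        x = mix(W, [xi - alpha * yi for xi, yi in zip(x, y)])
        g_new = grads(x)
        y = [wy + gn - go for wy, gn, go in zip(mix(W, y), g_new, g)]
        g = g_new
    return x


x_final = dsgt([1.0, 2.0, 6.0, -1.0])
```

Replacing $y_k$ by the local gradient $g_k$ in the $x$-update gives plain decentralized gradient descent, whose iterates with a constant step size stay biased away from $\bar b$ when the local minimizers $b_i$ differ, even without gradient noise; the tracker removes that bias.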

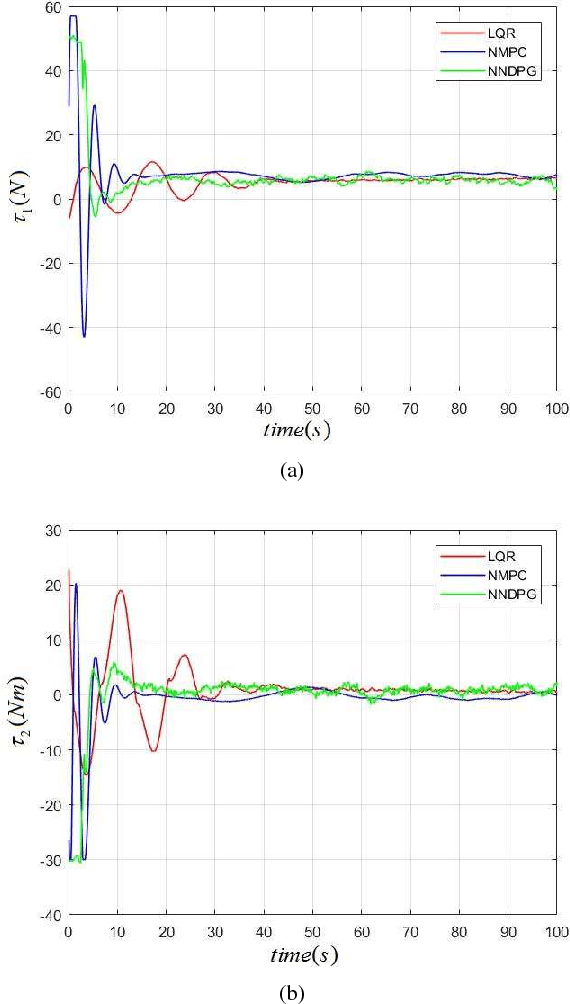

Depth Control of Model-Free AUVs via Reinforcement Learning

Nov 22, 2017

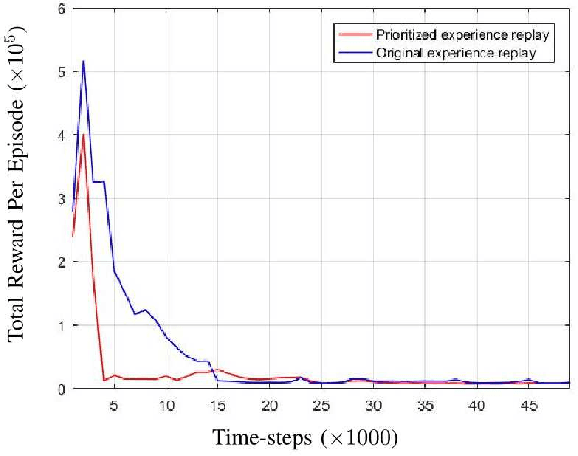

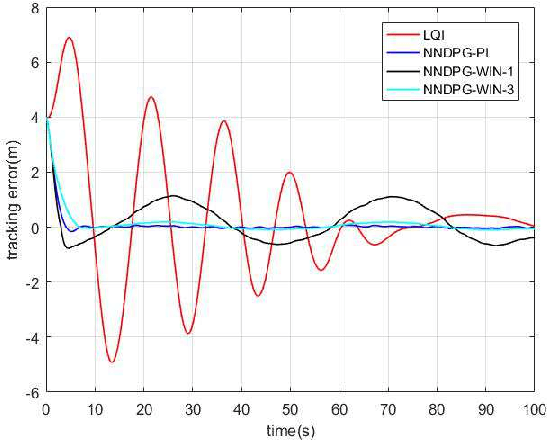

Abstract: In this paper, we consider depth control problems of an autonomous underwater vehicle (AUV) tracking desired depth trajectories. Because the dynamical model of the AUV is unknown, these problems cannot be solved by most model-based controllers. To this end, we formulate the depth control problems of the AUV as continuous-state, continuous-action Markov decision processes (MDPs) with unknown transition probabilities. Based on deterministic policy gradient (DPG) and neural network approximation, we propose a model-free reinforcement learning (RL) algorithm that learns a state-feedback controller from sampled trajectories of the AUV. To improve the performance of the RL algorithm, we further propose a batch-learning scheme that replays previous prioritized trajectories. Simulations illustrate that our model-free method performs comparably even to model-based controllers such as LQI and NMPC. Moreover, we validate the effectiveness of the proposed RL algorithm on a seafloor data set sampled from the South China Sea.

3-D Velocity Regulation for Nonholonomic Source Seeking Without Position Measurement

Apr 24, 2015

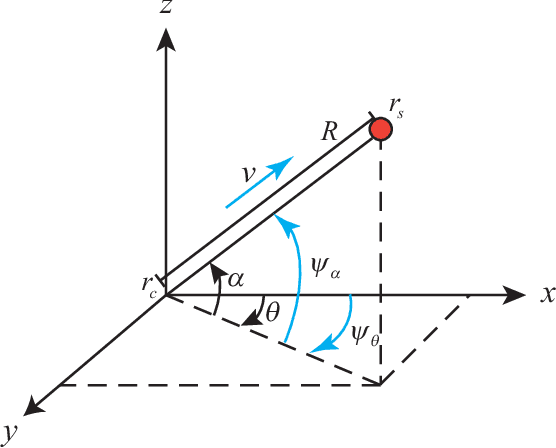

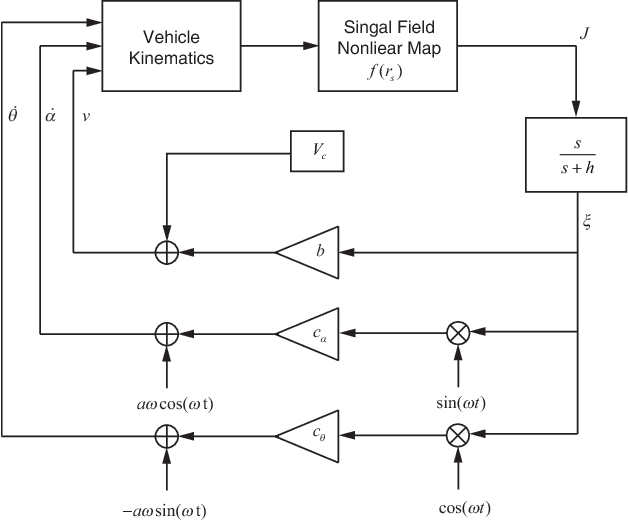

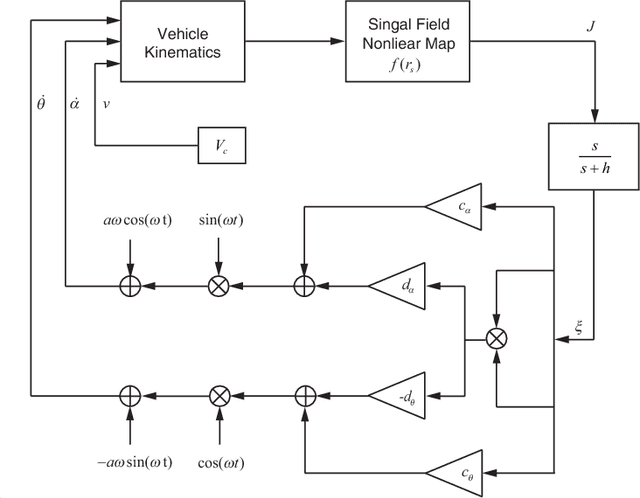

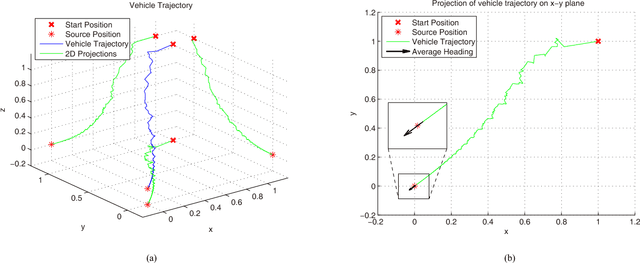

Abstract: We consider a three-dimensional problem of steering a nonholonomic vehicle to seek an unknown source of a spatially distributed signal field without any position measurement. The literature offers an extremum seeking-based strategy with a constant forward velocity and tunable pitch and yaw velocities. However, a vehicle with a constant forward velocity may overshoot during the seeking process and cannot slow down even as it approaches the source. To resolve this undesired behavior, this paper proposes a regulation strategy for the forward velocity along with the pitch and yaw velocities. Under this strategy, the vehicle slows down near the source and stays within a small area as if it had come to a full stop, and the controllers for the angular velocities become succinct. We prove local exponential convergence via the averaging technique. Finally, the theoretical results are illustrated with simulations.
