Kent Gauen

Space-Time Attention with Shifted Non-Local Search

Sep 28, 2023

Abstract: Efficiently computing attention maps for videos is challenging due to the motion of objects between frames. While a standard non-local search is high-quality for a window surrounding each query point, the window's small size cannot accommodate motion. Methods for long-range motion use an auxiliary network to predict the most similar key coordinates as offsets from each query location. However, accurately predicting this flow field of offsets remains challenging, even for large-scale networks. Small spatial inaccuracies significantly impact the attention module's quality. This paper proposes a search strategy that combines the quality of a non-local search with the range of predicted offsets. The method, named Shifted Non-Local Search, executes a small grid search surrounding the predicted offsets to correct small spatial errors. Our method's in-place computation consumes 10 times less memory and is over 3 times faster than previous work. Experimentally, correcting the small spatial errors improves the video frame alignment quality by over 3 dB PSNR. Our search upgrades existing space-time attention modules, which improves video denoising results by 0.30 dB PSNR for a 7.5% increase in overall runtime. We integrate our space-time attention module into a UNet-like architecture to achieve state-of-the-art results on video denoising.
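The idea of running a small grid search around each predicted offset can be illustrated with a naive NumPy sketch. This is not the paper's in-place implementation: the function name `shifted_grid_search`, its parameters, the integer flow layout `(H, W, 2)`, and the sum-of-squared-differences patch cost are all assumptions made for illustration.

```python
import numpy as np

def shifted_grid_search(query, key, flow, patch=3, radius=1):
    """Refine integer offsets by a small grid search (hypothetical helper).

    query, key: 2-D float arrays (two grayscale frames of equal shape).
    flow: int array of shape (H, W, 2) holding predicted (dy, dx) offsets.
    Returns an int array of refined offsets with the same shape as flow.
    """
    H, W = query.shape
    p = patch // 2
    # Edge-pad so every pixel has a full patch around it.
    q = np.pad(query, p, mode="edge")
    k = np.pad(key, p, mode="edge")
    refined = np.zeros_like(flow)
    for y in range(H):
        for x in range(W):
            q_patch = q[y:y + patch, x:x + patch]
            best = np.inf
            # Search a (2*radius+1)^2 grid centred on the predicted offset.
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ky = int(np.clip(y + flow[y, x, 0] + dy, 0, H - 1))
                    kx = int(np.clip(x + flow[y, x, 1] + dx, 0, W - 1))
                    k_patch = k[ky:ky + patch, kx:kx + patch]
                    # Assumed cost: sum of squared differences between patches.
                    cost = np.sum((q_patch - k_patch) ** 2)
                    if cost < best:
                        best = cost
                        refined[y, x] = (ky - y, kx - x)
    return refined

# Toy example: the key frame is the query frame shifted by (2, 3),
# while the "predicted" flow is off by one pixel in each direction.
rng = np.random.default_rng(0)
query = rng.random((16, 16))
key = np.roll(query, shift=(2, 3), axis=(0, 1))
flow = np.zeros((16, 16, 2), dtype=np.int64)
flow[..., 0] = 1
flow[..., 1] = 2
refined = shifted_grid_search(query, key, flow)
```

In this toy setup the search window of radius 1 contains the true offset, so an interior pixel such as `(8, 8)` recovers `(2, 3)` even though the prediction was `(1, 2)`. The quadruple loop is written for readability only and says nothing about the memory or runtime figures quoted in the abstract.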

Low-Power Computer Vision: Status, Challenges, Opportunities

Apr 15, 2019

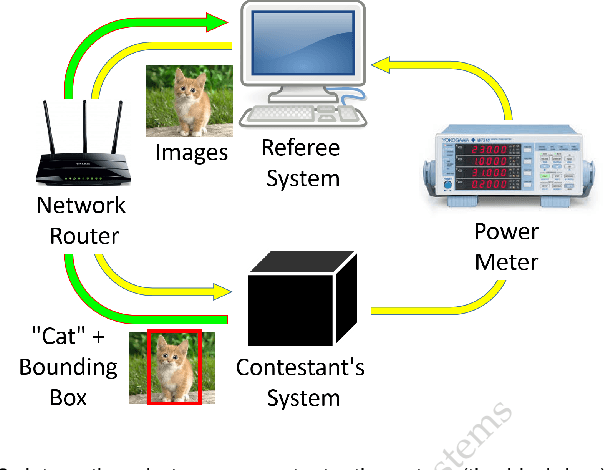

Abstract: Computer vision has achieved impressive progress in recent years. Meanwhile, mobile phones have become the primary computing platforms for millions of people. In addition to mobile phones, many autonomous systems rely on visual data for making decisions, and some of these systems have limited energy (such as unmanned aerial vehicles, also called drones, and mobile robots). These systems rely on batteries, so energy efficiency is critical. This article serves two main purposes: (1) Examine the state of the art in low-power solutions to detect objects in images. Since 2015, the IEEE Annual International Low-Power Image Recognition Challenge (LPIRC) has been held to identify the most energy-efficient computer vision solutions. This article summarizes the 2018 winners' solutions. (2) Suggest directions for research as well as opportunities for low-power computer vision.

See the World through Network Cameras

Apr 14, 2019

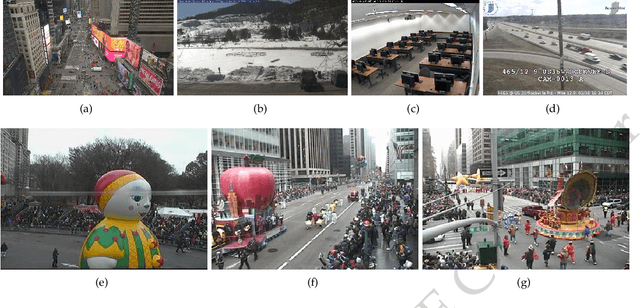

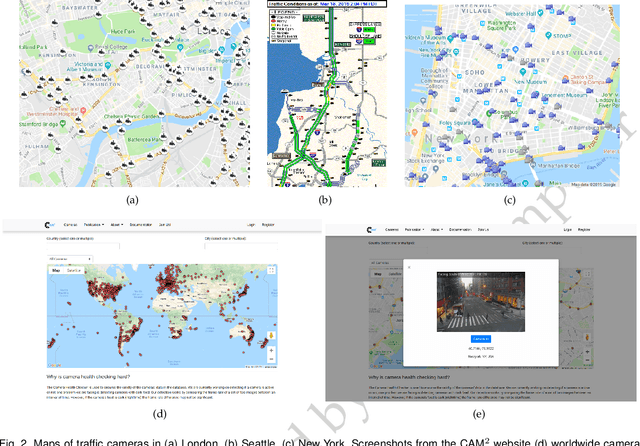

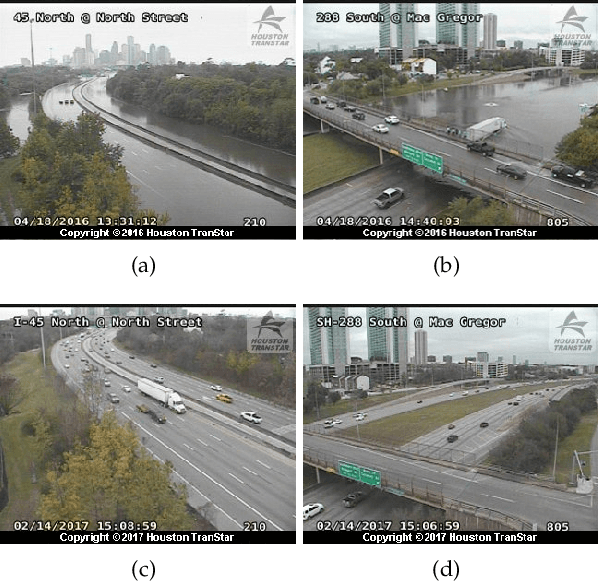

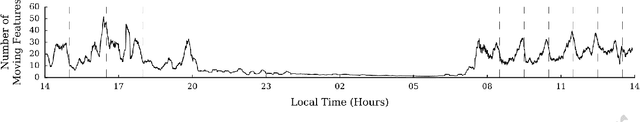

Abstract: Millions of network cameras have been deployed worldwide. Real-time data from many network cameras can offer instant views of multiple locations with applications in public safety, transportation management, urban planning, agriculture, forestry, social sciences, atmospheric information, and more. First, this paper describes the real-time data available from worldwide network cameras and potential applications. Second, this paper outlines the CAM2 System available to users at https://www.cam2project.net/. This information includes strategies to discover network cameras and create the camera database, user interface, and computing platforms. Third, this paper describes many opportunities provided by data from network cameras and challenges to be addressed.

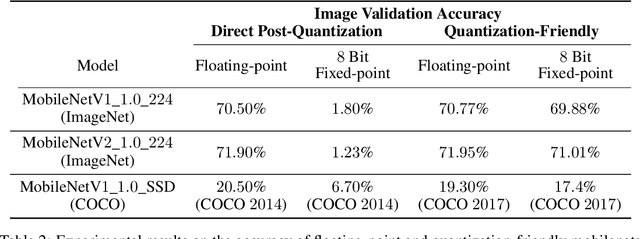

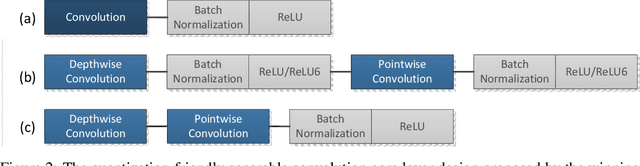

Low Power Inference for On-Device Visual Recognition with a Quantization-Friendly Solution

Mar 12, 2019

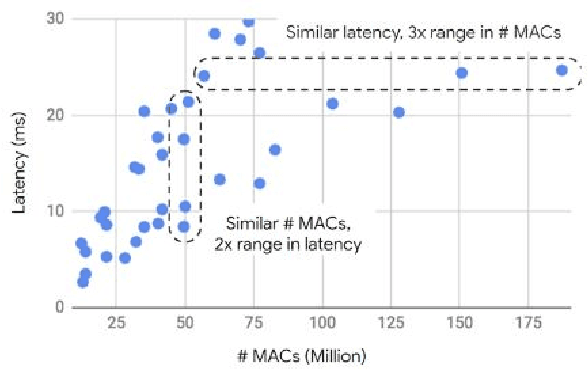

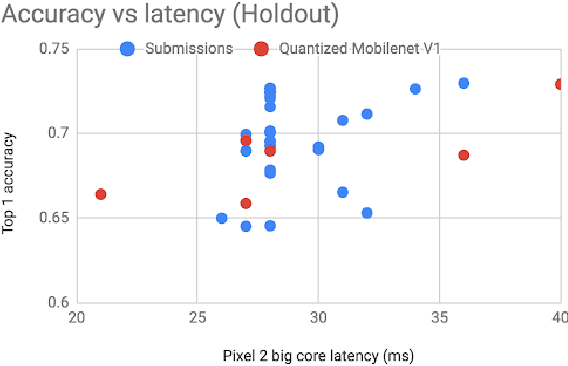

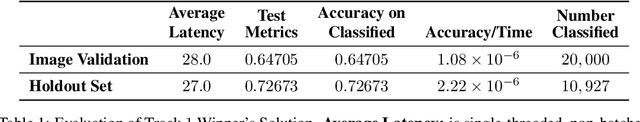

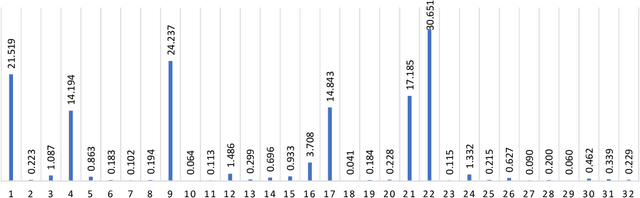

Abstract: The IEEE Low-Power Image Recognition Challenge (LPIRC) is an annual competition started in 2015 that encourages joint hardware and software solutions for computer vision systems with low latency and power. Track 1 of the competition in 2018 focused on the innovation of software solutions with a fixed inference engine and hardware. This decision allowed participants to submit models online without having to build and bring custom hardware on-site, which attracted a historically large number of submissions. Among the diverse solutions, the winning solution proposed a quantization-friendly framework for MobileNets that achieves an accuracy of 72.67% on the holdout dataset with an average latency of 27 ms on a single CPU core of a Google Pixel 2 phone, which is superior to the best real-time MobileNet models at the time.

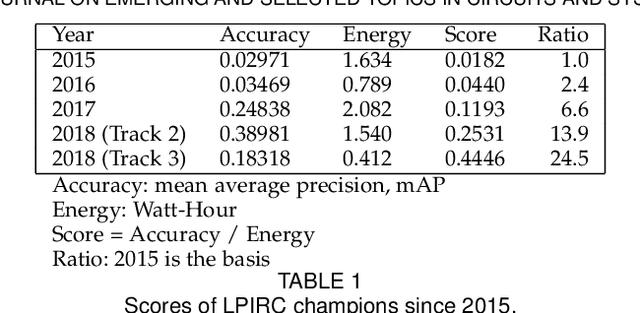

2018 Low-Power Image Recognition Challenge

Oct 03, 2018

Abstract: The Low-Power Image Recognition Challenge (LPIRC, https://rebootingcomputing.ieee.org/lpirc) is an annual competition started in 2015. The competition identifies the best technologies that can classify and detect objects in images efficiently (short execution time and low energy consumption) and accurately (high precision). Over the four years, the winners' scores have improved more than 24 times. As computer vision is widely used in many battery-powered systems (such as drones and mobile phones), the need for low-power computer vision will become increasingly important. This paper summarizes LPIRC 2018 by describing the three different tracks and the winners' solutions.
