Kasun Weerakoon

Vi-LAD: Vision-Language Attention Distillation for Socially-Aware Robot Navigation in Dynamic Environments

Mar 12, 2025

Abstract:We introduce Vision-Language Attention Distillation (Vi-LAD), a novel approach for distilling socially compliant navigation knowledge from a large Vision-Language Model (VLM) into a lightweight transformer model for real-time robotic navigation. Unlike traditional methods that rely on expert demonstrations or human-annotated datasets, Vi-LAD performs knowledge distillation and fine-tuning at the intermediate layer representation level (i.e., attention maps) by leveraging the backbone of a pre-trained vision-action model. These attention maps highlight key navigational regions in a given scene, which serve as implicit guidance for socially aware motion planning. Vi-LAD fine-tunes a transformer-based model using intermediate attention maps extracted from the pre-trained vision-action model, combined with attention-like semantic maps constructed from a large VLM. To achieve this, we introduce a novel attention-level distillation loss that fuses knowledge from both sources, generating augmented attention maps with enhanced social awareness. These refined attention maps are then utilized as a traversability costmap within a socially aware model predictive controller (MPC) for navigation. We validate our approach through real-world experiments on a Husky wheeled robot, demonstrating significant improvements over state-of-the-art (SOTA) navigation methods. Our results show improvements of 14.2% to 50% in success rate, which highlights the effectiveness of Vi-LAD in enabling socially compliant and efficient robot navigation.
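
The idea of an attention-level distillation loss that fuses two sources can be illustrated with a minimal sketch. This is not the loss from the paper: the blending weight `alpha`, the linear fusion, and the mean-squared-error objective are all assumptions chosen for illustration, and `fuse_maps` and `distillation_loss` are hypothetical names.

```python
def fuse_maps(teacher_attn, vlm_semantic, alpha=0.5):
    """Blend two same-sized 2D maps cell by cell.

    alpha is an assumed weight on the vision-action attention map;
    (1 - alpha) goes to the VLM-derived semantic map.
    """
    return [
        [alpha * t + (1.0 - alpha) * s for t, s in zip(t_row, s_row)]
        for t_row, s_row in zip(teacher_attn, vlm_semantic)
    ]


def distillation_loss(student_attn, target):
    """Mean squared error between the student map and the fused target (assumed objective)."""
    total = 0.0
    count = 0
    for s_row, t_row in zip(student_attn, target):
        for s, t in zip(s_row, t_row):
            total += (s - t) ** 2
            count += 1
    return total / count


# Toy 2x2 maps with values in [0, 1].
teacher = [[1.0, 0.0], [0.0, 1.0]]
semantic = [[0.0, 0.0], [1.0, 1.0]]
target = fuse_maps(teacher, semantic, alpha=0.5)
loss = distillation_loss([[0.5, 0.0], [0.5, 0.0]], target)
```

In a training loop, a loss of this kind would be minimized with respect to the student's attention maps, and the resulting maps could then be read as a costmap by a downstream planner.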

Robot Navigation Using Physically Grounded Vision-Language Models in Outdoor Environments

Sep 30, 2024

Abstract:We present a novel autonomous robot navigation algorithm for outdoor environments that is capable of handling diverse terrain traversability conditions. Our approach, VLM-GroNav, uses vision-language models (VLMs) and integrates them with physical grounding that is used to assess intrinsic terrain properties such as deformability and slipperiness. We use proprioceptive-based sensing, which provides direct measurements of these physical properties, and enhances the overall semantic understanding of the terrains. Our formulation uses in-context learning to ground the VLM's semantic understanding with proprioceptive data to allow dynamic updates of traversability estimates based on the robot's real-time physical interactions with the environment. We use the updated traversability estimations to inform both the local and global planners for real-time trajectory replanning. We validate our method on a legged robot (Ghost Vision 60) and a wheeled robot (Clearpath Husky), in diverse real-world outdoor environments with different deformable and slippery terrains. In practice, we observe significant improvements over state-of-the-art methods by up to 50% increase in navigation success rate.

CROSS-GAiT: Cross-Attention-Based Multimodal Representation Fusion for Parametric Gait Adaptation in Complex Terrains

Sep 25, 2024

Abstract:We present CROSS-GAiT, a novel algorithm for quadruped robots that uses Cross Attention to fuse terrain representations derived from visual and time-series inputs, including linear accelerations, angular velocities, and joint efforts. These fused representations are used to adjust the robot's step height and hip splay, enabling adaptive gaits that respond dynamically to varying terrain conditions. We generate these terrain representations by processing visual inputs through a masked Vision Transformer (ViT) encoder and time-series data through a dilated causal convolutional encoder. The cross-attention mechanism then selects and integrates the most relevant features from each modality, combining terrain characteristics with robot dynamics for better-informed gait adjustments. CROSS-GAiT uses the combined representation to dynamically adjust gait parameters in response to varying and unpredictable terrains. We train CROSS-GAiT on data from diverse terrains, including asphalt, concrete, brick pavements, grass, dense vegetation, pebbles, gravel, and sand. Our algorithm generalizes well and adapts to unseen environmental conditions, enhancing real-time navigation performance. CROSS-GAiT was implemented on a Ghost Robotics Vision 60 robot and extensively tested in complex terrains with high vegetation density, uneven/unstable surfaces, sand banks, deformable substrates, etc. We observe at least a 7.04% reduction in IMU energy density and a 27.3% reduction in total joint effort, which directly correlates with increased stability and reduced energy usage when compared to state-of-the-art methods. Furthermore, CROSS-GAiT demonstrates at least a 64.5% increase in success rate and a 4.91% reduction in time to reach the goal in four complex scenarios. Additionally, the learned representations perform 4.48% better than the state-of-the-art on a terrain classification task.
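
As a rough illustration of cross-attention between two modalities, the sketch below computes scaled dot-product attention in which queries come from one feature set (for example, time-series features) and keys and values come from another (for example, visual features). It omits the learned projections, multiple heads, and encoders a real model would use, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query attends over all keys.

    queries: list of vectors from one modality.
    keys, values: lists of vectors from the other modality (same length).
    """
    dim = len(keys[0])
    fused = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        fused.append(
            [
                sum(w * v[i] for w, v in zip(weights, values))
                for i in range(len(values[0]))
            ]
        )
    return fused


# Identical keys give uniform weights, so the output is the mean of the values.
out = cross_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 1.0], [1.0, 1.0]],
    values=[[2.0, 0.0], [0.0, 2.0]],
)
```

Each output vector is a weighted mix of the other modality's features, with weights determined by how well each key matches the query.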

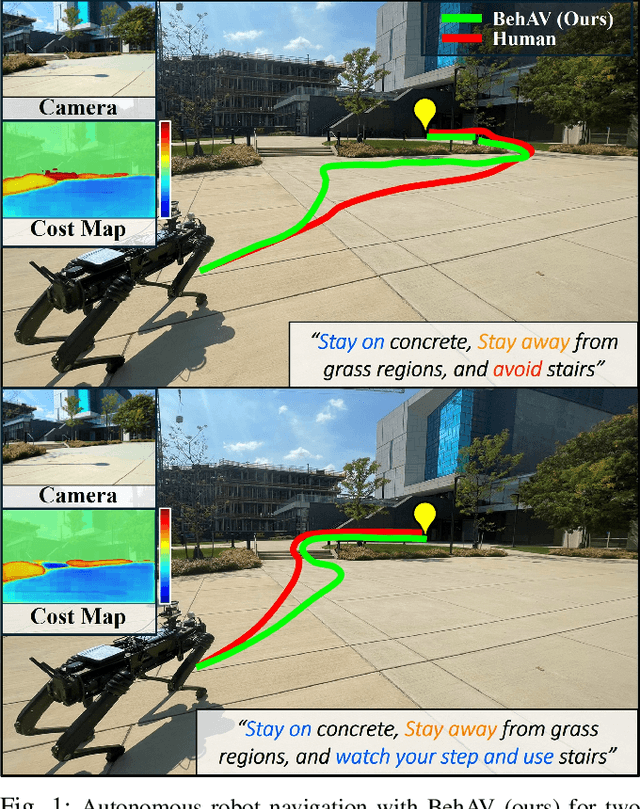

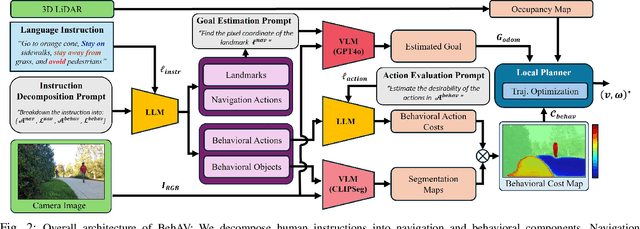

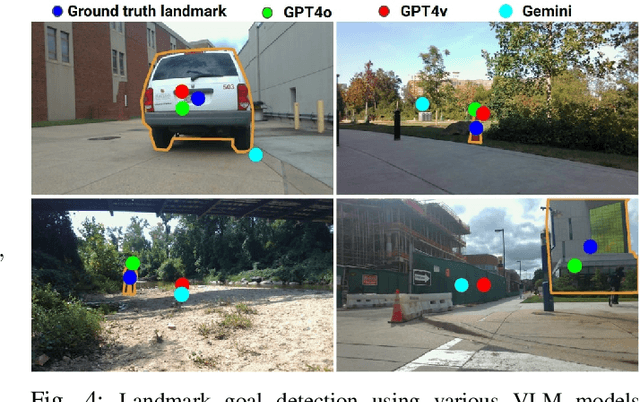

BehAV: Behavioral Rule Guided Autonomy Using VLMs for Robot Navigation in Outdoor Scenes

Sep 24, 2024

Abstract:We present BehAV, a novel approach for autonomous robot navigation in outdoor scenes guided by human instructions and leveraging Vision Language Models (VLMs). Our method interprets human commands using a Large Language Model (LLM) and categorizes the instructions into navigation and behavioral guidelines. Navigation guidelines consist of directional commands (e.g., "move forward until") and associated landmarks (e.g., "the building with blue windows"), while behavioral guidelines encompass regulatory actions (e.g., "stay on") and their corresponding objects (e.g., "pavements"). We use VLMs for their zero-shot scene understanding capabilities to estimate landmark locations from RGB images for robot navigation. Further, we introduce a novel scene representation that utilizes VLMs to ground behavioral rules into a behavioral cost map. This cost map encodes the presence of behavioral objects within the scene and assigns costs based on their regulatory actions. The behavioral cost map is integrated with a LiDAR-based occupancy map for navigation. To navigate outdoor scenes while adhering to the instructed behaviors, we present an unconstrained Model Predictive Control (MPC)-based planner that prioritizes both reaching landmarks and following behavioral guidelines. We evaluate the performance of BehAV on a quadruped robot across diverse real-world scenarios, demonstrating a 22.49% improvement in alignment with human-teleoperated actions, as measured by Frechet distance, and achieving a 40% higher navigation success rate compared to state-of-the-art methods.
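
A behavioral cost map combined with an occupancy map might look roughly like the sketch below. It assumes the scene has already been divided into grid cells labeled with object names; the specific cost values, the "avoid" action, and the function names are hypothetical and not taken from the paper.

```python
import math

# Assumed costs per regulatory action; "avoid" is a hypothetical example action.
ACTION_COST = {"stay on": 0.0, "avoid": 1.0}
NEUTRAL_COST = 0.5  # assumed cost for cells no rule mentions


def behavioral_cost_map(label_grid, rules):
    """Map each labeled cell to a cost using rules of the form {object: action}."""
    return [
        [ACTION_COST[rules[label]] if label in rules else NEUTRAL_COST for label in row]
        for row in label_grid
    ]


def combine_with_occupancy(cost_map, occupancy):
    """Mark occupied cells as untraversable; keep behavioral cost elsewhere."""
    return [
        [math.inf if occupied else cost for cost, occupied in zip(c_row, o_row)]
        for c_row, o_row in zip(cost_map, occupancy)
    ]


labels = [["pavement", "grass"], ["pavement", "road"]]
rules = {"pavement": "stay on", "grass": "avoid"}
occupancy = [[False, False], [True, False]]

costs = combine_with_occupancy(behavioral_cost_map(labels, rules), occupancy)
```

A planner could then prefer low-cost cells (here, unoccupied pavement) while treating occupied cells as hard obstacles.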

TOPGN: Real-time Transparent Obstacle Detection using Lidar Point Cloud Intensity for Autonomous Robot Navigation

Aug 10, 2024

Abstract:We present TOPGN, a novel method for real-time transparent obstacle detection for robot navigation in unknown environments. We use a multi-layer 2D grid map representation obtained by summing the intensities of lidar point clouds that lie in multiple non-overlapping height intervals. We isolate a neighborhood of points reflected from transparent obstacles by comparing the intensities in the different 2D grid map layers. Using the neighborhood, we linearly extrapolate the transparent obstacle by computing a tangential line segment and use it to perform safe, real-time collision avoidance. Finally, we also demonstrate our transparent object isolation's applicability to mapping an environment. We demonstrate that our approach detects transparent objects made of various materials (glass, acrylic, PVC) and of arbitrary shapes, colors, and textures in a variety of real-world indoor and outdoor scenarios with varying lighting conditions. We compare our method with other glass/transparent object detection methods that use RGB images, 2D laser scans, etc. in these benchmark scenarios. We demonstrate superior detection accuracy, with an F-score improvement of at least 12.74% and a 38.46% decrease in mean absolute error (MAE), improved navigation success rates (at least two times better than the second-best method), and a real-time inference rate (~50Hz on a mobile CPU). We will release our code and challenging benchmarks for future evaluations upon publication.
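
The multi-layer grid map described above, where intensities are summed per cell within non-overlapping height intervals, can be sketched as follows. The point format `(x, y, z, intensity)`, the square grid anchored at the origin, and the function name are assumptions for illustration; the layer-comparison step used to isolate transparent obstacles is not shown.

```python
def build_intensity_layers(points, height_intervals, cell_size, grid_dim):
    """Sum lidar intensities into one 2D grid per height interval.

    points: iterable of (x, y, z, intensity) tuples (assumed format).
    height_intervals: list of non-overlapping (z_min, z_max) pairs.
    Returns layers[k][row][col] for a grid_dim x grid_dim grid.
    """
    layers = [
        [[0.0] * grid_dim for _ in range(grid_dim)] for _ in height_intervals
    ]
    for x, y, z, intensity in points:
        col = int(x // cell_size)
        row = int(y // cell_size)
        if not (0 <= row < grid_dim and 0 <= col < grid_dim):
            continue  # point falls outside the mapped area
        for k, (z_min, z_max) in enumerate(height_intervals):
            if z_min <= z < z_max:
                layers[k][row][col] += intensity
                break
    return layers


points = [
    (0.1, 0.1, 0.2, 5.0),  # low point in cell (0, 0)
    (0.2, 0.3, 0.3, 2.0),  # second low point in the same cell
    (1.2, 0.1, 1.5, 4.0),  # higher point in cell (row 0, col 1)
    (9.0, 9.0, 0.2, 1.0),  # outside the 2x2 grid, ignored
]
layers = build_intensity_layers(
    points, height_intervals=[(0.0, 1.0), (1.0, 2.0)], cell_size=1.0, grid_dim=2
)
```

Comparing the same cell across `layers[0]` and `layers[1]` is then a matter of indexing, which is the kind of per-layer comparison the abstract refers to.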

CoNVOI: Context-aware Navigation using Vision Language Models in Outdoor and Indoor Environments

Mar 22, 2024

Abstract:We present CoNVOI, a novel method for autonomous robot navigation in real-world indoor and outdoor environments using Vision Language Models (VLMs). We employ VLMs in two ways: first, we leverage their zero-shot image classification capability to identify the context or scenario (e.g., indoor corridor, outdoor terrain, crosswalk, etc.) of the robot's surroundings, and formulate context-based navigation behaviors as simple text prompts (e.g., "stay on the pavement"). Second, we utilize their state-of-the-art semantic understanding and logical reasoning capabilities to compute a suitable trajectory given the identified context. To this end, we propose a novel multi-modal visual marking approach to annotate the obstacle-free regions in the RGB image used as input to the VLM with numbers, by correlating it with a local occupancy map of the environment. The marked numbers ground image locations in the real world, direct the VLM's attention solely to navigable locations, and elucidate the spatial relationships between them and terrains depicted in the image to the VLM. Next, we query the VLM to select numbers on the marked image that satisfy the context-based behavior text prompt, and construct a reference path using the selected numbers. Finally, we propose a method to extrapolate the reference trajectory when the robot's environmental context has not changed to prevent unnecessary VLM queries. We use the reference trajectory to guide a motion planner, and demonstrate that it leads to human-like behaviors (e.g., not cutting through a group of people, using crosswalks, etc.) in various real-world indoor and outdoor scenarios.

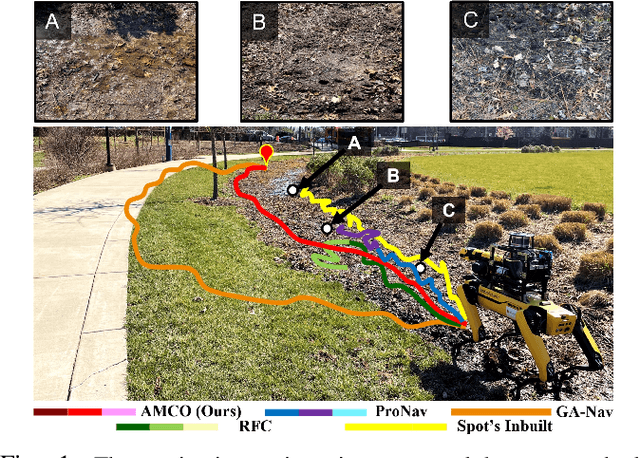

AMCO: Adaptive Multimodal Coupling of Vision and Proprioception for Quadruped Robot Navigation in Outdoor Environments

Mar 20, 2024

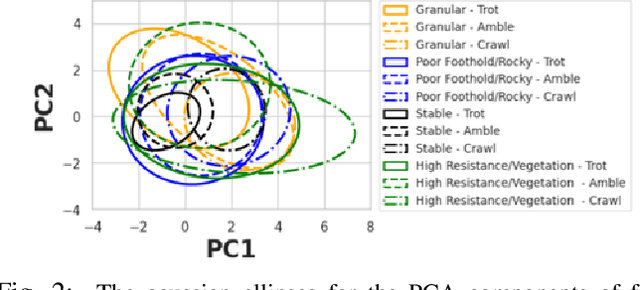

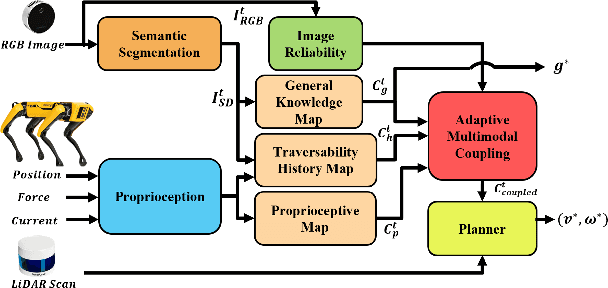

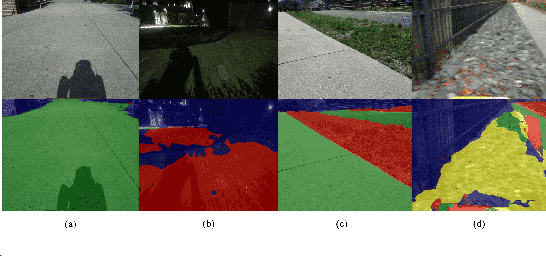

Abstract:We present AMCO, a novel navigation method for quadruped robots that adaptively combines vision-based and proprioception-based perception capabilities. Our approach uses three cost maps (a general knowledge map, a traversability history map, and a current proprioception map), which are derived from a robot's vision and proprioception data, and couples them to obtain a coupled traversability cost map for navigation. The general knowledge map encodes terrains semantically segmented from visual sensing, and represents a terrain's typically expected traversability. The traversability history map encodes the robot's recent proprioceptive measurements on a terrain and its semantic segmentation as a cost map. Further, the robot's present proprioceptive measurement is encoded as a cost map in the current proprioception map. As the general knowledge map and traversability history map rely on semantic segmentation, we evaluate the reliability of the visual sensory data by estimating the brightness and motion blur of input RGB images and accordingly combine the three cost maps to obtain the coupled traversability cost map used for navigation. Leveraging this adaptive coupling, the robot can depend on the most reliable input modality available. Finally, we present a novel planner that selects appropriate gaits and velocities for traversing challenging outdoor environments using the coupled traversability cost map. We demonstrate AMCO's navigation performance in different real-world outdoor environments and observe a 10.8%-34.9% reduction w.r.t. two stability metrics, and up to 50% improvement in terms of success rate compared to current navigation methods.
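
One simple way to picture reliability-based coupling of the three cost maps is a weighted blend, sketched below. The scalar `reliability` in [0, 1], the equal split between the two vision-dependent maps, and the function name are assumptions for illustration; the abstract does not specify the actual coupling rule or how brightness and motion blur are turned into a reliability estimate.

```python
def couple_cost_maps(general, history, current, reliability):
    """Blend three same-sized cost maps by visual reliability.

    reliability = 1.0 trusts the vision-dependent maps (general, history);
    reliability = 0.0 falls back to the current proprioception map.
    """
    coupled = []
    for g_row, h_row, c_row in zip(general, history, current):
        coupled.append(
            [
                reliability * (0.5 * g + 0.5 * h) + (1.0 - reliability) * c
                for g, h, c in zip(g_row, h_row, c_row)
            ]
        )
    return coupled


general = [[0.25, 0.5]]
history = [[0.75, 1.0]]
current = [[1.0, 0.0]]

vision_only = couple_cost_maps(general, history, current, reliability=1.0)
proprio_only = couple_cost_maps(general, history, current, reliability=0.0)
mixed = couple_cost_maps(general, history, current, reliability=0.5)
```

With a dark or blurred image, a low reliability value would push the coupled map toward the proprioceptive measurement, matching the intent that the robot depend on the most reliable modality available.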

AdVENTR: Autonomous Robot Navigation in Complex Outdoor Environments

Nov 15, 2023

Abstract:We present a novel system, AdVENTR, for autonomous robot navigation in unstructured outdoor environments that consist of uneven and vegetated terrains. Our approach is general and can enable both wheeled and legged robots to handle outdoor terrain complexity including unevenness, surface properties like poor traction, granularity, obstacle stiffness, etc. We use data from sensors including RGB cameras, 3D Lidar, IMU, robot odometry, and pose information with efficient learning-based perception and planning algorithms that can execute on edge computing hardware. Our system uses a scene-aware switching method to perceive the environment for navigation at any time instant and dynamically switches between multiple perception algorithms. We test our system in a variety of sloped, rocky, muddy, and densely vegetated terrains and demonstrate its performance on Husky and Spot robots.

Using Lidar Intensity for Robot Navigation

Sep 28, 2023

Abstract:We present Multi-Layer Intensity Map, a novel 3D object representation for robot perception and autonomous navigation. Intensity maps consist of multiple stacked layers of 2D grid maps, each derived from reflected point cloud intensities corresponding to a certain height interval. The different layers of intensity maps can be used to simultaneously estimate obstacles' height, solidity/density, and opacity. We demonstrate that intensity maps can help accurately differentiate obstacles that are safe to navigate through (e.g., beaded/string curtains, pliable tall grass) from ones that must be avoided (e.g., transparent surfaces such as glass walls, bushes, trees, etc.) in indoor and outdoor environments. Further, to handle narrow passages and navigate through non-solid obstacles in dense environments, we propose an approach to adaptively inflate or enlarge the obstacles detected on intensity maps based on their solidity and the robot's preferred velocity direction. We demonstrate these improved navigation capabilities in real-world narrow, dense environments using real Turtlebot and Boston Dynamics Spot robots. We observe significant increases in success rates to more than 50%, up to a 9.5% decrease in normalized trajectory length, and up to a 22.6% increase in the F-score compared to current navigation methods using other sensor modalities.

VAPOR: Legged Robot Navigation in Outdoor Vegetation Using Offline Reinforcement Learning

Sep 19, 2023

Abstract:We present VAPOR, a novel method for autonomous legged robot navigation in unstructured, densely vegetated outdoor environments using offline Reinforcement Learning (RL). Our method trains a novel RL policy using an actor-critic network and arbitrary data collected in real outdoor vegetation. Our policy uses height and intensity-based cost maps derived from 3D LiDAR point clouds, a goal cost map, and processed proprioception data as state inputs, and learns the physical and geometric properties of the surrounding obstacles such as height, density, and solidity/stiffness. The fully-trained policy's critic network is then used to evaluate the quality of dynamically feasible velocities generated from a novel context-aware planner. Our planner adapts the robot's velocity space based on the presence of entrapment-inducing vegetation and narrow passages in dense environments. We demonstrate our method's capabilities on a Spot robot in complex real-world outdoor scenes, including dense vegetation. We observe that VAPOR's actions improve success rates by up to 40%, decrease the average current consumption by up to 2.9%, and decrease the normalized trajectory length by up to 11.2% compared to existing end-to-end offline RL and other outdoor navigation methods.
