AMCO: Adaptive Multimodal Coupling of Vision and Proprioception for Quadruped Robot Navigation in Outdoor Environments

Mar 20, 2024

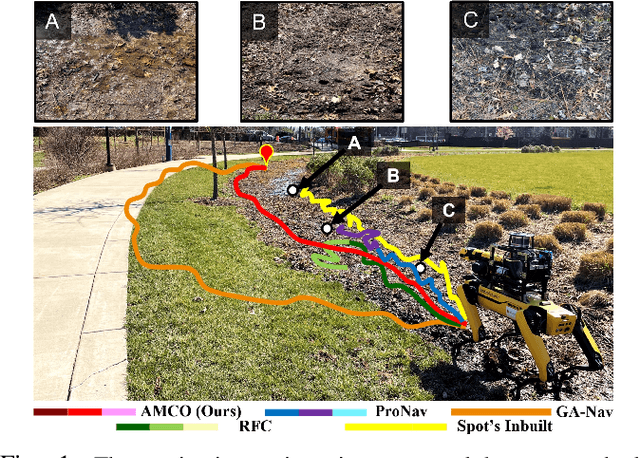

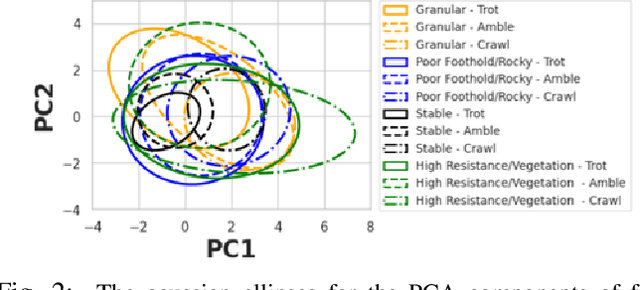

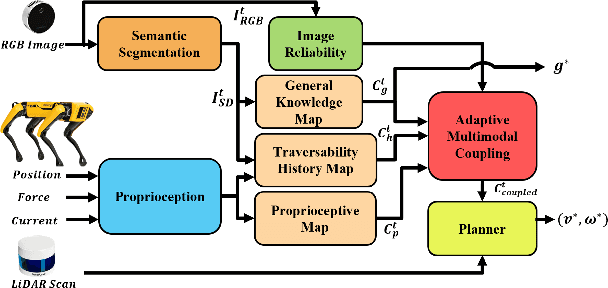

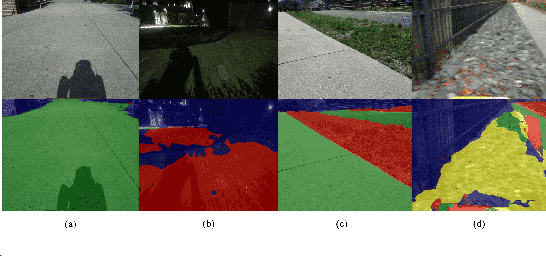

We present AMCO, a novel navigation method for quadruped robots that adaptively combines vision-based and proprioception-based perception. Our approach derives three cost maps from the robot's vision and proprioception data (a general knowledge map, a traversability history map, and a current proprioception map) and couples them into a single traversability cost map for navigation. The general knowledge map encodes terrains semantically segmented from visual sensing and represents each terrain's typically expected traversability. The traversability history map encodes, as a cost map, the robot's recent proprioceptive measurements on a terrain together with that terrain's semantic segmentation. The current proprioception map encodes the robot's present proprioceptive measurement as a cost map. Because the general knowledge map and the traversability history map both rely on semantic segmentation, we evaluate the reliability of the visual data by estimating the brightness and motion blur of the input RGB images, and weight the three cost maps accordingly when forming the coupled traversability cost map. This adaptive coupling lets the robot depend on the most reliable input modality available. Finally, we present a novel planner that uses the coupled traversability cost map to select appropriate gaits and velocities for traversing challenging outdoor environments. We demonstrate AMCO's navigation performance in different real-world outdoor environments and observe a 10.8%-34.9% reduction w.r.t. two stability metrics, and up to a 50% improvement in success rate, compared to current navigation methods.
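The adaptive coupling described above can be illustrated with a minimal Python sketch. The abstract does not give the actual estimators or the coupling rule, so everything here is an assumption made for illustration: the function names, the thresholds `min_brightness` and `sharp_ref`, the use of Laplacian variance as a stand-in for a motion-blur estimate, and the linear blend between the vision-dependent maps and the current proprioception map.

```python
import numpy as np

def brightness(gray):
    """Mean intensity of an 8-bit grayscale image, scaled to [0, 1]."""
    return float(np.mean(gray)) / 255.0

def sharpness(gray):
    """Variance of a 4-neighbour Laplacian; low values suggest blur.
    (Assumed proxy for motion blur, not the paper's estimator.)"""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def vision_reliability(gray, min_brightness=0.2, sharp_ref=100.0):
    """Hypothetical reliability score in [0, 1]: near 0 for dark or
    blurry frames, 1 for bright and sharp frames."""
    b = min(brightness(gray) / min_brightness, 1.0)
    s = min(sharpness(gray) / sharp_ref, 1.0)
    return b * s

def couple_cost_maps(general, history, current, reliability):
    """Blend the two vision-dependent maps with the proprioception map.
    Assumed rule: a linear interpolation driven by the reliability score."""
    w = float(np.clip(reliability, 0.0, 1.0))
    vision_based = 0.5 * (general + history)
    return w * vision_based + (1.0 - w) * current

# Usage: a dark frame pushes the coupled map toward proprioception alone.
dark_frame = np.zeros((8, 8), dtype=np.uint8)
general = np.full((4, 4), 0.2)
history = np.full((4, 4), 0.4)
current = np.full((4, 4), 0.9)
coupled = couple_cost_maps(general, history, current,
                           vision_reliability(dark_frame))
```

In this sketch a fully dark frame yields a reliability of zero, so the coupled map equals the current proprioception map; a bright, sharp frame shifts the weight toward the two segmentation-based maps. A fuller version would likely also penalize overexposed frames, which this sketch does not attempt.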
