Kaixin Bai

M4Diffuser: Multi-View Diffusion Policy with Manipulability-Aware Control for Robust Mobile Manipulation

Sep 18, 2025

Abstract: Mobile manipulation requires the coordinated control of a mobile base and a robotic arm while simultaneously perceiving both global scene context and fine-grained object details. Existing single-view approaches often fail in unstructured environments due to limited fields of view, exploration, and generalization abilities. Moreover, classical controllers, although stable, struggle with efficiency and manipulability near singularities. To address these challenges, we propose M4Diffuser, a hybrid framework that integrates a Multi-View Diffusion Policy with a novel Reduced and Manipulability-aware QP (ReM-QP) controller for mobile manipulation. The diffusion policy leverages proprioceptive states and complementary camera perspectives with both close-range object details and global scene context to generate task-relevant end-effector goals in the world frame. These high-level goals are then executed by the ReM-QP controller, which eliminates slack variables for computational efficiency and incorporates manipulability-aware preferences for robustness near singularities. Comprehensive experiments in simulation and real-world environments show that M4Diffuser achieves 7 to 56 percent higher success rates and reduces collisions by 3 to 31 percent over baselines. Our approach demonstrates robust performance for smooth whole-body coordination and strong generalization to unseen tasks, paving the way for reliable mobile manipulation in unstructured environments. Details of the demo and supplemental material are available on our project website https://sites.google.com/view/m4diffuser.
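As a rough illustration of what "manipulability near singularities" means, the sketch below computes the Yoshikawa manipulability measure, sqrt(det(J Jᵀ)), for a planar two-link arm. This is an assumption made for illustration: the abstract does not state which manipulability measure ReM-QP uses, and the function names `planar_2link_jacobian` and `manipulability` are hypothetical, not taken from the paper.

```python
import math

def planar_2link_jacobian(q1, q2, l1=1.0, l2=1.0):
    """Position Jacobian of a planar two-link arm (illustrative toy model)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [
        [-l1 * s1 - l2 * s12, -l2 * s12],
        [l1 * c1 + l2 * c12, l2 * c12],
    ]

def manipulability(J):
    """Yoshikawa measure sqrt(det(J J^T)); for a square 2x2 J this is |det J|."""
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    return abs(det)

# The measure shrinks to zero as the elbow straightens (q2 -> 0),
# which is the singular configuration of this toy arm.
for q2 in (math.pi / 2, math.pi / 4, 0.1, 0.0):
    w = manipulability(planar_2link_jacobian(0.3, q2))
    print(f"q2={q2:.3f}  manipulability={w:.4f}")
```

For this toy arm the measure reduces to |l1 · l2 · sin(q2)|, so it depends only on the elbow angle. A manipulability-aware controller would, under this assumption, prefer joint motions that keep such a measure away from zero.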

DORA: Object Affordance-Guided Reinforcement Learning for Dexterous Robotic Manipulation

May 20, 2025

Abstract: Dexterous robotic manipulation remains a longstanding challenge in robotics due to the high dimensionality of control spaces and the semantic complexity of object interaction. In this paper, we propose an object affordance-guided reinforcement learning framework that enables a multi-fingered robotic hand to learn human-like manipulation strategies more efficiently. By leveraging object affordance maps, our approach generates semantically meaningful grasp pose candidates that serve as both policy constraints and priors during training. We introduce a voting-based grasp classification mechanism to ensure functional alignment between grasp configurations and object affordance regions. Furthermore, we incorporate these constraints into a generalizable RL pipeline and design a reward function that unifies affordance-awareness with task-specific objectives. Experimental results across three manipulation tasks - cube grasping, jug grasping and lifting, and hammer use - demonstrate that our affordance-guided approach improves task success rates by an average of 15.4% compared to baselines. These findings highlight the critical role of object affordance priors in enhancing sample efficiency and learning generalizable, semantically grounded manipulation policies. For more details, please visit our project website https://sites.google.com/view/dora-manip.

A Survey of Robotic Navigation and Manipulation with Physics Simulators in the Era of Embodied AI

May 01, 2025

Abstract: Navigation and manipulation are core capabilities in Embodied AI, yet training agents with these capabilities in the real world faces high costs and time complexity. Therefore, sim-to-real transfer has emerged as a key approach, yet the sim-to-real gap persists. This survey examines how physics simulators address this gap by analyzing their properties overlooked in previous surveys. We also analyze their features for navigation and manipulation tasks, along with hardware requirements. Additionally, we offer a resource with benchmark datasets, metrics, simulation platforms, and cutting-edge methods, such as world models and geometric equivariance, to help researchers select suitable tools while accounting for hardware constraints.

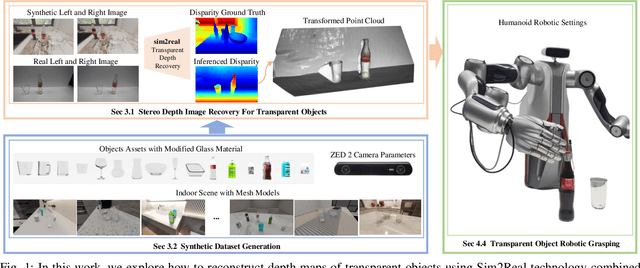

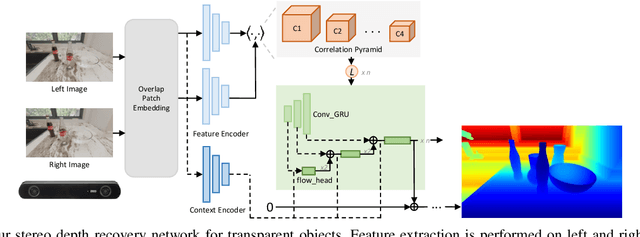

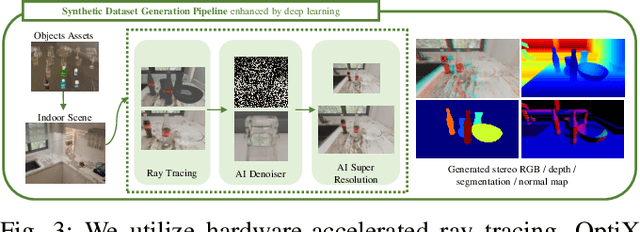

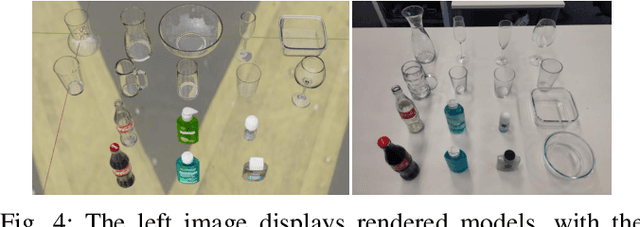

ClearDepth: Enhanced Stereo Perception of Transparent Objects for Robotic Manipulation

Sep 13, 2024

Abstract: Transparent object depth perception poses a challenge in everyday life and logistics, primarily due to the inability of standard 3D sensors to accurately capture depth on transparent or reflective surfaces. This limitation significantly affects depth map and point cloud-reliant applications, especially in robotic manipulation. We developed a vision transformer-based algorithm for stereo depth recovery of transparent objects. This approach is complemented by an innovative feature post-fusion module, which enhances the accuracy of depth recovery by using structural features in images. To address the high costs associated with dataset collection for stereo camera-based perception of transparent objects, our method incorporates a parameter-aligned, domain-adaptive, and physically realistic Sim2Real simulation for efficient data generation, accelerated by an AI algorithm. Our experimental results demonstrate the model's exceptional Sim2Real generalizability in real-world scenarios, enabling precise depth mapping of transparent objects to assist in robotic manipulation. Project details are available at https://sites.google.com/view/cleardepth/ .

Close the Sim2real Gap via Physically-based Structured Light Synthetic Data Simulation

Jul 17, 2024

Abstract: Despite the substantial progress in deep learning, its adoption in industrial robotics projects remains limited, primarily due to challenges in data acquisition and labeling. Previous sim2real approaches using domain randomization require extensive scene and model optimization. To address these issues, we introduce an innovative physically-based structured light simulation system, generating both RGB and physically realistic depth images, surpassing previous dataset generation tools. We create an RGBD dataset tailored for robotic industrial grasping scenarios and evaluate it across various tasks, including object detection, instance segmentation, and embedding sim2real visual perception in industrial robotic grasping. By reducing the sim2real gap and enhancing deep learning training, we facilitate the application of deep learning models in industrial settings. Project details are available at https://baikaixinpublic.github.io/structured light 3D synthesizer/.

Multi-fingered Robotic Hand Grasping in Cluttered Environments through Hand-object Contact Semantic Mapping

Apr 12, 2024

Abstract: The integration of optimization methods and generative models has significantly advanced dexterous manipulation techniques for five-fingered hand grasping. Yet, the application of these techniques in cluttered environments is a relatively unexplored area. To address this research gap, we have developed a novel method for generating five-fingered hand grasp samples in cluttered settings. This method emphasizes simulated grasp quality and the nuanced interaction between the hand and surrounding objects. A key aspect of our approach is our data generation method, capable of estimating contact spatial and semantic representations and affordance grasps based on object affordance information. Furthermore, our Contact Semantic Conditional Variational Autoencoder (CoSe-CVAE) network is adept at creating comprehensive contact maps from point clouds, incorporating both spatial and semantic data. We introduce a unique grasp detection technique that efficiently formulates mechanical hand grasp poses from these maps. Additionally, our evaluation model is designed to assess grasp quality and collision probability, significantly improving the practicality of five-fingered hand grasping in complex scenarios. Our data generation method outperforms previous datasets in grasp diversity, scene diversity, and modality diversity. Our grasp generation method has demonstrated remarkable success, outperforming established baselines with an 81.0% average success rate in real-world single-object grasping and a 75.3% success rate in multi-object grasping. The dataset and supplementary materials can be found at https://sites.google.com/view/ffh-clutteredgrasping, and we will release the code upon publication.

ToolEENet: Tool Affordance 6D Pose Estimation

Apr 05, 2024

Abstract: The exploration of robotic dexterous hands utilizing tools has recently attracted considerable attention. A significant challenge in this field is the precise awareness of a tool's pose when grasped, as occlusion by the hand often degrades the quality of the estimation. Additionally, the tool's overall pose often fails to accurately represent the contact interaction, thereby limiting the effectiveness of vision-guided, contact-dependent activities. To overcome this limitation, we present the innovative TOOLEE dataset, which, to the best of our knowledge, is the first to feature affordance segmentation of a tool's end-effector (EE) along with its defined 6D pose based on its usage. Furthermore, we propose the ToolEENet framework for accurate 6D pose estimation of the tool's EE. This framework begins by segmenting the tool's EE from raw RGBD data, then uses a diffusion model-based pose estimator for 6D pose estimation at a category-specific level. Addressing the issue of symmetry in pose estimation, we introduce a symmetry-aware pose representation that enhances the consistency of pose estimation. Our approach excels in this field, demonstrating high levels of precision and generalization. Furthermore, it shows great promise for application in contact-based manipulation scenarios. All data and code are available on the project website: https://yuyangtu.github.io/projectToolEENet.html

A Collision-Aware Cable Grasping Method in Cluttered Environment

Mar 04, 2024

Abstract: We introduce a Cable Grasping-Convolutional Neural Network (CG-CNN) designed to facilitate robust cable grasping in cluttered environments. Utilizing physics simulations, we generate an extensive dataset that mimics the intricacies of cable grasping, factoring in potential collisions between cables and robotic grippers. We employ the Approximate Convex Decomposition technique to dissect the non-convex cable model, with grasp quality autonomously labeled based on simulated grasping attempts. The CG-CNN is refined using this simulated dataset and enhanced through domain randomization techniques. Subsequently, the trained model predicts grasp quality, guiding the optimal grasp pose to the robot controller for execution. Grasping efficacy is assessed across both synthetic and real-world settings. Given our model's implicit collision sensitivity, we achieved commendable success rates of 92.3% for known cables and 88.4% for unknown cables, surpassing contemporary state-of-the-art approaches. Supplementary materials can be found at https://leizhang-public.github.io/cg-cnn/ .

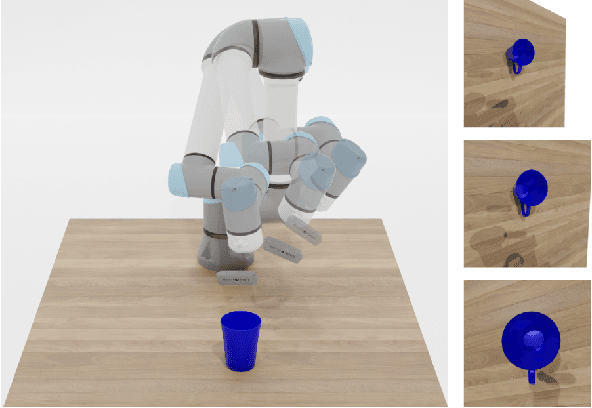

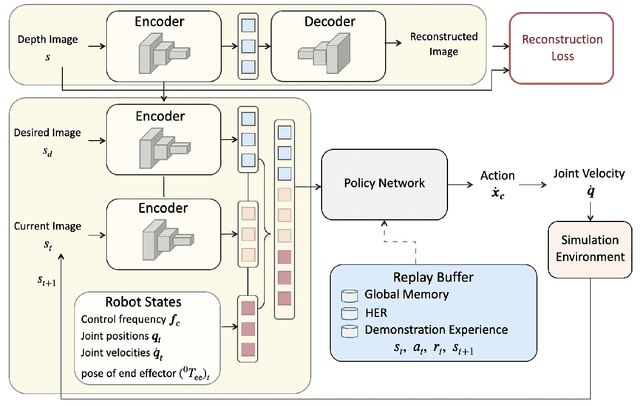

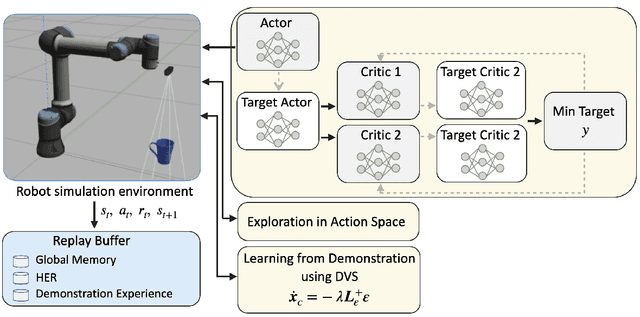

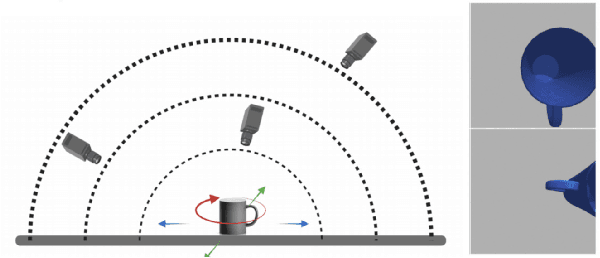

A Closed-Loop Multi-perspective Visual Servoing Approach with Reinforcement Learning

Dec 25, 2023

Abstract: Traditional visual servoing methods struggle with servoing between scenes from multiple perspectives, which humans can complete with visual signals alone. In this paper, we investigated how multi-perspective visual servoing could be solved under robot-specific constraints, including self-collision and singularity problems. We presented a novel learning-based multi-perspective visual servoing framework, which iteratively estimates robot actions from latent space representations of visual states using reinforcement learning. Furthermore, our approaches were trained and validated in a Gazebo simulation environment with connection to OpenAI/Gym. Through simulation experiments, we showed that our method can successfully learn an optimal control policy given initial images from different perspectives, and it outperformed the Direct Visual Servoing algorithm with a mean success rate of 97.0%.

Towards Precise Model-free Robotic Grasping with Sim-to-Real Transfer Learning

Jan 28, 2023

Abstract: Precise robotic grasping of several novel objects is a huge challenge in manufacturing, automation, and logistics. Most of the current methods for model-free grasping are disadvantaged by the sparse data in grasping datasets and by errors in sensor data and contact models. This study combines data generation and sim-to-real transfer learning in a grasping framework that reduces the sim-to-real gap and enables precise and reliable model-free grasping. A large-scale robotic grasping dataset with dense grasp labels is generated using domain randomization methods and a novel data augmentation method for deep learning-based robotic grasping to address the data sparsity problem. We present an end-to-end robotic grasping network with a grasp optimizer. The grasp policies are trained with sim-to-real transfer learning. The presented results suggest that our grasping framework reduces the uncertainties in grasping datasets, sensor data, and contact models. In physical robotic experiments, our grasping framework grasped single known objects and novel complex-shaped household objects with a success rate of 90.91%. In a complex scenario with multi-object robotic grasping, the success rate was 85.71%. The proposed grasping framework outperformed two state-of-the-art methods in both known and unknown object robotic grasping.

* 8 pages, 11 figures
