Junda Huang

Everything-Grasping (EG) Gripper: A Universal Gripper with Synergistic Suction-Grasping Capabilities for Cross-Scale and Cross-State Manipulation

Oct 06, 2025Abstract:Grasping objects across vastly different sizes and physical states-including both solids and liquids-with a single robotic gripper remains a fundamental challenge in soft robotics. We present the Everything-Grasping (EG) Gripper, a soft end-effector that synergistically integrates distributed surface suction with internal granular jamming, enabling cross-scale and cross-state manipulation without requiring airtight sealing at the contact interface with target objects. The EG Gripper can handle objects with surface areas ranging from sub-millimeter scale 0.2 mm2 (glass bead) to over 62,000 mm2 (A4 sized paper and woven bag), enabling manipulation of objects nearly 3,500X smaller and 88X larger than its own contact area (approximated at 707 mm2 for a 30 mm-diameter base). We further introduce a tactile sensing framework that combines liquid detection and pressure-based suction feedback, enabling real-time differentiation between solid and liquid targets. Guided by the actile-Inferred Grasping Mode Selection (TIGMS) algorithm, the gripper autonomously selects grasping modes based on distributed pressure and voltage signals. Experiments across diverse tasks-including underwater grasping, fragile object handling, and liquid capture-demonstrate robust and repeatable performance. To our knowledge, this is the first soft gripper to reliably grasp both solid and liquid objects across scales using a unified compliant architecture.

Programmable Locking Cells (PLC) for Modular Robots with High Stiffness Tunability and Morphological Adaptability

Sep 09, 2025Abstract:Robotic systems operating in unstructured environments require the ability to switch between compliant and rigid states to perform diverse tasks such as adaptive grasping, high-force manipulation, shape holding, and navigation in constrained spaces, among others. However, many existing variable stiffness solutions rely on complex actuation schemes, continuous input power, or monolithic designs, limiting their modularity and scalability. This paper presents the Programmable Locking Cell (PLC)-a modular, tendon-driven unit that achieves discrete stiffness modulation through mechanically interlocked joints actuated by cable tension. Each unit transitions between compliant and firm states via structural engagement, and the assembled system exhibits high stiffness variation-up to 950% per unit-without susceptibility to damage under high payload in the firm state. Multiple PLC units can be assembled into reconfigurable robotic structures with spatially programmable stiffness. We validate the design through two functional prototypes: (1) a variable-stiffness gripper capable of adaptive grasping, firm holding, and in-hand manipulation; and (2) a pipe-traversing robot composed of serial PLC units that achieves shape adaptability and stiffness control in confined environments. These results demonstrate the PLC as a scalable, structure-centric mechanism for programmable stiffness and motion, enabling robotic systems with reconfigurable morphology and task-adaptive interaction.

Of Mice and Machines: A Comparison of Learning Between Real World Mice and RL Agents

May 18, 2025Abstract:Recent advances in reinforcement learning (RL) have demonstrated impressive capabilities in complex decision-making tasks. This progress raises a natural question: how do these artificial systems compare to biological agents, which have been shaped by millions of years of evolution? To help answer this question, we undertake a comparative study of biological mice and RL agents in a predator-avoidance maze environment. Through this analysis, we identify a striking disparity: RL agents consistently demonstrate a lack of self-preservation instinct, readily risking ``death'' for marginal efficiency gains. These risk-taking strategies are in contrast to biological agents, which exhibit sophisticated risk-assessment and avoidance behaviors. Towards bridging this gap between the biological and artificial, we propose two novel mechanisms that encourage more naturalistic risk-avoidance behaviors in RL agents. Our approach leads to the emergence of naturalistic behaviors, including strategic environment assessment, cautious path planning, and predator avoidance patterns that closely mirror those observed in biological systems.

HandCept: A Visual-Inertial Fusion Framework for Accurate Proprioception in Dexterous Hands

May 13, 2025Abstract:As robotics progresses toward general manipulation, dexterous hands are becoming increasingly critical. However, proprioception in dexterous hands remains a bottleneck due to limitations in volume and generality. In this work, we present HandCept, a novel visual-inertial proprioception framework designed to overcome the challenges of traditional joint angle estimation methods. HandCept addresses the difficulty of achieving accurate and robust joint angle estimation in dynamic environments where both visual and inertial measurements are prone to noise and drift. It leverages a zero-shot learning approach using a wrist-mounted RGB-D camera and 9-axis IMUs, fused in real time via a latency-free Extended Kalman Filter (EKF). Our results show that HandCept achieves joint angle estimation errors between $2^{\circ}$ and $4^{\circ}$ without observable drift, outperforming visual-only and inertial-only methods. Furthermore, we validate the stability and uniformity of the IMU system, demonstrating that a common base frame across IMUs simplifies system calibration. To support sim-to-real transfer, we also open-sourced our high-fidelity rendering pipeline, which is essential for training without real-world ground truth. This work offers a robust, generalizable solution for proprioception in dexterous hands, with significant implications for robotic manipulation and human-robot interaction.

Unified Manipulability and Compliance Analysis of Modular Soft-Rigid Hybrid Fingers

Apr 18, 2025Abstract:This paper presents a unified framework to analyze the manipulability and compliance of modular soft-rigid hybrid robotic fingers. The approach applies to both hydraulic and pneumatic actuation systems. A Jacobian-based formulation maps actuator inputs to joint and task-space responses. Hydraulic actuators are modeled under incompressible assumptions, while pneumatic actuators are described using nonlinear pressure-volume relations. The framework enables consistent evaluation of manipulability ellipsoids and compliance matrices across actuation modes. We validate the analysis using two representative hands: DexCo (hydraulic) and Edgy-2 (pneumatic). Results highlight actuation-dependent trade-offs in dexterity and passive stiffness. These findings provide insights for structure-aware design and actuator selection in soft-rigid robotic fingers.

Fish Mouth Inspired Origami Gripper for Robust Multi-Type Underwater Grasping

Mar 14, 2025

Abstract:Robotic grasping and manipulation in underwater environments present unique challenges for robotic hands traditionally used on land. These challenges stem from dynamic water conditions, a wide range of object properties from soft to stiff, irregular object shapes, and varying surface frictions. One common approach involves developing finger-based hands with embedded compliance using underactuation and soft actuators. This study introduces an effective alternative solution that does not rely on finger-based hand designs. We present a fish mouth inspired origami gripper that utilizes a single degree of freedom to perform a variety of robust grasping tasks underwater. The innovative structure transforms a simple uniaxial pulling motion into a grasping action based on the Yoshimura crease pattern folding. The origami gripper offers distinct advantages, including scalable and optimizable design, grasping compliance, and robustness, with four grasping types: pinch, power grasp, simultaneous grasping of multiple objects, and scooping from the seabed. In this work, we detail the design, modeling, fabrication, and validation of a specialized underwater gripper capable of handling various marine creatures, including jellyfish, crabs, and abalone. By leveraging an origami and bio-inspired approach, the presented gripper demonstrates promising potential for robotic grasping and manipulation in underwater environments.

Prismatic-Bending Transformable (PBT) Joint for a Modular, Foldable Manipulator with Enhanced Reachability and Dexterity

Mar 07, 2025

Abstract:Robotic manipulators, traditionally designed with classical joint-link articulated structures, excel in industrial applications but face challenges in human-centered and general-purpose tasks requiring greater dexterity and adaptability. Addressing these limitations, we introduce the Prismatic-Bending Transformable (PBT) Joint, a novel design inspired by the scissors mechanism, enabling transformable kinematic chains. Each PBT joint module provides three degrees of freedom-bending, rotation, and elongation/contraction-allowing scalable and reconfigurable assemblies to form diverse kinematic configurations tailored to specific tasks. This innovative design surpasses conventional systems, delivering superior flexibility and performance across various applications. We present the design, modeling, and experimental validation of the PBT joint, demonstrating its integration into modular and foldable robotic arms. The PBT joint functions as a single SKU, enabling manipulators to be constructed entirely from standardized PBT joints without additional customized components. It also serves as a modular extension for existing systems, such as wrist modules, streamlining design, deployment, transportation, and maintenance. Three sizes-large, medium, and small-have been developed and integrated into robotic manipulators, highlighting their enhanced dexterity, reachability, and adaptability for manipulation tasks. This work represents a significant advancement in robotic design, offering scalable and efficient solutions for dynamic and unstructured environments.

Few-shot Sim2Real Based on High Fidelity Rendering with Force Feedback Teleoperation

Mar 03, 2025Abstract:Teleoperation offers a promising approach to robotic data collection and human-robot interaction. However, existing teleoperation methods for data collection are still limited by efficiency constraints in time and space, and the pipeline for simulation-based data collection remains unclear. The problem is how to enhance task performance while minimizing reliance on real-world data. To address this challenge, we propose a teleoperation pipeline for collecting robotic manipulation data in simulation and training a few-shot sim-to-real visual-motor policy. Force feedback devices are integrated into the teleoperation system to provide precise end-effector gripping force feedback. Experiments across various manipulation tasks demonstrate that force feedback significantly improves both success rates and execution efficiency, particularly in simulation. Furthermore, experiments with different levels of visual rendering quality reveal that enhanced visual realism in simulation substantially boosts task performance while reducing the need for real-world data.

SGFormer: Spherical Geometry Transformer for 360 Depth Estimation

Apr 23, 2024

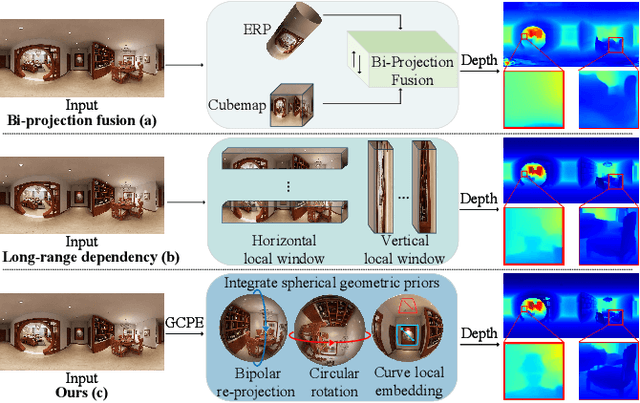

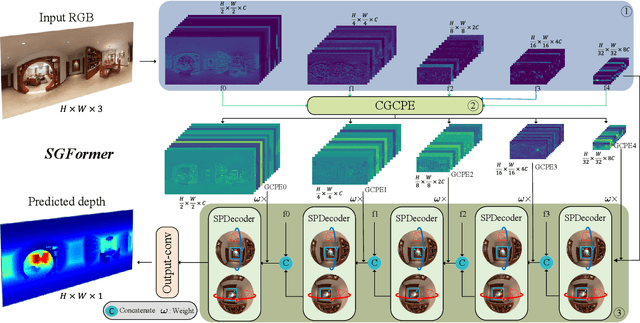

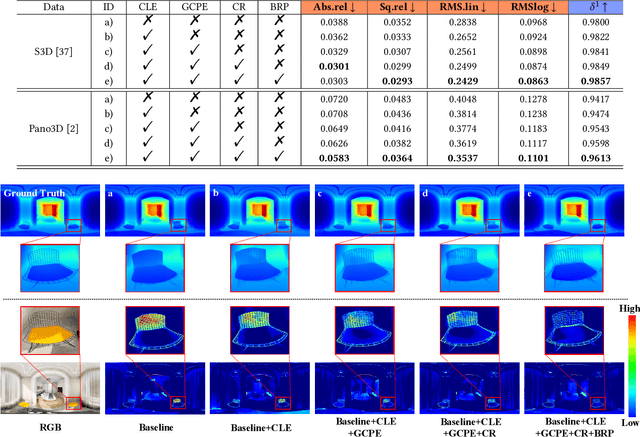

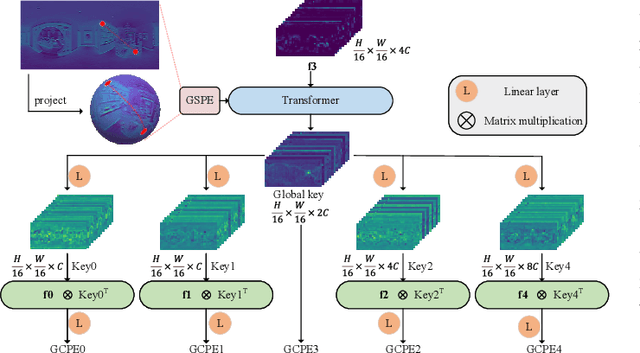

Abstract:Panoramic distortion poses a significant challenge in 360 depth estimation, particularly pronounced at the north and south poles. Existing methods either adopt a bi-projection fusion strategy to remove distortions or model long-range dependencies to capture global structures, which can result in either unclear structure or insufficient local perception. In this paper, we propose a spherical geometry transformer, named SGFormer, to address the above issues, with an innovative step to integrate spherical geometric priors into vision transformers. To this end, we retarget the transformer decoder to a spherical prior decoder (termed SPDecoder), which endeavors to uphold the integrity of spherical structures during decoding. Concretely, we leverage bipolar re-projection, circular rotation, and curve local embedding to preserve the spherical characteristics of equidistortion, continuity, and surface distance, respectively. Furthermore, we present a query-based global conditional position embedding to compensate for spatial structure at varying resolutions. It not only boosts the global perception of spatial position but also sharpens the depth structure across different patches. Finally, we conduct extensive experiments on popular benchmarks, demonstrating our superiority over state-of-the-art solutions.

Two-Stage Grasping: A New Bin Picking Framework for Small Objects

Mar 07, 2023Abstract:This paper proposes a novel bin picking framework, two-stage grasping, aiming at precise grasping of cluttered small objects. Object density estimation and rough grasping are conducted in the first stage. Fine segmentation, detection, grasping, and pushing are performed in the second stage. A small object bin picking system has been realized to exhibit the concept of two-stage grasping. Experiments have shown the effectiveness of the proposed framework. Unlike traditional bin picking methods focusing on vision-based grasping planning using classic frameworks, the challenges of picking cluttered small objects can be solved by the proposed new framework with simple vision detection and planning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge