Juan D. Tardós

EndoMetric: Near-light metric scale monocular SLAM

Oct 19, 2024

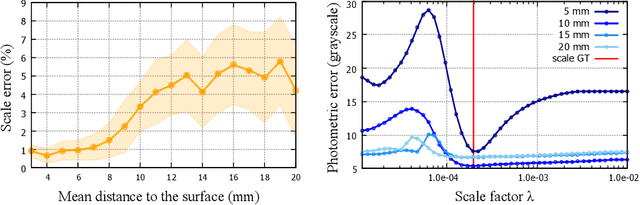

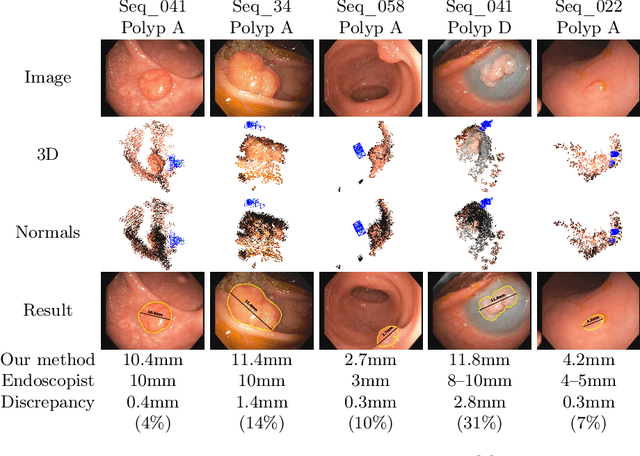

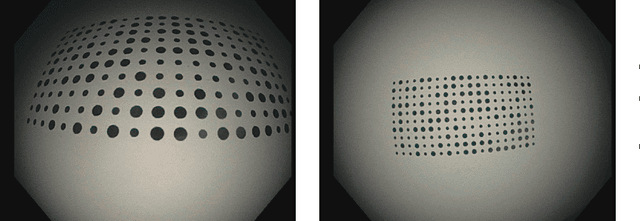

Abstract: Geometric reconstruction and SLAM with endoscopic images have seen significant advancements in recent years. In most medical specialties, the endoscopes used are monocular, and the algorithms applied are typically extensions of those designed for external environments, resulting in 3D reconstructions up to an unknown scale factor. In this paper, we take advantage of the fact that standard endoscopes are equipped with near-light sources positioned at a small but non-zero baseline from the camera. By leveraging the inverse-square law of light decay, we enable, for the first time, monocular reconstructions with accurate metric scale. This paves the way to transform any endoscope into a metric device, which is essential for practical applications such as measuring polyps, stenosis, or the extent of tissue affected by disease.
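To see why a non-zero camera-to-light baseline can make scale observable, here is a toy sketch in Python. It is not the paper's method. It assumes a single surface point on the optical axis, seen at two up-to-scale depths `z1` and `z2`, with the same albedo and with surface-orientation effects ignored. The light sits at a known lateral offset `baseline`. All function and parameter names are hypothetical.

```python
import math

def brightness(depth, baseline, gain=1.0):
    """Inverse-square brightness of an on-axis point lit from a laterally offset source.

    The squared light-to-point distance is depth**2 + baseline**2 (toy geometry).
    """
    return gain / (depth ** 2 + baseline ** 2)

def metric_scale(z1, z2, i1, i2, baseline):
    """Recover the scale s such that the metric depths are s*z1 and s*z2.

    Uses only the brightness ratio i1/i2, so the unknown gain cancels out.
    """
    r = i1 / i2
    denom = r * z1 ** 2 - z2 ** 2
    if abs(denom) < 1e-12 * z2 ** 2:
        raise ValueError("brightness ratio carries no scale information")
    ratio = (1.0 - r) / denom
    if ratio <= 0.0:
        raise ValueError("measurements are inconsistent with the toy model")
    return baseline * math.sqrt(ratio)

# Hypothetical numbers: 5 mm baseline, true scale 0.02 m per map unit.
true_scale, b = 0.02, 0.005
i1 = brightness(true_scale * 1.0, b, gain=3.0)
i2 = brightness(true_scale * 2.0, b, gain=3.0)
print(metric_scale(1.0, 2.0, i1, i2, b))
```

With a zero baseline the brightness ratio reduces to `(z2 / z1) ** 2` for every scale, so in this toy model the scale cannot be recovered. That is the intuition behind exploiting the small offset between camera and light.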

Topological SLAM in colonoscopies leveraging deep features and topological priors

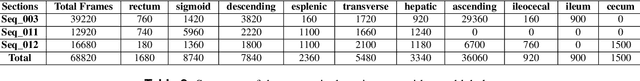

Sep 25, 2024

Abstract: We introduce ColonSLAM, a system that combines classical multiple-map metric SLAM with deep features and topological priors to create topological maps of the whole colon. The SLAM pipeline by itself is able to create disconnected individual metric submaps representing locations from short video subsections of the colon, but is not able to merge covisible submaps due to deformations and the limited performance of the SIFT descriptor in the medical domain. ColonSLAM is guided by topological priors and combines a deep localization network, trained to distinguish whether two images come from the same place, with the soft verification of a transformer-based matching network. This allows it to relate far-in-time submaps during an exploration and group them in nodes imaging the same colon place, building more complex maps than any other approach in the literature. We demonstrate our approach on the Endomapper dataset, showing its potential for producing maps of the whole colon in real human explorations. Code and models are available at: https://github.com/endomapper/ColonSLAM.

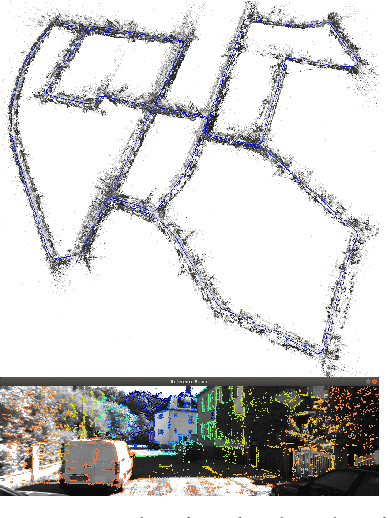

CudaSIFT-SLAM: multiple-map visual SLAM for full procedure mapping in real human endoscopy

May 27, 2024

Abstract: Monocular visual simultaneous localization and mapping (V-SLAM) is nowadays an irreplaceable tool in mobile robotics and augmented reality, where it performs robustly. However, human colonoscopies pose formidable challenges like occlusions, blur, light changes, lack of texture, deformation, water jets or tool interaction, which result in very frequent tracking losses. ORB-SLAM3, the top performing multiple-map V-SLAM, is unable to recover from them by merging sub-maps or relocalizing the camera, due to the poor performance of its place recognition algorithm based on ORB features and DBoW2 bag-of-words. We present CudaSIFT-SLAM, the first V-SLAM system able to process complete human colonoscopies in real-time. To overcome the limitations of ORB-SLAM3, we use SIFT instead of ORB features and replace the DBoW2 direct index with the more computationally demanding brute-force matching, being able to successfully match images separated in time for relocation and map merging. Real-time performance is achieved thanks to CudaSIFT, a GPU implementation for SIFT extraction and brute-force matching. We benchmark our system in the C3VD phantom colon dataset, and in a full real colonoscopy from the Endomapper dataset, demonstrating the capabilities to merge sub-maps and relocate in them, obtaining significantly longer sub-maps. Our system successfully maps in real-time 88 % of the frames in the C3VD dataset. In a real screening colonoscopy, despite the much higher prevalence of occluded and blurred frames, the mapping coverage is 53 % in carefully explored areas and 38 % in the full sequence, a 70 % improvement over ORB-SLAM3.
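Brute-force matching, as opposed to an index-guided search, compares every query descriptor against every candidate descriptor. The following is a minimal CPU sketch in plain Python, not the CudaSIFT GPU implementation. The nearest/second-nearest ratio test is an assumption added here because it is commonly paired with SIFT; the abstract does not state which acceptance test the system uses. All names are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two descriptors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def brute_force_match(query, train, ratio=0.8):
    """Return (query_index, train_index) pairs that pass the ratio test.

    Every query descriptor is compared against every train descriptor,
    which costs O(len(query) * len(train)) distance evaluations.
    """
    matches = []
    for qi, q in enumerate(query):
        # Exhaustively rank all train descriptors by distance to q.
        ranked = sorted(range(len(train)),
                        key=lambda ti: squared_distance(q, train[ti]))
        if len(ranked) < 2:
            continue  # the ratio test needs two neighbours
        best, second = ranked[0], ranked[1]
        d1 = squared_distance(q, train[best])
        d2 = squared_distance(q, train[second])
        # The ratio applies to Euclidean distances, hence ratio**2 here.
        if d1 < (ratio ** 2) * d2:
            matches.append((qi, best))
    return matches

# Tiny 2-D "descriptors" for illustration; real SIFT descriptors have 128 dimensions.
query = [[0.0, 0.0], [10.0, 10.0]]
train = [[0.1, 0.0], [5.0, 5.0], [10.0, 9.9]]
print(brute_force_match(query, train))
```

The quadratic cost of this exhaustive comparison is what motivates a GPU implementation when real-time operation is required.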

LightNeuS: Neural Surface Reconstruction in Endoscopy using Illumination Decline

Sep 06, 2023

Abstract: We propose a new approach to 3D reconstruction from sequences of images acquired by monocular endoscopes. It is based on two key insights. First, endoluminal cavities are watertight, a property naturally enforced by modeling them in terms of a signed distance function. Second, the scene illumination is variable. It comes from the endoscope's light sources and decays with the inverse of the squared distance to the surface. To exploit these insights, we build on NeuS, a neural implicit surface reconstruction technique with an outstanding capability to learn appearance and an SDF surface model from multiple views, but currently limited to scenes with static illumination. To remove this limitation and exploit the relation between pixel brightness and depth, we modify the NeuS architecture to explicitly account for it and introduce a calibrated photometric model of the endoscope's camera and light source. Our method is the first one to produce watertight reconstructions of whole colon sections. We demonstrate excellent accuracy on phantom imagery. Remarkably, the watertight prior combined with illumination decline makes it possible to complete the reconstruction of unseen portions of the surface with acceptable accuracy, paving the way to automatic quality assessment of cancer screening explorations by measuring the global percentage of observed mucosa.

LightDepth: Single-View Depth Self-Supervision from Illumination Decline

Aug 21, 2023

Abstract: Single-view depth estimation can be remarkably effective if there is enough ground-truth depth data for supervised training. However, there are scenarios, especially in medicine in the case of endoscopies, where such data cannot be obtained. In such cases, multi-view self-supervision and synthetic-to-real transfer serve as alternative approaches, but with a considerable performance reduction compared to the supervised case. Instead, we propose a single-view self-supervised method that achieves a performance similar to the supervised case. In some medical devices, such as endoscopes, the camera and light sources are co-located at a small distance from the target surfaces. Thus, we can exploit that, for any given albedo and surface orientation, pixel brightness is inversely proportional to the square of the distance to the surface, providing a strong single-view self-supervisory signal. In our experiments, our self-supervised models deliver accuracies comparable to those of fully supervised ones, while being applicable without depth ground-truth data.
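The brightness-depth relation described above can be sketched in a few lines of Python. This is a simplified illustration, not the paper's photometric model. It assumes a Lambertian surface, a light co-located with the camera, and a single scalar `gain` standing in for all camera and light calibration terms. All names are hypothetical.

```python
import math

def rendered_brightness(depth, albedo, cos_theta, gain=1.0):
    """Brightness predicted for a surface point under a co-located light.

    cos_theta is the cosine between the surface normal and the light direction;
    the 1 / depth**2 factor is the inverse-square illumination decline.
    """
    return gain * albedo * cos_theta / depth ** 2

def depth_from_brightness(brightness, albedo, cos_theta, gain=1.0):
    """Invert the model: depth implied by an observed brightness."""
    return math.sqrt(gain * albedo * cos_theta / brightness)

def photometric_loss(observed, depths, albedos, cos_thetas, gain=1.0):
    """Mean absolute difference between observed and re-rendered brightness.

    A loss of this kind needs no ground-truth depth, only the image itself.
    """
    errors = [abs(obs - rendered_brightness(d, a, c, gain))
              for obs, d, a, c in zip(observed, depths, albedos, cos_thetas)]
    return sum(errors) / len(errors)

# Doubling the distance divides the brightness by four.
print(rendered_brightness(1.0, 1.0, 1.0) / rendered_brightness(2.0, 1.0, 1.0))
```

In a self-supervised setting, a network's predicted depth would be scored by how well the re-rendered brightness matches the input image, which is the role `photometric_loss` plays in this sketch.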

ColonMapper: topological mapping and localization for colonoscopy

May 09, 2023

Abstract: Mapping and localization in endoluminal cavities from colonoscopies or gastroscopies have to overcome the challenge of significant shape and illumination changes between reobservations of the same endoluminal location. Instead of geometrical maps that strongly rely on a fixed scene geometry, topological maps are more adequate because they focus on visual place recognition, i.e. the capability to determine if two video shots are imaging the same location. We propose a topological mapping and localization system able to operate on real human colonoscopies. The map is a graph where each node codes a colon location by a set of real images of that location. The edges represent traversability between two nodes. For close-in-time images, where scene changes are minor, place recognition can be successfully managed with the recent transformer-based image-matching algorithms. However, under long-term changes -- such as different colonoscopies of the same patient -- feature-based matching fails. To address this, we propose a GeM global descriptor able to achieve high recall with significant changes in the scene. The addition of a Bayesian filter processing the map graph boosts the accuracy of the long-term place recognition, enabling relocalization in a previously built map. In the experiments, we construct a map during the withdrawal phase of a first colonoscopy. Subsequently, we prove the ability to relocalize within this map during a second colonoscopy of the same patient two weeks later. Code and models will be available upon acceptance.
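GeM (generalized-mean) pooling turns a convolutional feature map into one global descriptor per image, which can then be compared by cosine similarity for place recognition. Below is a minimal plain-Python sketch of the pooling step only. The backbone network, the value of the exponent `p`, and the training procedure are not specified in the abstract, so `p=3.0` and all names here are assumptions for illustration.

```python
import math

def gem_pool(feature_map, p=3.0, eps=1e-6):
    """Generalized-mean pooling over the spatial positions of each channel.

    feature_map: list of channels, each a flat list of activations.
    p = 1 gives average pooling; larger p moves the result toward max pooling.
    """
    pooled = []
    for channel in feature_map:
        # Clamp to eps so that fractional powers stay well defined.
        mean_pow = sum(max(v, eps) ** p for v in channel) / len(channel)
        pooled.append(mean_pow ** (1.0 / p))
    return pooled

def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)

def cosine_similarity(a, b):
    """Similarity between two global descriptors, in [-1, 1]."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))

# Two hypothetical 2-channel feature maps with 3 spatial positions each.
desc_a = gem_pool([[0.2, 0.9, 0.1], [0.0, 0.3, 0.4]])
desc_b = gem_pool([[0.3, 0.8, 0.2], [0.1, 0.2, 0.5]])
print(cosine_similarity(desc_a, desc_b))
```

A place-recognition system would threshold or rank such similarities between a query frame and the images stored in each map node.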

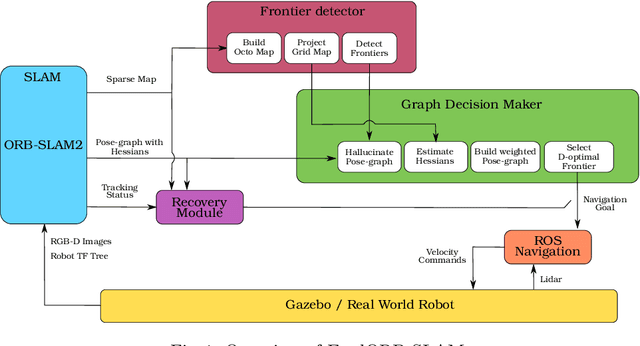

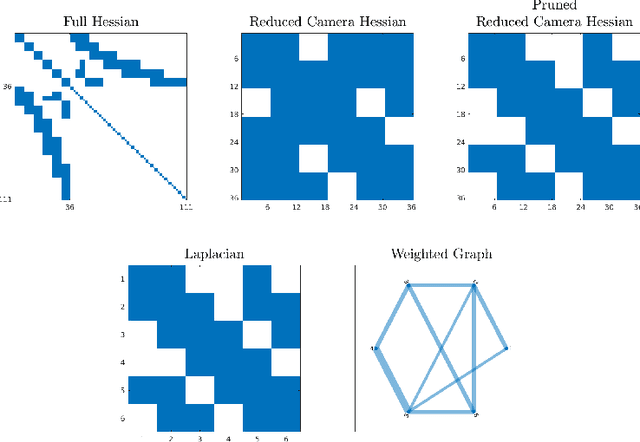

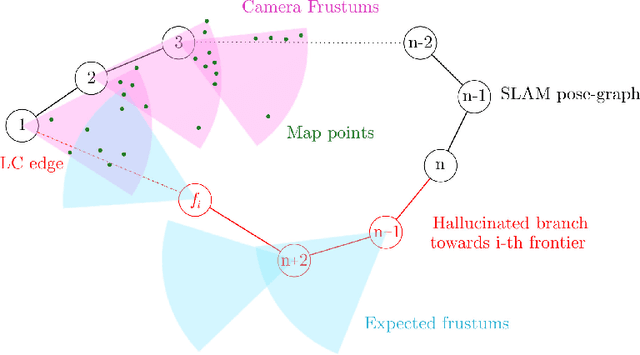

ExplORB-SLAM: Active Visual SLAM Exploiting the Pose-graph Topology

Sep 08, 2022

Abstract: Deploying autonomous robots capable of exploring unknown environments has long been a topic of great relevance to the robotics community. In this work, we take a further step in that direction by presenting an open-source active visual SLAM framework that leverages the accuracy of a state-of-the-art graph-SLAM system and takes advantage of the fast utility computation that exploiting the structure of the underlying pose-graph offers. Through careful estimation of a posteriori weighted pose-graphs, D-optimal decision-making is achieved online with the objective of improving localization and mapping uncertainties as exploration occurs.
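The D-optimality criterion summarizes an n x n information (or covariance) matrix by the n-th root of its determinant. The sketch below, in plain Python, computes it through a Cholesky factorization and picks the candidate action whose predicted information matrix scores highest. It is a generic illustration of the criterion and does not use the pose-graph structure the paper exploits. The candidate matrices and all names are hypothetical.

```python
import math

def log_det_spd(matrix):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = matrix[i][j] - sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                if acc <= 0.0:
                    raise ValueError("matrix is not positive definite")
                lower[i][j] = math.sqrt(acc)
            else:
                lower[i][j] = acc / lower[j][j]
    # det(M) = prod(diag(L))**2, so log det(M) = 2 * sum(log(diag(L))).
    return 2.0 * sum(math.log(lower[i][i]) for i in range(n))

def d_optimality(information):
    """D-opt value: exp(log det(M) / n), i.e. the n-th root of det(M)."""
    return math.exp(log_det_spd(information) / len(information))

def best_action(candidates):
    """Pick the action whose predicted information matrix has the largest D-opt."""
    return max(candidates, key=lambda name: d_optimality(candidates[name]))

# Hypothetical predicted information matrices for two candidate goals.
candidates = {
    "frontier_a": [[1.0, 0.0], [0.0, 1.0]],
    "frontier_b": [[4.0, 0.0], [0.0, 9.0]],
}
print(best_action(candidates))
```

Working with the log-determinant keeps the computation numerically stable for large matrices, where the determinant itself can overflow or underflow.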

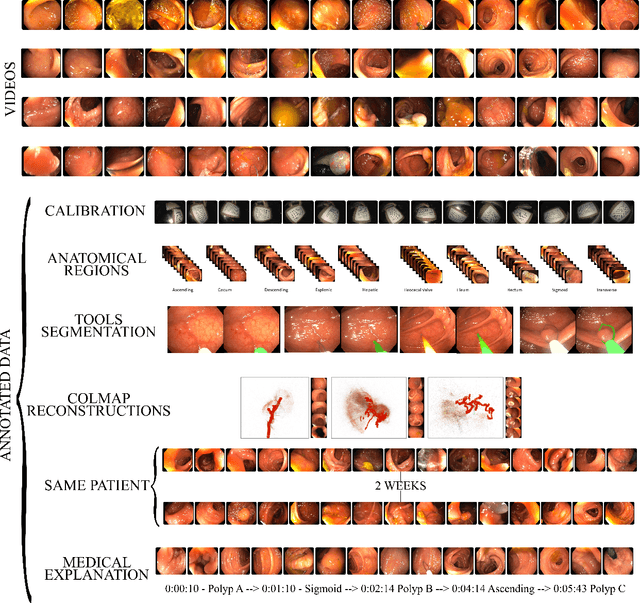

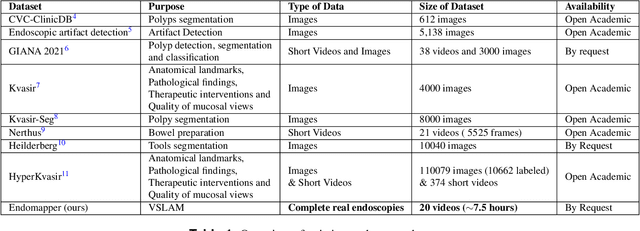

EndoMapper dataset of complete calibrated endoscopy procedures

Apr 29, 2022

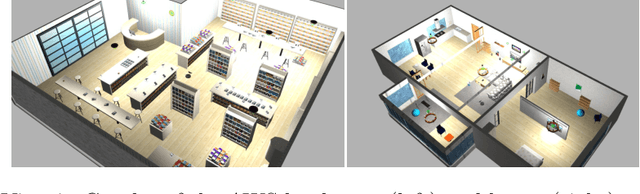

Abstract: Computer-assisted systems are becoming broadly used in medicine. In endoscopy, most research focuses on automatic detection of polyps or other pathologies, but localization and navigation of the endoscope are performed entirely manually by physicians. To broaden this research and bring spatial Artificial Intelligence to endoscopies, data from complete procedures are needed. These data will be used to build 3D mapping and localization systems that can perform special tasks such as detecting blind zones during exploration, providing automatic polyp measurements, guiding doctors to a polyp found in a previous exploration, and retrieving previous images of the same area, aligned for easy comparison. These systems will provide an improvement in the quality and precision of the procedures while lowering the burden on the physicians. This paper introduces the Endomapper dataset, the first collection of complete endoscopy sequences acquired during regular medical practice, including slow and careful screening explorations, making secondary use of medical data. Its original purpose is to facilitate the development and evaluation of VSLAM (Visual Simultaneous Localization and Mapping) methods in real endoscopy data. The first release of the dataset is composed of 59 sequences with more than 15 hours of video. It is also the first endoscopic dataset that includes both the computed geometric and photometric endoscope calibration and the original calibration videos. Metadata and annotations associated with the dataset include anatomical landmark and procedure description labels, tool segmentation masks, COLMAP 3D reconstructions, simulated sequences with ground truth, and metadata for special cases, such as sequences from the same patient. This information will advance research in endoscopic VSLAM and other existing research lines, and open new ones.

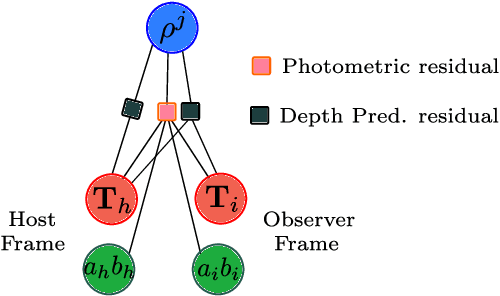

Scale-aware direct monocular odometry

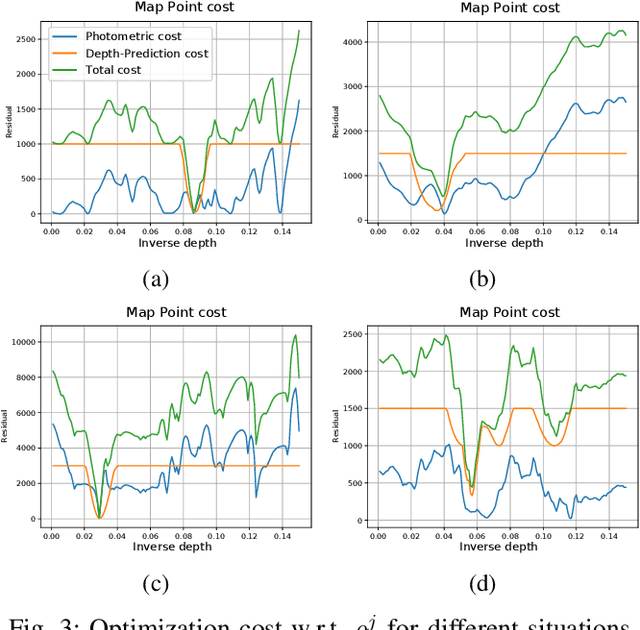

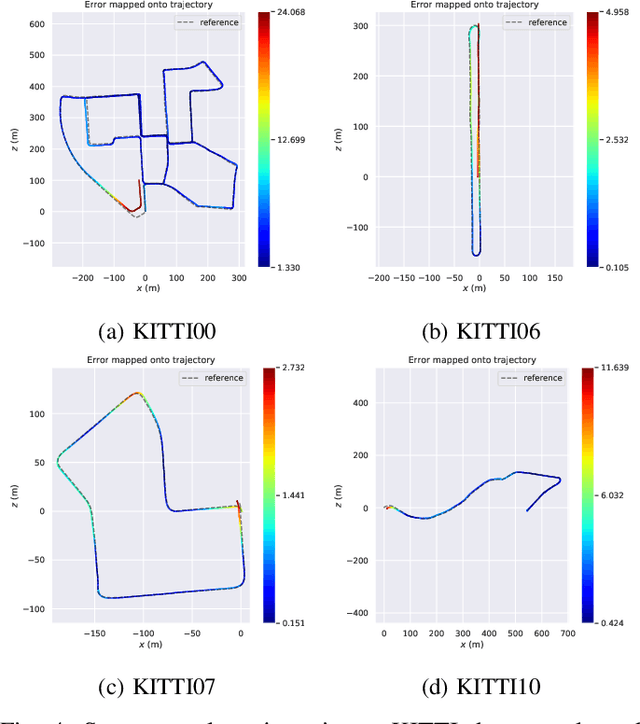

Sep 21, 2021

Abstract: We present a framework for direct monocular odometry based on depth prediction from a deep neural network. In contrast with existing methods where depth information is only partially exploited, we formulate a novel depth prediction residual which allows us to incorporate multi-view depth information. In addition, we propose to use a truncated robust cost function which prevents considering inconsistent depth estimations. The photometric and depth-prediction measurements are integrated in a tightly-coupled optimization leading to a scale-aware monocular system which does not accumulate scale drift. We demonstrate the validity of our proposal evaluating it on the KITTI odometry dataset and comparing it with state-of-the-art monocular and stereo SLAM systems. Experiments show that our proposal largely outperforms classic monocular SLAM, being 5 to 9 times more precise, with an accuracy which is closer to that of stereo systems.
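A truncated cost caps the penalty of any single residual, so grossly inconsistent depth predictions stop influencing the optimization. The abstract does not give the exact cost function, so the Python sketch below uses a truncated quadratic as a stand-in and combines it with an untruncated photometric term. The weighting scheme, the threshold, and all names are assumptions.

```python
def truncated_quadratic(residual, threshold):
    """Quadratic cost that saturates at threshold**2 for large residuals.

    Beyond the threshold the cost is constant, so its gradient is zero and
    the residual no longer pulls on the estimate.
    """
    return min(residual ** 2, threshold ** 2)

def total_cost(photometric_residuals, depth_residuals,
               depth_weight=1.0, threshold=1.0):
    """Joint cost: photometric term plus truncated depth-prediction term."""
    photo = sum(r ** 2 for r in photometric_residuals)
    depth = sum(truncated_quadratic(r, threshold) for r in depth_residuals)
    return photo + depth_weight * depth

# The outlier depth residual (10.0) contributes no more than threshold**2.
print(total_cost([1.0], [0.5, 10.0]))
```

Because both terms enter a single objective, camera poses and scale are constrained jointly, which corresponds to the tightly-coupled optimization mentioned in the abstract.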

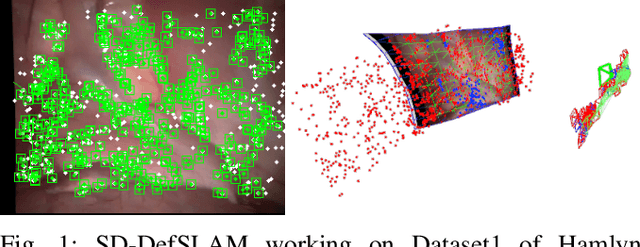

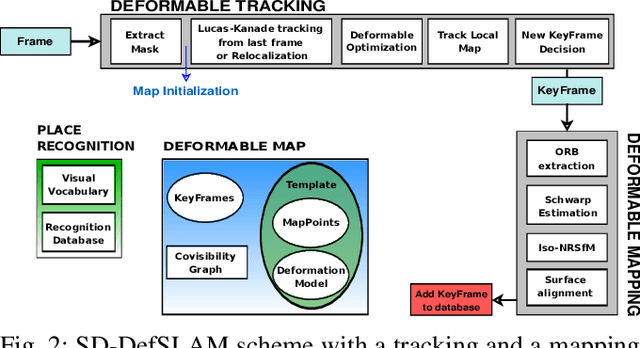

SD-DefSLAM: Semi-Direct Monocular SLAM for Deformable and Intracorporeal Scenes

Oct 19, 2020

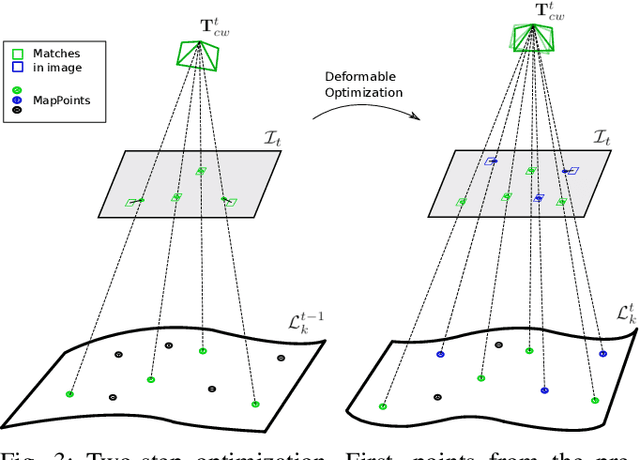

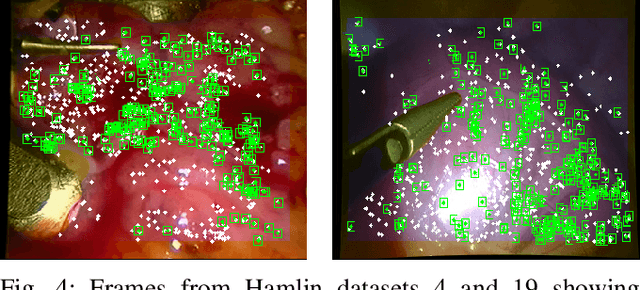

Abstract: Conventional SLAM techniques strongly rely on scene rigidity to solve data association, ignoring dynamic parts of the scene. In this work we present Semi-Direct DefSLAM (SD-DefSLAM), a novel monocular deformable SLAM method able to map highly deforming environments, built on top of DefSLAM. To robustly solve data association in challenging deforming scenes, SD-DefSLAM combines direct and indirect methods: an enhanced illumination-invariant Lucas-Kanade tracker for data association, geometric Bundle Adjustment for pose and deformable map estimation, and bag-of-words based on feature descriptors for camera relocation. Dynamic objects are detected and segmented-out using a CNN trained for the specific application domain. We thoroughly evaluate our system in two public datasets. The mandala dataset is a SLAM benchmark with increasingly aggressive deformations. The Hamlyn dataset contains intracorporeal sequences that pose serious real-life challenges beyond deformation like weak texture, specular reflections, surgical tools and occlusions. Our results show that SD-DefSLAM outperforms DefSLAM in point tracking, reconstruction accuracy and scale drift thanks to the improvement in all the data association steps, being the first system able to robustly perform SLAM inside the human body.
