Josephine Sullivan

KTH Royal Institute of Technology, Stockholm, Sweden

Unconditional Human Motion and Shape Generation via Balanced Score-Based Diffusion

Oct 14, 2025

Abstract: Recent work has explored a range of model families for human motion generation, including Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and diffusion-based models. Despite their differences, many methods rely on over-parameterized input features and auxiliary losses to improve empirical results. These strategies should not be strictly necessary for diffusion models to match the human motion distribution. We show that results on par with the state of the art in unconditional human motion generation are achievable with a score-based diffusion model using only careful feature-space normalization and analytically derived weightings for the standard L2 score-matching loss, while generating both motion and shape directly, thereby avoiding slow post hoc shape recovery from joints. We build the method step by step, with a clear theoretical motivation for each component, and provide targeted ablations demonstrating the effectiveness of each proposed addition in isolation.

A simple, strong baseline for building damage detection on the xBD dataset

Jan 30, 2024

Abstract: We construct a strong baseline method for building damage detection by starting with the highly engineered winning solution of the xView2 competition and gradually stripping away components. In this way, we obtain a much simpler method while retaining adequate performance. We expect the simplified solution to be more widely and easily applicable. This expectation is based on the reduced complexity, as well as the fact that we choose hyperparameters based on simple heuristics that transfer to other datasets. We then rearrange the xView2 dataset splits such that the test locations are not seen during training, contrary to the competition setup. In this setting, we find that both the complex and the simplified model fail to generalize to unseen locations. Analyzing the dataset indicates that this failure to generalize is not only a model-based problem, but that the difficulty might also be influenced by the unequal class distributions between events. Code, including the baseline model, is available at https://github.com/PaulBorneP/Xview2_Strong_Baseline

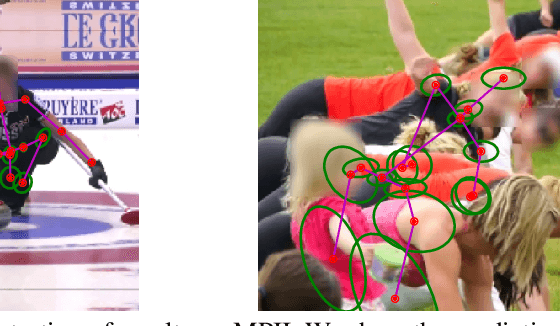

Probabilistic 3d regression with projected huber distribution

Mar 09, 2023

Abstract: Estimating probability distributions that describe where an object is likely to be from camera data is a task with many applications. In this work we describe properties which we argue such methods should conform to. We also design a method which conforms to these properties. In our experiments we show that our method produces uncertainties which correlate well with empirical errors. We also show that the mode of the predicted distribution outperforms our regression baselines. The code for our implementation is available online.

Contrastive pretraining for semantic segmentation is robust to noisy positive pairs

Nov 24, 2022

Abstract: Domain-specific variants of contrastive learning can construct positive pairs from two distinct images, as opposed to augmenting the same image twice. Unlike in traditional contrastive methods, this can result in positive pairs not matching perfectly. Similar to false negative pairs, this could impede model performance. Surprisingly, we find that downstream semantic segmentation is either robust to the noisy pairs or even benefits from them. The experiments are conducted on the remote sensing dataset xBD, and a synthetic segmentation dataset, on which we have full control over the noise parameters. As a result, practitioners should be able to use such domain-specific contrastive methods without having to filter their positive pairs beforehand.

Are All Linear Regions Created Equal?

Feb 23, 2022

Abstract: The number of linear regions has been studied as a proxy of complexity for ReLU networks. However, the empirical success of network compression techniques like pruning and knowledge distillation suggests that in the overparameterized setting, linear region density might fail to capture the effective nonlinearity. In this work, we propose an efficient algorithm for discovering linear regions and use it to investigate the effectiveness of density in capturing the nonlinearity of trained VGGs and ResNets on CIFAR-10 and CIFAR-100. We contrast the results with a more principled nonlinearity measure based on function variation, highlighting the shortcomings of linear region density. Interestingly, our measure of nonlinearity also clearly correlates with model-wise deep double descent, connecting reduced test error with reduced nonlinearity and increased local similarity of linear regions.

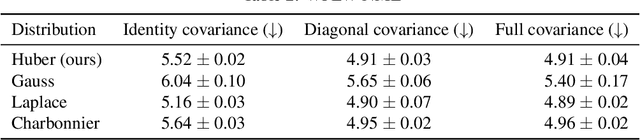

Probabilistic Regression with Huber Distributions

Nov 19, 2021

Abstract: In this paper we describe a probabilistic method for estimating the position of an object along with its covariance matrix using neural networks. Our method is designed to be robust to outliers and to have bounded gradients with respect to the network outputs, among other desirable properties. To achieve this we introduce a novel probability distribution inspired by the Huber loss. We also introduce a new way to parameterize positive definite matrices to ensure invariance to the choice of orientation for the coordinate system we regress over. We evaluate our method on popular body pose and facial landmark datasets and achieve performance on par with or exceeding that of non-heatmap methods. Our code is available at github.com/Davmo049/Public_prob_regression_with_huber_distributions
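For readers unfamiliar with the Huber loss mentioned in this abstract, the following is a minimal sketch of the standard scalar Huber loss and its derivative. It is not the probability distribution proposed in the paper; the function names `huber_loss` and `huber_grad` and the threshold parameter `delta` are illustrative assumptions. The sketch only shows why a Huber-style penalty gives bounded gradients: beyond `delta`, the loss grows linearly, so the derivative saturates.

```python
def huber_loss(residual: float, delta: float = 1.0) -> float:
    """Standard Huber loss: quadratic near zero, linear in the tails."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)


def huber_grad(residual: float, delta: float = 1.0) -> float:
    """Derivative of the Huber loss; its magnitude never exceeds delta."""
    if abs(residual) <= delta:
        return residual
    return delta if residual > 0 else -delta


if __name__ == "__main__":
    # Small residuals behave like squared error, large ones like absolute error.
    for r in (0.5, 3.0, -100.0):
        print(r, huber_loss(r), huber_grad(r))
```

Because the gradient is clipped at `delta`, a single large outlier cannot dominate a gradient step, which is the robustness property the abstract alludes to.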

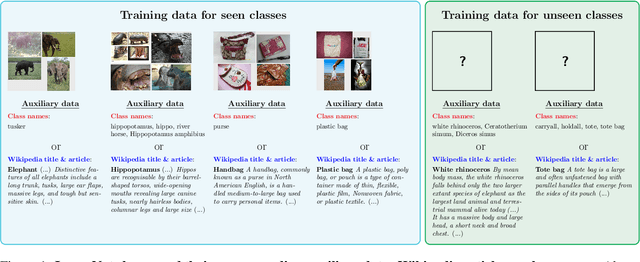

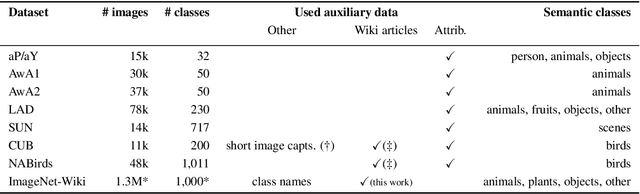

Large-Scale Zero-Shot Image Classification from Rich and Diverse Textual Descriptions

Mar 17, 2021

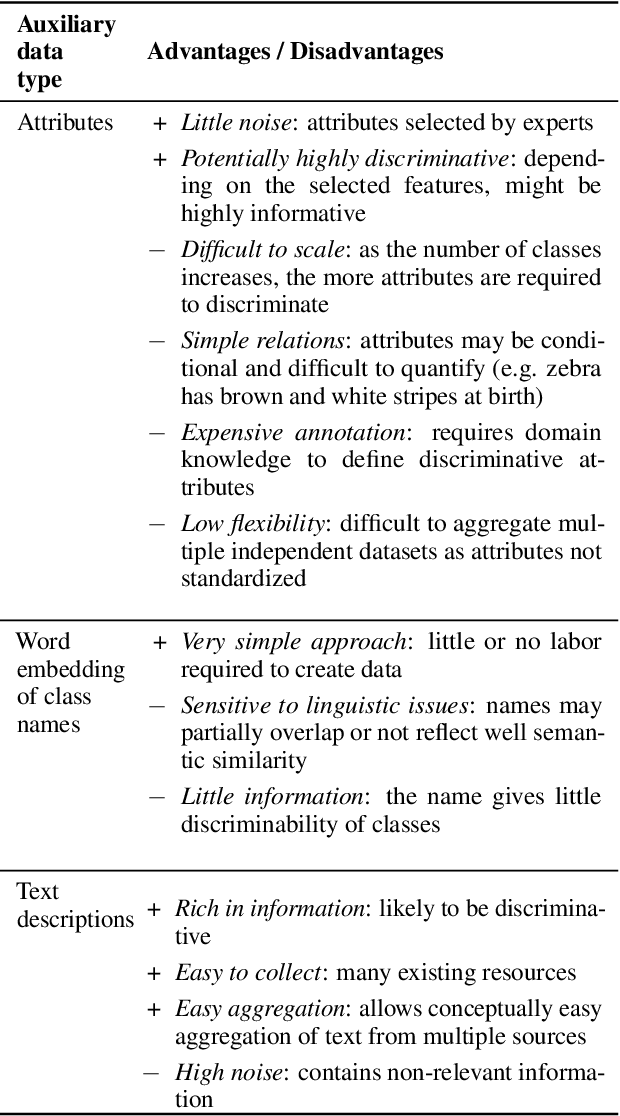

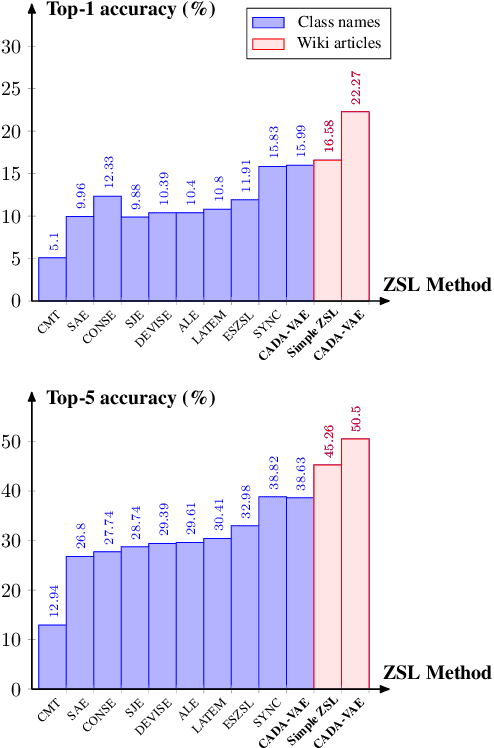

Abstract: We study the impact of using rich and diverse textual descriptions of classes for zero-shot learning (ZSL) on ImageNet. We create a new dataset, ImageNet-Wiki, that matches each ImageNet class to its corresponding Wikipedia article. We show that merely employing these Wikipedia articles as class descriptions yields much higher ZSL performance than prior work. Even a simple model using this type of auxiliary data outperforms state-of-the-art models that rely on standard features of word embedding encodings of class names. These results highlight the usefulness and importance of textual descriptions for ZSL, as well as the relative importance of auxiliary data type compared to algorithmic progress. Our experimental results also show that standard zero-shot learning approaches generalize poorly across categories of classes.

Explanation-based Weakly-supervised Learning of Visual Relations with Graph Networks

Jun 16, 2020

Abstract: Visual relationship detection is fundamental for holistic image understanding. However, localizing and classifying (subject, predicate, object) triplets constitutes a hard learning objective due to the combinatorial explosion of possible relationships, their long-tail distribution in natural images, and an expensive annotation process. This paper introduces a novel weakly-supervised method for visual relationship detection that relies only on image-level predicate annotations. A graph neural network is trained to classify the predicates in an image from the graph representation of all objects, implicitly encoding an inductive bias for pairwise relationships. We then frame relationship detection as the explanation of such a predicate classifier, i.e., we reconstruct a complete relationship by recovering the subject and the object of a predicted predicate. Using this novel technique and minimal labels, we present comparable results to recent fully-supervised and weakly-supervised methods on three diverse and challenging datasets: HICO-DET for human-object interaction, Visual Relationship Detection for generic object-to-object relationships, and UnRel for unusual relationships.

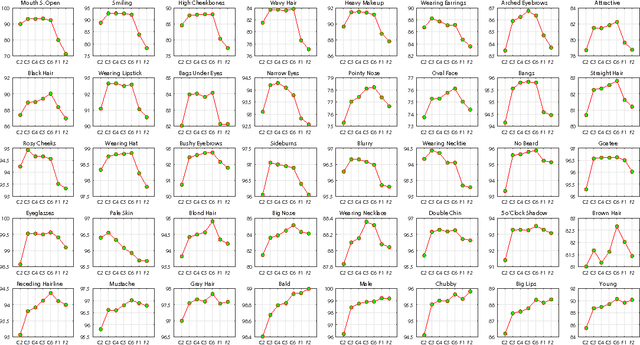

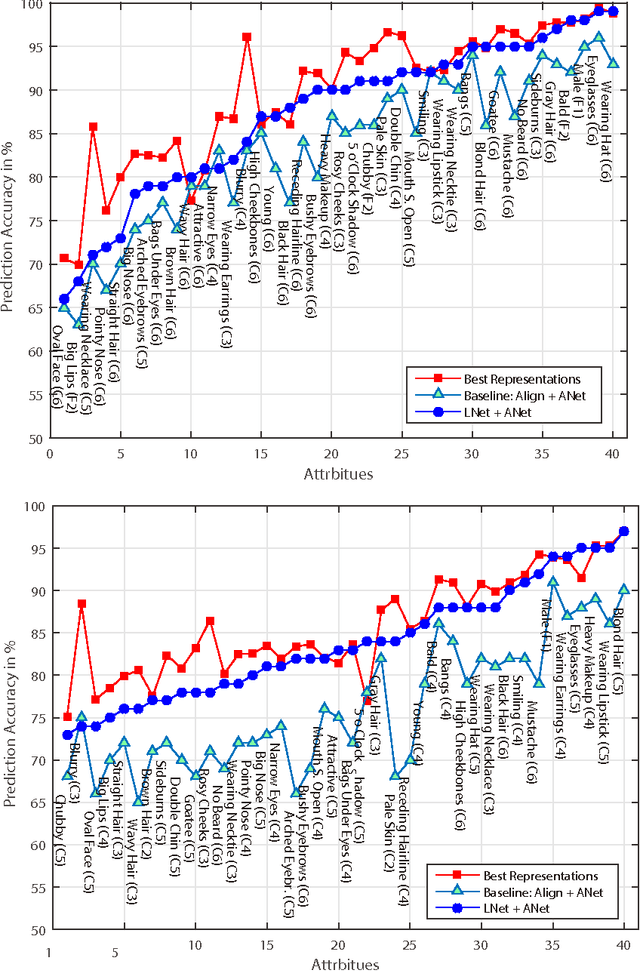

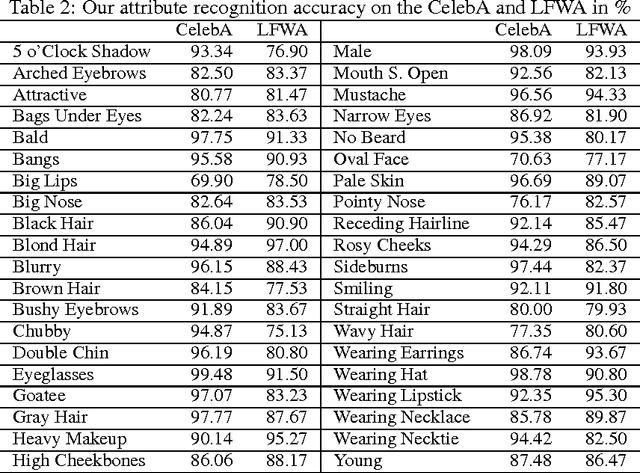

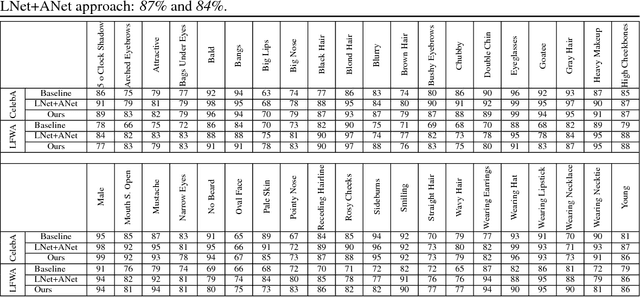

Leveraging Mid-Level Deep Representations For Predicting Face Attributes in the Wild

Jun 21, 2016

Abstract: Predicting facial attributes from faces in the wild is very challenging due to pose and lighting variations in the real world. The key to this problem is to build proper feature representations to cope with these unfavourable conditions. Given the success of Convolutional Neural Networks (CNNs) in image classification, the high-level CNN feature, as an intuitive and reasonable choice, has been widely utilized for this problem. In this paper, however, we consider the mid-level CNN features as an alternative to the high-level ones for attribute prediction. This is based on the observation that face attributes are different: some of them are locally oriented while others are globally defined. Our investigations reveal that the mid-level deep representations outperform the prediction accuracy achieved by the (fine-tuned) high-level abstractions. We empirically demonstrate that the mid-level representations achieve state-of-the-art prediction performance on the CelebA and LFWA datasets. Our investigations also show that by utilizing the mid-level representations one can employ a single deep network to achieve both face recognition and attribute prediction.

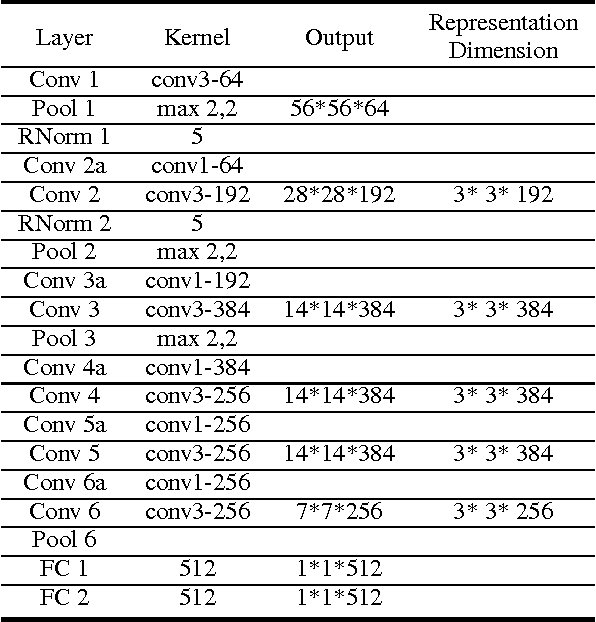

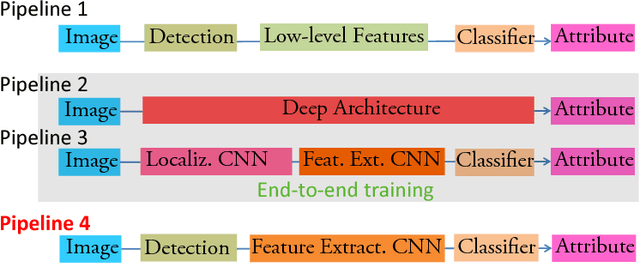

Face Attribute Prediction Using Off-the-Shelf CNN Features

Jun 21, 2016

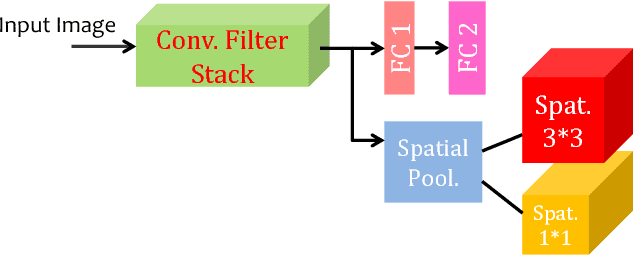

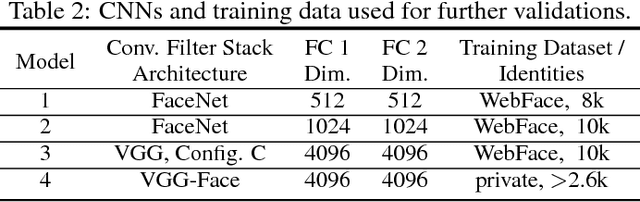

Abstract: Predicting attributes from face images in the wild is a challenging computer vision problem. To automatically describe face attributes from face-containing images, traditionally one needs to cascade three technical blocks (face localization, facial descriptor construction, and attribute classification) in a pipeline. As a typical classification problem, face attribute prediction has been addressed using deep learning. Current state-of-the-art performance was achieved by using two cascaded Convolutional Neural Networks (CNNs), which were specifically trained to learn face localization and attribute description. In this paper, we experiment with an alternative way of employing the power of deep representations from CNNs. In combination with conventional face localization techniques, we use off-the-shelf architectures trained for face recognition to build facial descriptors. Recognizing that the describable face attributes are diverse, our face descriptors are constructed from different levels of the CNNs for different attributes to best facilitate face attribute prediction. Experiments on two large datasets, LFWA and CelebA, show that our approach is entirely comparable to the state-of-the-art. Our findings not only demonstrate an efficient face attribute prediction approach, but also raise an important question: how to leverage the power of off-the-shelf CNN representations for novel tasks.
