José M. F. Moura

BRIDGES: Bridging Graph Modality and Large Language Models within EDA Tasks

Apr 07, 2025

Abstract:While many EDA tasks already involve graph-based data, existing LLMs in EDA primarily either represent graphs as sequential text, or simply ignore graph-structured data that might be beneficial like dataflow graphs of RTL code. Recent studies have found that LLM performance suffers when graphs are represented as sequential text, and using additional graph information significantly boosts performance. To address these challenges, we introduce BRIDGES, a framework designed to incorporate graph modality into LLMs for EDA tasks. BRIDGES integrates an automated data generation workflow, a solution that combines graph modality with LLM, and a comprehensive evaluation suite. First, we establish an LLM-driven workflow to generate RTL and netlist-level data, converting them into dataflow and netlist graphs with function descriptions. This workflow yields a large-scale dataset comprising over 500,000 graph instances and more than 1.5 billion tokens. Second, we propose a lightweight cross-modal projector that encodes graph representations into text-compatible prompts, enabling LLMs to effectively utilize graph data without architectural modifications. Experimental results demonstrate 2x to 10x improvements across multiple tasks compared to text-only baselines, including accuracy in design retrieval, type prediction and perplexity in function description, with negligible computational overhead (<1% model weights increase and <30% additional runtime overhead). Even without additional LLM finetuning, our results outperform text-only by a large margin. We plan to release BRIDGES, including the dataset, models, and training flow.

ReLU Networks as Random Functions: Their Distribution in Probability Space

Mar 28, 2025

Abstract:This paper presents a novel framework for understanding trained ReLU networks as random, affine functions, where the randomness is induced by the distribution over the inputs. By characterizing the probability distribution of the network's activation patterns, we derive the discrete probability distribution over the affine functions realizable by the network. We extend this analysis to describe the probability distribution of the network's outputs. Our approach provides explicit, numerically tractable expressions for these distributions in terms of Gaussian orthant probabilities. Additionally, we develop approximation techniques to identify the support of affine functions a trained ReLU network can realize for a given distribution of inputs. Our work provides a framework for understanding the behavior and performance of ReLU networks corresponding to stochastic inputs, paving the way for more interpretable and reliable models.
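The idea that a ReLU network is affine once its activation pattern is fixed can be illustrated with a minimal sketch. The tiny network below, its weights, and all function names are hypothetical and not taken from the paper. The pattern probabilities are estimated here by plain Monte Carlo sampling of Gaussian inputs, which is a stand-in for the closed-form Gaussian orthant expressions the abstract describes.

```python
import random

# Hypothetical tiny network: 2 inputs -> 3 hidden ReLU units -> 1 output.
W1 = [[1.0, -1.0], [0.5, 2.0], [-1.5, 0.5]]
b1 = [0.0, -1.0, 0.5]
W2 = [1.0, -2.0, 0.5]
b2 = 0.25


def pre_activations(x):
    """Hidden-layer values before the ReLU is applied."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]


def forward(x):
    """Ordinary forward pass of the network."""
    hidden = [max(0.0, z) for z in pre_activations(x)]
    return sum(w * h for w, h in zip(W2, hidden)) + b2


def activation_pattern(x):
    """Tuple of 0/1 flags marking which hidden units are active at x."""
    return tuple(1 if z > 0 else 0 for z in pre_activations(x))


def affine_for_pattern(pattern):
    """Return (a, c) so that the network equals a . x + c wherever `pattern` holds."""
    units = range(len(W2))
    a = [sum(W2[j] * pattern[j] * W1[j][i] for j in units) for i in range(2)]
    c = sum(W2[j] * pattern[j] * b1[j] for j in units) + b2
    return a, c


def pattern_frequencies(num_samples=20000, seed=0):
    """Monte Carlo estimate of the pattern distribution under N(0, I) inputs."""
    rng = random.Random(seed)
    counts = {}
    for _ in range(num_samples):
        x = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        p = activation_pattern(x)
        counts[p] = counts.get(p, 0) + 1
    return {p: n / num_samples for p, n in counts.items()}
```

Each key returned by `pattern_frequencies()` identifies one affine function via `affine_for_pattern`, so the dictionary is an empirical version of the discrete distribution over affine functions.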

FedBaF: Federated Learning Aggregation Biased by a Foundation Model

Oct 24, 2024

Abstract:Foundation models are now a major focus of leading technology organizations due to their ability to generalize across diverse tasks. Existing approaches for adapting foundation models to new applications often rely on Federated Learning (FL) and disclose the foundation model weights to clients when using it to initialize the global model. While these methods ensure client data privacy, they compromise model and information security. In this paper, we introduce Federated Learning Aggregation Biased by a Foundation Model (FedBaF), a novel method for dynamically integrating pre-trained foundation model weights during the FL aggregation phase. Unlike conventional methods, FedBaF preserves the confidentiality of the foundation model while still leveraging its power to train more accurate models, especially in non-IID and adversarial scenarios. Our comprehensive experiments use Pre-ResNet and foundation models like Vision Transformer to demonstrate that FedBaF not only matches, but often surpasses the test accuracy of traditional weight initialization methods by up to 11.4% in IID and up to 15.8% in non-IID settings. Additionally, FedBaF applied to a Transformer-based language model significantly reduced perplexity by up to 39.2%.

Gradient Networks

Apr 10, 2024

Abstract:Directly parameterizing and learning gradients of functions has widespread significance, with specific applications in optimization, generative modeling, and optimal transport. This paper introduces gradient networks (GradNets): novel neural network architectures that parameterize gradients of various function classes. GradNets exhibit specialized architectural constraints that ensure correspondence to gradient functions. We provide a comprehensive GradNet design framework that includes methods for transforming GradNets into monotone gradient networks (mGradNets), which are guaranteed to represent gradients of convex functions. We establish the approximation capabilities of the proposed GradNet and mGradNet. Our results demonstrate that these networks universally approximate the gradients of (convex) functions. Furthermore, these networks can be customized to correspond to specific spaces of (monotone) gradient functions, including gradients of transformed sums of (convex) ridge functions. Our analysis leads to two distinct GradNet architectures, GradNet-C and GradNet-M, and we describe the corresponding monotone versions, mGradNet-C and mGradNet-M. Our empirical results show that these architectures offer efficient parameterizations and outperform popular methods in gradient field learning tasks.
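As a rough illustration of what it means for a network to parameterize a gradient directly, the sketch below uses the map g(x) = Wᵀσ(Wx + b) with a sigmoid σ. This map is the exact gradient of the convex sum of ridge functions f(x) = Σᵢ softplus(wᵢ·x + bᵢ), so it is monotone. The weights and names are hypothetical, and this is only a textbook special case, not the GradNet-C or GradNet-M architecture from the paper.

```python
import math

# Hypothetical weights: 2-D input, 3 ridge directions.
W = [[1.0, 0.5], [-0.5, 1.5], [2.0, -1.0]]
b = [0.1, -0.2, 0.3]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softplus(z):
    return math.log1p(math.exp(z))


def ridge_inputs(x):
    """Scalar projections w_i . x + b_i, one per ridge direction."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def potential(x):
    """Convex potential f(x) = sum_i softplus(w_i . x + b_i)."""
    return sum(softplus(z) for z in ridge_inputs(x))


def grad_net(x):
    """g(x) = W^T sigmoid(W x + b), which equals the gradient of `potential`."""
    acts = [sigmoid(z) for z in ridge_inputs(x)]
    return [sum(W[j][i] * acts[j] for j in range(len(W))) for i in range(len(x))]
```

Because σ is nondecreasing, the inner product (g(x) − g(y))·(x − y) is nonnegative for any pair of points, which is the monotonicity property that characterizes gradients of convex functions.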

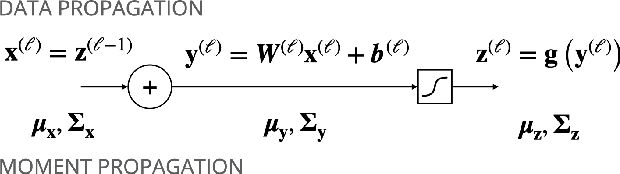

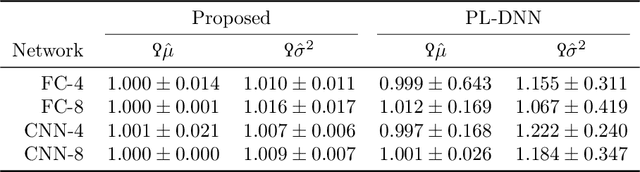

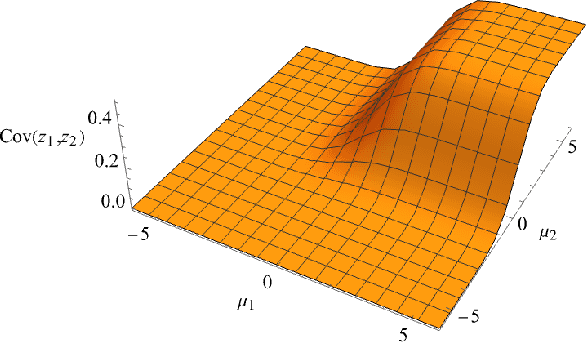

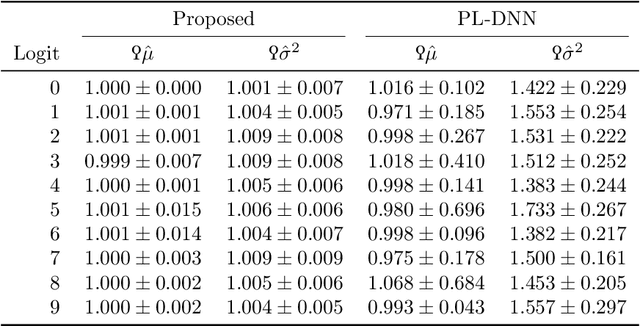

An Analytic Solution to Covariance Propagation in Neural Networks

Mar 24, 2024

Abstract:Uncertainty quantification of neural networks is critical to measuring the reliability and robustness of deep learning systems. However, this often involves costly or inaccurate sampling methods and approximations. This paper presents a sample-free moment propagation technique that propagates mean vectors and covariance matrices across a network to accurately characterize the input-output distributions of neural networks. A key enabler of our technique is an analytic solution for the covariance of random variables passed through nonlinear activation functions, such as Heaviside, ReLU, and GELU. The wide applicability and merits of the proposed technique are shown in experiments analyzing the input-output distributions of trained neural networks and training Bayesian neural networks.
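For intuition about sample-free moment propagation, the scalar case already has a well-known closed form: if X ~ N(μ, σ²), the mean and variance of ReLU(X) can be written with the standard normal PDF and CDF. The sketch below implements only that one-dimensional special case, with function names chosen for illustration. The paper's contribution, an analytic covariance between pairs of activated variables, is not reproduced here.

```python
import math


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def relu_moments(mu, sigma):
    """Mean and variance of max(0, X) for X ~ N(mu, sigma^2), with sigma > 0."""
    z = mu / sigma
    mean = mu * normal_cdf(z) + sigma * normal_pdf(z)
    second = (mu * mu + sigma * sigma) * normal_cdf(z) + mu * sigma * normal_pdf(z)
    return mean, second - mean * mean
```

Calling `relu_moments(0.0, 1.0)` gives a mean of 1/√(2π) and a variance of 1/2 − 1/(2π), with no sampling involved.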

Peer-to-Peer Learning + Consensus with Non-IID Data

Dec 21, 2023

Abstract:Peer-to-peer deep learning algorithms are enabling distributed edge devices to collaboratively train deep neural networks without exchanging raw training data or relying on a central server. Peer-to-Peer Learning (P2PL) and other algorithms based on Distributed Local-Update Stochastic/mini-batch Gradient Descent (local DSGD) rely on interleaving epochs of training with distributed consensus steps. This process leads to model parameter drift/divergence amongst participating devices in both IID and non-IID settings. We observe that model drift results in significant oscillations in test performance evaluated after local training and consensus phases. We then identify factors that amplify performance oscillations and demonstrate that our novel approach, P2PL with Affinity, dampens test performance oscillations in non-IID settings without incurring any additional communication cost.

Inferring the Graph of Networked Dynamical Systems under Partial Observability and Spatially Colored Noise

Dec 18, 2023

Abstract:In a Networked Dynamical System (NDS), each node is a system whose dynamics are coupled with the dynamics of neighboring nodes. The global dynamics naturally builds on this network of couplings and it is often excited by a noise input with nontrivial structure. The underlying network is unknown in many applications and should be inferred from observed data. We assume: i) Partial observability -- time series data is only available over a subset of the nodes; ii) Input noise -- it is correlated across distinct nodes while temporally independent, i.e., it is spatially colored. We present a feasibility condition on the noise correlation structure wherein there exists a consistent network inference estimator to recover the underlying fundamental dependencies among the observed nodes. Further, we describe a structure identification algorithm that exhibits competitive performance across distinct regimes of network connectivity, observability, and noise correlation.

Learning the Causal Structure of Networked Dynamical Systems under Latent Nodes and Structured Noise

Dec 18, 2023

Abstract:This paper considers learning the hidden causal network of a linear networked dynamical system (NDS) from the time series data at some of its nodes -- partial observability. The dynamics of the NDS are driven by colored noise that generates spurious associations across pairs of nodes, rendering the problem much harder. To address the challenge of noise correlation and partial observability, we assign to each pair of nodes a feature vector computed from the time series data of observed nodes. The feature embedding is engineered to yield structural consistency: there exists an affine hyperplane that consistently partitions the set of features, separating the feature vectors corresponding to connected pairs of nodes from those corresponding to disconnected pairs. The causal inference problem is thus addressed via clustering the designed features. We demonstrate with simple baseline supervised methods the competitive performance of the proposed causal inference mechanism under broad connectivity regimes and noise correlation levels, including a real world network. Further, we devise novel technical guarantees of structural consistency for linear NDS under the considered regime.

Peer-to-Peer Deep Learning for Beyond-5G IoT

Oct 29, 2023

Abstract:We present P2PL, a practical multi-device peer-to-peer deep learning algorithm that, unlike the federated learning paradigm, does not require coordination from edge servers or the cloud. This makes P2PL well-suited for the sheer scale of beyond-5G computing environments like smart cities that otherwise create range, latency, bandwidth, and single point of failure issues for federated approaches. P2PL introduces max norm synchronization to catalyze training, retains on-device deep model training to preserve privacy, and leverages local inter-device communication to implement distributed consensus. Each device iteratively alternates between two phases: 1) on-device learning and 2) distributed cooperation where they combine model parameters with nearby devices. We empirically show that all participating devices achieve the same test performance attained by federated and centralized training -- even with 100 devices and relaxed singly stochastic consensus weights. We extend these experimental results to settings with diverse network topologies, sparse and intermittent communication, and non-IID data distributions.
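The two alternating phases can be sketched with a toy model in which each device holds a single scalar parameter and a private quadratic loss. Everything below is an assumption made for illustration: the ring topology, the symmetric mixing weights, the learning rate, and the function names. It shows generic local-update training interleaved with consensus averaging and omits P2PL-specific elements such as max norm synchronization.

```python
def local_step(values, targets, lr=0.1):
    """Stand-in for on-device training: one gradient step on 0.5 * (v - target)^2."""
    return [v - lr * (v - t) for v, t in zip(values, targets)]


def consensus_step(values, weights):
    """One mixing round: each device takes a weighted average over its neighbors."""
    n = len(values)
    return [sum(weights[i][j] * values[j] for j in range(n)) for i in range(n)]


# Hypothetical ring of 4 devices; the matrix is symmetric and each row sums to 1.
RING_WEIGHTS = [
    [0.50, 0.25, 0.00, 0.25],
    [0.25, 0.50, 0.25, 0.00],
    [0.00, 0.25, 0.50, 0.25],
    [0.25, 0.00, 0.25, 0.50],
]


def run(values, targets, rounds=200, lr=0.1):
    """Alternate phase 1 (local learning) and phase 2 (cooperation)."""
    for _ in range(rounds):
        values = local_step(values, targets, lr)
        values = consensus_step(values, RING_WEIGHTS)
    return values
```

With non-identical targets, a rough analogue of non-IID data, the devices end up close to the average of all targets even though no device ever sees another device's target. A residual spread remains because the step size is held constant.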

Graph Signal Processing: History, Development, Impact, and Outlook

Mar 21, 2023

Abstract:Graph signal processing (GSP) generalizes signal processing (SP) tasks to signals living on non-Euclidean domains whose structure can be captured by a weighted graph. Graphs are versatile, able to model irregular interactions, easy to interpret, and endowed with a corpus of mathematical results, rendering them natural candidates to serve as the basis for a theory of processing signals in more irregular domains. In this article, we provide an overview of the evolution of GSP, from its origins to the challenges ahead. The first half is devoted to reviewing the history of GSP and explaining how it gave rise to an encompassing framework that shares multiple similarities with SP. A key message is that GSP has been critical to develop novel and technically sound tools, theory, and algorithms that, by leveraging analogies with and the insights of digital SP, provide new ways to analyze, process, and learn from graph signals. In the second half, we shift focus to review the impact of GSP on other disciplines. First, we look at the use of GSP in data science problems, including graph learning and graph-based deep learning. Second, we discuss the impact of GSP on applications, including neuroscience and image and video processing. We conclude with a brief discussion of the emerging and future directions of GSP.
