Jonas Unger

Linköping University

Revisiting Likelihood-Based Out-of-Distribution Detection by Modeling Representations

Apr 10, 2025

Abstract:Out-of-distribution (OOD) detection is critical for ensuring the reliability of deep learning systems, particularly in safety-critical applications. Likelihood-based deep generative models have historically faced criticism for their unsatisfactory performance in OOD detection, often assigning higher likelihood to OOD data than in-distribution samples when applied to image data. In this work, we demonstrate that likelihood is not inherently flawed. Rather, several properties of the image space prevent likelihood from serving as a valid detection score. Given a sufficiently good likelihood estimator, specifically using the probability flow formulation of a diffusion model, we show that likelihood-based methods can still perform on par with state-of-the-art methods when applied in the representation space of pre-trained encoders. The code of our work can be found at https://github.com/limchaos/Likelihood-OOD.git.

FROST-BRDF: A Fast and Robust Optimal Sampling Technique for BRDF Acquisition

Jan 14, 2024

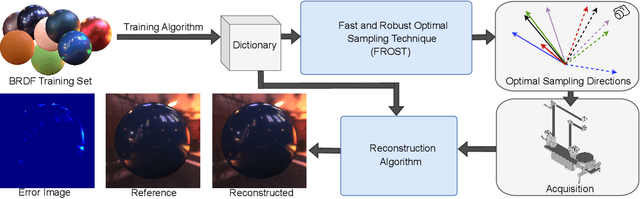

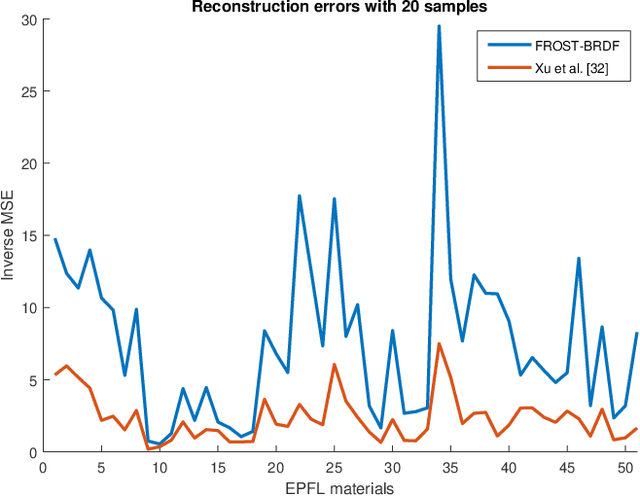

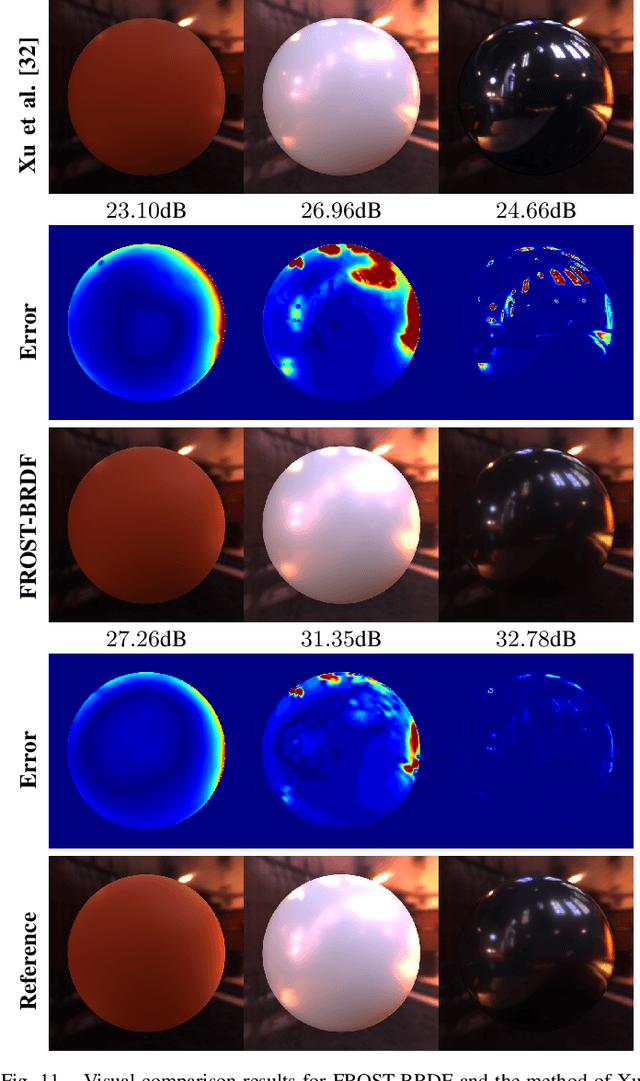

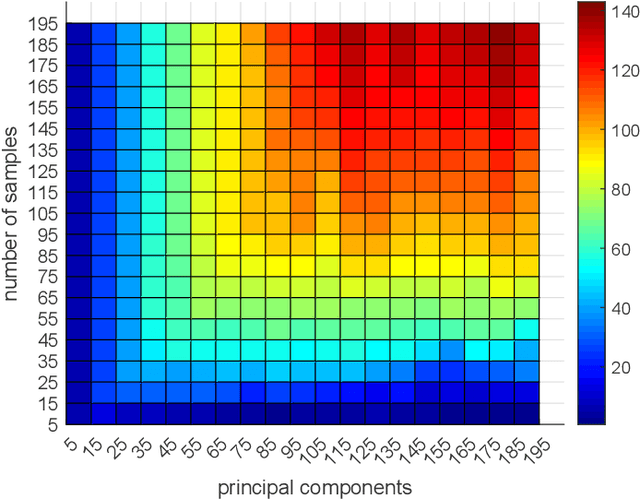

Abstract:Efficient and accurate BRDF acquisition of real-world materials is a challenging research problem that requires sampling millions of incident light and viewing directions. To accelerate the acquisition process, one needs to find a minimal set of sampling directions such that the recovery of the full BRDF is accurate and robust given such samples. In this paper, we formulate BRDF acquisition as a compressed sensing problem, where the sensing operator is one that performs sub-sampling of the BRDF signal according to a set of optimal sample directions. To solve this problem, we propose the Fast and Robust Optimal Sampling Technique (FROST) for designing a provably optimal sub-sampling operator that places light-view samples such that the recovery error is minimized. FROST casts the problem of designing an optimal sub-sampling operator for compressed sensing into a sparse representation formulation under the Multiple Measurement Vector (MMV) signal model. The proposed reformulation is exact, i.e. without any approximations, hence it converts an intractable combinatorial problem into one that can be solved with standard optimization techniques. As a result, FROST is accompanied by strong theoretical guarantees from the field of compressed sensing. We perform a thorough analysis of FROST-BRDF using a 10-fold cross-validation with publicly available BRDF datasets and show significant advantages compared to the state-of-the-art with respect to reconstruction quality. Finally, FROST is simple, both conceptually and in terms of implementation; it produces consistent results at each run and is at least two orders of magnitude faster than the prior art.

Standalone Neural ODEs with Sensitivity Analysis

Jun 08, 2022

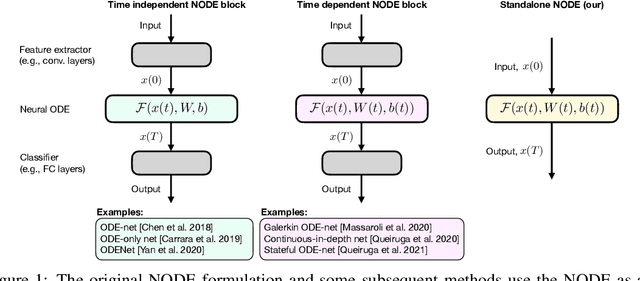

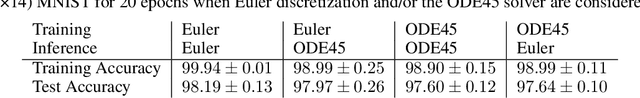

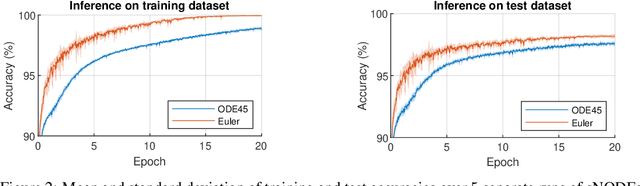

Abstract:This paper presents the Standalone Neural ODE (sNODE), a continuous-depth neural ODE model capable of describing a full deep neural network. The model uses a novel nonlinear conjugate gradient (NCG) descent optimization scheme for training, where the Sobolev gradient can be incorporated to improve smoothness of model weights. We also present a general formulation of the neural sensitivity problem and show how it is used in the NCG training. The sensitivity analysis provides a reliable measure of uncertainty propagation throughout a network, and can be used to study model robustness and to generate adversarial attacks. Our evaluations demonstrate that our novel formulations lead to increased robustness and performance as compared to ResNet models, and that they open up new opportunities for designing and developing machine learning with improved explainability.

Learning via nonlinear conjugate gradients and depth-varying neural ODEs

Feb 11, 2022

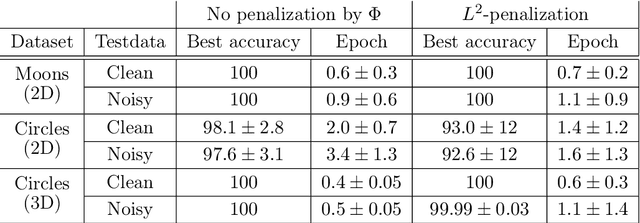

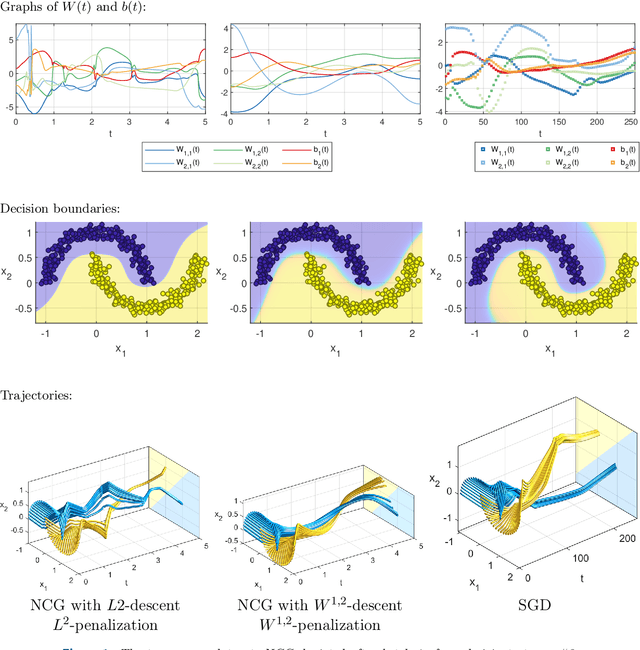

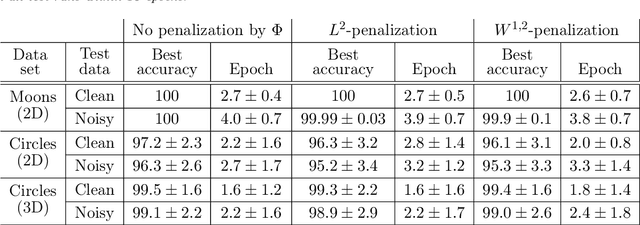

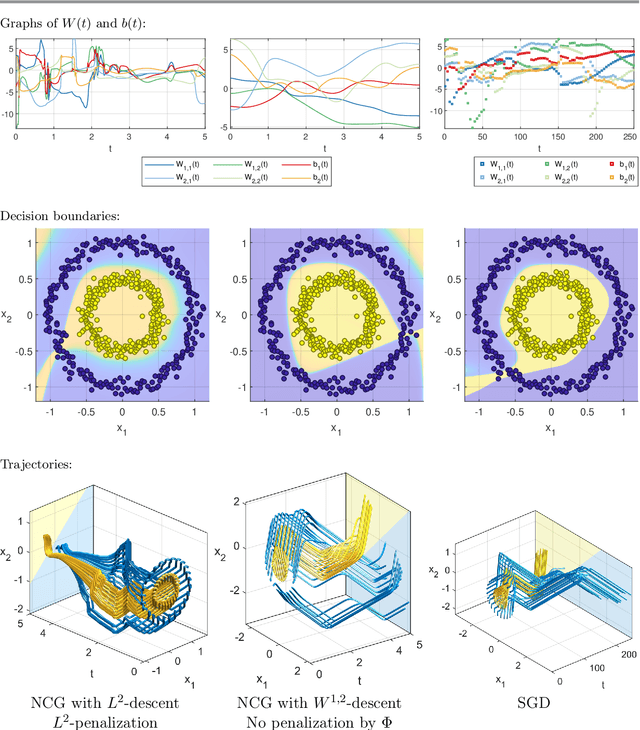

Abstract:The inverse problem of supervised reconstruction of depth-variable (time-dependent) parameters in a neural ordinary differential equation (NODE) is considered, that is, finding the weights of a residual network with time-continuous layers. The NODE is treated as an isolated entity describing the full network as opposed to earlier research, which embedded it between pre- and post-appended layers trained by conventional methods. The proposed parameter reconstruction is done for a general first order differential equation by minimizing a cost functional covering a variety of loss functions and penalty terms. A nonlinear conjugate gradient method (NCG) is derived for the minimization. Mathematical properties are stated for the differential equation and the cost functional. The adjoint problem needed is derived together with a sensitivity problem. The sensitivity problem can estimate changes in the network output under perturbation of the trained parameters. To preserve smoothness during the iterations, the Sobolev gradient is calculated and incorporated. As a proof-of-concept, numerical results are included for a NODE and two synthetic datasets, and compared with standard gradient approaches (not based on NODEs). The results show that the proposed method works well for deep learning with infinite numbers of layers, and has built-in stability and smoothness.

Learning Representations with Contrastive Self-Supervised Learning for Histopathology Applications

Dec 10, 2021

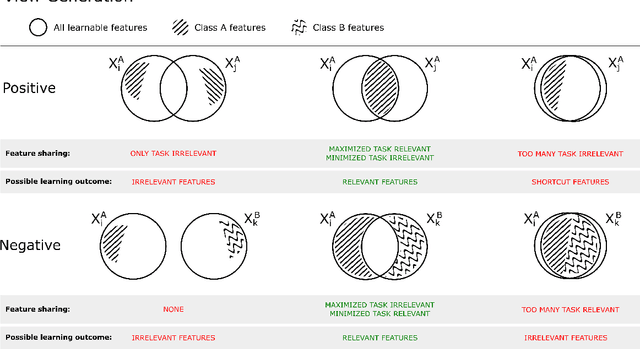

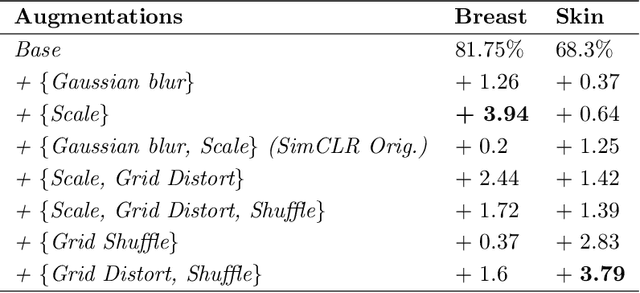

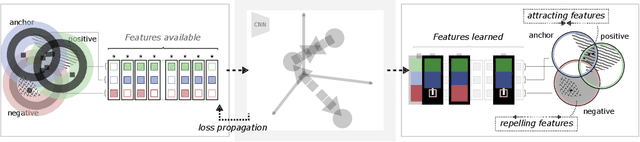

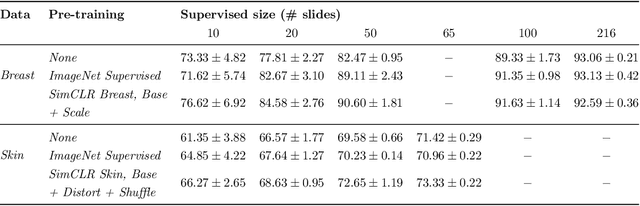

Abstract:Unsupervised learning has made substantial progress over the last few years, especially by means of contrastive self-supervised learning. The dominating dataset for benchmarking self-supervised learning has been ImageNet, for which recent methods are approaching the performance achieved by fully supervised training. The ImageNet dataset is, however, largely object-centric, and it is not clear yet what potential those methods have on widely different datasets and tasks that are not object-centric, such as in digital pathology. While self-supervised learning has started to be explored within this area with encouraging results, there is reason to look closer at how this setting differs from natural images and ImageNet. In this paper we make an in-depth analysis of contrastive learning for histopathology, pinpointing how the contrastive objective will behave differently due to the characteristics of histopathology data. We bring forward a number of considerations, such as view generation for the contrastive objective and hyper-parameter tuning. In a large battery of experiments, we analyze how the downstream performance in tissue classification will be affected by these considerations. The results point to how contrastive learning can reduce the annotation effort within digital pathology, but that the specific dataset characteristics need to be considered. To take full advantage of the contrastive learning objective, different calibrations of view generation and hyper-parameters are required. Our results pave the way for realizing the full potential of self-supervised learning for histopathology applications.

Primary Tumor and Inter-Organ Augmentations for Supervised Lymph Node Colon Adenocarcinoma Metastasis Detection

Sep 17, 2021

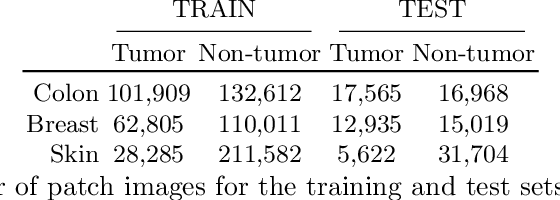

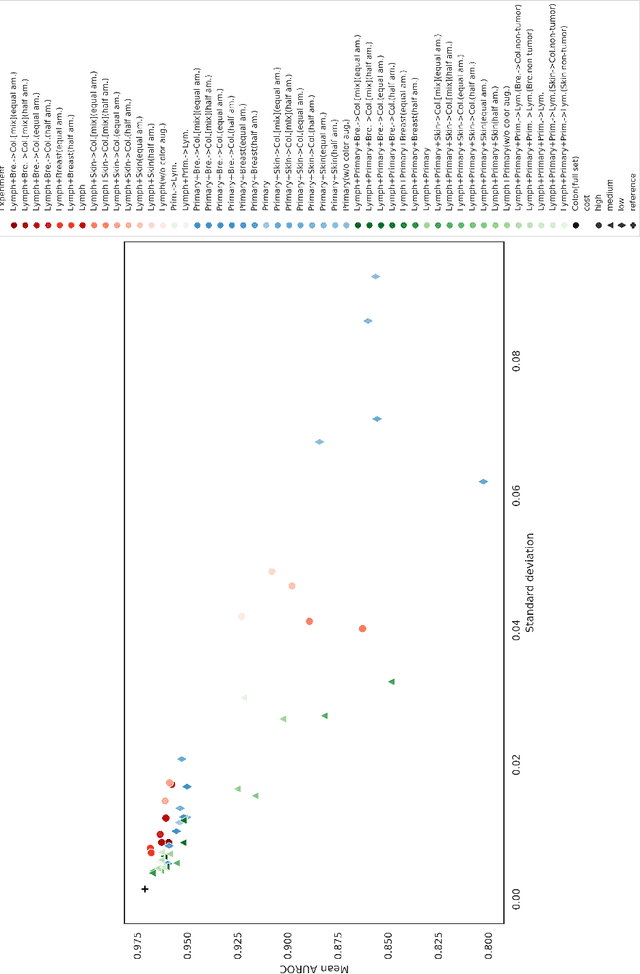

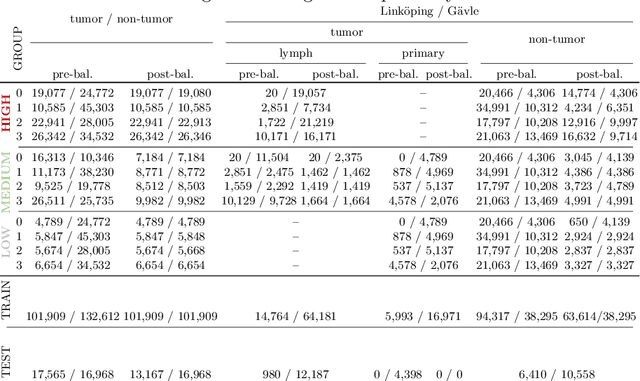

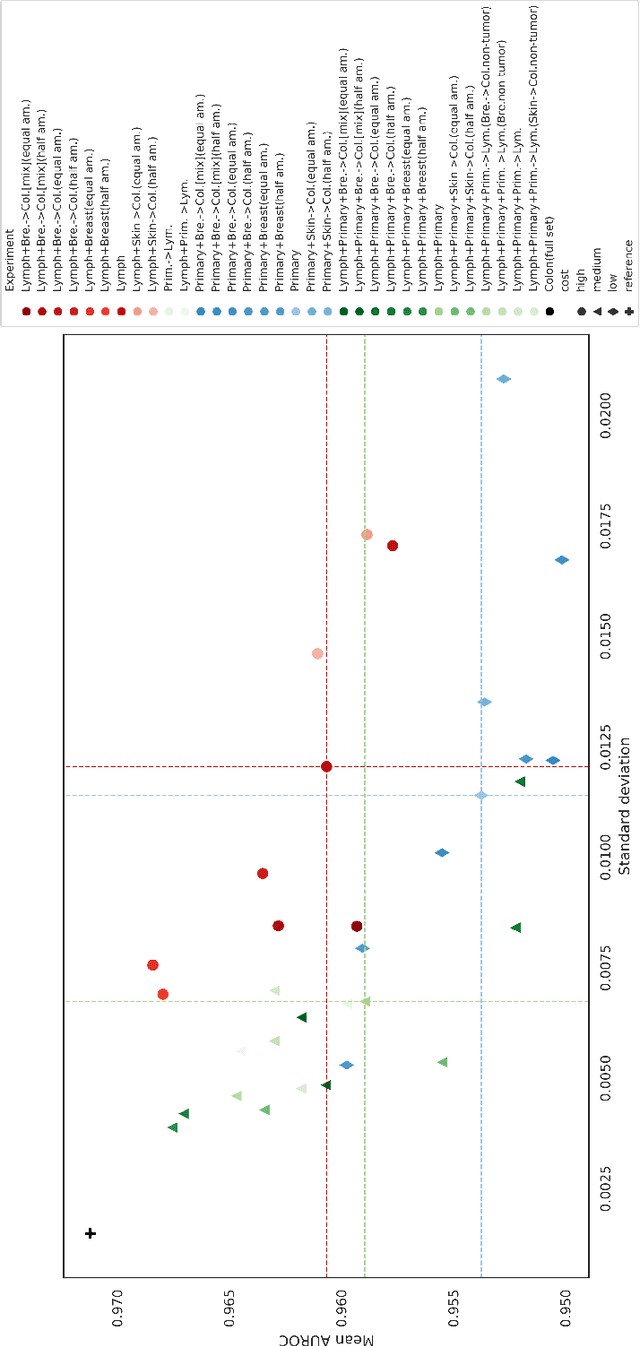

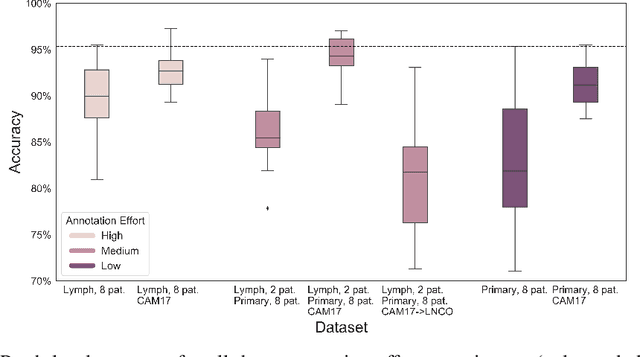

Abstract:The scarcity of labeled data is a major bottleneck for developing accurate and robust deep learning-based models for histopathology applications. The problem is notably prominent for the task of metastasis detection in lymph nodes, due to the tissue's low tumor-to-non-tumor ratio, resulting in labor- and time-intensive annotation processes for the pathologists. This work explores alternatives on how to augment the training data for colon carcinoma metastasis detection when there is limited or no representation of the target domain. Through an exhaustive study of cross-validated experiments with limited training data availability, we evaluate both an inter-organ approach utilizing already available data for other tissues, and an intra-organ approach, utilizing the primary tumor. Both these approaches result in little to no extra annotation effort. Our results show that these data augmentation strategies can be an efficient way of increasing accuracy on metastasis detection, but foremost increase robustness.

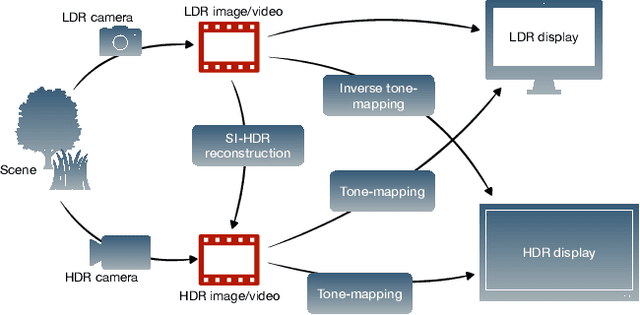

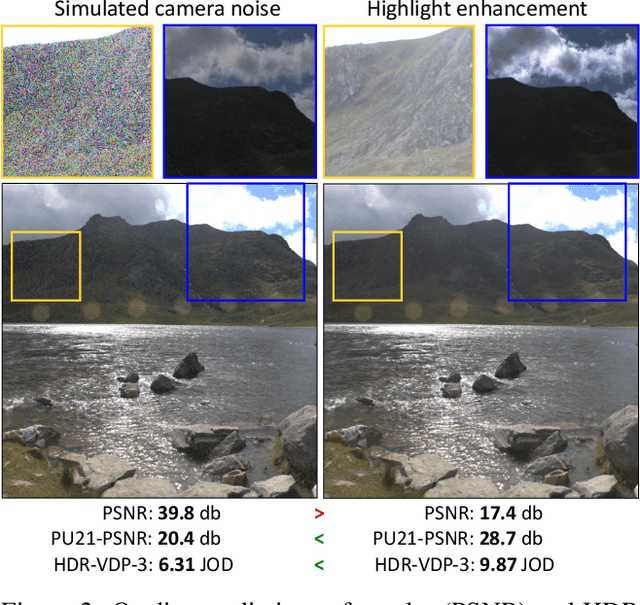

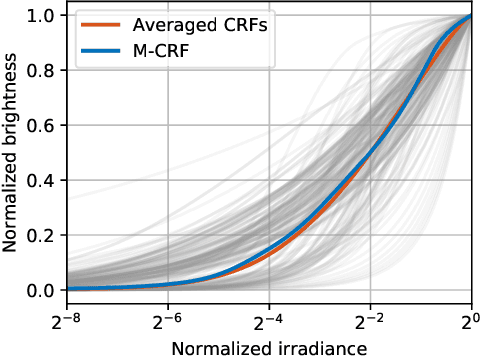

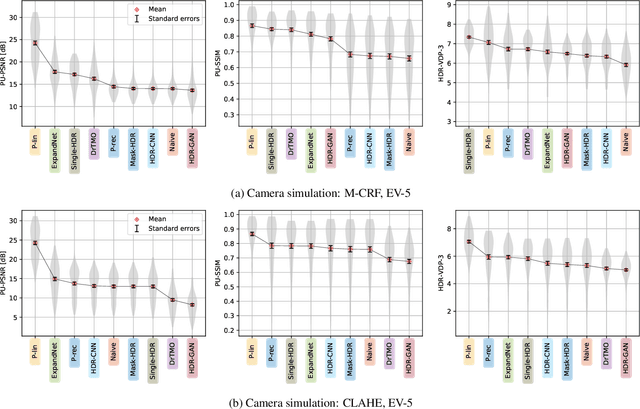

How to cheat with metrics in single-image HDR reconstruction

Aug 19, 2021

Abstract:Single-image high dynamic range (SI-HDR) reconstruction has recently emerged as a problem well-suited for deep learning methods. Each successive technique demonstrates an improvement over existing methods by reporting higher image quality scores. This paper, however, highlights that such improvements in objective metrics do not necessarily translate to visually superior images. The first problem is the use of disparate evaluation conditions in terms of data and metric parameters, calling for a standardized protocol to make it possible to compare between papers. The second problem, which forms the main focus of this paper, is the inherent difficulty in evaluating SI-HDR reconstructions since certain aspects of the reconstruction problem dominate objective differences, thereby introducing a bias. Here, we reproduce a typical evaluation using existing as well as simulated SI-HDR methods to demonstrate how different aspects of the problem affect objective quality metrics. Surprisingly, we found that methods that do not even reconstruct HDR information can compete with state-of-the-art deep learning methods. We show how such results are not representative of the perceived quality and that SI-HDR reconstruction needs better evaluation protocols.

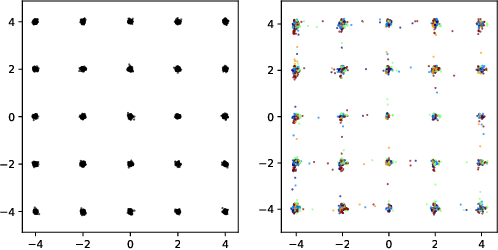

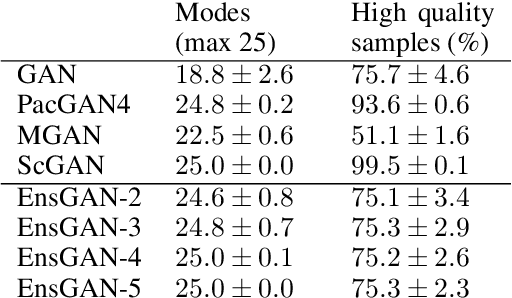

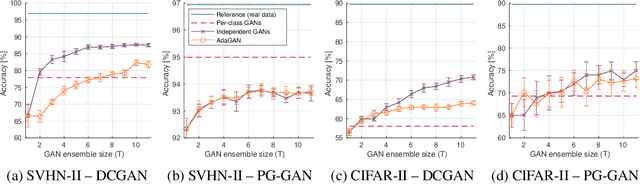

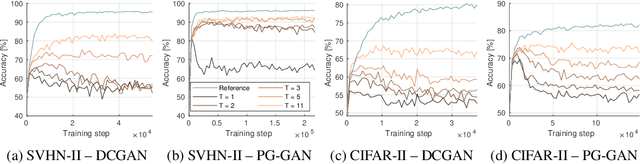

Ensembles of GANs for synthetic training data generation

Apr 23, 2021

Abstract:Insufficient training data is a major bottleneck for most deep learning practices, not least in medical imaging where data is difficult to collect and publicly available datasets are scarce due to ethics and privacy. This work investigates the use of synthetic images, created by generative adversarial networks (GANs), as the only source of training data. We demonstrate that for this application, it is of great importance to make use of multiple GANs to improve the diversity of the generated data, i.e. to sufficiently cover the data distribution. While a single GAN can generate seemingly diverse image content, training on this data in most cases leads to severe over-fitting. We test the impact of ensembled GANs on synthetic 2D data as well as common image datasets (SVHN and CIFAR-10), using both DCGANs and progressively growing GANs. As a specific use case, we focus on synthesizing digital pathology patches to provide anonymized training data.

A Study of Deep Learning Colon Cancer Detection in Limited Data Access Scenarios

May 22, 2020

Abstract:Digitization of histopathology slides has led to several advances, from easy data sharing and collaborations to the development of digital diagnostic tools. Deep learning (DL) methods for classification and detection have shown great potential, but often require large amounts of training data that are hard to collect and annotate. For many cancer types, the scarceness of data creates barriers for training DL models. One such scenario relates to detecting tumor metastasis in lymph node tissue, where the low ratio of tumor to non-tumor cells makes the diagnostic task hard and time-consuming. DL-based tools can allow faster diagnosis, with potentially increased quality. Unfortunately, due to the sparsity of tumor cells, annotating this type of data demands a high level of effort from pathologists. Using weak annotations from slide-level images has shown great potential, but demands access to a substantial amount of data as well. In this study, we investigate mitigation strategies for limited data access scenarios. Particularly, we address whether it is possible to exploit mutual structure between tissues to develop general techniques, wherein data from one type of cancer in a particular tissue could have diagnostic value for other cancers in other tissues. Our case is exemplified by a DL model for metastatic colon cancer detection in lymph nodes. Could such a model be trained with little or even no lymph node data? As alternative data sources, we investigate 1) tumor cells taken from the primary colon tumor tissue, and 2) cancer data from a different organ (breast), either as is or transformed to the target domain (colon) using Cycle-GANs. We show that the suggested approaches make it possible to detect cancer metastasis with no or very little lymph node data, opening up the possibility that existing, annotated histopathology data could generalize to other domains.

Classifying the classifier: dissecting the weight space of neural networks

Feb 13, 2020

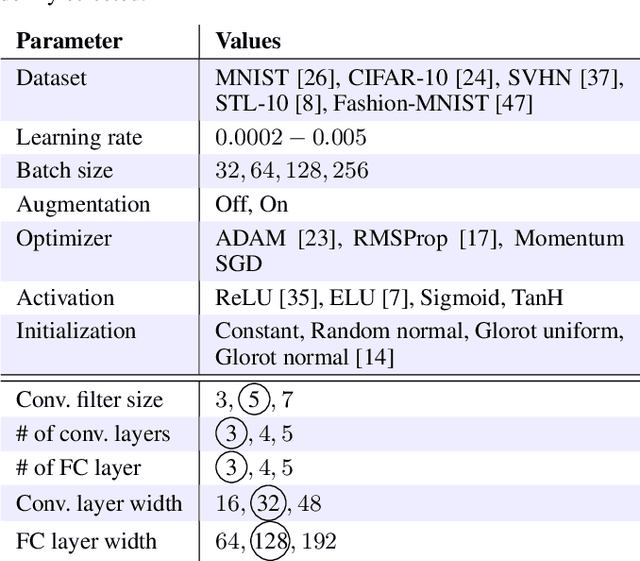

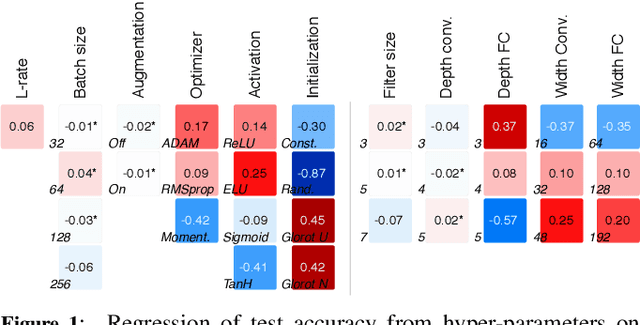

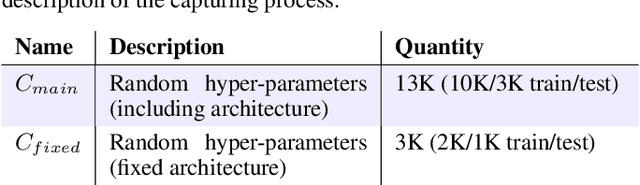

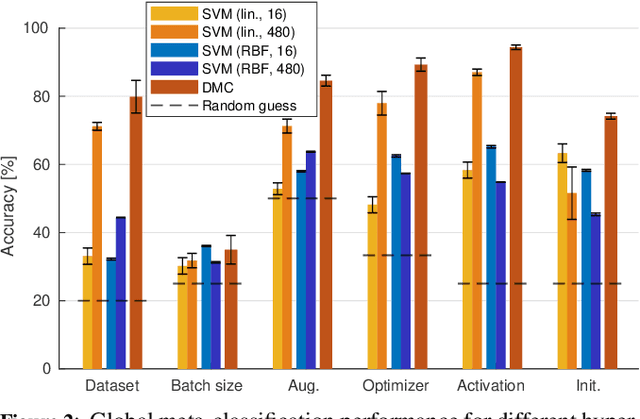

Abstract:This paper presents an empirical study on the weights of neural networks, where we interpret each model as a point in a high-dimensional space -- the neural weight space. To explore the complex structure of this space, we sample from a diverse selection of training variations (dataset, optimization procedure, architecture, etc.) of neural network classifiers, and train a large number of models to represent the weight space. Then, we use a machine learning approach for analyzing and extracting information from this space. Most centrally, we train a number of novel deep meta-classifiers with the objective of classifying different properties of the training setup by identifying their footprints in the weight space. Thus, the meta-classifiers probe for patterns induced by hyper-parameters, so that we can quantify how much, where, and when these are encoded through the optimization process. This provides a novel and complementary view for explainable AI, and we show how meta-classifiers can reveal a great deal of information about the training setup and optimization, by only considering a small subset of randomly selected consecutive weights. To promote further research on the weight space, we release the neural weight space (NWS) dataset -- a collection of 320K weight snapshots from 16K individually trained deep neural networks.
