Jixiang Qing

The Catechol Benchmark: Time-series Solvent Selection Data for Few-shot Machine Learning

Jun 09, 2025

Abstract: Machine learning has promised to change the landscape of laboratory chemistry, with impressive results in molecular property prediction and reaction retro-synthesis. However, chemical datasets are often inaccessible to the machine learning community as they tend to require cleaning, thorough understanding of the chemistry, or are simply not available. In this paper, we introduce a novel dataset for yield prediction, providing the first-ever transient flow dataset for machine learning benchmarking, covering over 1200 process conditions. While previous datasets focus on discrete parameters, our experimental setup allows us to sample a large number of continuous process conditions, generating new challenges for machine learning models. We focus on solvent selection, a task that is particularly difficult to model theoretically and therefore ripe for machine learning applications. We showcase benchmarking for regression algorithms, transfer-learning approaches, feature engineering, and active learning, with important applications towards solvent replacement and sustainable manufacturing.

Global optimization of graph acquisition functions for neural architecture search

May 29, 2025

Abstract: Graph Bayesian optimization (BO) has shown potential as a powerful and data-efficient tool for neural architecture search (NAS). Most existing graph BO works focus on developing graph surrogate models, i.e., metrics of networks and/or different kernels to quantify the similarity between networks. However, the acquisition optimization, as a discrete optimization task over graph structures, is not well studied due to the complexity of formulating the graph search space and acquisition functions. This paper presents explicit optimization formulations for the graph input space, including properties such as reachability and shortest paths, which are used later to formulate graph kernels and the acquisition function. We theoretically prove that the proposed encoding is an equivalent representation of the graph space and provide restrictions for the NAS domain with either node or edge labels. Numerical results over several NAS benchmarks show that our method efficiently finds the optimal architecture in most cases, highlighting its efficacy.

System-Aware Neural ODE Processes for Few-Shot Bayesian Optimization

Jun 04, 2024

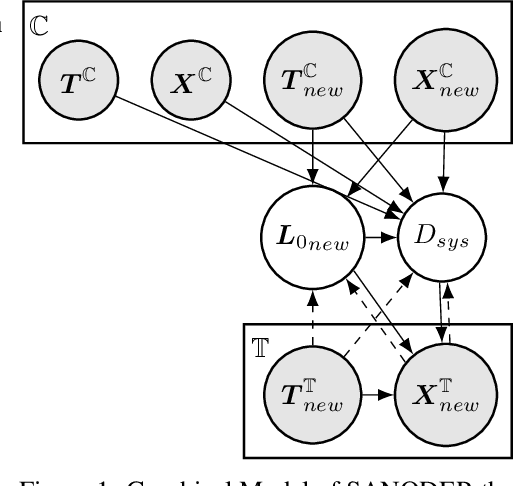

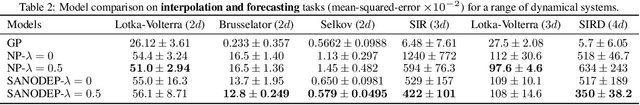

Abstract: We consider the problem of optimizing initial conditions and timing in dynamical systems governed by unknown ordinary differential equations (ODEs), where evaluating different initial conditions is costly and there are constraints on observation times. To identify the optimal conditions within several trials, we introduce a few-shot Bayesian Optimization (BO) framework based on the system's prior information. At the core of our approach is the System-Aware Neural ODE Processes (SANODEP), an extension of Neural ODE Processes (NODEP) designed to meta-learn ODE systems from multiple trajectories using a novel context embedding block. Additionally, we propose a multi-scenario loss function specifically for optimization purposes. Our two-stage BO framework effectively incorporates search space constraints, enabling efficient optimization of both initial conditions and observation timings. We conduct extensive experiments showcasing SANODEP's potential for few-shot BO. We also explore SANODEP's adaptability to varying levels of prior information, highlighting the trade-off between prior flexibility and model fitting accuracy.

Trieste: Efficiently Exploring The Depths of Black-box Functions with TensorFlow

Feb 16, 2023

Abstract: We present Trieste, an open-source Python package for Bayesian optimization and active learning benefiting from the scalability and efficiency of TensorFlow. Our library enables the plug-and-play of popular TensorFlow-based models within sequential decision-making loops, e.g. Gaussian processes from GPflow or GPflux, or neural networks from Keras. This modular mindset is central to the package and extends to our acquisition functions and the internal dynamics of the decision-making loop, both of which can be tailored and extended by researchers or engineers when tackling custom use cases. Trieste is a research-friendly and production-ready toolkit backed by a comprehensive test suite and extensive documentation, and is available at https://github.com/secondmind-labs/trieste.

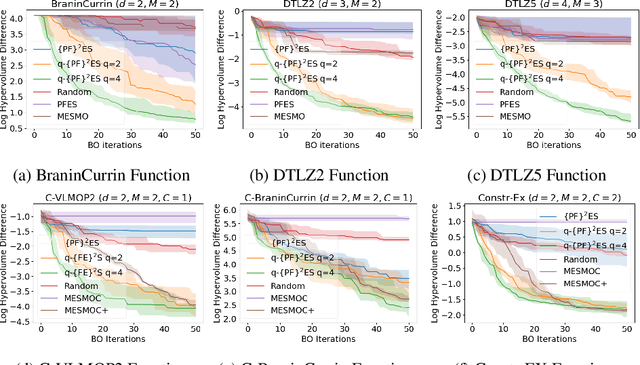

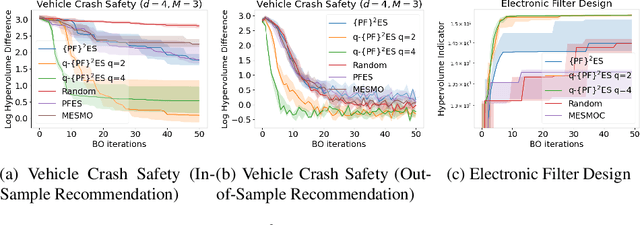

$\{\text{PF}\}^2\text{ES}$: Parallel Feasible Pareto Frontier Entropy Search for Multi-Objective Bayesian Optimization Under Unknown Constraints

Apr 11, 2022

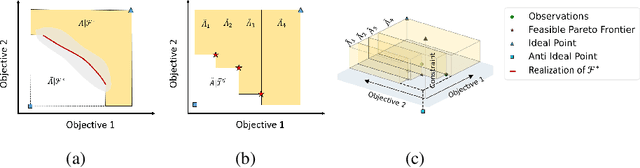

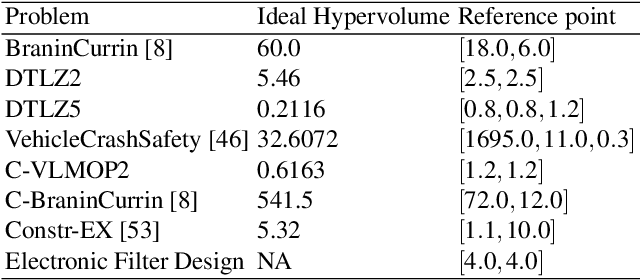

Abstract: We present Parallel Feasible Pareto Frontier Entropy Search ($\{\text{PF}\}^2$ES), a novel information-theoretic acquisition function for multi-objective Bayesian optimization. Although information-theoretic approaches regularly provide state-of-the-art optimization, they are not yet widely used in the context of constrained multi-objective optimization. Due to the complexity of characterizing mutual information between candidate evaluations and (feasible) Pareto frontiers, existing approaches must employ severe approximations that significantly hamper their performance. By instead using a variational lower bound, $\{\text{PF}\}^2$ES provides a low-cost and accurate estimate of the mutual information for the parallel setting (where multiple evaluations must be chosen for each optimization step). Moreover, we are able to interpret our proposed acquisition function by exploring direct links with other popular multi-objective acquisition functions. We benchmark $\{\text{PF}\}^2$ES on synthetic and real-world problems, including vehicle and electronic filter design, demonstrating its competitive performance for batch optimization.
