Jiming Liu

Learning Continuous Network Emerging Dynamics from Scarce Observations via Data-Adaptive Stochastic Processes

Oct 25, 2023

Abstract:Learning network dynamics from the empirical structure and spatio-temporal observation data is crucial to revealing the interaction mechanisms of complex networks in a wide range of domains. However, most existing methods only aim at learning network dynamic behaviors generated by a specific ordinary differential equation instance, which makes them ineffective for new ones, and generally require dense observations. The observed data, especially from network emerging dynamics, are usually difficult to obtain, which complicates model learning. Therefore, how to learn accurate network dynamics with sparse, irregularly-sampled, partial, and noisy observations remains a fundamental challenge. We introduce Neural ODE Processes for Network Dynamics (NDP4ND), a new class of stochastic processes governed by stochastic data-adaptive network dynamics, to overcome the challenge and learn continuous network dynamics from scarce observations. Extensive experiments conducted on various network dynamics in ecological population evolution, phototaxis movement, brain activity, epidemic spreading, and real-world empirical systems demonstrate that the proposed method has excellent data adaptability and computational efficiency, and can adapt to unseen network emerging dynamics, producing accurate interpolation and extrapolation while reducing the ratio of required observation data to only about 6% and improving the learning speed for new dynamics by three orders of magnitude.

Machine Learning for Infectious Disease Risk Prediction: A Survey

Aug 06, 2023

Abstract:Infectious diseases, either emerging or long-lasting, place numerous people at risk and bring heavy public health burdens worldwide. In the fight against infectious diseases, predicting the epidemic risk by modeling the disease transmission plays an essential role in preventing and controlling disease transmission more effectively. In this paper, we systematically describe how machine learning can play an essential role in quantitatively characterizing disease transmission patterns and accurately predicting infectious disease risks. First, we introduce the background and motivation of using machine learning for infectious disease risk prediction. Next, we describe the development and components of various machine learning models for infectious disease risk prediction. Specifically, existing models fall into three categories: statistical prediction, data-driven machine learning, and epidemiology-inspired machine learning. Subsequently, we discuss challenges encountered when dealing with model inputs, designing task-oriented objectives, and conducting performance evaluation. Finally, we conclude with a discussion of open questions and future directions.

Decadal Temperature Prediction via Chaotic Behavior Tracking

Apr 19, 2023

Abstract:Decadal temperature prediction provides crucial information for quantifying the expected effects of future climate changes and thus informs strategic planning and decision-making in various domains. However, such long-term predictions are extremely challenging, due to the chaotic nature of temperature variations. Moreover, the usefulness of existing simulation-based and machine learning-based methods for this task is limited because initial simulation or prediction errors increase exponentially over time. To address this challenging task, we devise a novel prediction method involving an information tracking mechanism that aims to track and adapt to changes in temperature dynamics during the prediction phase by providing probabilistic feedback on the prediction error of the next step based on the current prediction. We integrate this information tracking mechanism, which can be considered as a model calibrator, into the objective function of our method to obtain the corrections needed to avoid error accumulation. Our results show the ability of our method to accurately predict global land-surface temperatures over a decadal range. Furthermore, we demonstrate that our results are meaningful in a real-world context: the temperatures predicted using our method are consistent with and can be used to explain the well-known teleconnections within and between different continents.

Demystifying Deep Learning in Predictive Spatio-Temporal Analytics: An Information-Theoretic Framework

Sep 17, 2020

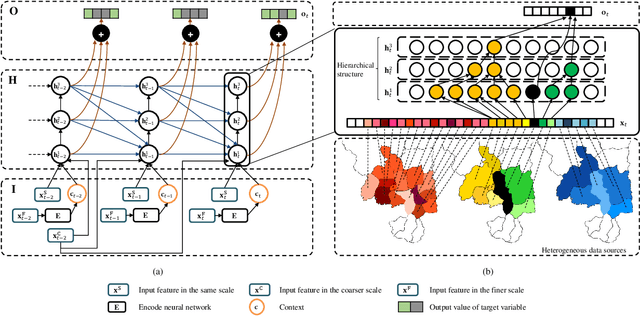

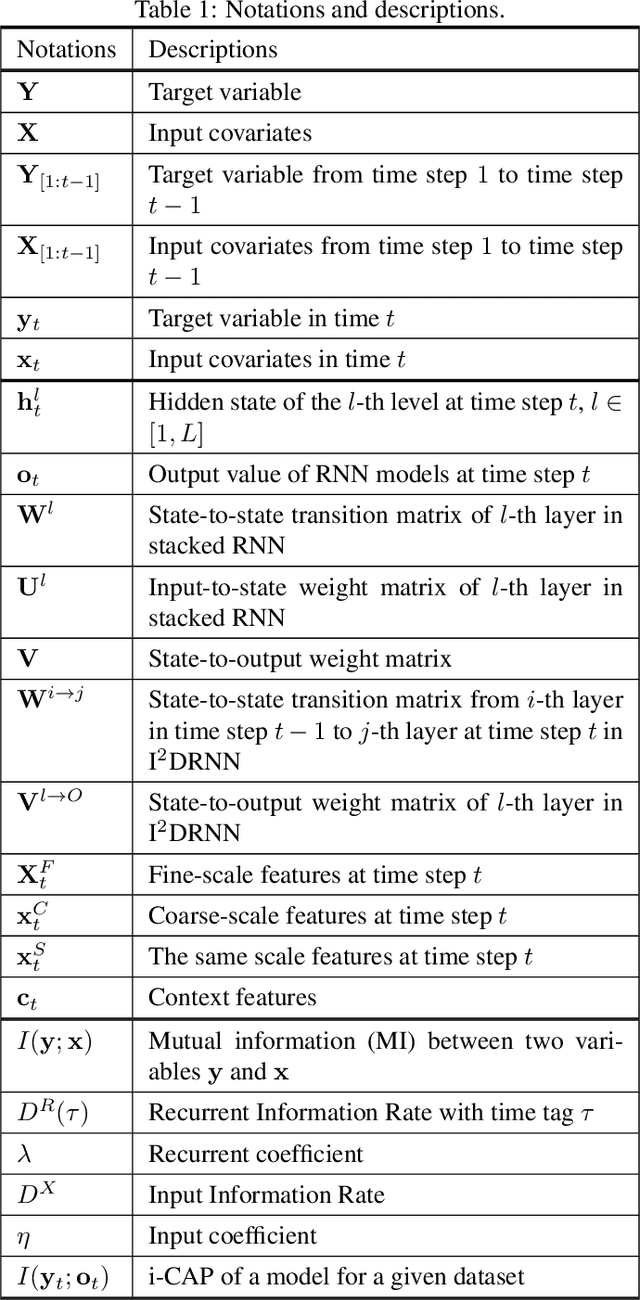

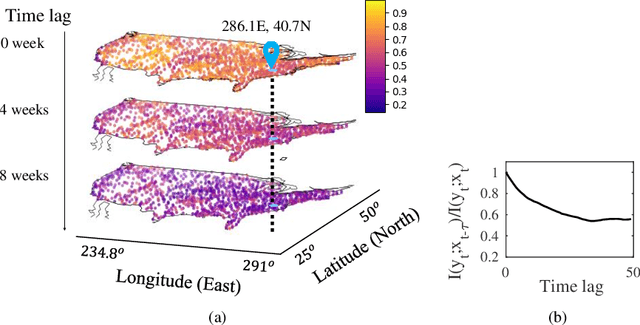

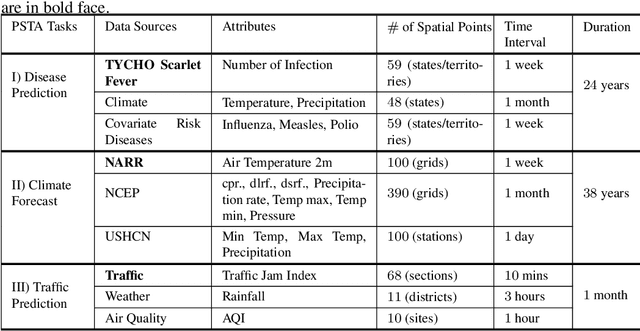

Abstract:Deep learning has achieved incredible success over the past years, especially in various challenging predictive spatio-temporal analytics (PSTA) tasks, such as disease prediction, climate forecast, and traffic prediction, where intrinsic dependency relationships among data exist and generally manifest at multiple spatio-temporal scales. However, given a specific PSTA task and the corresponding dataset, how to appropriately determine the desired configuration of a deep learning model, theoretically analyze the model's learning behavior, and quantitatively characterize the model's learning capacity remains a mystery. In order to demystify the power of deep learning for PSTA, in this paper, we provide a comprehensive framework for deep learning model design and information-theoretic analysis. First, we develop and demonstrate a novel interactively- and integratively-connected deep recurrent neural network (I$^2$DRNN) model. I$^2$DRNN consists of three modules: an Input module that integrates data from heterogeneous sources; a Hidden module that captures the information at different scales while allowing the information to flow interactively between layers; and an Output module that models the integrative effects of information from various hidden layers to generate the output predictions. Second, to theoretically prove that our designed model can learn multi-scale spatio-temporal dependency in PSTA tasks, we provide an information-theoretic analysis to examine the information-based learning capacity (i-CAP) of the proposed model. Third, to validate the I$^2$DRNN model and confirm its i-CAP, we systematically conduct a series of experiments involving both synthetic datasets and real-world PSTA tasks. The experimental results show that the I$^2$DRNN model outperforms both classical and state-of-the-art models, and is able to capture meaningful multi-scale spatio-temporal dependency.

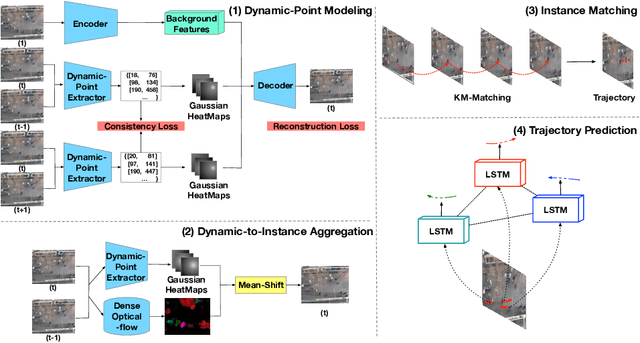

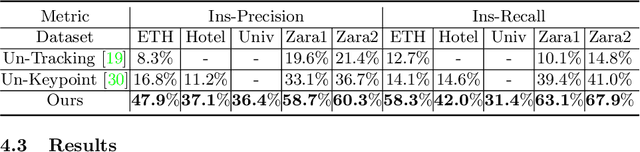

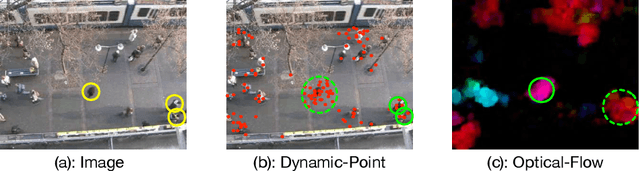

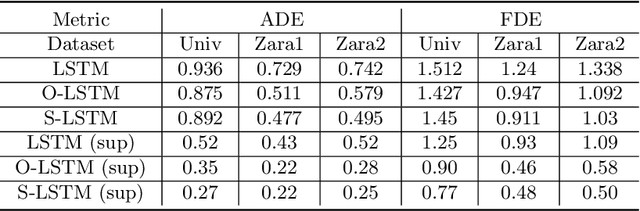

AutoTrajectory: Label-free Trajectory Extraction and Prediction from Videos using Dynamic Points

Jul 11, 2020

Abstract:Current methods for trajectory prediction operate in a supervised manner, and therefore require vast quantities of corresponding ground truth data for training. In this paper, we present a novel, label-free algorithm, AutoTrajectory, for trajectory extraction and prediction that uses raw videos directly. To better capture the moving objects in videos, we introduce dynamic points. We use them to model dynamic motions in an unsupervised manner, using a forward-backward extractor to keep temporal consistency and image reconstruction to keep spatial consistency. Then we aggregate dynamic points to instance points, which stand for moving objects such as pedestrians in videos. Finally, we extract trajectories by matching instance points for prediction training. To the best of our knowledge, our method is the first to achieve unsupervised learning of trajectory extraction and prediction. We evaluate the performance on well-known trajectory datasets and show that our method is effective for real-world videos and can use raw videos to further improve the performance of existing models.

Reinforcement Learning in Healthcare: A Survey

Aug 22, 2019

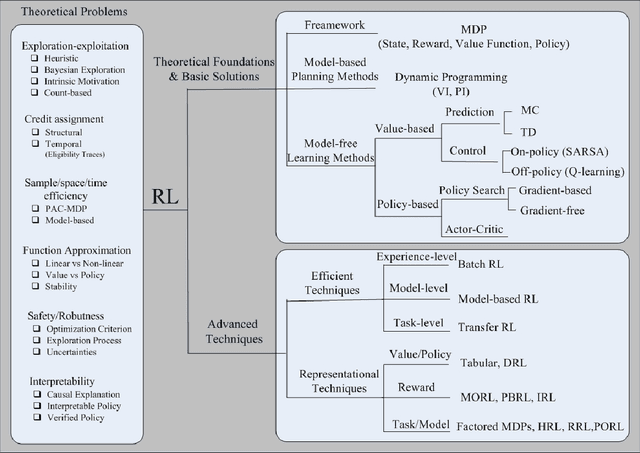

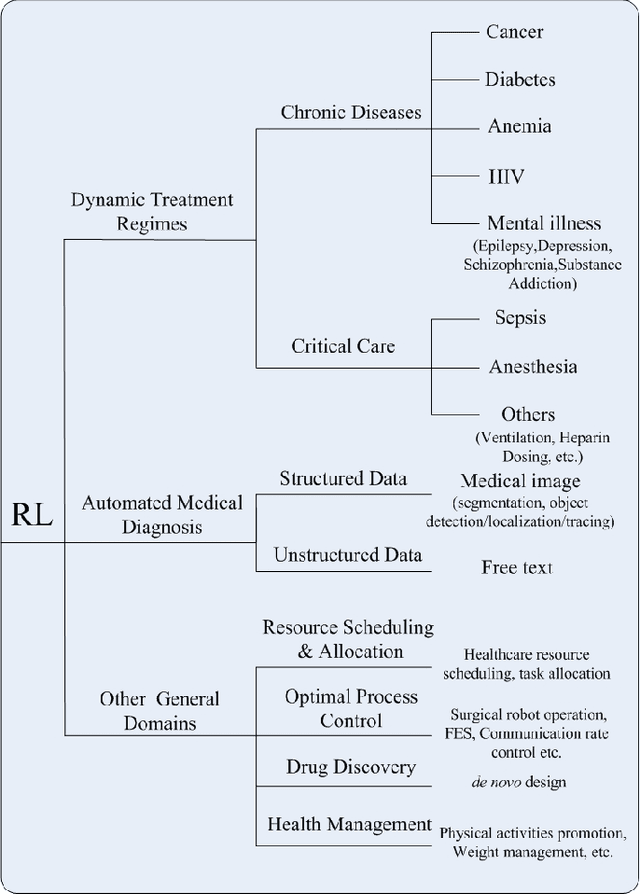

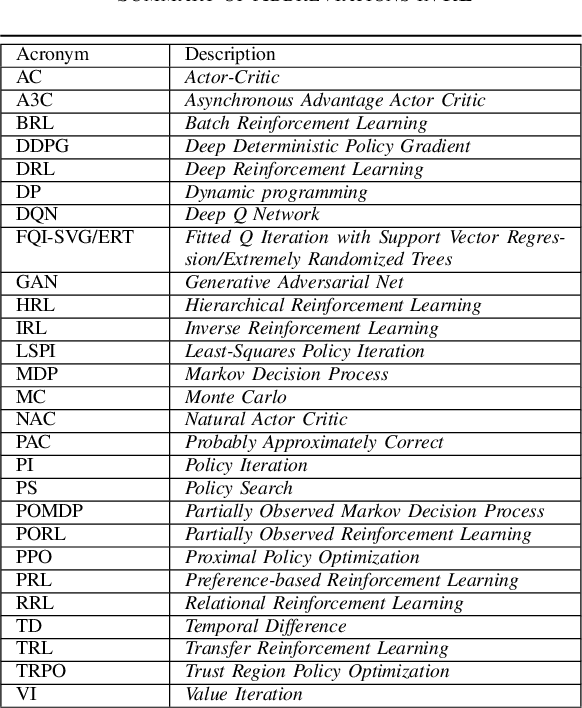

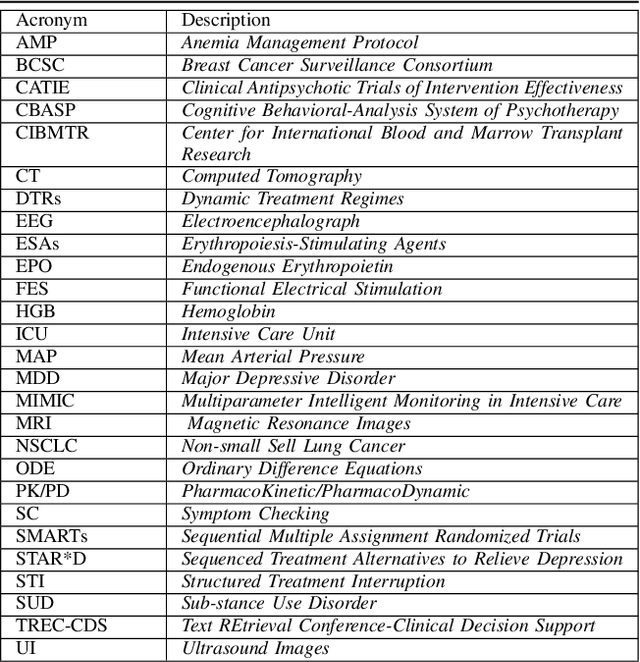

Abstract:As a subfield of machine learning, reinforcement learning (RL) aims at empowering one's capabilities in behavioural decision making by using interaction experience with the world and evaluative feedback. Unlike traditional supervised learning methods that usually rely on one-shot, exhaustive and supervised reward signals, RL tackles sequential decision making problems with sampled, evaluative and delayed feedback simultaneously. Such distinctive features make RL a suitable candidate for developing powerful solutions in a variety of healthcare domains, where diagnostic decisions or treatment regimes are usually characterized by a prolonged and sequential procedure. This survey discusses the broad applications of RL techniques in healthcare domains, in order to provide the research community with a systematic understanding of theoretical foundations, enabling methods and techniques, existing challenges, and new insights of this emerging paradigm. After briefly examining theoretical foundations and key techniques in RL research from efficient and representational directions, we provide an overview of RL applications in a variety of healthcare domains, ranging from dynamic treatment regimes in chronic diseases and critical care, to automated medical diagnosis from both unstructured and structured clinical data, to many other control or scheduling domains that have infiltrated many aspects of a healthcare system. Finally, we summarize the challenges and open issues in current research, and point out some potential solutions and directions for future research.

A Cooperative Group Optimization System

Aug 03, 2018

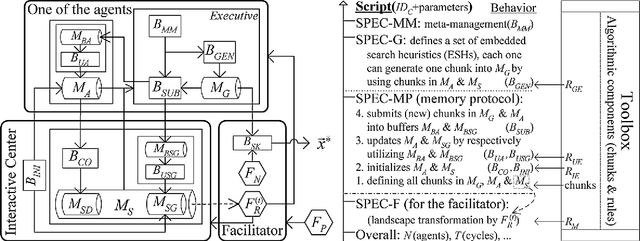

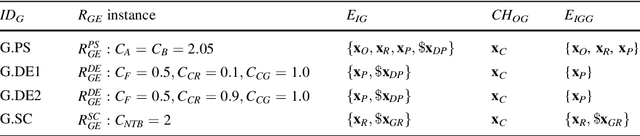

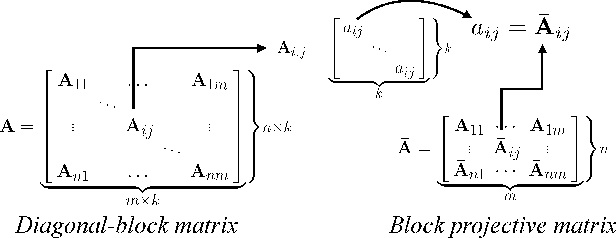

Abstract:A cooperative group optimization (CGO) system is presented to implement CGO cases by integrating the advantages of the cooperative group and low-level algorithm portfolio design. Following the nature-inspired paradigm of a cooperative group, the agents not only explore in a parallel way with their individual memory, but also cooperate with their peers through the group memory. Each agent holds a portfolio of (heterogeneous) embedded search heuristics (ESHs), in which each ESH can drive the group into a stand-alone CGO case, and hybrid CGO cases in an algorithmic space can be defined by low-level cooperative search among a portfolio of ESHs through customized memory sharing. The optimization process might also be facilitated by a passive group leader through encoding knowledge in the search landscape. Based on a concrete framework, CGO cases are defined by a script assembling over instances of algorithmic components in a toolbox. A multilayer design of the script, with the support of the inherent updatable graph in the memory protocol, enables a simple way to address the challenge of accumulating heterogeneous ESHs and defining customized portfolios without any additional code. The CGO system is implemented for solving the constrained optimization problem with some generic components and only a few domain-specific components. Guided by the insights from algorithm portfolio design, customized CGO cases based on basic search operators can achieve competitive performance over existing algorithms as compared on a set of commonly-used benchmark instances. This work might provide a basic step toward a user-oriented development framework, since the algorithmic space might be easily evolved by accumulating competent ESHs.

Group Sparse Bayesian Learning for Active Surveillance on Epidemic Dynamics

Nov 21, 2017

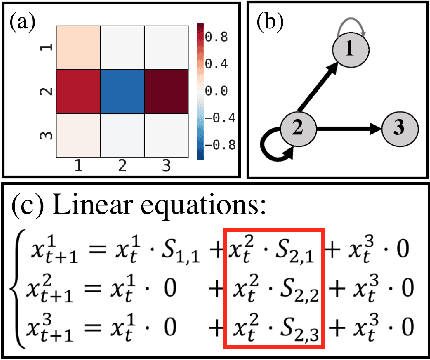

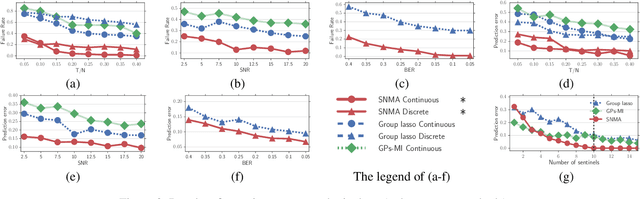

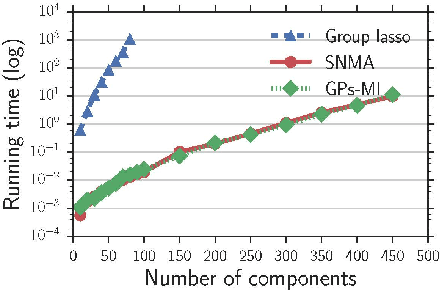

Abstract:Predicting epidemic dynamics is of great value in understanding and controlling diffusion processes, such as infectious disease spread and information propagation. This task is intractable, especially when surveillance resources are very limited. To address the challenge, we study the problem of active surveillance, i.e., how to identify a small portion of system components as sentinels to effect monitoring, such that the epidemic dynamics of an entire system can be readily predicted from the partial data collected by such sentinels. We propose a novel measure, the gamma value, to identify the sentinels by modeling a sentinel network with row sparsity structure. We design a flexible group sparse Bayesian learning algorithm to mine the sentinel network suitable for handling both linear and non-linear dynamical systems by using the expectation maximization method and variational approximation. The efficacy of the proposed algorithm is theoretically analyzed and empirically validated using both synthetic and real-world data.

Learning Topic Models by Belief Propagation

Mar 24, 2012

Abstract:Latent Dirichlet allocation (LDA) is an important hierarchical Bayesian model for probabilistic topic modeling, which attracts worldwide interest and touches on many important applications in text mining, computer vision and computational biology. This paper represents LDA as a factor graph within the Markov random field (MRF) framework, which enables the classic loopy belief propagation (BP) algorithm for approximate inference and parameter estimation. Although two commonly-used approximate inference methods, namely variational Bayes (VB) and collapsed Gibbs sampling (GS), have gained great success in learning LDA, the proposed BP is competitive in both speed and accuracy as validated by encouraging experimental results on four large-scale document data sets. Furthermore, the BP algorithm has the potential to become a generic learning scheme for variants of LDA-based topic models. To this end, we show how to learn two typical variants of LDA-based topic models, namely author-topic models (ATM) and relational topic models (RTM), using BP based on the factor graph representation.

* 14 pages, 17 figures

Multiplex Structures: Patterns of Complexity in Real-World Networks

Sep 14, 2010

Abstract:Complex network theory aims to model and analyze complex systems that consist of multiple and interdependent components. Among all studies on complex networks, topological structure analysis is of the most fundamental importance, as it represents a natural route to understand the dynamics, as well as to synthesize or optimize the functions, of networks. A broad spectrum of network structural patterns has been reported in the past decade, such as communities, multipartites, hubs, authorities, outliers, bow ties, and others. Here, we show that most individual real-world networks demonstrate multiplex structures. That is, a multitude of known or even unknown (hidden) patterns can coexist in the same network, and moreover they may overlap and nest with each other to collaboratively form a heterogeneous, nested or hierarchical organization, in which different connective phenomena can be observed at different granular levels. In addition, we show that the multiplex structures hidden in exploratory networks can be well defined as well as effectively recognized within a unified framework consisting of a set of proposed concepts, models, and algorithms. Our findings provide strong evidence that most real-world complex systems are driven by a combination of heterogeneous mechanisms that may collaboratively shape the ubiquitous multiplex structures we currently observe. This work also contributes a mathematical tool for analyzing different sources of networks from a new perspective of unveiling multiplex structures, which will be beneficial to multiple disciplines including sociology, economics and computer science.
