Xiao-Feng Xie

Exploiting Problem Structure in Combinatorial Landscapes: A Case Study on Pure Mathematics Application

Dec 22, 2018

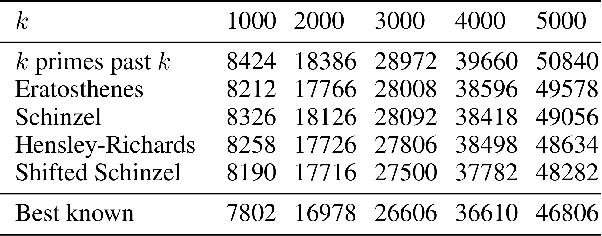

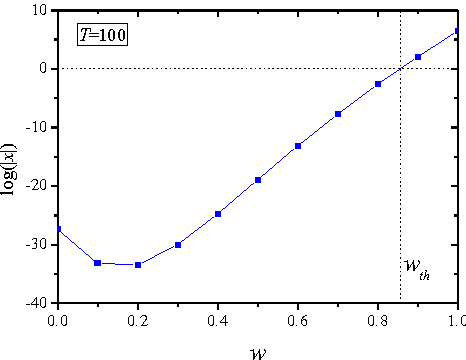

Abstract: In this paper, we present a method that uses AI techniques to solve a pure mathematics problem: finding narrow admissible tuples. The original problem is formulated as a combinatorial optimization problem. In particular, we show how to exploit the local search structure to formulate the problem landscape for dramatic reductions in search space and for non-trivial elimination of search barriers, and then to realize intelligent search strategies for effectively escaping from local minima. Experimental results demonstrate that the proposed method is able to efficiently find best known solutions. This research sheds light on exploiting the local problem structure for an efficient search in combinatorial landscapes as an application of AI to a new problem domain.

* 7 pages, 2 figures, conference
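For readers unfamiliar with the objective, the following minimal sketch shows the standard number-theoretic definition of an admissible tuple and its width. It is not the search method of the paper; the function names `primes_up_to`, `is_admissible`, and `diameter` are illustrative and not taken from the source.

```python
def primes_up_to(n):
    """Return all primes <= n using a simple sieve of Eratosthenes."""
    if n < 2:
        return []
    sieve = [True] * (n + 1)
    sieve[0] = sieve[1] = False
    for i in range(2, int(n ** 0.5) + 1):
        if sieve[i]:
            for j in range(i * i, n + 1, i):
                sieve[j] = False
    return [i for i, is_prime in enumerate(sieve) if is_prime]


def is_admissible(tuple_h):
    """A k-tuple is admissible if, for every prime p, its elements
    miss at least one residue class modulo p.

    Only primes p <= k need to be checked, because k integers can
    occupy at most k residue classes.
    """
    k = len(tuple_h)
    for p in primes_up_to(k):
        residues = {h % p for h in tuple_h}
        if len(residues) == p:  # every class mod p is hit
            return False
    return True


def diameter(tuple_h):
    """Width of the tuple; a 'narrow' tuple has a small diameter."""
    return max(tuple_h) - min(tuple_h)
```

Under this definition, `[0, 2, 6]` is admissible, while `[0, 2, 4]` is not because it covers all residues modulo 3. The optimization problem is then to minimize `diameter` over admissible tuples of a given length.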

Round-Table Group Optimization for Sequencing Problems

Aug 07, 2018

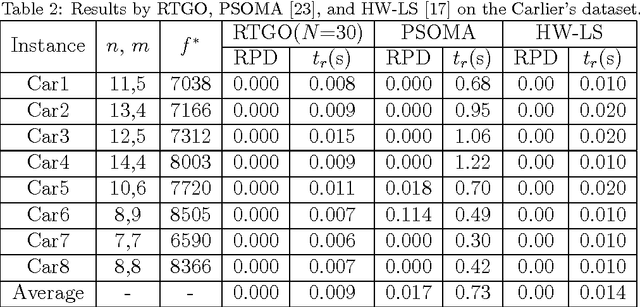

Abstract: In this paper, a round-table group optimization (RTGO) algorithm is presented. RTGO is a simple metaheuristic framework using the insights of research on group creativity. In a cooperative group, the agents work in iterative sessions to search for innovative ideas in a common problem landscape. Each agent has one base idea stored in its individual memory, and one social idea fed by a round-table group support mechanism in each session. The idea combination and improvement processes are respectively realized by using a recombination search (XS) strategy and a local search (LS) strategy, to build on the base and social ideas. RTGO is then implemented for solving two difficult sequencing problems, i.e., the flowshop scheduling problem and the quadratic assignment problem. The domain-specific LS strategies are adopted from existing algorithms, whereas a general XS class, called socially biased combination (SBX), is realized in a modular form. The performance of RTGO is then evaluated on commonly used benchmark datasets. Good performance on different problems can be achieved by RTGO using appropriate SBX operators. Furthermore, RTGO is able to outperform some existing methods, including methods using the same LS strategies.

A Cooperative Group Optimization System

Aug 03, 2018

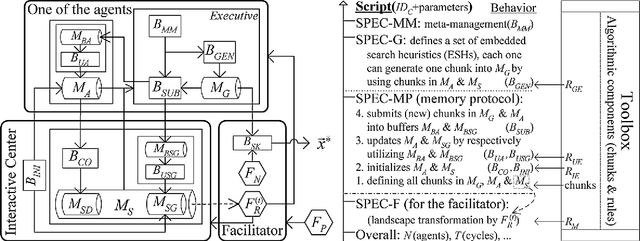

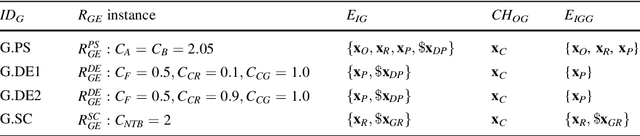

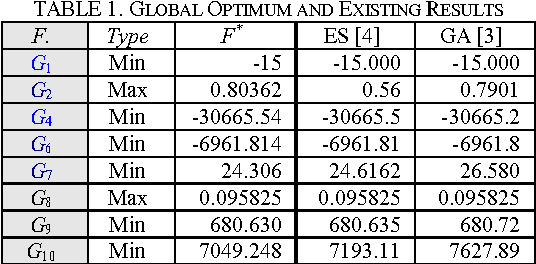

Abstract: A cooperative group optimization (CGO) system is presented to implement CGO cases by integrating the advantages of the cooperative group and low-level algorithm portfolio design. Following the nature-inspired paradigm of a cooperative group, the agents not only explore in a parallel way with their individual memory, but also cooperate with their peers through the group memory. Each agent holds a portfolio of (heterogeneous) embedded search heuristics (ESHs), in which each ESH can drive the group into a stand-alone CGO case, and hybrid CGO cases in an algorithmic space can be defined by low-level cooperative search among a portfolio of ESHs through customized memory sharing. The optimization process might also be facilitated by a passive group leader through encoding knowledge in the search landscape. Based on a concrete framework, CGO cases are defined by a script that assembles instances of algorithmic components in a toolbox. A multilayer design of the script, with the support of the inherent updatable graph in the memory protocol, enables a simple way to address the challenge of accumulating heterogeneous ESHs and defining customized portfolios without any additional code. The CGO system is implemented for solving the constrained optimization problem with some generic components and only a few domain-specific components. Guided by the insights from algorithm portfolio design, customized CGO cases based on basic search operators can achieve competitive performance compared with existing algorithms on a set of commonly used benchmark instances. This work might provide a basic step toward a user-oriented development framework, since the algorithmic space might be easily evolved by accumulating competent ESHs.

Cooperative Group Optimization with Ants (CGO-AS): Leverage Optimization with Mixed Individual and Social Learning

Aug 01, 2018

Abstract: We present CGO-AS, a generalized Ant System (AS) implemented in the framework of Cooperative Group Optimization (CGO), to show how optimization can be leveraged with mixed individual and social learning. An ant colony is a simple yet efficient natural system for understanding the effects of primary intelligence on optimization. However, existing AS algorithms mostly focus on their capability of using social heuristic cues while ignoring individual learning. CGO can integrate the advantages of a cooperative group and a low-level algorithm portfolio design, and the agents of CGO can explore both individual and social search. In CGO-AS, each ant (agent) is given an individual memory, and is implemented with a novel search strategy that uses individual and social cues in a controlled proportion. The presented CGO-AS is therefore especially useful in exposing the power of mixed individual and social learning for improving optimization. The optimization performance is tested with instances of the Traveling Salesman Problem (TSP). The results show that a cooperative ant group using both individual and social learning obtains better performance than systems using solely individual or solely social learning. The best performance is achieved when agents use individual memory as their primary information source and simultaneously use social memory as their search guidance. In comparison with existing AS systems, CGO-AS retains a faster learning speed toward higher-quality solutions, especially in the later learning cycles. The leverage in optimization by CGO-AS is most likely due to its inherent feature of adaptively maintaining the population diversity in the individual memory of agents, and of accelerating the learning process with accumulated knowledge in the social memory.

A dissipative particle swarm optimization

May 27, 2005

Abstract: A dissipative particle swarm optimization is developed according to the self-organization of dissipative structures. Negative entropy is introduced to construct an open dissipative system that is far from equilibrium, so as to drive the irreversible evolution process toward better fitness. Testing on two multimodal functions indicates that it improves performance effectively.

SWAF: Swarm Algorithm Framework for Numerical Optimization

May 25, 2005

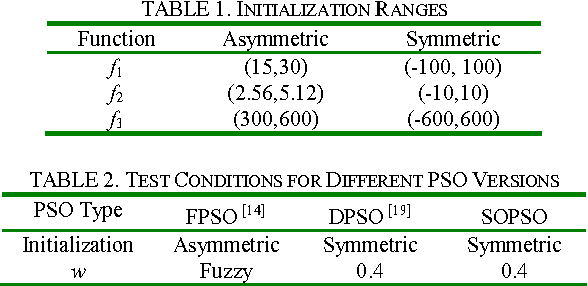

Abstract: A swarm algorithm framework (SWAF), realized by agent-based modeling, is presented to solve numerical optimization problems. Each agent is a bare-bones cognitive architecture, which learns knowledge by appropriately deploying a set of simple rules in fast and frugal heuristics. Two essential categories of rules, the generate-and-test rules and the problem-formulation rules, are implemented, and macro rules formed by simple combination and by subsymbolic deployment of multiple rules are also studied. Experimental results on benchmark problems are presented, and a performance comparison between SWAF and other existing algorithms indicates that it is efficient.

Handling boundary constraints for numerical optimization by particle swarm flying in periodic search space

May 25, 2005

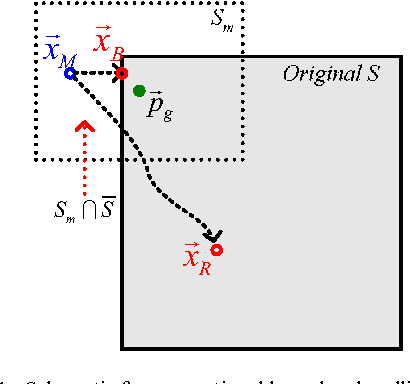

Abstract: The periodic mode is analyzed together with two conventional boundary-handling modes for particle swarm. By providing an infinite space that comprises periodic copies of the original search space, it avoids the possible disorganization of the particle swarm induced by undesired mutations at the boundary. The results on benchmark functions show that particle swarm with periodic mode improves search performance significantly compared with conventional modes and other algorithms.
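The idea of an infinite space made of periodic copies can be illustrated with a short sketch. It assumes the usual reading of a periodic boundary: particles move without clamping, and each position is wrapped back into the original box only when the objective is evaluated. The names `wrap_periodic` and `evaluate_periodic` are hypothetical and not taken from the paper.

```python
def wrap_periodic(x, lower, upper):
    """Map each coordinate into [lower, upper) by wrapping around the box.

    Python's % returns a non-negative result for a positive divisor,
    so coordinates below the lower bound wrap correctly as well.
    """
    return [lo + (xi - lo) % (hi - lo) for xi, lo, hi in zip(x, lower, upper)]


def evaluate_periodic(f, x, lower, upper):
    """Evaluate f at the wrapped image of x; x itself is left unchanged,
    so the particle keeps flying in the unbounded periodic space."""
    return f(wrap_periodic(x, lower, upper))
```

For example, with bounds `[0, 0]` and `[5, 5]`, the position `[5.5, -1.0]` is evaluated at `[0.5, 4.0]`. Because the position and velocity are never clipped or reset, no artificial mutation is introduced at the boundary.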

Handling equality constraints by adaptive relaxing rule for swarm algorithms

May 25, 2005

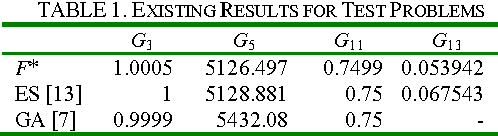

Abstract: An adaptive constraint-relaxing rule is presented for swarm algorithms to handle problems with equality constraints. The feasible space of such problems may be similar to the ridge function class, which is hard for swarm algorithms. To enter the solution space more easily, a relaxed quasi-feasible space is introduced and shrunk adaptively. The experimental results on benchmark functions are compared with the performance of other algorithms and show its efficiency.
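A relaxed quasi-feasible space can be sketched as follows, assuming each equality constraint h(x) = 0 is relaxed to |h(x)| <= eps. The abstract does not state the shrinking rule, so the rule in `shrink_eps` (shrink when enough of the population is already quasi-feasible) and its parameters `target_ratio`, `factor`, and `eps_min` are hypothetical placeholders rather than the paper's method.

```python
def violation(x, equality_constraints):
    """Largest absolute violation over all equality constraints h(x) = 0."""
    return max(abs(h(x)) for h in equality_constraints)


def is_quasi_feasible(x, equality_constraints, eps):
    """x lies in the relaxed space if every |h(x)| is within eps."""
    return violation(x, equality_constraints) <= eps


def shrink_eps(eps, population, equality_constraints,
               target_ratio=0.5, factor=0.9, eps_min=1e-6):
    """Hypothetical adaptive rule: tighten eps once at least
    target_ratio of the population is quasi-feasible."""
    inside = sum(is_quasi_feasible(x, equality_constraints, eps)
                 for x in population)
    if inside >= target_ratio * len(population):
        eps = max(eps_min, eps * factor)
    return eps
```

Starting from a generous `eps` gives the swarm a region with nonzero volume to enter, and the tolerance is then tightened toward the original thin feasible set as the population follows it.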

Optimizing semiconductor devices by self-organizing particle swarm

May 25, 2005

Abstract: A self-organizing particle swarm is presented. It works in a dissipative state by employing a small inertia weight, chosen according to experimental analysis on a simplified model, which yields fast convergence. Then, by recognizing and replacing inactive particles according to the process deviation information of device parameters, fluctuation is introduced so as to drive the irreversible evolution process toward better fitness. Testing on benchmark functions and on an application example for device optimization with a designed fitness function indicates that it improves performance effectively.
