Jiashu Xu

Cosmos-Transfer1: Conditional World Generation with Adaptive Multimodal Control

Mar 18, 2025

Abstract:We introduce Cosmos-Transfer, a conditional world generation model that can generate world simulations based on multiple spatial control inputs of various modalities such as segmentation, depth, and edge. In the design, the spatial conditional scheme is adaptive and customizable. It allows weighting different conditional inputs differently at different spatial locations. This enables highly controllable world generation and finds use in various world-to-world transfer use cases, including Sim2Real. We conduct extensive evaluations to analyze the proposed model and demonstrate its applications for Physical AI, including robotics Sim2Real and autonomous vehicle data enrichment. We further demonstrate an inference scaling strategy to achieve real-time world generation with an NVIDIA GB200 NVL72 rack. To help accelerate research development in the field, we open-source our models and code at https://github.com/nvidia-cosmos/cosmos-transfer1.
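The idea of weighting different control inputs differently at each spatial location can be illustrated with a small sketch. This is not the Cosmos-Transfer implementation: the function name `blend_controls`, the per-pixel normalization of the weights, and the use of plain nested lists as feature maps are all assumptions made for illustration only.

```python
def blend_controls(features, weights):
    """Blend per-modality maps with per-location weights (hypothetical helper).

    features, weights: dict mapping a modality name (e.g. "depth", "edge")
    to an H x W nested list of floats. Weights are normalized at each
    location so they sum to 1; that normalization is an assumption here.
    """
    names = list(features)
    height = len(features[names[0]])
    width = len(features[names[0]][0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = sum(weights[n][y][x] for n in names)
            if total == 0:
                continue  # no modality is active at this location
            out[y][x] = sum(
                weights[n][y][x] * features[n][y][x] for n in names
            ) / total
    return out


if __name__ == "__main__":
    features = {"depth": [[1.0, 1.0]], "edge": [[3.0, 3.0]]}
    # Depth and edge count equally on the left pixel; only edge on the right.
    weights = {"depth": [[1.0, 0.0]], "edge": [[1.0, 1.0]]}
    print(blend_controls(features, weights))
```

In this toy example the left pixel mixes both modalities equally while the right pixel follows the edge input alone, which is the kind of location-dependent control the abstract describes.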

Cosmos-Reason1: From Physical Common Sense To Embodied Reasoning

Mar 18, 2025

Abstract:Physical AI systems need to perceive, understand, and perform complex actions in the physical world. In this paper, we present the Cosmos-Reason1 models that can understand the physical world and generate appropriate embodied decisions (e.g., next step action) in natural language through long chain-of-thought reasoning processes. We begin by defining key capabilities for Physical AI reasoning, with a focus on physical common sense and embodied reasoning. To represent physical common sense, we use a hierarchical ontology that captures fundamental knowledge about space, time, and physics. For embodied reasoning, we rely on a two-dimensional ontology that generalizes across different physical embodiments. Building on these capabilities, we develop two multimodal large language models, Cosmos-Reason1-8B and Cosmos-Reason1-56B. We curate data and train our models in four stages: vision pre-training, general supervised fine-tuning (SFT), Physical AI SFT, and Physical AI reinforcement learning (RL) as the post-training. To evaluate our models, we build comprehensive benchmarks for physical common sense and embodied reasoning according to our ontologies. Evaluation results show that Physical AI SFT and reinforcement learning bring significant improvements. To facilitate the development of Physical AI, we will make our code and pre-trained models available under the NVIDIA Open Model License at https://github.com/nvidia-cosmos/cosmos-reason1.

Cosmos World Foundation Model Platform for Physical AI

Jan 07, 2025

Abstract:Physical AI needs to be trained digitally first. It needs a digital twin of itself, the policy model, and a digital twin of the world, the world model. In this paper, we present the Cosmos World Foundation Model Platform to help developers build customized world models for their Physical AI setups. We position a world foundation model as a general-purpose world model that can be fine-tuned into customized world models for downstream applications. Our platform covers a video curation pipeline, pre-trained world foundation models, examples of post-training of pre-trained world foundation models, and video tokenizers. To help Physical AI builders solve the most critical problems of our society, we make our platform open-source and our models open-weight with permissive licenses available via https://github.com/NVIDIA/Cosmos.

FAN-Unet: Enhancing Unet with vision Fourier Analysis Block for Biomedical Image Segmentation

Nov 28, 2024

Abstract:Medical image segmentation is a critical aspect of modern medical research and clinical practice. Despite the remarkable performance of Convolutional Neural Networks (CNNs) in this domain, they inherently struggle to capture long-range dependencies within images. Transformers, on the other hand, are naturally adept at modeling global context but often face challenges in capturing local features effectively. Therefore, we present FAN-UNet, a novel architecture that combines the strengths of Fourier Analysis Network (FAN)-based vision backbones and the U-Net architecture, effectively addressing the challenges of long-range dependency and periodicity modeling in biomedical image segmentation tasks. The proposed Vision-FAN layer integrates the FAN layer and self-attention mechanisms, leveraging Fourier analysis to enable the model to effectively capture both long-range dependencies and periodic relationships. Extensive experiments on various medical imaging datasets demonstrate that FAN-UNet achieves a favorable balance between model complexity and performance, validating its effectiveness and practicality for medical image segmentation tasks.

Edify 3D: Scalable High-Quality 3D Asset Generation

Nov 11, 2024

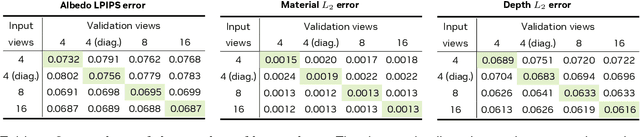

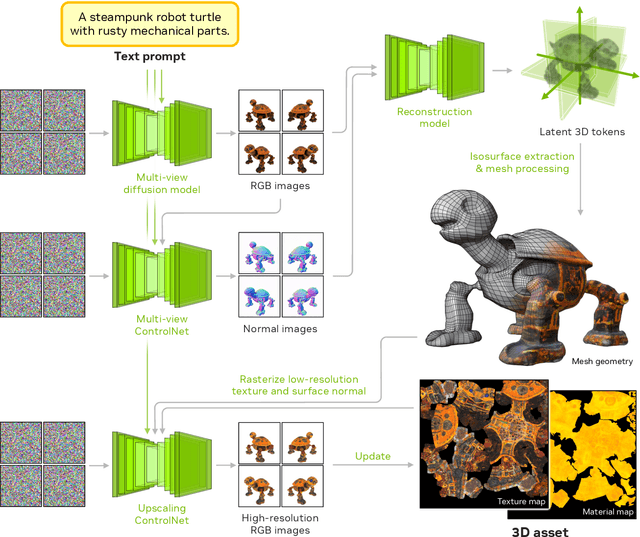

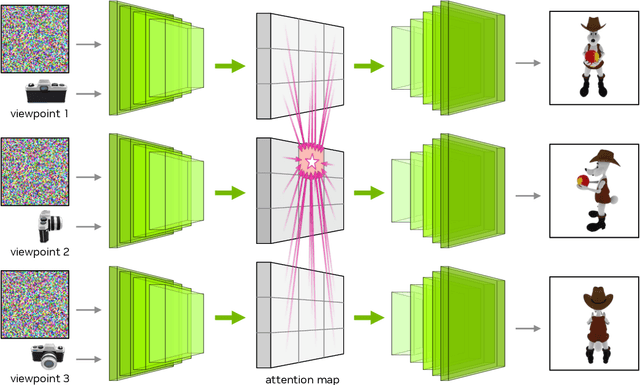

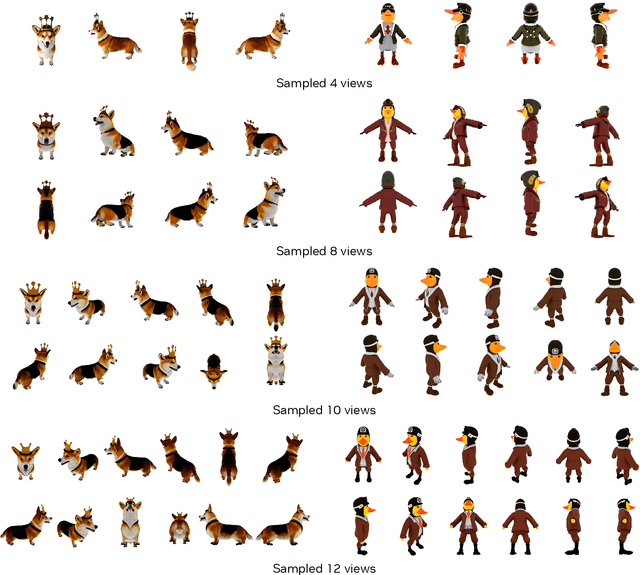

Abstract:We introduce Edify 3D, an advanced solution designed for high-quality 3D asset generation. Our method first synthesizes RGB and surface normal images of the described object at multiple viewpoints using a diffusion model. The multi-view observations are then used to reconstruct the shape, texture, and PBR materials of the object. Our method can generate high-quality 3D assets with detailed geometry, clean shape topologies, high-resolution textures, and materials within 2 minutes of runtime.

Med-TTT: Vision Test-Time Training model for Medical Image Segmentation

Oct 03, 2024

Abstract:Medical image segmentation plays a crucial role in clinical diagnosis and treatment planning. Although models based on convolutional neural networks (CNNs) and Transformers have achieved remarkable success in medical image segmentation tasks, they still face challenges such as high computational complexity and the loss of local features when capturing long-range dependencies. To address these limitations, we propose Med-TTT, a visual backbone network integrated with Test-Time Training (TTT) layers, which incorporates dynamic adjustment capabilities. Med-TTT introduces the Vision-TTT layer, which enables effective modeling of long-range dependencies with linear computational complexity and adaptive parameter adjustment during inference. Furthermore, we designed a multi-resolution fusion mechanism to combine image features at different scales, facilitating the identification of subtle lesion characteristics in complex backgrounds. At the same time, we adopt a frequency domain feature enhancement strategy based on high pass filtering, which can better capture texture and fine-grained details in images. Experimental results demonstrate that Med-TTT significantly outperforms existing methods on multiple medical image datasets, exhibiting strong segmentation capabilities, particularly in complex image backgrounds. The model achieves leading performance in terms of accuracy, sensitivity, and Dice coefficient, providing an efficient and robust solution for the field of medical image segmentation. The code is available at https://github.com/Jiashu-Xu/Med-TTT.

Mitigating Backdoor Threats to Large Language Models: Advancement and Challenges

Sep 30, 2024

Abstract:The advancement of Large Language Models (LLMs) has significantly impacted various domains, including Web search, healthcare, and software development. However, as these models scale, they become more vulnerable to cybersecurity risks, particularly backdoor attacks. By exploiting the potent memorization capacity of LLMs, adversaries can easily inject backdoors into LLMs by manipulating a small portion of training data, leading to malicious behaviors in downstream applications whenever the hidden backdoor is activated by the pre-defined triggers. Moreover, emerging learning paradigms like instruction tuning and reinforcement learning from human feedback (RLHF) exacerbate these risks as they rely heavily on crowdsourced data and human feedback, which are not fully controlled. In this paper, we present a comprehensive survey of emerging backdoor threats to LLMs that appear during LLM development or inference, and cover recent advancement in both defense and detection strategies for mitigating backdoor threats to LLMs. We also outline key challenges in addressing these threats, highlighting areas for future research.

Securing Multi-turn Conversational Language Models Against Distributed Backdoor Triggers

Jul 04, 2024

Abstract:The security of multi-turn conversational large language models (LLMs) is understudied despite this being one of the most popular uses of LLMs. Specifically, LLMs are vulnerable to data poisoning backdoor attacks, where an adversary manipulates the training data to cause the model to output malicious responses to predefined triggers. Specific to the multi-turn dialogue setting, LLMs are at risk of even more harmful and stealthy backdoor attacks where the backdoor triggers may span multiple utterances, giving leeway to context-driven attacks. In this paper, we explore a novel distributed backdoor trigger attack that serves as an extra tool in an adversary's toolbox and can interface with other single-turn attack strategies in a plug-and-play manner. Results on two representative defense mechanisms indicate that distributed backdoor triggers are robust against existing defense strategies, which are designed for single-turn user-model interactions, motivating us to propose a new defense strategy for the more challenging multi-turn dialogue setting. To this end, we also explore a novel contrastive-decoding-based defense that is able to mitigate the backdoor with a low computational tradeoff.

BEHAVIOR Vision Suite: Customizable Dataset Generation via Simulation

May 15, 2024

Abstract:The systematic evaluation and understanding of computer vision models under varying conditions require large amounts of data with comprehensive and customized labels, which real-world vision datasets rarely satisfy. While current synthetic data generators offer a promising alternative, particularly for embodied AI tasks, they often fall short for computer vision tasks due to low asset and rendering quality, limited diversity, and unrealistic physical properties. We introduce the BEHAVIOR Vision Suite (BVS), a set of tools and assets to generate fully customized synthetic data for systematic evaluation of computer vision models, based on the newly developed embodied AI benchmark, BEHAVIOR-1K. BVS supports a large number of adjustable parameters at the scene level (e.g., lighting, object placement), the object level (e.g., joint configuration, attributes such as "filled" and "folded"), and the camera level (e.g., field of view, focal length). Researchers can arbitrarily vary these parameters during data generation to perform controlled experiments. We showcase three example application scenarios: systematically evaluating the robustness of models across different continuous axes of domain shift, evaluating scene understanding models on the same set of images, and training and evaluating simulation-to-real transfer for a novel vision task: unary and binary state prediction. Project website: https://behavior-vision-suite.github.io/

HC-Mamba: Vision MAMBA with Hybrid Convolutional Techniques for Medical Image Segmentation

May 08, 2024

Abstract:Automatic medical image segmentation technology has the potential to expedite pathological diagnoses, thereby enhancing the efficiency of patient care. However, medical images often have complex textures and structures, and models often suffer from reduced image resolution and information loss due to downsampling. To address this issue, we propose HC-Mamba, a new medical image segmentation model based on the modern state space model Mamba. Specifically, we introduce dilated convolution in the HC-Mamba model, which extends the receptive field of the convolution kernel to capture a wider range of contextual information without increasing the computational cost. In addition, the HC-Mamba model employs depthwise separable convolutions, significantly reducing the number of parameters and the computational cost of the model. By combining dilated convolution and depthwise separable convolutions, HC-Mamba is able to process large-scale medical image data at a much lower computational cost while maintaining a high level of performance. We conduct comprehensive experiments on segmentation tasks, including skin lesion segmentation, with extensive experiments on ISIC17 and ISIC18, to demonstrate the potential of the HC-Mamba model in medical image segmentation. The experimental results show that HC-Mamba exhibits competitive performance on all these datasets, thereby proving its effectiveness and usefulness in medical image segmentation.
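The two convolution techniques named in this abstract have simple, well-known arithmetic behind them, sketched below. The channel counts and kernel size are illustrative assumptions, not values from the HC-Mamba paper, and the helper names are hypothetical.

```python
def standard_conv_params(c_in, c_out, k):
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """Depthwise k x k (one filter per input channel) plus 1 x 1 pointwise."""
    return c_in * k * k + c_in * c_out


def effective_kernel_size(k, dilation):
    """Span covered along one axis by a k-tap kernel with the given dilation."""
    return dilation * (k - 1) + 1


if __name__ == "__main__":
    c_in, c_out, k = 64, 128, 3  # assumed layer sizes, for illustration only
    print(standard_conv_params(c_in, c_out, k))        # 73728
    print(depthwise_separable_params(c_in, c_out, k))  # 8768
    print(effective_kernel_size(k, dilation=2))        # 5
```

For these assumed sizes the separable form uses roughly one eighth of the weights, and a dilation of 2 lets a 3-tap kernel span 5 positions with the same number of weights.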
